Quote for the day:

"I am not a product of my circumstances. I am a product of my decisions." -- Stephen Covey

The CISO’s paradox: Enabling innovation while managing risk

When security understands revenue goals, customer promises and regulatory exposure, guidance becomes specific and enabling. Begin by embedding a security liaison with each product squad so there is always a known face to engage in identity, data flows, logging and encryption decisions as they form. We should not want to see engineers opening two-week tickets for a simple question. There should be open “office hours,” chat channels and quick calls so they can get immediate feedback on decisions like API design, encryption requirements and regional data moves. ... Show up at sprint planning and early design reviews to ask the questions that matter: authentication paths, least-privilege access, logging coverage and how changes will be monitored in production through SIEM and EDR. When security officers sit at the same table, the conversation changes from “Can we do this?” to “How do we do this securely?” and better outcomes follow from day one. ... When developers deploy code multiple times a day, a “final security review” before launch just wouldn’t work. This traditional, end-of-line gating model doesn’t just block innovation but also fails to catch real-world risks. To be effective, security must be embedded during development, not just inspected after. ... This discipline must further extend into production. Even with world-class DevSecOps, we know a zero-day or configuration drift can happen.

Resilience Means Fewer Recoveries, Not Faster Ones

Resilience has become one of the most overused words in management. Leaders praise teams for “pushing through” and “bouncing back,” as if the ability to absorb endless strain were proof of strength. But endurance and resilience are not the same. Endurance is about surviving pressure. Resilience is about designing systems so people don’t break under it. Many organizations don’t build resilience; they simply expect employees to endure more. The result is a quiet crisis of exhaustion disguised as dedication. Teams appear committed but are running on fumes. ... In most organizations, a small group carries the load when things get tough: the dependable few who always say yes to the most essential tasks. That pattern is unsustainable. Build redundancy into the system by cross-training roles, rotating responsibilities, and decentralizing authority. The goal isn’t to reduce pressure to zero; it’s to distribute it evenly enough so that no one person becomes the safety net for everyone else. ... Too many managers equate resilience with recovery, celebrating those who saved the day after the crisis is over. But true resilience shows up before the crisis hits. Observe your team to recognize the people who spot problems early, manage risks quietly, or improve workflows so that breakdowns don’t happen. Crisis prevention doesn’t create dramatic stories, but it builds the calm, predictable environment that allows innovation to thrive.

Facial Recognition’s Trust Problem

Surveillance by facial recognition is almost always in a public setting, so it’s one-to-many. There is a database and many cameras (usually a large number of cameras – an estimated one million in London and more than 30,000 in New York). These cameras capture images of people and compare them to the database of known images to identify individuals. The owner of the database may include watchlists comprising ‘people of interest’, so the ability to track persons of interest from one camera to another is included. But the process of capturing and using the images is almost always non-consensual. People don’t know when, where or how their facial image was first captured, and they don’t know where their data is going downstream or how it is used after initial capture. Nor are they usually aware of the facial recognition cameras that record their passage through the streets. ... Most people are wary of facial recognition systems. They are considered personally intrusive and privacy invasive. Capturing a facial image and using it for unknown purposes is not something that is automatically trusted. And yet it is not something that can be ignored – it’s part of modern life and will continue to be so. Of the two primary purposes of facial recognition – access authentication and the surveillance of public spaces – the latter is the less acceptable. It is used for the purpose of public safety but is fundamentally insecure. What exists now can be, and has been, hijacked by criminals for their own purposes.

The Urgent Leadership Playbook for AI Transformation

Banking executives talk enthusiastically about AI. They mention it frequently in investor presentations, allocate budgets to pilot programs, and establish innovation labs. Yet most institutions find themselves frozen between recognition of AI’s potential and the organizational will to pursue transformation aggressively. ... But waiting for perfect clarity guarantees competitive disadvantage. Even if only 5% of banks successfully embed AI across operations (and the number will certainly grow larger), these institutions will alter industry dynamics sufficiently to render non-adopters progressively irrelevant. Early movers establish data advantages, algorithmic sophistication, and operational efficiencies that create compounding benefits difficult for followers to overcome. ... The path from today’s tentative pilots to tomorrow’s AI-first institution follows a proven playbook developed by "future-built" companies in other sectors that successfully generate measurable value from AI at enterprise scale. ... Scaling AI requires reimagining organizational structures around technology-human collaboration based on three-layer guardrails: agent policy layers defining permissible actions, assurance layers providing controls and audit trails, and human responsibility layers assigning clear ownership for each autonomous domain.
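
One way to picture the three guardrail layers is as three small checks that every agent action must pass. The sketch below is illustrative only: the class names, the action names, and the owner mapping are all hypothetical and do not come from the article or from any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    """Policy layer: the set of actions an agent is permitted to take."""
    allowed_actions: set

    def permits(self, action: str) -> bool:
        return action in self.allowed_actions


@dataclass
class AuditTrail:
    """Assurance layer: keeps a record of every authorization decision."""
    entries: list = field(default_factory=list)

    def record(self, agent: str, domain: str, action: str,
               allowed: bool, owner: str) -> None:
        self.entries.append({
            "agent": agent, "domain": domain, "action": action,
            "allowed": allowed, "owner": owner,
        })


@dataclass
class Guardrails:
    policy: AgentPolicy
    audit: AuditTrail
    owners: dict  # responsibility layer: domain -> accountable human (hypothetical names)

    def authorize(self, agent: str, domain: str, action: str) -> bool:
        owner = self.owners.get(domain)
        # An action is allowed only if the domain has a named owner
        # AND the policy layer permits the action.
        allowed = owner is not None and self.policy.permits(action)
        self.audit.record(agent, domain, action, allowed, owner or "UNASSIGNED")
        return allowed


guardrails = Guardrails(
    policy=AgentPolicy(allowed_actions={"draft_reply"}),
    audit=AuditTrail(),
    owners={"customer_service": "head_of_support"},
)
print(guardrails.authorize("bot-1", "customer_service", "draft_reply"))   # permitted, owned
print(guardrails.authorize("bot-1", "customer_service", "issue_refund"))  # not in policy
print(guardrails.authorize("bot-1", "lending", "draft_reply"))            # no owner assigned
```

The design choice worth noting is that a missing owner blocks the action even when the policy would permit it, so no autonomous domain runs without a named human responsible for it.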

Creative cybersecurity strategies for resource-constrained institutions

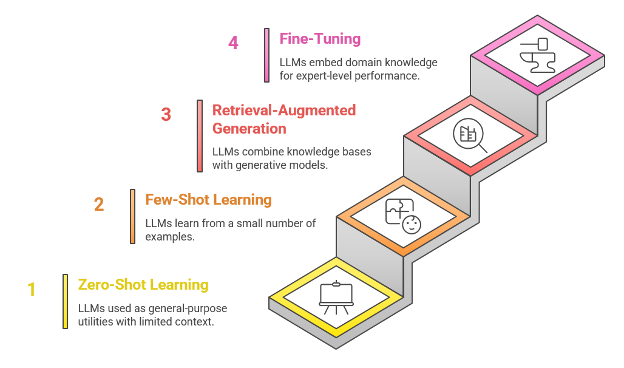

There’s a well-worn phrase that gets repeated whenever budgets are tight: “We have to do more with less.” I’ve never liked it because it suggests the team wasn’t already giving maximum effort. Instead, the goal should be to “use existing resources more effectively.” ... When you understand the users’ needs and learn how they want to work, you can recommend solutions that are both secure and practical. You don’t need to be an expert in every research technology. Start by paying attention to the services offered by cloud providers and vendors. They constantly study user pain points and design tools to address them. If you see a cloud service that makes it easier to collect, store, or share scientific data, investigate what makes it attractive. ... First, understand how your policies and controls affect the work. Security shouldn’t be developed in a vacuum. If you don’t understand the impact on researchers, developers, or operational teams, your controls may not be designed and implemented in a manner that helps enable the business. Second, provide solutions, don’t just say no. A security team that only rejects ideas will be thought of as a roadblock, and users will do their best to avoid engagement. A security team that helps people achieve their goals securely becomes one that is sought out, and ultimately ensures the business is more secure.

Architecting Intelligence: A Strategic Framework for LLM Fine-Tuning at Scale

As organizations race to harness the transformative power of Large Language Models, a critical gap has emerged between experimental implementations and production-ready AI systems. While prompt engineering offers a quick entry point, enterprises seeking competitive advantage must architect sophisticated fine-tuning pipelines that deliver consistent, domain-specific intelligence at scale. The landscape of LLM deployment presents three distinct approaches for fine-tuning, each with architectural implications. The answer lies in understanding the maturity curve of AI implementation. ... Fine-tuning represents the architectural apex of AI implementation. Rather than relying on prompts or external knowledge bases, fine-tuning modifies the AI model itself by continuing its training on domain-specific data. This embeds organizational knowledge, reasoning patterns, and domain expertise directly into the model’s parameters. Think of it this way: a general-purpose AI model is like a talented generalist who reads widely but lacks deep expertise. ... The decision involves evaluating several factors. Model scale matters because larger models generally offer better performance but demand more computational resources. An organization must balance the quality improvements of a 70-billion-parameter model against the infrastructure costs and latency implications.
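
To make the infrastructure side of that trade-off concrete, a rough back-of-the-envelope estimate of the memory needed just to hold a model's weights can help. The sketch below assumes common bytes-per-parameter figures for each numeric precision; it counts weights only, so activations, inference caches and (for fine-tuning) gradients and optimizer state would add to these numbers.

```python
# Approximate storage per parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"70B parameters at {precision}: {weight_memory_gb(70e9, precision):.0f} GB")
```

At 16-bit precision the weights of a 70-billion-parameter model alone come to about 140 GB, which is why a model of this size is typically spread across several accelerators or quantized to a lower precision.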

How smart tech innovation is powering the next generation of the trucking industry

Real-time tracking has now become the backbone of digital trucking. These systems provide real-time updates on vehicle location, fuel consumption, driving behavior and engine performance. Fleets also make informed decisions based on data to directly influence operational efficiencies. Furthermore, the IoT-enabled ‘one app’ solution monitors cargo temperature, location, and overall load conditions throughout the journey. ... Now, with AI-driven algorithms, fleet managers anticipate optimal routes via analysis of historical demand, weather patterns, and traffic. AI-powered intelligent route optimization applications allow fleets to optimize fuel usage and lower travel times. Additionally, with predictive maintenance capabilities, trucking companies are less concerned about vehicle failures, because a more proactive approach is used. AI tools spot anomalies in engine data and warn fleet owners before an expensive vehicle failure occurs, improving overall fleet operations. ... The trucking industry is transforming faster than ever before. Technologies are turning every vehicle into a connected network and digital asset. Fleets can forecast demand, optimize routes, preserve cargo quality, and ensure safety at every step. The smarter goals align seamlessly with cost-saving opportunities as logistics aggregators transition from manual, heavy paperwork to digital locker convenience.
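
As a rough illustration of what "spotting anomalies in engine data" can mean in its simplest form, the sketch below flags any reading that sits far outside the range of the readings just before it. It is a basic rolling z-score check, not the method used by any particular fleet product; the window size, threshold, and coolant-temperature values are made up for the example.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat history to avoid dividing by zero.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical coolant temperatures in degrees Celsius, with one spike.
coolant_temps_c = [90.1, 89.8, 90.3, 90.0, 89.9, 90.2, 97.5, 90.1]
print(flag_anomalies(coolant_temps_c))  # [6]
```

A production system would more likely combine many sensor channels and learned models, but the principle is the same: compare each new reading with recent normal behaviour and raise a warning before the deviation turns into a breakdown.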

Why every business needs to start digital twinning in 2026

Digital twins have begun to stand out because they’re not generic AI stand-ins; at their best they’re structured behavioural models grounded in real customer data. They offer a dependable way to keep insights active, consistent and available on demand. That is where their true strategic value lies. More granularly, the best-performing digital twins are built on raw existing customer insights – interview transcripts, survey results, and behavioural data. But rather than just summarising the data, they create a representation of how a particular individual tends to think. Their role isn’t to imitate someone’s exact words, but to reflect underlying logic, preferences, motivations and blind spots. ... There’s no denying the fact that organisations have had a year of big promises and disappointing AI pilots, with the result that businesses are far more selective about what genuinely moves the needle. For years, digital twinning has been used to model complex systems in engineering, aerospace and manufacturing, where failure is expensive and iteration must happen before anything becomes real. With the rise of generative AI, the idea of a digital twin has expanded. After a year of rushed AI pilots and disappointing ROI, leaders are looking for approaches that actually fit how businesses work. Digital twinning does exactly that: it builds on familiar research practices, works inside existing workflows, and lets teams explore ideas safely before committing to them.

From compliance to confidence: Redefining digital transformation in regulated enterprises

Compliance is no longer the brake on digital transformation. It is the steering system that determines how fast and how far innovation can go. ... Technology rarely fails because of a lack of innovation. It fails when organizations lack the governance maturity to scale innovation responsibly. Too often, compliance is viewed as a bottleneck. It’s a scalability accelerator when embedded early. ... When governance and compliance converge, they unlock a feedback loop of trust. Consider a payer-provider network that unified its claims, care and compliance data into a single “truth layer.” Not only did this integration reduce audit exceptions by 45%, but it also improved member-satisfaction scores because interactions became transparent and consistent. ... No transformation from compliance to confidence happens without leadership alignment. The CIO sits at the intersection of technology, policy and culture and therefore carries the greatest influence over whether compliance is reactive or proactive. ... Technology maturity alone is not enough. The workforce must trust the system. When employees understand how AI or analytics systems make decisions, they become more confident using them. ... Confidence is not the absence of regulation; it’s mastery of it. A confident enterprise doesn’t fear audits because its systems are inherently explainable.

AI agents are already causing disasters - and this hidden threat could derail your safe rollout

Although artificial intelligence agents are all the rage these days, the world of enterprise computing is experiencing disasters in the fledgling attempts to build and deploy the technology. Understanding why this happens and how to prevent it is going to involve lots of planning in what some are calling the zero-day deliberation. "You might have hundreds of AI agents running on a user's behalf, taking actions, and, inevitably, agents are going to make mistakes," said Anneka Gupta, chief product officer for data protection vendor Rubrik. ... Gupta talked about more than just a product pitch. Fixing well-intentioned disasters is not the biggest agent issue, she said. The big picture is that agentic AI is not moving forward as it should because of zero-day issues. "Agent Rewind is a day-two issue," said Gupta. "How do we solve for these zero-day issues to start getting people moving faster -- because they are getting stuck right now." ... According to Gupta, the true problem of agent deployment is all the work that begins with the chief information security officer (CISO), the chief information officer (CIO), and other senior management to figure out the scope of agents. AI agents are commonly defined as artificial intelligence programs that have been granted access to resources external to the large language model itself, enabling the AI program to carry out a wider variety of actions. ... The real zero-day obstacle is how to understand what agents are supposed to be doing, and how to measure what success or failure would look like.
