Technical Debt: An enterprise’s self-inflicted cyber risk

Technical debt issues vary in risk level depending on the scope and blast radius

of the issue. Unaddressed high-risk technical debt issues create inefficiency

and security exposure while diminishing network reliability and performance.

There’s the obvious financial risk that comes from wasted time, inefficiencies,

and maintenance costs. Adding tools potentially introduces new vulnerabilities,

increasing the attack surface for cyber threats. A lot of the literature around

technical debt focuses on obsolete technology on desktops. While this does

present some risk, desktops have a limited blast radius when compromised.

Outdated hardware and unattended software vulnerabilities within network

infrastructure pose a much more imminent and severe risk as they serve as a

convenient entry point for malicious actors with a much wider potential reach.

An unpatched or end-of-life router, switch, or firewall, riddled with documented

vulnerabilities, creates a clear path to infiltrating the network. By

methodically addressing technical debt, enterprises can significantly mitigate

cyber risks, enhance operational preparedness, and minimize unforeseen

infrastructure disruptions.
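
One way to make "methodically addressing" this concrete is to rank assets by exposure multiplied by blast radius. The sketch below is illustrative only: the `Asset` fields, the flat penalty of 5 for end-of-life gear, and the sample inventory are all assumptions, not figures from the article.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Asset:
    name: str
    kind: str             # e.g. "router", "firewall", "desktop"
    end_of_life: date     # vendor end-of-support date
    open_vulns: int       # known, unpatched vulnerabilities
    reachable_hosts: int  # rough proxy for blast radius (assumed metric)


def risk_score(asset: Asset, today: date) -> int:
    """Hypothetical score: exposure scaled by how far a compromise could reach."""
    eol_penalty = 5 if asset.end_of_life < today else 0  # assumed weighting
    return (asset.open_vulns + eol_penalty) * asset.reachable_hosts


def prioritize(assets: list[Asset], today: date) -> list[Asset]:
    """Return assets ordered from highest to lowest risk score."""
    return sorted(assets, key=lambda a: risk_score(a, today), reverse=True)


# Made-up inventory for illustration.
inventory = [
    Asset("desk-17", "desktop", date(2023, 1, 1), 4, 1),
    Asset("core-fw-1", "firewall", date(2022, 6, 30), 3, 400),
    Asset("edge-sw-2", "switch", date(2030, 1, 1), 1, 50),
]

for asset in prioritize(inventory, date(2025, 1, 1)):
    print(asset.name, risk_score(asset, date(2025, 1, 1)))
```

Under these assumed weights, the end-of-life firewall outranks the desktop even though the desktop has more open vulnerabilities, which mirrors the point about network infrastructure having a wider reach.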

Why Your AI Will Never Take Off Without Better Data Accessibility

Data management and security challenges cast a long shadow over efforts to

modernize infrastructures in support of AI and cloud strategies. The survey

results reveal that while CIOs prioritize streamlining business processes

through cloud infrastructures, improving data security and business resilience

is a close second. Security is a persistent challenge for companies managing

large volumes of file data and it continues to complicate efforts to enhance

data accessibility. Nasuni’s research highlights that 49% of firms (rising to

54% in the UK) view security as their biggest problem when managing file data

infrastructures. This issue ranks ahead of concerns such as rapid recovery from

cyberattacks and ensuring data compliance. As companies attempt to move their

file data to the cloud, security is again the primary obstacle, with 45% of all

respondents—and 55% in the DACH region—citing it as the leading barrier, far

outstripping concerns over cost control, upskilling employees and data migration

challenges. These security concerns are not just theoretical. Over half of the

companies surveyed admitted that they had experienced a cyber incident from

which they struggled to recover. Alarmingly, only one in five said they managed

to recover from such incidents easily.

Exploring DORA: How to manage ICT incidents and minimize cyber threat risks

The SOC must be able to quickly detect and manage ICT incidents. This involves

proactive, around-the-clock monitoring of IT infrastructure to identify

anomalies and potential threats early on. Security teams can employ advanced

tools such as security orchestration, automation and response (SOAR), extended

detection and response (XDR), and security information and event management

(SIEM) systems, as well as threat analysis platforms, to accomplish this.

Through this monitoring, incidents can be identified before they escalate and

cause greater damage. ... DORA introduces a harmonized reporting system for

serious ICT incidents and significant cyber threats. The aim of this reporting

system is to ensure that relevant information is quickly communicated to all

responsible authorities, enabling them to assess the impact of an incident on

the company and the financial market in a timely manner and respond accordingly.

... One of the tasks of SOC analysts is to ensure effective communication with

relevant stakeholders, such as senior management, specialized departments and

responsible authorities. This also includes the creation and submission of the

necessary DORA reports.

What is Cyber Resilience? Insurance, Recovery, and Layered Defenses

While cyber insurance can provide financial protection against the fallout of

ransomware, it’s important to understand that it’s not a silver bullet.

Insurance alone won’t save your business from downtime, data loss, or reputation

damage. As we’ve seen with other types of insurance, such as property or health

insurance, simply holding a policy doesn’t mean you’re immune to risks. While

cyber insurance is designed to mitigate financial risks, insurers are becoming

increasingly discerning, often requiring businesses to demonstrate adequate

cybersecurity controls before providing coverage. Gone are the days when

businesses could simply “purchase” cyber insurance without robust cyber hygiene

in place. Today’s insurers require businesses to have key controls such as

multi-factor authentication (MFA), incident response plans, and regular

vulnerability assessments. Moreover, insurance alone doesn’t address the

critical issue of data recovery. While an insurance payout can help with

financial recovery, it can’t restore lost data or rebuild your reputation. This

is where a comprehensive cybersecurity strategy comes in — one that encompasses

both proactive and reactive measures, involving components like third-party data

recovery software.

Integrating Legacy Systems with Modern Data Solutions

Many legacy systems were not designed to share data across platforms or

departments, leading to the creation of data silos. Critical information gets

trapped in isolated systems, preventing a holistic view of the organization’s

data and hindering comprehensive analysis and decision-making. ... Modern

solutions are designed to scale dynamically, whether it’s accommodating more

users, handling larger datasets, or managing more complex computations. In

contrast, legacy systems are often constrained by outdated infrastructure,

making it difficult to scale operations efficiently. Addressing this requires

refactoring old code and updating the system architecture to manage accumulated

technical debt. ... Older systems typically lack the robust security features of

modern solutions, making them more vulnerable to cyber-attacks. Integrating

these systems without upgrading security protocols can expose sensitive data to

threats. Ensuring robust security measures during integration is critical to

protect data integrity and privacy. ... Maintaining legacy systems can be costly

due to outdated hardware, limited vendor support, and the need for specialized

expertise. Integrating them with modern solutions can add to this complexity and

expense.

The challenges of hybrid IT in the age of cloud repatriation

The story of cloud repatriation is often one of regaining operational control. A

recent report found that 25% of organizations surveyed are already moving some

cloud workloads back on-premises. Repatriation offers an opportunity to address

issues such as rising costs, data privacy concerns, and security risks.

Depending on their circumstances, some organizations that manage IT resources

internally can customize their infrastructure to meet these specific

needs while gaining direct oversight of performance and security. With

rising regulations surrounding data privacy and protection, enhanced control

over on-prem data storage and management provides significant advantages by

simplifying compliance efforts. ... However, cloud repatriation can often create

challenges of its own. The costs associated with moving services back on-prem

can be significant: new hardware, increased maintenance, and energy expenses

should all be factored in. Yet, for some, the financial trade-off for

repatriation is worth it, especially if cloud expenses become unsustainable or

if significant savings can be achieved by managing resources partially on-prem.

Cloud repatriation is a calculated risk that, if done for the right reasons and

executed successfully, can lead to efficiency and peace of mind for many

companies.

IT Cost Reduction Strategies: 3 Unexpected Ways Enterprise Architecture Can Help

Easier said than done with the traditional process of manual follow-ups hampered

by inconsistent documentation often scattered across many teams. The issue with

documentation also often means that maintenance efforts are duplicated, wasting

resources that could have been better deployed elsewhere. The result is the

equivalent of around 3 hours of a dedicated employee’s focus per application per

year spent on documentation, governance, and maintenance. Not so for the

organization that has a digital-native EA platform that leverages its data to

enable scalability and automation in workflows and messaging, so it can reach

out to the most relevant people in the organization when it's most needed.

Features like these can save an immense amount of time otherwise spent

identifying the right people to talk to and when to reach out to them, making a

company's Enterprise Architecture the single source of truth and a solid

foundation for effective governance. The result is a reduction of approximately

a third of the time usually needed to achieve this. That valuable time can then

be reallocated toward other, more strategic work within the organization. We

have seen that a mid-sized company can save approximately $70 thousand annually

by reducing its documentation and governance time.

How Rules Can Foster Creativity: The Design System of Reykjavík

Design systems have already gained significant traction, but many are still in

their early stages, lacking atomic design structures. While this approach may

seem daunting at first, as more designers and developers grow accustomed to

working systematically, I believe atomic design will become the norm. Today,

most teams create their own design systems, but I foresee a shift toward

subscription-based or open-source systems that can be customized at the atomic

level. We already see this with systems like Google’s Material Design, IBM’s Carbon,

Shopify’s Polaris, and Atlassian’s design system. Adopting a pre-built,

well-supported design system makes sense for many organizations. Custom systems

are expensive and time-consuming to build, and maintaining them requires ongoing

resources, as we learned in Reykjavík. By leveraging a tried-and-tested design

system, teams can focus on customization rather than starting from scratch.

Contrary to popular belief, this shift won’t stifle creativity. For public

services, there is little need for extreme creativity regarding core

functionality - these products simply need to work as expected. AI will also

play a significant role in evolving design systems.

Eyes on Data: A Data Governance Study Bridging Industry and Academia

The researcher, Tony Mazzarella, is a seasoned data management professional and

has extensive experience in data governance within large organizations. His

professional and research observations have identified key motivations for this

work: Data Governance has a knowledge problem. Existing literature and

publications are overly theoretical and lack empirical guidance on practical

implementation. The conceptual and practical entanglement of governance and

management concepts and activities exacerbates this issue, leading to divergent

definitions and perceptions that data governance is overly theoretical. The

“people” challenges in data management are often overlooked. Culture is core to

data governance, but its institutionalization as a business function, first in

the financial services industry, coincided with a shift towards regulatory

compliance in response to the 2008 financial crisis. “Data culture” has

re-emerged in all industries, but it implies the governance function is tasked

with fostering culture change rather than emphasizing that data governance

requires a culture change, which is a management challenge. Data Management’s

industry-driven nature and reactive ethos result in unnecessary change as the

macroenvironment changes, undermining process resilience and sustainability.

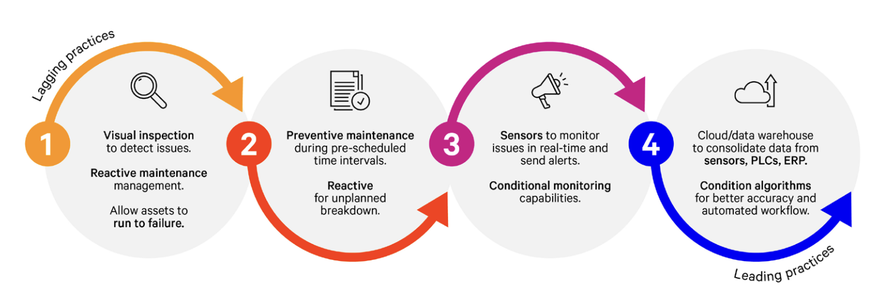

The future of data center maintenance

Condition-based maintenance and advanced monitoring services provide operators

with more information about the condition and behavior of assets within the

system, including insights into how environmental factors, controls, and usage

drive service needs. The ability to recommend actions for preventing downtime

and extending asset life allows a focus on high-impact items instead of tasks

that don't immediately affect asset reliability or lifespan. These items include

lifecycle parts replacement, optimizing preventive maintenance schedules,

managing parts inventories, and optimizing control logic. The effectiveness of a

service visit can subsequently be validated as the actions taken are reflected

in asset health analyses. ... Condition-based maintenance and advanced

monitoring services include a customer portal for efficient equipment health

reporting. Detailed dashboards display site health scores, critical events, and

degradation patterns. ... The future of data center maintenance is here –

smarter, more efficient, and more reliable than ever. With condition-based

maintenance and advanced monitoring services, data centers can anticipate risks

and benchmark assets, leading to improved risk management and enhanced

availability.
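
As a rough illustration of how a degradation pattern might drive a service recommendation, the sketch below fits a straight-line trend to equally spaced readings and flags an asset whose projected value falls below a floor. The function names, the linear-trend assumption, and the sample readings are hypothetical and not taken from any vendor's monitoring service.

```python
def degradation_slope(readings: list[float]) -> float:
    """Least-squares slope of readings taken at equal intervals."""
    n = len(readings)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(readings) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(readings))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def needs_service(readings: list[float], floor: float, lookahead: int) -> bool:
    """True if the latest reading, or its linear projection `lookahead`
    intervals ahead, is below `floor` (an assumed service threshold)."""
    projected = readings[-1] + degradation_slope(readings) * lookahead
    return min(readings[-1], projected) < floor


# Made-up battery capacity readings (percent), one per inspection interval.
declining = [100.0, 98.0, 96.0, 94.0]
stable = [90.0, 90.0, 90.0, 90.0]

print(needs_service(declining, floor=85.0, lookahead=5))
print(needs_service(stable, floor=85.0, lookahead=5))
```

A real condition-based program would likely weigh environmental factors and usage as well; the linear projection here only stands in for that analysis.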

Quote for the day:

"It's not about how smart you are--it's

about capturing minds." -- Richie Norton
