The engines of AI: Machine learning algorithms explained

Machine learning algorithms train on data to find the best set of weights for

each independent variable that affects the predicted value or class. The

algorithms themselves have variables, called hyperparameters. They’re called

hyperparameters, as opposed to parameters, because they control the operation of

the algorithm rather than the weights being determined. The most important

hyperparameter is often the learning rate, which determines the step size used

when finding the next set of weights to try when optimizing. If the learning

rate is too high, the gradient descent may quickly converge on a plateau or

suboptimal point. If the learning rate is too low, the gradient descent may

stall and never completely converge. Many other common hyperparameters depend on

the algorithms used. Most algorithms have stopping parameters, such as the

maximum number of epochs, or the maximum time to run, or the minimum improvement

from epoch to epoch. Specific algorithms have hyperparameters that control the

shape of their search.
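
As a minimal sketch of how these hyperparameters interact, the toy loop below runs plain gradient descent on a one-variable quadratic. The function name `gradient_descent`, the example loss, and the specific values are illustrative assumptions, not taken from the article.

```python
def gradient_descent(grad, loss, w0, learning_rate,
                     max_epochs=1000, min_improvement=1e-9):
    """Minimize `loss` from the starting weight w0.

    Hyperparameters (illustrative): learning_rate is the step size;
    max_epochs and min_improvement are the stopping parameters.
    Returns (final_weight, epochs_run).
    """
    w = w0
    previous = loss(w)
    for epoch in range(1, max_epochs + 1):
        w = w - learning_rate * grad(w)      # step against the gradient
        current = loss(w)
        if previous - current < min_improvement:
            return w, epoch                  # improvement too small: stop
        previous = current
    return w, max_epochs                     # epoch budget exhausted


# Toy problem (an assumption for illustration): minimum at w = 3.
def loss(w):
    return (w - 3.0) ** 2


def grad(w):
    return 2.0 * (w - 3.0)


# A moderate step size reaches the minimum well within the epoch budget.
w_good, epochs_good = gradient_descent(grad, loss, 0.0, learning_rate=0.1)

# A very small step size uses every epoch and is still far from w = 3.
w_slow, epochs_slow = gradient_descent(grad, loss, 0.0, learning_rate=0.0001)

# A step size that is too large overshoots on the first update, so the
# min_improvement rule halts the run at a poor point.
w_high, epochs_high = gradient_descent(grad, loss, 0.0, learning_rate=1.1)
```

On this toy loss, the stopping parameters decide the outcome as much as the learning rate does: the slow run ends only because `max_epochs` is reached, and the oversized step ends because the loss stopped improving.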

How to Build a Cyber-Resilient Company From Day One

Despite your best proactive measures, some cyber threats will infiltrate your

defenses. Reactive defenses, such as firewalls and antivirus software, help to

minimize damage when these incidents occur. Firewalls monitor and control

incoming and outgoing network traffic based on predetermined security rules,

forming the first line of defense against cyber threats. Antivirus software

complements firewalls by detecting, preventing and removing malicious software.

Intrusion Detection and Prevention Systems (IDS/IPS) monitor your network for

suspicious activities and potential threats, alerting you to a potential attack

and, in some cases, taking action to mitigate the threat. Encryption is another

valuable reactive measure that involves making your sensitive data unreadable to

anyone without the appropriate decryption key, thus protecting it even if it

falls into the wrong hands. Security Information and Event Management (SIEM)

systems provide real-time analysis and reporting of security alerts generated by

applications and network hardware. They help detect incidents early and respond

promptly.
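
To illustrate what "predetermined security rules" can look like, here is a toy first-match packet filter. The `Rule` class, the `decide` function, and the example rule set are hypothetical and do not reflect any particular firewall product.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str            # "allow" or "deny"
    source: str            # CIDR block the rule applies to
    port: Optional[int]    # destination port, or None for any port


def decide(rules, src_ip, dst_port, default="deny"):
    """Return the action of the first matching rule, else the default."""
    for rule in rules:
        in_network = ip_address(src_ip) in ip_network(rule.source)
        port_matches = rule.port in (None, dst_port)
        if in_network and port_matches:
            return rule.action
    return default


# Hypothetical rule set: SSH only from the internal range, HTTPS from anywhere.
RULES = [
    Rule("allow", "10.0.0.0/8", 22),
    Rule("allow", "0.0.0.0/0", 443),
]

internal_ssh = decide(RULES, "10.1.2.3", 22)      # matches the first rule
external_ssh = decide(RULES, "203.0.113.7", 22)   # no rule matches
external_web = decide(RULES, "203.0.113.7", 443)  # matches the second rule
```

The default-deny fallback is a design choice in this sketch: traffic that no rule mentions is dropped rather than passed.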

Quantum Algorithms vs. Quantum-Inspired Algorithms

Quantum-inspired algorithms usually refer to one of two things: (i) classical

algorithms based on linear algebra methods — often methods known as tensor

networks — that were developed in the recent past, or (ii) methods that

attempt to use a classical computer to simulate the behavior of a quantum

computer, so that the classical machine runs algorithms that exploit the

same laws of quantum mechanics that benefit real quantum computers. On

(i), while the physics community has leveraged these methods to address

problems in quantum mechanics since the 70s [Penrose], tensor networks have an

independent origin as far back as the 80s in neuroscience as well, as there is

nothing really quantum behind them; it really is just linear algebra. For

(ii), the process of emulating a quantum system falls back on the limitations

of classical hardware. It is very hard to emulate classically the full

dynamics of a large quantum system for the exact same reasons that one wants

to actually build a real one! So, does this mean that quantum-inspired

algorithms are bogus? Not really.
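
One way to see why full classical emulation is hard: a general state of n qubits is described by 2^n complex amplitudes, so memory doubles with every added qubit. The sketch below does that arithmetic, assuming 16 bytes per amplitude (two 64-bit floats). The function name is illustrative.

```python
def statevector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory needed to store a full n-qubit state vector.

    Assumes one complex amplitude per basis state (2**n of them),
    each stored as two 64-bit floats (16 bytes).
    """
    return (2 ** num_qubits) * bytes_per_amplitude


GIB = 2 ** 30  # bytes in one gibibyte

for n in (10, 30, 40, 50):
    print(f"{n} qubits: {statevector_bytes(n) / GIB:,.6f} GiB")
```

Under these assumptions, 30 qubits already need 16 GiB and each additional qubit doubles that, which is why tensor-network methods that avoid storing the full vector are attractive when the problem structure allows it.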

Operator survey: 5G services require network automation

"Private 5G" and "network slicing" rank second and third, respectively. Heavy

Reading expects their importance and popularity to increase as additional

operators deploy 5G SA and can support full autonomy. "Performance SLAs for

enterprise services" is currently the lowest ranking (fifth) of all service

choices but is likely to be a valuable market, especially for network slicing

and private 5G. "Connected devices (e.g., cars, watches, other IoT devices)"

ranks just above performance SLAs in fourth. Internet of Things (IoT) is a

sizeable market within 4G, but the massive machine-type communications (mMTC)

use case has yet to be realized in 5G, as technologies such as RedCap remain

underdeveloped. Smaller operators have a different opinion from larger

operators on the revenue growth question. For mobile operators with fewer than

9 million subscribers, private 5G ranks first. This result perhaps indicates

that smaller operators feel they are already exploiting eMBB services and see

little scope for further revenue growth with SA.

Top 5 Features your ITSM Solution Should Have

Addressing the root causes of recurring incidents and preventing them from

happening again is the core of what a problem management module is designed

for. Robust problem management functionality helps investigate, analyze, and

identify underlying causes, leading to effective problem resolution. A

reliable ITSM solution should include features such as root cause analysis,

trend identification, and proactive problem identification. This should

provide a structured approach to change requests, reduce the impact of

incidents, and improve the overall stability of your IT environment. A

comprehensive knowledge management system is a necessary asset for any IT

service desk. It serves as a centralized repository of information, providing

users with self-help resources, troubleshooting guides, and best practices

from within the organization. A well-organized and searchable knowledge base

allows users to access relevant articles and documentation for independent

issue resolution. Knowledge bases reduce reliance on IT support and enable

faster problem resolution. When choosing an ITSM solution with a knowledge

base, look for user-friendly interfaces, easy personalization, and

collaborative features.

No cyber resilience without open source sustainability

Open source sustainability is a problem: maintainers of popular software

projects are often overwhelmed by issues and pull requests to the point of

burnout. Donations have emerged as one solution, and are regularly provided by

governments, foundations, companies, and individuals. Yet excerpts of recent

drafts of the Cyber Resilience Act (CRA) indicate that it could undermine

sustainability by introducing a burdensome compliance regime and potential

penalties if a maintainer decides to accept donations. The result will be

fewer resources flowing to already under-resourced maintainers. Open

source projects are often multi-stakeholder: they receive contributions from

developers building as individuals, volunteering in foundations, and working

for companies, large and small. The current text would regulate open source

projects unless they have “a fully decentralised development model.” Any

project where a corporate employee has commit rights would need to comply with

CRA obligations. This turns the win-wins of open source on their head. Projects

may ban maintainers or even contributors from companies, and companies may ban

their employees from contributing to open source at all.

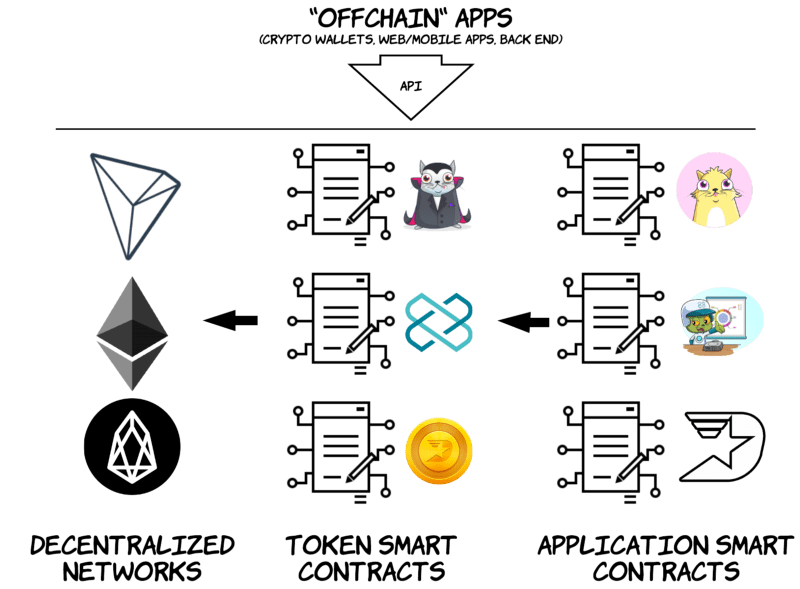

Building Trust in a Trustless World: Decentralized Applications Unveiled

In a DApp, smart contracts are used to store the program code and state of the

application. They replace the traditional server-side component in a regular

application. However, there are some important differences to consider.

Computation in smart contracts can be costly, so it's crucial to keep it

minimal. It's also essential to identify which parts of the application

require a trusted and decentralized execution platform. With Ethereum smart

contracts, you can create architectures where multiple smart contracts

interact with each other, exchanging data and updating their own variables.

The complexity is limited only by the block gas limit. Once you deploy your

smart contract, other developers may use your business logic in the future.

There are two key considerations when designing smart contract architecture.

First, once a smart contract is deployed, its code cannot be changed in any

way. The only exception is complete removal, which is possible if the

contract was programmed with an accessible SELFDESTRUCT opcode.
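
The cost argument can be sketched with a toy gas meter in Python. This is not EVM code: the class names, the per-operation costs, and the gas limit are hypothetical values chosen only to show how a fixed gas budget caps the work a chain of contract calls can do.

```python
class OutOfGas(Exception):
    """Raised when a transaction exceeds its gas budget."""


class GasMeter:
    def __init__(self, limit):
        self.limit = limit
        self.used = 0

    def charge(self, amount):
        if self.used + amount > self.limit:
            raise OutOfGas(f"needed {self.used + amount}, limit {self.limit}")
        self.used += amount


class Counter:
    STORAGE_WRITE_COST = 5  # hypothetical cost of updating stored state

    def __init__(self):
        self.value = 0

    def increment(self, meter):
        meter.charge(self.STORAGE_WRITE_COST)
        self.value += 1


class Batch:
    CALL_COST = 2  # hypothetical cost of calling another contract

    def __init__(self, counter):
        self.counter = counter

    def increment_many(self, times, meter):
        for _ in range(times):
            meter.charge(self.CALL_COST)
            self.counter.increment(meter)


def run_transaction(batch, times, gas_limit):
    """Run one transaction; undo its state changes if it runs out of gas."""
    meter = GasMeter(gas_limit)
    snapshot = batch.counter.value
    try:
        batch.increment_many(times, meter)
        return True
    except OutOfGas:
        batch.counter.value = snapshot  # failed transactions leave no trace
        return False


counter = Counter()
batch = Batch(counter)
small_ok = run_transaction(batch, times=3, gas_limit=100)    # uses 21 gas
large_ok = run_transaction(batch, times=20, gas_limit=100)   # would need 140
```

In this sketch the larger batch fails and the counter keeps the value from the successful run, which mirrors the reason to keep on-chain computation minimal.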

How the upcoming Cyber Resilience Act will impact privacy

The Cyber Resilience Act has several positive implications for privacy.

Firstly, by enforcing strict standards of cybersecurity in the development and

production of new devices, the Act creates an ecosystem where security is

ingrained in the product development cycle. Secondly, by creating

reporting obligations, the Act ensures that vulnerabilities are addressed

promptly, reducing the risk of personal data breaches and protecting the

privacy of individuals. Thirdly, the Act empowers consumers by ensuring they are

informed about the vulnerabilities in their devices and the measures they can

take to protect their personal data. From the perspective of data controllers,

particularly those who serve as manufacturers of devices regulated by the Act,

compliance requirements are raised to an even higher threshold. ...

Additionally, they will have to comply with reporting obligations regarding

vulnerabilities, even those that have already been fixed, regardless of

whether personal data was affected or not. Neglecting to fix known

vulnerabilities may also result in reputational consequences for data

controllers.

Crafting a cybersecurity resilience strategy: A comprehensive IT roadmap

In recent years, there has been a significant increase in the demand for

cybersecurity professionals due to the growing importance of protecting

sensitive information and systems from cyber threats. Organizations are

allocating larger budgets to enhance their cybersecurity measures, resulting

in a surge in the number of job opportunities in this field. According to the

latest Cyber Security Report by Michael Page, companies are actively seeking

skilled cybersecurity talent to address their security challenges. The report

reveals that globally, more than 3.5 million cybersecurity jobs are expected

to remain unfilled in 2023 due to a shortage of qualified professionals. This

shortage has created a sense of desperation among companies, as they struggle

to find suitable candidates to fill these critical roles. India is projected

to have over 1.5 million vacant cybersecurity positions by 2025, underscoring

the immense potential for career growth in this field. To effectively address

the ever-changing risks of digitalization and increasing cyberthreats, it is

crucial for organizations to implement a continuous security program.

The rise of OT cybersecurity threats

There is a need for a separate security program for OT that includes different

tools, governance, and processes. Companies can’t simply extend their IT

security program to OT, as the differences between the two domains are too

great. This may require two security operations centers (SOCs), which adds to the

complexity and costs of cybersecurity management. Bellack explains that some

CEOs or CIOs underestimate the risks associated with an OT attack. “It’s a

relatively new set of risks and a lot of executives don’t understand that they

are indeed in danger,” Bellack says. “Companies build smarter, faster, cheaper

factories using digital technologies because it’s great for business. But it

also expands their attack surface, and many people in charge don’t realize the

impacts or what they need to do to protect themselves.” ... “Machines are

components in a complex, revenue producing infrastructure that is a mix of

physical, digital, and human elements. Safety and availability are the key

focus, and security is sometimes forced to take a back seat if either of those

may be compromised,” explains Boals.

Quote for the day:

"Practice isn't the thing you do once

you're good. It's the thing you do that makes you good." --

Malcolm Gladwell
