Sustainable Computing - With An Eye On The Cloud

There are two parts to sustainability goals: (1) how can cloud service
providers make their data centers more sustainable, and (2) what practices can
cloud customers adopt to align better with the cloud and make their workloads
more sustainable? Let us first look at how businesses should plan for
sustainability. How should they build sustainability into their migration to
the cloud? The first aspect to consider, of course, is choosing the right cloud
service provider. It is essential to select a carbon-conscious provider based
on its commitment to sustainability and on how it plans, builds, powers,
operates, and eventually retires its physical data centers. The next aspect to
consider is the process of migrating services to an infrastructure-as-a-service
(IaaS) deployment model. Organizations should carry out such migrations without
re-engineering workloads for the cloud, as this alone can drastically reduce
energy use and carbon emissions compared with running the same workloads in an
on-premises data center.

The Intersection of AI and Data Stewardship: A New Era in Data Management

In addition to improving data quality, AI can also play a crucial role in
enhancing data security and privacy. With data breaches on the rise and growing
concern about data privacy, organizations must ensure that their data is
protected from unauthorized access and misuse. AI can help organizations
identify potential security risks and vulnerabilities in their data
infrastructure and implement appropriate measures to safeguard their data.
Furthermore, AI can help ensure compliance with data protection regulations
such as the General Data Protection Regulation (GDPR) by automating the
identification and management of sensitive data. Another area where AI and
data stewardship intersect is data governance: the set of processes, policies,
and standards that organizations use to manage their data assets properly. AI
can help organizations establish and maintain robust data governance practices
by automating the creation, updating, and enforcement of data policies and
rules.
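
The compliance point above, automating the identification and management of
sensitive data, can be illustrated with a minimal Python sketch. Note that it
swaps in a plain rule-based technique (regular expressions) rather than an AI
model, purely to show the mechanics: scan records, flag fields that look like
personal data, and mask them. The two patterns and the field names are
hypothetical and deliberately simple; they will miss real personal data and
also raise false positives (the phone pattern matches some dates, for example).

```python
import re
from typing import Dict, List

# Hypothetical, deliberately simple patterns; real sensitive-data detection
# (AI-based or otherwise) needs far broader and more careful coverage.
PATTERNS: Dict[str, re.Pattern] = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def classify(record: Dict[str, str]) -> Dict[str, List[str]]:
    """Return, per field, the kinds of sensitive data detected in its value."""
    findings: Dict[str, List[str]] = {}
    for name, value in record.items():
        kinds = [kind for kind, pat in PATTERNS.items() if pat.search(value)]
        if kinds:
            findings[name] = kinds
    return findings


def mask(record: Dict[str, str]) -> Dict[str, str]:
    """Replace every detected match with a placeholder such as [EMAIL]."""
    masked: Dict[str, str] = {}
    for name, value in record.items():
        for kind, pat in PATTERNS.items():
            value = pat.sub(f"[{kind.upper()}]", value)
        masked[name] = value
    return masked
```

For a record such as `{"contact": "ada@example.com"}`, `classify` reports the
field as holding an email address and `mask` returns `{"contact": "[EMAIL]"}`.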

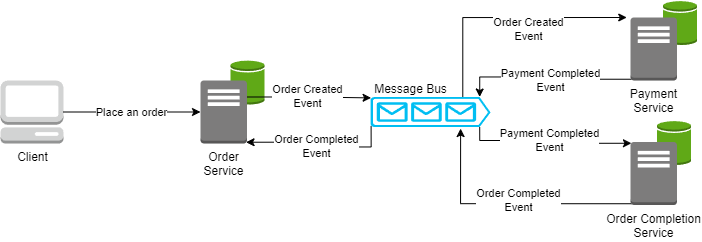

Saga Pattern With NServiceBus in C#

In its simplest form, a saga is a sequence of local transactions. Each
transaction updates data within a single service, and each service publishes
an event to trigger the next transaction in the saga. If any transaction
fails, the saga executes compensating transactions that undo the changes made
by the transactions that had already completed. The Saga Pattern is well suited
to long-running, distributed transactions in which each step needs to be
reliable and reversible. It allows us to keep data consistent across services
(eventually, rather than atomically) without distributed locks or two-phase
commit protocols, which can add significant complexity and performance
overhead. ... The Saga Pattern is a powerful tool in our distributed systems
toolbox, allowing us to manage complex business transactions in a reliable,
scalable, and maintainable way. Additionally, when we combine the Saga Pattern
with the Event Sourcing Pattern, we significantly enhance traceability by
building a complete sequence of events that can be analyzed to understand the
transaction flow in depth.
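
The compensation logic can be shown in a minimal, in-process Python sketch.
It is not NServiceBus (a C#/.NET framework) and makes no claims about that
library's API; the step names and the order example are hypothetical. It also
swaps the event-driven choreography described above for a central
orchestrator that calls each step in turn, because that keeps the rollback
logic in one place: when a step fails, the steps that already committed are
compensated in reverse order.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Context = Dict[str, object]


@dataclass
class SagaStep:
    """One local transaction plus the compensating action that undoes it."""
    name: str
    action: Callable[[Context], None]
    compensate: Callable[[Context], None]


@dataclass
class Saga:
    steps: List[SagaStep]
    log: List[str] = field(default_factory=list)

    def run(self, ctx: Context) -> bool:
        """Run steps in order; on failure, compensate completed steps newest-first."""
        completed: List[SagaStep] = []
        for step in self.steps:
            try:
                step.action(ctx)
            except Exception:
                self.log.append(f"failed:{step.name}")
                # Assumes a failed local transaction rolled itself back,
                # so only the earlier, committed steps are compensated.
                for done in reversed(completed):
                    done.compensate(ctx)
                    self.log.append(f"compensated:{done.name}")
                return False
            completed.append(step)
            self.log.append(f"done:{step.name}")
        return True


# Hypothetical order-processing steps, each standing in for a separate service.
def reserve_stock(ctx: Context) -> None:
    ctx["stock_reserved"] = True


def release_stock(ctx: Context) -> None:
    ctx["stock_reserved"] = False


def charge_payment(ctx: Context) -> None:
    ctx["charged"] = True


def refund_payment(ctx: Context) -> None:
    ctx["charged"] = False


def schedule_shipping(ctx: Context) -> None:
    if not ctx.get("address"):
        raise ValueError("no shipping address")
    ctx["shipped"] = True


def cancel_shipping(ctx: Context) -> None:
    ctx["shipped"] = False


def build_order_saga() -> Saga:
    return Saga([
        SagaStep("reserve_stock", reserve_stock, release_stock),
        SagaStep("charge_payment", charge_payment, refund_payment),
        SagaStep("schedule_shipping", schedule_shipping, cancel_shipping),
    ])
```

Calling `build_order_saga().run({})` fails at the shipping step (no address),
so the payment is refunded and then the stock released. A production saga
would also persist its state between steps so that it can resume after a crash.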

Efficiency and sustainability in legacy data centers

A recent analyst report found that a “wave of technological trends” is driving
change throughout the data center sector at an unprecedented pace, with
“rapidly diversifying business applications generating terabytes of data.” All
that data has to go somewhere, and as hyperscale cloud providers push some of
their workloads away from large, CapEx-intensive centralized hubs into Tier II
and Tier III colocation markets, it looks as though colocation facilities
(“colos”) may be in greater demand than ever. However, these circumstances
pose a serious challenge for the colocation sector, as “the resulting
workloads have exploded onto legacy data center infrastructures”, many of
which may be “ill-equipped to handle them.” The colocation market now finds
itself caught between two conflicting macroeconomic forces. On one hand,
growing demand puts more pressure on operators in Tier II and III markets to
build more facilities, faster, to accommodate larger and more complex
workloads; on the other, there is an existential need to reduce carbon
emissions and slash energy consumption.

A quantum computer that can’t be simulated on classical hardware could be a reality next year

Current-generation machines are still very much in the noisy era of quantum
computing. Ilyas Khan, who founded Cambridge Quantum out of the University of
Cambridge in 2014 and now works as chief product officer, told Tech Monitor
that we’re moving into “mid-stage NISQ” (noisy intermediate-scale quantum),
where the machines are still noisy but we’re seeing signs of logical qubits
and utility. Thanks to error correction, detection, and mitigation techniques,
many companies have been able to produce usable outcomes even on noisy,
error-prone qubits. But at this stage, the structure and performance of the
quantum circuits could still be simulated using classical hardware. That will
change next year, says Khan. “We think it’s important for quantum computers to
be useful in real-life problem solving,” he says. “Our current system model,
H2, has 32 qubits in its current instantiation, all to all connected with
mid-circuit measurement.”
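
Some arithmetic shows why classical simulation eventually runs out of road. A
brute-force state-vector simulator stores 2^n complex amplitudes for n qubits;
assuming double-precision complex numbers (16 bytes each), memory doubles with
every added qubit. This sketch covers only that one method; other classical
techniques, such as tensor-network simulators, can handle some circuits far
more cheaply, so it illustrates scaling rather than where Khan draws the line.

```python
BYTES_PER_AMPLITUDE = 16  # one complex128 value: two 64-bit floats


def state_vector_bytes(n_qubits: int) -> int:
    """Memory for a dense state vector of n qubits: 2**n complex amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


def human(nbytes: int) -> str:
    """Format a byte count with binary (1024-based) units."""
    value = float(nbytes)
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB"):
        if value < 1024:
            return f"{value:g} {unit}"
        value /= 1024
    return f"{value:g} EiB"


for n in (32, 40, 50):
    print(n, "qubits:", human(state_vector_bytes(n)))
```

At 32 qubits the state vector needs 64 GiB, within reach of a single large
server, which is consistent with the point that today’s circuits can still be
simulated; every further 10 qubits multiplies the requirement by 1,024.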

Protecting your business through test automation

Inadequate pre-launch testing forces teams to scramble after launch to fix
faulty software applications with renewed urgency, under the added pressure of
managing the potential loss of revenue and damage to brand reputation caused by
the defect. When faulty software reaches end users, dissatisfied customers
become a problem with far longer-reaching effects, as users pass their negative
experiences on to others. The negative feedback could also deter potential new
customers from ever trying the software. So why is software not being tested
properly? Changing customer behaviours in the financial services sector, as
well as increased competition from digital-native fintech start-ups, have led
many organisations to invest heavily in digital transformation in recent
years. With companies under more pressure than ever to respond to market
demands and user experience trends through increasingly frequent software
releases, the sheer volume of software needing testing has skyrocketed,
placing a further burden on resources already stretched to breaking point.

Implementing zero trust with the Internet of Things (IoT)

There’s a strongly held view that it simply isn’t possible to trust any IoT
device, even if it’s equipped with automatic security updates. “As a former
CIO, my guidance is that preparation is the best defense,” Archundia tells
ITPro. IoT devices are often just too much of a risk; they’re too soft an entry
point into the organization to overlook. It’s best to assume each device is a
hole in an enterprise’s defenses. Not every device will be a hole at all
times, but some will be at least some of the time. As long as the hole isn’t
plugged, it can be found and exploited. That’s actually fine in a zero trust
environment, because zero trust assumes every single act, by a human or a
device, could be malicious. ... “Because zero trust focuses on continuously
verifying and placing security as close to each asset as possible, a cyber
attack need not have far-reaching consequences in the organization,” he says.
“By relying on techniques such as secured zones, the organization can
effectively limit the blast radius of an attack, ensuring that a successful
attack will have limited benefits for the threat agent.”
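
The “assume every act could be malicious” and “secured zones” ideas can be
sketched as a deny-by-default check that runs on every request. This is a
minimal illustration only: the device IDs, zone names, and posture signals are
hypothetical, and a real deployment would take them from an identity provider
and network enforcement points rather than a Python dictionary.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class Request:
    device_id: str
    credential_valid: bool  # assumed result of an identity check, e.g. a device certificate
    firmware_patched: bool  # assumed device-posture signal
    target_zone: str        # the secured zone the device is trying to reach


# Hypothetical policy: each device may reach only the zones it actually needs.
ZONE_POLICY: Dict[str, FrozenSet[str]] = {
    "thermostat-17": frozenset({"building-hvac"}),
    "camera-04": frozenset({"video-storage"}),
}


def authorize(req: Request) -> bool:
    """Deny by default and evaluate every request on its own merits."""
    if not req.credential_valid:
        return False
    if not req.firmware_patched:
        return False
    # Unknown devices get an empty allow-list, so they reach nothing.
    return req.target_zone in ZONE_POLICY.get(req.device_id, frozenset())
```

With this policy, a compromised `camera-04` that still holds valid credentials
can reach only `video-storage`; a request to any other zone is refused, which
is the kind of blast-radius limit the quote describes.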

US Data Privacy Relationship Status: It’s Complicated

The American Data Privacy and Protection Act (ADPPA) is a bill that, if
passed, would become the first set of federal privacy regulations to supersede
state laws. Although it passed a House of Representatives commerce committee
vote by a 53-2 margin in July 2022, the bill is still awaiting a full House
vote and then a Senate vote. In the US, 10 states have enacted comprehensive
privacy laws: California, Colorado, Connecticut, Indiana, Iowa, Montana,
Tennessee, Texas, Utah, and Virginia. More than a dozen other states have
proposed bills at various stages of progress. The absence of an overarching
federal law means companies must pick and choose which rules to follow based
on where they do business. Some businesses opt to start with the most
stringent law and model their own data privacy standards accordingly. The
current global standard for privacy is Europe’s 2018 General Data Protection
Regulation (GDPR), which has become the model for other data privacy
proposals. Since many large US companies do business globally, they are very
familiar with GDPR.

KillNet DDoS Attacks Further Moscow's Psychological Agenda

Mandiant's assessment of the 500 DDoS attacks launched by KillNet and
associated groups from Jan. 1 through June 20 offers further evidence that
the collective isn't some grassroots assembly of independent, patriotic
hackers. "KillNet's targeting has consistently aligned with established and
emerging Russian geopolitical priorities, which suggests that at least part
of the influence component of this hacktivist activity is intended to
directly promote Russia's interests within perceived adversary nations
vis-a-vis the invasion of Ukraine," Mandiant said. Researchers said KillNet
and its affiliates often attack technology, social media and transportation
firms, as well as NATO. ... To hear KillNet tell it on its Telegram channel,
these hacktivists' attacks are nothing short of devastating. The same goes for
other past and present members of the KillNet collective, including KillMilk,
Tesla Botnet, Anonymous Russia and Zarya. Recent attacks by Anonymous Sudan
have involved paid cloud infrastructure and had a greater impact, although
it's unclear whether this will become the norm.

Agile vs. Waterfall: Choosing the Right Project Methodology

Choosing the right project management methodology lays the foundation for
effective planning, collaboration, and delivery. Failing to select an
appropriate methodology can lead to challenges and setbacks that hinder
project progress and ultimately affect overall success. Let's delve into why
it's crucial to choose the right project management methodology and explore
in depth what can go wrong if an unsuitable methodology is employed. ... The
right methodology enables effective resource allocation and utilization.
Projects require a myriad of resources, including human, financial, and
technological ones. If you select an inappropriate methodology, you may manage
resources inefficiently, causing budget overruns, underutilized skills, and
time delays. For instance, an Agile methodology, which relies heavily on
frequent collaboration and iterative development, may not suit projects with
limited resources and a hierarchical team structure.

Quote for the day:

"People leave companies for two reasons. One, they don't feel appreciated.
And two, they don't get along with their boss." -- Adam Bryant
