Tackling tech anxiety within the workforce

The average employee spends over two hours each day on work admin, manual

paperwork, and unnecessary meetings. As a result, 81% of workers are unable to

dedicate more than three hours of their day to creative, strategic tasks — the

very work most ill-suited to machines. Fortunately, this is where digital

collaboration comes in. When AI is set to automate certain processes, employees

are freer to work on what they love, which often also happens to be what they do

best. This extra time back then offers more opportunities to learn, create, and

innovate on the job. Take Google’s ‘20% time’ rule, for instance. The policy

involves Google employees spending a fifth of their week away from their usual,

everyday responsibilities. Instead, they use the time to explore, work, and

collaborate on exciting ideas that might not pay off immediately, or even at

all, but could eventually reveal big business opportunities. It’s a win-win

model for almost every business. At worst, colleagues enjoy the time to

strengthen team bonds, improve problem-solving skills, and boost their morale.

And at best, they uncover incredible ideas that can change the course of the

company.

NFTs Emerge as the Next Enterprise Attack Vector

"The most common attacks try to trick cryptocurrency enthusiasts into handing

over their wallet’s recovery phrase," he says. Users who fall for the scam often

stand to lose access to their funds permanently, he says. "Bogus Airdrops, which

are fake promotional giveaways, are also common and ask for recovery phrases or

have the victim connect their wallets to malicious Airdrop sites," he adds,

noting that many fake Airdrop sites are imitations of real NFT projects. And

with so many small unverified projects around, it’s often hard to determine

authenticity, he notes. Oded Vanunu, head of product vulnerability at Check

Point Software, says what his company has observed by way of NFT-centric attacks

is activity focused on exploiting weaknesses in NFT marketplaces and

applications. "We need to understand that all NFT or crypto markets are

using Web3 protocols," Vanunu says, referring to the emerging idea of a new

Internet based on blockchain technology. Attackers are trying to figure out new

ways to exploit vulnerabilities in applications connected to decentralized

networks such as blockchain, he notes.

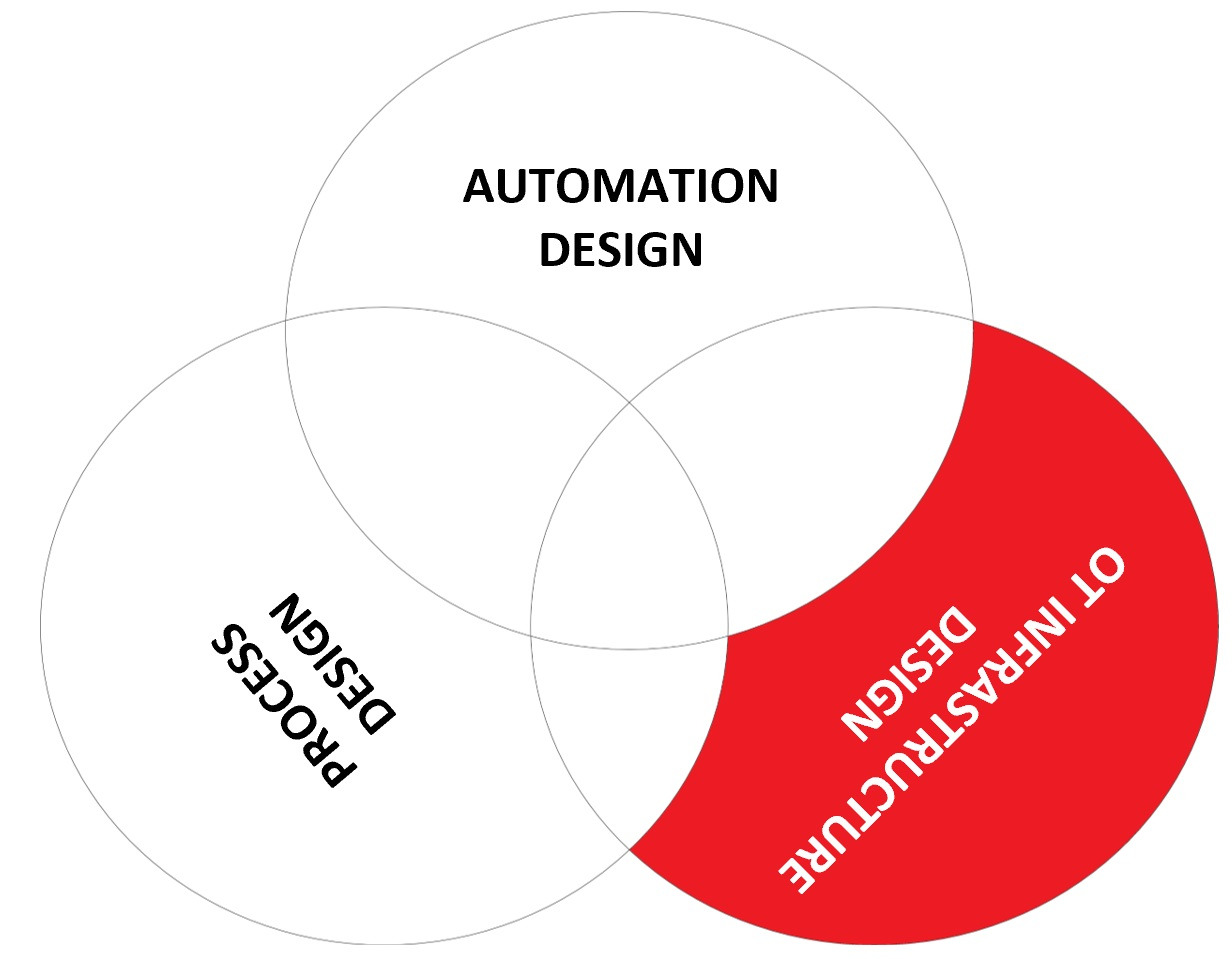

The OT security skills gap

Responsibility for OT security is often combined with the OT infrastructure

design role, but in the OT world this is, in my opinion, less logical

because it is the automation design engineer who has the wider overview of

overall business functions in the system. If OT were like IT, that is, primarily

data manipulation, it would make sense to put the lead with OT infrastructure design.

But because OT is not only data manipulation but also initiating various control

actions that need to operate within a restricted operating window, it makes

sense to give automation design this coordinating role. This is because

automation design oversees all three skill elements and has more detailed

knowledge of the production process than the OT infrastructure design role. It

is very comparable to cyber security in a bank, where the lead role is linked to

the overall business process and the infrastructure security is in a more

supportive role. Finally, there is the process design role. What are the cyber

security responsibilities for this role? First of all, the process design role

understands all the process deviations that can lead to trouble, and they know

what that trouble is, they know how to handle it, and they have set criteria for

limiting the risk that this trouble occurs.

Ransomware-as-a-service: Understanding the cybercrime gig economy and how to protect yourself

The cybercriminal economy—a connected ecosystem of many players with different

techniques, goals, and skillsets—is evolving. The industrialization of attacks

has progressed from attackers using off-the-shelf tools, such as Cobalt Strike,

to attackers being able to purchase access to networks and the payloads they

deploy to them. This means that the impact of a successful ransomware and

extortion attack remains the same regardless of the attacker’s skills. RaaS is

an arrangement between an operator and an affiliate. The RaaS operator develops

and maintains the tools to power the ransomware operations, including the

builders that produce the ransomware payloads and payment portals for

communicating with victims. The RaaS program may also include a leak site to

share snippets of data exfiltrated from victims, allowing attackers to show that

the exfiltration is real and try to extort payment. Many RaaS programs further

incorporate a suite of extortion support offerings, including leak site hosting

and integration into ransom notes, as well as decryption negotiation, payment

pressure, and cryptocurrency transaction services.

U.S. White House releases ambitious agenda to mitigate the risks of quantum computing

The first directive, the executive order, seeks to advance QIS by placing the

National Quantum Initiative Advisory Committee, the federal government’s main

independent expert advisory body for quantum information science and

technology, under the authority of the White House. “The National Quantum

Initiative, established by a law known as the NQI Act, encompasses activities

by executive departments and agencies (agencies) with membership on either the

National Science and Technology Council (NSTC) Subcommittee on Quantum

Information Science (SCQIS) or the NSTC Subcommittee on Economic and Security

Implications of Quantum Science (ESIX).” ... The national security memorandum

(NSM) plans to tackle the risks posed to encryption by quantum computing. It

establishes a national policy to promote U.S. leadership in quantum computing

and initiates collaboration among the federal government, industry, and

academia as the nation begins migrating to new quantum-resistant cryptographic

standards developed by the National Institute of Standards and Technology

(NIST).

Industry pushes back against India's data security breach reporting requirements

India's Internet Freedom Foundation has offered an extensive criticism of the

regulations, arguing that they were formulated and announced without

consultation, lack a data breach reporting mechanism that would benefit

end-users, and include data localization requirements that could prevent some

cross-border data flows. The foundation also points out that the privacy

implications of the rules – especially five-year retention of personal

information – are very significant at a time when India's Draft

Data Protection Bill has proven so controversial it has failed to reach a vote

in Parliament, and debate about digital privacy in India is ongoing and

fierce. Indian outlet Medianama has quoted infosec researcher Anand

Venkatanarayanan, who claimed one way to report security incidents to CERT-In

involves a non-interactive PDF that has to be printed out and filled in by

hand. Venkatanarayanan also pointed out that the rules' requirement to report

incidents as trivial as port scanning has not been explained – is it one PDF

per IP address scanned, or can one report cover many IP addresses?

When—and how—to prepare for post-quantum cryptography

Consider data shelf life. Some data produced today—such as classified

government data, personal health information, or trade secrets—will still be

valuable when the first error-corrected quantum computers are expected to

become available. For instance, a long-term life insurance contract may

already be sensitive to future quantum threats because it could still be

active when quantum computers become commercially available. Any long-term

data transferred now on public channels will be at risk of interception and

future decryption. Because regulations on PQC do not yet exist, the

possibility of data transferred today being decrypted in the future does not

yet pose a compliance risk. For the moment, far more significant are the

future consequences for organizations, for their customers and suppliers, and

for those relationships. However, regulatory considerations will also become

relevant as the field develops, which could speed up the need for some

organizations to act. Just as with data, some critical physical systems

developed today ... will still be in use when the first fully error-corrected

quantum computer is expected to come online.
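
The shelf-life reasoning above can be sketched as a simple check. This is a minimal illustration, not a method from the article: it uses the rule of thumb often attributed to Michele Mosca, in which data is exposed if the time it must stay secret plus the time needed to migrate to quantum-resistant cryptography exceeds the time until a capable quantum computer exists. The function name, the migration-time input, and all figures are assumptions chosen for illustration.

```python
def at_risk_of_future_decryption(shelf_life_years: float,
                                 migration_years: float,
                                 years_until_quantum: float) -> bool:
    """Return True if data sent today could still be sensitive when an
    error-corrected quantum computer is assumed to be available."""
    return shelf_life_years + migration_years > years_until_quantum


# All numbers are hypothetical placeholders, not forecasts.
# A long-term insurance contract: 30-year shelf life, 5-year migration.
print(at_risk_of_future_decryption(30, 5, 15))
# A short-lived session record: 2-year shelf life, 3-year migration.
print(at_risk_of_future_decryption(2, 3, 15))
```

The value of a check like this is mostly in forcing an explicit estimate of each input per data category. The threshold itself is only as good as the assumed arrival date.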

If we compare railways with, for example, the banking sector, then we see we

have some catching up to do. But given that we are used to dealing

with risks, I am confident that this sector is fully able to develop the

necessary mechanisms to stay resilient to these new emerging threats. Of

course, we can fall victim to some kind of attack someday just like any other

organization. It is up to us to be prepared and stay resilient; I am confident

we can do that. ... Actually, any technique, tactic, or procedure (TTP) that

can be used in other organizations as well. What we will see is, now that our

sector is speeding up the digitization process, that the attack surface is

broadening and becoming more complex. Trains will become Teslas on rails,

having many connections with other digital services such as the European Rail

Traffic Management System (ERTMS) and driving via Automatic Train Operation

(ATO). The obvious consequence is that we need to be able to withstand those

TTPs and plan for mitigation in our digital roadmaps. In an ideal

world, we develop our services cybersafe by design and default. There’s work

to do there!

How data can improve your website’s accessibility

With an understanding of how data can inform accessibility, it’s time to apply

that data towards accessibility improvements. This entails framing your tracked

data in the context of the Web Content Accessibility Guidelines (WCAG), which

provide the latest standards for ensuring web accessibility. ... WCAG 2.1

is built on four accessibility principles (perceivability, operability,

understandability, and robustness) plus a set of conformance requirements. Your KPIs for accessibility

should be tied to these features. For example, measure conformance through the

number of criteria violations that occur through site testing. This and similar

metrics will help you identify areas of improvement. ... Your approach to

gathering accessibility data should not be limited to one tool or testing

procedure. Instead, diversify your data to ensure quality. Both quantitative and

qualitative metrics factor in, including user feedback, numbers of flagged

issues, and insights from all kinds of tests and validation procedures. ... The

gamut of usability considerations is broader than most testers can accommodate

in one go.
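
As a rough illustration of the violation-count KPI mentioned above, the sketch below tallies test findings per WCAG principle. It relies on the fact that WCAG success criteria are numbered so that the first digit identifies the principle (1 perceivable, 2 operable, 3 understandable, 4 robust). The finding format, the function name, and the sample data are hypothetical. Real testing tools export their own schemas.

```python
from collections import Counter

# The first digit of a WCAG success criterion identifies its principle.
PRINCIPLES = {
    "1": "perceivable",
    "2": "operable",
    "3": "understandable",
    "4": "robust",
}


def violations_by_principle(findings):
    """Count findings per WCAG principle from criterion ids such as '1.4.3'."""
    counts = Counter()
    for finding in findings:
        first_digit = finding["criterion"].split(".")[0]
        counts[PRINCIPLES.get(first_digit, "unknown")] += 1
    return dict(counts)


# Hypothetical findings merged from several tools and manual reviews.
sample_findings = [
    {"criterion": "1.1.1", "page": "/home"},
    {"criterion": "1.4.3", "page": "/home"},
    {"criterion": "2.1.1", "page": "/checkout"},
    {"criterion": "4.1.2", "page": "/checkout"},
]

print(violations_by_principle(sample_findings))
```

Tracking these counts over time, alongside qualitative user feedback, gives a trend per principle rather than a single pass/fail score.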

Low Code: Satisfying Meal or Junk Food?

“If low code is treated as strictly an IT tool and excludes the line of

business -- just like manual coding -- you seriously run the risk of just

creating new technical debt, but with pictures this time,” says Rachel

Brennan, vice president of Product Marketing at Bizagi, a low-code process

automation provider. However, when no-code and low-code platforms are used as

much by citizen developers as by software developers, whether low code satisfies the

hunger for more development depends on “how” it is used rather than by whom.

But first, it's important to note the differences between low-code platforms

for developers and those for citizen developers. Low code for the masses

usually means visual tools and simple frameworks that mask the complex coded

operations that lie beneath. Typically, these tools can only realistically be

used for fairly simple applications. “Low-code tools for developers offer

tooling, frameworks, and drag-and-drop options but ALSO include the option to

code when the developer wants to customize the application -- for example, to

develop APIs, or to integrate the application with other systems, or to

customize front end interfaces,” explains Miguel Valdes Faura

Quote for the day:

"One machine can do the work of fifty

ordinary men. No machine can do the work of one extraordinary man." --

Elbert Hubbard
