OAuth Security in a Cloud Native World

As you integrate OAuth into your applications and APIs, you will realize that

the authorization server you have chosen is a critical part of your architecture

that enables solutions for your security use cases. Using up-to-date security

standards will keep your applications aligned with security best practices. Many

of these standards map to company use cases, some of which are essential in

certain industry sectors. APIs must validate JWT access tokens on every request

and authorize them based on scopes and claims. This is a mechanism that scales

to arbitrarily complex business rules and spans across multiple APIs in your

cluster. Similarly, you must be able to implement best practices for web and

mobile apps and use multiple authentication factors. The OAuth framework

provides you with building blocks rather than an out-of-the-box solution.

Extensibility is thus essential for your APIs to deal with identity data

correctly. One critical area is the ability to add custom claims from your

business data to access tokens. Another is the ability to link accounts reliably

so that your APIs never duplicate users if they authenticate in a new way, such

as when using a WebAuthn key.
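
To make the scopes-and-claims step concrete, here is a minimal sketch of the authorization an API could apply after it has validated a JWT access token. It assumes that signature, issuer, audience and expiry checks were already done by a JWT library, and that the `scope` claim is a space-delimited string. The `authorize` function, the `customer_id` and `role` claims, and the ownership rule are hypothetical, standing in for whatever custom claims your authorization server issues.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessDecision:
    allowed: bool
    reason: str


def authorize(claims: dict, required_scope: str, resource_owner: str) -> AccessDecision:
    """Authorize a request from the claims of an already-validated JWT.

    Assumption: signature, issuer, audience and expiry were verified
    before this function is called.
    """
    # Assumption: scopes arrive as a space-delimited string in "scope".
    granted = set(claims.get("scope", "").split())
    if required_scope not in granted:
        return AccessDecision(False, f"missing scope: {required_scope}")

    # Hypothetical business rule: non-admin callers may only access
    # resources owned by the customer named in a custom "customer_id" claim.
    if claims.get("role") != "admin" and claims.get("customer_id") != resource_owner:
        return AccessDecision(False, "resource belongs to another customer")

    return AccessDecision(True, "ok")


claims = {"sub": "u1", "scope": "orders:read profile", "customer_id": "c-42"}
print(authorize(claims, "orders:read", "c-42"))
print(authorize(claims, "orders:write", "c-42"))
```

The scope check is coarse-grained and the claim check carries the business rule, which is why custom claims in the access token matter: the API can decide without a further lookup of who the caller is.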

APIs Outside, Events Inside

It goes without saying that external clients of an application calling the same

API version — the same endpoint — with the same input parameters expect to see

the same response payload over time. The need of end users for such certainty is

once again understandable but stands in stark contrast to the requirements of

the distributed application (DA) itself. For distributed applications to evolve and grow at the

speed required in today’s world, those autonomous development teams assigned to

each constituent component need to be able to publish often-changing,

forward-and-backward-compatible payloads as a single event to the same fixed

endpoints using a technique I call "version-stacking." ... A key concern of

architects when exposing their applications to external clients via APIs is,

quite rightly, security. Those APIs allow external users to effect changes

within the application itself, so they must be rigorously protected, requiring

many and frequent authorization steps. These security steps have obvious

implications for performance, but regardless, they do seem necessary.

More money for open source security won’t work

The best guarantor of open source security has always been the open source

development process. Even with OpenSSF’s excellent plan, this remains true.

The plan, for example, promises to “conduct third-party code reviews of up to

200 of the most critical components.” That’s great! But guess what makes

something a “critical component”? That’s right—a security breach that roils

the industry. Ditto “establishing a risk assessment dashboard for the top open

source components.” If we were good at deciding in advance which open source

components are the top ones, we’d have fewer security vulnerabilities because

we’d find ways to fund them so that the developers involved could better care

for their own security. Of course, often the developers responsible for “top

open source components” don’t want a full-time job securing their software. It

varies greatly between projects, but the developers involved tend to have very

different motivations for their involvement. No one-size-fits-all approach to

funding open source development works ...

Prepare for What You Wish For: More CISOs on Boards

Recently, the Securities and Exchange Commission (SEC) made a welcome move for

cybersecurity professionals. In proposed amendments to its rules to enhance

and standardize disclosures regarding cybersecurity risk management, strategy,

governance, and incident reporting, the SEC outlined requirements for public

companies to report any board member’s cybersecurity expertise. The change

reflects a growing belief that disclosure of cybersecurity expertise on boards

is important as potential investors consider investment opportunities and

shareholders elect directors. In other words, the SEC is encouraging U.S.

public companies to beef up cybersecurity expertise in the boardroom.

Cybersecurity is a business issue, particularly now as the attack surface

continues to expand due to digital transformation and remote work, and cyber

criminals and nation-state actors capitalize on events, planned or unplanned,

for financial gain or to wreak havoc. The world in which public companies

operate has changed, yet the makeup of boards doesn’t reflect that.

12 steps to building a top-notch vulnerability management program

With a comprehensive asset inventory in place, Salesforce SVP of information

security William MacMillan advocates taking the next step and developing an

“obsessive focus on visibility” by “understanding the interconnectedness of

your environment, where the data flows and the integrations.” “Even if you’re

not mature yet in your journey to be programmatic, start with the visibility

piece,” he says. “The most powerful dollar you can spend in cybersecurity is

to understand your environment, to know all your things. To me that’s the

foundation of your house, and you want to build on that strong foundation.”

... To have a true vulnerability management program, multiple experts say

organizations must make someone responsible and accountable for its work and

ultimately its successes and failures. “It has to be a named position, someone

with a leadership job but separate from the CISO because the CISO doesn’t have

the time for tracking KPIs and managing teams,” says Frank Kim, founder of

ThinkSec, a security consulting and CISO advisory firm, and a SANS Fellow.

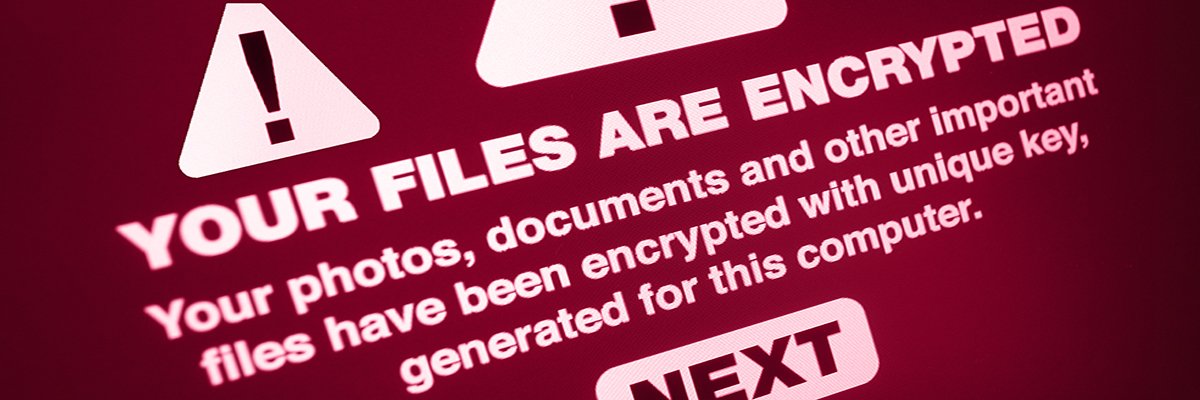

The limits and risks of backup as ransomware protection

One option is to use so-called “immutable” backups. These are backups that,

once written, cannot be changed. Backup and recovery suppliers are building

immutable backups into their technology, often targeting it specifically as a

way to counter ransomware. The most common method for creating immutable

backups is through snapshots. In some respects, a snapshot is always

immutable. However, suppliers are taking additional measures to prevent these

backups being targeted by ransomware. Typically, this is by ensuring the

backup can only be written to, mounted or erased by the software that created

it. Some suppliers go further, such as requiring two people to use a PIN to

authorise overwriting a backup. The issue with snapshots is the volume of data

they create, and the fact that those snapshots are often written to tier one

storage, for reasons of rapidity and to lessen disruption. This makes

snapshots expensive, especially if organisations need to keep days, or even

weeks, of backups as a protection against ransomware. “The issue with snapshot

recovery is it will create a lot of additional data,” says Databarracks’

Mote.

Four ways towards automation project management success

Having a fundamental understanding of the relationship between problem and

outcome is essential for automation success. Process mining is one of the best

options a business has to expedite this process. Leyla Delic, former CIDO at

Coca-Cola İçecek, eloquently describes process mining as a “CT scan of your

processes”, taking stock and ensuring that the automation that you want to

implement is actually problem-solving for the business. With process mining

one should expect to experiment blindly at first, learn what works,

and only then expand and scale for real outcomes. A recent Forrester report

found that 61% of executive decision-makers either are, or are looking at,

using process mining to simplify their operations. Constructing a detailed,

end-to-end understanding of processes provides the necessary basis to move

from siloed, specific task automation to more holistic process automation –

making a tangible impact. With the most advanced tools available today, one

can even understand in real-time the actual activities and processes of

knowledge workers across teams and tools, and receive automatic

recommendations on how to improve work.

The Power of Decision Intelligence: Strategies for Success

While chief information officers and chief data officers are the traditional

stakeholders and purchase decision makers, Kohl notes that he’s seeing

increased collaboration between IT and other business management areas when it

comes to defining analytics requirements. “Increasingly, line-of-business

executives are advocating for analytics platforms that enable data-driven

decision making,” he says. With an intelligent decisioning strategy,

organizations can also use customer data, preferably in real time, to

understand exactly where customers are on their journeys and respond accordingly, be it with an offer for a

more tailored new service or outreach with help if they’re behind on a

payment. Don Schuerman, CTO of Pega, says this helps ensure that every

interaction is helpful and empathetic, versus just a blind email sent without

any context. In the same way that a good intelligence integration strategy can

benefit customers, the ability to analyze employee data and understand

roadblocks in their workflows helps solve these problems faster and create

better processes, resulting in happier, more productive employees.

Digital exhaustion: Redefining work-life balance

As workers continue to create and collaborate in digital spaces, one of the

best things we can do as leaders is to let go. Let go of preconceived

schedules, of always knowing what someone is working on, of dictating when and

how a project should be accomplished – in effect, let go of micromanagement.

Instead, focus on hiring productive, competent workers and trust them to do

their jobs. Don’t manage tasks – gauge results. Use benchmarks and deadlines

to assess effectiveness and success. This will make workers feel more

empowered and trusted. Such “human-centric” design, as Gartner explains,

emphasizes flexible work schedules, intentional collaboration, and

empathy-based management to create a sustainable environment for hybrid work.

According to Gartner’s evaluation, a human-centric approach to work stimulates

a 28 percent rise in overall employee performance and a 44 percent decrease in

employee fatigue. The data supports the importance of recognizing and reducing

the impacts of digital exhaustion.

Late-Stage Startups Feel the Squeeze on Funding, Valuations

Investors are now tracking not only a prospect's burn rate but also their burn

multiple, which Sekhar says measures how much cash a startup is spending

relative to the amount of ARR it is adding each year. As a result, he says,

deals that last year took two days to get done are this year taking two weeks

since investors are engaging in far more due diligence to ensure they're

betting on a quality asset. "We've seen this in the past where companies spend

irresponsibly and just run off a cliff expecting that they'll raise yet

another round," Sekhar says. "I think we're going back to basics and focusing

on building great businesses." Midstage and late-stage security startups have

begun examining how many months of capital they have and whether they should

slow hiring to buy more time to prove their value, Scheinman says. Startups

want to extend how long they can operate before they have to approach

investors for more money, given all the uncertainty in the market, he says. As

a result, Scheinman says, venture-backed firms have cut back on hiring and

technology purchases and placed greater emphasis on hitting their sales

numbers.
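
As a rough illustration of the burn multiple Sekhar describes, the ratio can be sketched as cash burned divided by the ARR added over the same period. The function name, the example figures, and the choice to reject non-positive ARR growth are assumptions made for illustration; they do not come from the article.

```python
def burn_multiple(cash_burned: float, net_new_arr: float) -> float:
    """Cash spent per unit of ARR added over the same period.

    Lower is better: 1.0 means one dollar burned per dollar of new ARR.
    """
    if net_new_arr <= 0:
        # Assumption: the ratio is treated as undefined when ARR did not grow.
        raise ValueError("net new ARR must be positive")
    return cash_burned / net_new_arr


# Hypothetical startup: burned $10M in a year while adding $5M of ARR.
print(burn_multiple(10_000_000, 5_000_000))  # 2.0
```

Unlike burn rate alone, this ratio ties spending to the growth it produced, which fits the added due diligence described above.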

Quote for the day:

"Ninety percent of leadership is the ability to communicate something people want." -- Dianne Feinstein
