You’ve migrated to the cloud, now what?

Take cost governance, for example. In an on-premises infrastructure world, costs increase in discrete increments when we purchase equipment, sign a vendor contract, or hire staff. These items are relatively easy to control because they require management approval and are usually subject to rigid oversight. In the cloud, however, an enterprise might have 500 virtual machines one minute and 5,000 a few minutes later when autoscaling functions engage to meet demand. Similar differences abound in security management and workload reliability. Technology leaders with legacy thinking face harsh trade-offs between control and the benefits of the cloud. Those benefits, which can include agility, scalability, lower cost, and innovation, require heavy reliance on automation rather than manual legacy processes. As a result, the skill sets of an existing team may not be the skill sets needed in the new cloud order. When writing a few lines of code supplants plugging in drives and running cable, team members often feel threatened. Success can therefore require not only a different way of thinking but also a different style of leadership.
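The scale of that jump is easier to see with numbers. This Python sketch assumes a hypothetical flat price of $0.10 per VM-hour and a hypothetical $100/hour budget (neither comes from the article) and shows the kind of automated guardrail that has to stand in for a purchase-approval step when autoscaling can multiply a fleet tenfold in minutes.

```python
from dataclasses import dataclass

# ASSUMPTION: a single flat price per VM-hour, for illustration only. Real cloud
# pricing varies by instance type, region, and discounts. Integer cents avoid
# floating-point rounding in the arithmetic.
PRICE_CENTS_PER_VM_HOUR = 10


@dataclass
class BudgetCheck:
    vm_count: int
    hourly_cost_cents: int
    over_budget: bool


def check_hourly_budget(vm_count: int, budget_cents_per_hour: int,
                        price_cents: int = PRICE_CENTS_PER_VM_HOUR) -> BudgetCheck:
    """Project the hourly bill for a fleet size and flag it if it exceeds the budget."""
    cost = vm_count * price_cents
    return BudgetCheck(vm_count, cost, cost > budget_cents_per_hour)


# Hypothetical $100/hour budget, checked before and after a scale-out event.
for fleet_size in (500, 5_000):
    result = check_hourly_budget(fleet_size, budget_cents_per_hour=10_000)
    print(f"{result.vm_count} VMs -> ${result.hourly_cost_cents / 100:.2f}/h, "
          f"over budget: {result.over_budget}")
```

At the assumed price, 500 VMs cost $50.00 per hour and 5,000 cost $500.00, so the same check that passes before the scale-out fails after it. In practice a check like this would run on a schedule against billing or inventory data; the function and names here are illustrative only.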

A new edge in global stability: What does space security entail for states?

Observers have recently recentred the debate on one aspect of space security: anti-satellite (ASAT) technologies. They rank the destruction of assets placed in outer space high among the issues they consider most pressing and in need of immediate action. In response, some researchers and experts have put forward proposals for a transparent and cooperative approach that promotes the cessation of destructive operations, whether conducted in outer space or launched from the ground. One approach was the development of ASAT Test Guidelines, first initiated in 2013 by a Group of Governmental Experts on Outer Space Transparency and Confidence-Building Measures. Another takes the form of general calls to ban anti-satellite tests, not only to build a more comprehensive arms control regime for outer space and prevent the production of debris, but also to reduce threats to space security and regulate destabilising force. Many members of the space community have thrown their support behind a letter urging the United Nations (UN) General Assembly to consider a kinetic ASAT Test Ban Treaty to maintain safe access to Earth orbit and reduce concerns about collisions and the proliferation of space debris.


From data to knowledge and AI via graphs: Technology to support a knowledge-based economy

Leveraging connections in data is a prominent way of getting value out of data. Graph is the best way of leveraging connections, and graph databases excel at this: they make expressing and querying connections easy and powerful. This is why graph databases are a good match for use cases that depend on connections in data, such as anti-fraud, recommendations, Customer 360, or master data management. From operational applications to analytics, and from data integration to machine learning, graph gives you an edge. Graph analytics and graph databases are not the same thing. Graph analytics can be performed on any back end, since it only requires reading graph-shaped data. Graph databases are databases that fully support both reads and writes using a graph data model, API, and query language. Graph databases have been around for a long time, but the attention they have received since 2017 is off the charts. AWS and Microsoft moving into the domain, with Neptune and Cosmos DB respectively, exposed graph databases to a wider audience.
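To make "leveraging connections" concrete for the anti-fraud case, here is a minimal Python sketch that is not tied to any graph database. It assumes a hypothetical set of accounts linked by shared attributes (such as a device or phone number) and uses breadth-first search to find every account within a given number of hops of a flagged one. A graph database would typically express the same traversal as a single query in its own query language; this version only reads graph-shaped data, which is the graph analytics side of the distinction drawn above.

```python
from collections import deque

# Hypothetical toy data: each edge links two accounts that share an attribute
# (for example, the same device or phone number). Edges are undirected.
EDGES = [
    ("acct_1", "acct_2"),
    ("acct_2", "acct_3"),
    ("acct_3", "acct_4"),
    ("acct_5", "acct_6"),
]


def build_adjacency(edges):
    """Turn an edge list into an adjacency map: node -> set of neighbours."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def within_hops(adj, start, max_hops):
    """Breadth-first search returning {node: hop distance} for every node
    reachable from `start` in at most `max_hops` edges (excluding `start`)."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if dist[node] == max_hops:
            continue  # hop limit reached: do not expand this node further
        for neighbour in adj.get(node, ()):
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    del dist[start]
    return dist


adj = build_adjacency(EDGES)
print(sorted(within_hops(adj, "acct_1", 2).items()))  # [('acct_2', 1), ('acct_3', 2)]
```

Note that `acct_5` and `acct_6` never appear in the results for `acct_1`: they form a separate connected cluster, which is exactly the kind of structure a fraud analyst would inspect on its own.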

Observability Is the New Kubernetes

So where will observability head in the next two to five years? Fong-Jones said the next step is to support developers in adding instrumentation to code, striking a balance between easy, out-of-the-box instrumentation and per-use-case annotations and customizations. Suereth said that over the next five years the OpenTelemetry project is heading toward being useful to app developers, for whom instrumentation can be particularly expensive. “Target devs to provide observability for operations instead of the opposite. That’s done through stability and protocols.” He said that observability today, as with Prometheus, is much more focused on operations than on developer languages. “I think we’re going to start to see applications providing observability as part of their own profile.” Suereth added that the OpenTelemetry open source project aims to offer an API that delivers traces, logs and metrics with a single pull, but how much data should be attached to it is still to be determined.
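The balance Fong-Jones describes, easy defaults plus per-use-case annotations, can be sketched in a few lines of Python. This is a hypothetical, stdlib-only illustration (the `instrumented` decorator and `RECORDED_SPANS` list are invented for this example and are not part of OpenTelemetry): wrapping a function records its name and timing automatically, while keyword arguments let a developer attach custom attributes.

```python
import functools
import time

# Hypothetical in-memory sink. A real setup would export these records to an
# observability backend; nothing here is the OpenTelemetry API itself.
RECORDED_SPANS = []


def instrumented(name, **attributes):
    """Decorator that records a span-like record for each call: a name, the
    elapsed time, per-use-case attributes, and the exception type (if any)."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            error = None
            try:
                return func(*args, **kwargs)
            except Exception as exc:
                error = type(exc).__name__
                raise
            finally:
                RECORDED_SPANS.append({
                    "name": name,
                    "duration_s": time.perf_counter() - start,
                    "attributes": dict(attributes),
                    "error": error,
                })
        return wrapper
    return decorator


# One line of "out of the box" instrumentation, plus a use-case-specific annotation.
@instrumented("checkout.order_total", service="shop")
def order_total(prices):
    return sum(prices)


print(order_total([3, 4, 5]))       # 12
print(RECORDED_SPANS[0]["name"])    # checkout.order_total
```

With the OpenTelemetry Python API, the equivalent would typically use a tracer from `trace.get_tracer(__name__)`, a `tracer.start_as_current_span(...)` context manager around the code, and `span.set_attribute(...)` for custom annotations, with an SDK and exporter configured separately.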

Data Exploration, Understanding, and Visualization

DevSecOps: A Complete Guide

Both DevOps and DevSecOps use some degree of automation for simple tasks, freeing up time for developers to focus on more important aspects of the software. The concept of continuous processes applies to both practices, ensuring that the main objectives of development, operations, and security are met at each stage. This prevents bottlenecks in the pipeline and allows teams and technologies to work in unison. By working together, development, operations, and security experts can write new applications and software updates in a timely fashion; monitor, log, and assess the codebase and security perimeter; and roll out new and improved code from a central repository. The main difference between DevOps and DevSecOps is clear: the latter adds a renewed focus on security that other methodologies and frameworks previously overlooked. In the past, teams emphasized how quickly a new application could be created and released, only for it to get stuck in a frustrating silo while cybersecurity experts reviewed the code and pointed out security vulnerabilities.

Skilling employees at scale: Changing the corporate learning paradigm

Corporate skilling programs have been founded on frameworks and models from the world of academia. Even as we have moved to digital learning platforms, the core tenets of these programs have tended to remain the same: a standard course with finite learning material, a uniformly structured progression through that material, and the exact same assessment tool to measure progress. This uniformity and standardization have been the only way for organizations to skill their employees at scale. As a result, organizations made a trade-off. Content-heavy learning solutions, which focus on knowledge dissemination but offer no way to measure their benefit and are limited to vanity metrics, have become the norm for training the workforce at large. One-on-one coaching programs that promise results, on the other hand, are available only to the top one or two percent of the workforce, usually high-performing or high-potential employees. This is because such programs have a clear, measurable, and direct impact on behavioral change and job performance.

The Ultimate SaaS Security Posture Management (SSPM) Checklist

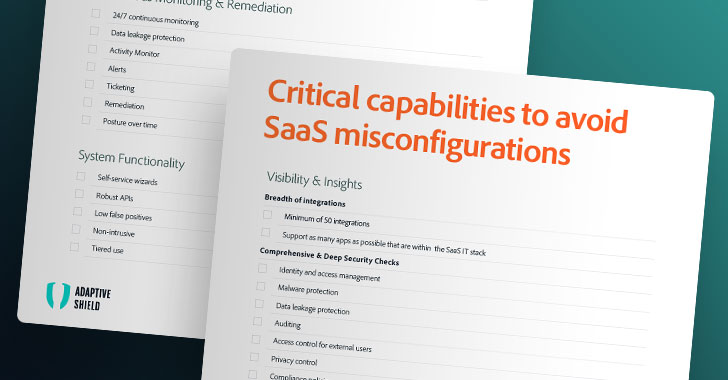

Governance across the whole SaaS estate is both nuanced and complicated. While the native security controls of SaaS apps are often robust, the responsibility falls on the organization to ensure that all configurations are properly set, from global settings to every user role and privilege. It takes only one unknowing SaaS admin changing a setting or sharing the wrong report for confidential company data to be exposed. The security team is burdened with knowing every app, user and configuration and ensuring they all comply with industry and company policy. Effective SSPM solutions address these pain points and provide full visibility into the company's SaaS security posture, checking for compliance with industry standards and company policy. Some solutions even offer the ability to remediate issues right from within the solution. As a result, an SSPM tool can significantly improve security-team efficiency and protect company data by automating the remediation of misconfigurations throughout the increasingly complex SaaS estate.
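The core of posture checking, comparing each app's configuration against a policy baseline, fits in a short Python sketch. Everything here is hypothetical: the setting names, thresholds, and apps are invented for illustration, and real SSPM tools pull configurations from each vendor's own APIs. The sketch only detects drift; remediation would mean writing the compliant value back through those APIs.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical policy baseline. The setting keys and thresholds are illustrative,
# not the configuration keys of any particular SaaS product.
# Each rule maps a setting key to (requirement text, predicate on the actual value).
POLICY: Dict[str, Tuple[str, Callable[[Any], bool]]] = {
    "mfa_required": ("must be enabled", lambda v: v is True),
    "public_link_sharing": ("must be disabled", lambda v: v is False),
    "session_timeout_minutes": ("must be at most 30",
                                lambda v: type(v) is int and v <= 30),
}


def audit(estate: Dict[str, Dict[str, Any]]) -> List[Tuple[str, str, Any, str]]:
    """Check every app's settings against POLICY and return one finding per
    violated rule as (app, setting, actual value, requirement).
    A missing setting is read as None and therefore fails its rule."""
    findings = []
    for app, settings in estate.items():
        for key, (requirement, is_compliant) in POLICY.items():
            value = settings.get(key)
            if not is_compliant(value):
                findings.append((app, key, value, requirement))
    return findings


# Hypothetical snapshot of two apps' settings.
SAAS_ESTATE = {
    "crm": {"mfa_required": True, "public_link_sharing": True,
            "session_timeout_minutes": 30},
    "file_share": {"mfa_required": False, "public_link_sharing": False,
                   "session_timeout_minutes": 120},
}

for app, key, value, requirement in audit(SAAS_ESTATE):
    print(f"{app}: {key}={value!r} ({requirement})")
```

Running it reports three findings: public link sharing enabled on `crm`, and both missing MFA and an overly long session timeout on `file_share`. Expressing rules as predicates keeps the audit loop unchanged as the policy grows.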

Why gamification is a great tool for employee engagement

Gamification is the beating heart of almost everything we touch in the digital world. With employees working remotely, it is the golden solution for employers. If applied in the right format, gamification can help create engagement in today's remote working environment, motivate personal growth, and encourage continuous improvement across an organization. ... In the connected workspace, gamification is essentially a method of providing simple goals and motivations that rely on digital rather than in-person engagement. At the same time, there is a tacit understanding between the game designer and the "player" that when these goals are aligned in a way that benefits the organization, the rewards often affect more than the bottom line. Engaged employees are a valuable part of achieving defined business goals, and studies show that disengagement affects the bottom line. Motivated employees are also more likely to want to make the customer experience as satisfying as possible, especially if there is internal recognition of a job well done.


10 Cloud Deficiencies You Should Know

What happens if your cloud environment goes down due to challenges outside your control? If your answer is “Eek, I don’t want to think about that!” you’re not prepared enough. Disaster preparedness plans can include running your workload across multiple availability zones or regions, or even in a multicloud environment. Make sure you have stakeholders (and backup stakeholders) assigned to any manual tasks, such as switching to backup instances or relaunching from a system restore point. Remember, don’t wait until you’re faced with a worst-case scenario to test your response. Set up drills and trial runs to make sure your ducks are quacking in a row. One thing you might not imagine the cloud being is … boring. Without cloud automation, there are a lot of manual and tedious tasks to complete, and if you have 100 VMs, they’ll require constant monitoring, configuration and management 100 times over. You’ll need to think about configuring VMs according to your business requirements, setting up virtual networks, adjusting for scale, and even managing availability and performance.
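The automation that removes this tedium usually takes the form of declaring a desired state once and letting a tool apply it to every machine. This Python sketch is hypothetical (the VM records, setting keys, and `reconcile` function are invented for illustration) and stands in for infrastructure-as-code or configuration-management tooling: one loop replaces 100 rounds of manual configuration and reports only what it changed.

```python
# Hypothetical desired state applied uniformly to every VM. The keys are
# illustrative; a real tool would read and write them through a cloud API.
DESIRED = {
    "monitoring_agent": "installed",
    "ssh_password_auth": "disabled",
    "backup": "enabled",
}


def reconcile(fleet, desired=DESIRED):
    """Bring each VM's config in line with `desired` and return the changes made
    as (vm_id, key, old value, new value). Running it again on an already
    compliant fleet makes no further changes (it is idempotent)."""
    changes = []
    for vm_id, config in fleet.items():
        for key, value in desired.items():
            if config.get(key) != value:
                changes.append((vm_id, key, config.get(key), value))
                config[key] = value  # stand-in for the real API call
    return changes


# 100 VMs, as in the example above, each only partially configured.
fleet = {f"vm-{i:03d}": {"monitoring_agent": "installed"} for i in range(100)}
fleet["vm-042"]["backup"] = "enabled"

first_run = reconcile(fleet)
second_run = reconcile(fleet)
print(len(first_run), len(second_run))  # 199 0
```

The first run makes 199 changes (two per VM, except `vm-042`, which already had backups enabled); the second makes none. That idempotence is what makes it safe to run the same automation on a schedule rather than hand-checking each machine.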

Quote for the day:

"Leaders begin with a different question than others. Replacing who can I blame with how am I responsible?" -- Orrin Woodward
