The Future of Healthcare Is in the Cloud

While the idea of making information accessible anywhere and at any time offers

obvious advantages, there are obstacles to overcome. Potential security risks

and concerns over compliance have long held back cloud adoption in healthcare. IT

staff need to ensure timely software updates, maintain network availability, and

institute a regular and robust backup routine. Healthcare organizations also

need to consider how data will be processed by a third party, examine with whom

their cloud partners are in business, and ensure security standards extend to

any cloud networks they use. Cloud providers with healthcare experience and an

understanding of the unique compliance landscape will be favored as the industry

rises to meet these challenges. Everyone should take comfort from the fact that

the most advanced healthcare organizations in the world have announced major

cloud initiatives after much deliberation and due diligence. Mayo Clinic’s

announcement of its partnership with Google is one such example. The dream of

global collaboration relies on cloud computing. Healthcare professionals in

different countries can now trade massive data sets easily. While collaboration

like this has typically been reserved for esoteric research projects, it’s now

being employed to tackle global health problems.

Q&A: Telehouse engineering lead discusses AI benefits for data centres

Developments in network management AI and cyber security are allowing us to

detect unusual activity outside of usual traffic patterns. In a typical office

environment, if a company device logs in at 3am and starts taking gigabytes of

data from the business, that will be flagged as atypical behaviour. AI can

analyse this breach quickly and respond by disabling that device’s network

access to stop the possible data loss. That data transfer could also take place

in the middle of the working day, but it might come from a device that would not

normally transfer that volume of data, such as a laptop solely used for

presentations. The AI already understands the typical behaviour patterns of that

device and will flag when there might be inflow or outflow of data that does not

fit its typical usage pattern. In a data centre it is no different. Every server

has its own typical operational pattern, and these can be monitored by the cyber

security systems, and any unusual activity can be flagged. It is possible to

take this further than simple network monitoring by interfacing with other

systems. One example is detecting whether server behaviour changed after someone

entered a secure server hall, which could indicate that a server has been

tampered with.
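
The per-device baseline idea described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the system the interviewee describes: the function name `is_atypical`, the z-score test, the threshold of 3.0, and the sample volumes are all hypothetical.

```python
from statistics import mean, stdev


def is_atypical(history_mb, observed_mb, threshold=3.0):
    """Return True when observed_mb deviates strongly from the device's own history.

    Assumption: a simple z-score against past transfer volumes stands in for
    whatever behavioural model a real monitoring product would use.
    """
    if len(history_mb) < 2:
        return False  # not enough history to form a baseline
    baseline = mean(history_mb)
    spread = stdev(history_mb)
    if spread == 0:
        # Perfectly constant history: any different value is unusual.
        return observed_mb != baseline
    return abs(observed_mb - baseline) / spread > threshold


# Hypothetical daily outbound volumes (MB) for a laptop used only for presentations.
laptop_history = [40, 55, 38, 60, 47, 52, 45]

print(is_atypical(laptop_history, 50))    # a normal day for this device
print(is_atypical(laptop_history, 8000))  # gigabytes leaving a light-use device
```

The same check applies to a server's traffic history. The baseline belongs to each device, so a volume that is routine for a backup server can still be flagged on a presentation laptop.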

The year ahead in DevOps and agile: bring on the automation, bring on the business involvement

The slower-than-desired pace of automation stems from "organizations

prohibiting developers from accessing production environments, probably

because developers made changes in production previously that caused

production problems," says Newcomer. "It's hard to change that kind of policy,

especially when incidents have occurred. Another reason is simple

institutional inertia - processes and procedures are difficult to change once

fully baked into daily practice, especially when it's someone's specific job

to perform these manual deployment steps." DevOps and agile progress needs to

be well-measured and documented. "People have different definitions of DevOps

and agile," says Lei Zhang, head of Bloomberg's Developer Experience group.

Zhang's team turned to the measurements established within Google's DevOps

Research and Assessment guidelines (lead time, deploy frequency, time to

restore, and change fail percentage) and focuses on the combination. "We think

the effort is cohesive, while the results have huge varieties. Common

contributors to such varieties include complex dependencies due to the nature

of the business, legacy but crucial software artifacts, compliance

requirements, and low-level infrastructure limitations."
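
As a rough illustration of how the four measurements named above could be computed together, here is a minimal sketch. The `Deployment` record, its field names, and the sample data are assumptions made for this example. They are not Bloomberg's tooling or an official DORA definition.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real pipelines expose this data differently.
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def hours(deltas):
    """Average a list of timedeltas and express the result in hours."""
    return sum(deltas, timedelta()).total_seconds() / 3600 / len(deltas)


def dora_summary(deployments, period_days):
    """Summarise lead time, deploy frequency, change fail %, and time to restore."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "lead_time_hours": hours([d.deployed_at - d.committed_at for d in deployments]),
        "deploys_per_day": len(deployments) / period_days,
        "change_fail_pct": 100 * len(failures) / len(deployments),
        "time_to_restore_hours": hours(restores) if restores else None,
    }


t0 = datetime(2021, 1, 4, 9, 0)
sample = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=8),
               failed=True, restored_at=t0 + timedelta(days=1, hours=10)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=6)),
]
summary = dora_summary(sample, period_days=7)
print(summary)
```

Reporting the four values in one summary reflects the point about focusing on the combination: a team could raise deploy frequency while its change fail percentage worsens, and a single number would hide that.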

Performance Tuning Techniques of Hive Big Data Table

Developers working on big data applications have a prevalent problem when

reading Hadoop file systems data or Hive table data. The data is written in

Hadoop clusters using Spark Streaming, NiFi streaming jobs, or any streaming

or ingestion application. A large number of small data files are written in

the Hadoop Cluster by the ingestion job. These files are also called part

files. These part files are written across different data nodes, and when the

number of files increases in the directory, reading becomes tedious and a

performance bottleneck for any other app or user that tries to read this data. One

of the reasons is that the data is distributed across nodes. Think about your

data residing in multiple distributed nodes. The more scattered it is, the longer

the read takes: the job needs around “N * (Number of files)” time to read the data,

where N is the number of nodes under each Name Node. For example, if there are 1 million

files, when we run the MapReduce job, the mapper has to run for 1 million

files across data nodes and this will lead to full cluster utilization leading

to performance issues. For beginners, the Hadoop cluster comes with several

Name Nodes, and each Name Node will have multiple Data Nodes.

Ingestion/Streaming jobs write data across multiple data nodes, which creates

performance challenges when reading that data.
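
To make the 1 million file example concrete, the sketch below estimates how many map tasks a read would launch. It assumes one input split per HDFS block and at least one split per file, which is how plain file-based input formats commonly behave, and a 128 MB block size. The function name and the block-sized comparison layout are hypothetical and are not taken from the article.

```python
import math

BLOCK_SIZE_MB = 128  # assumed HDFS block size


def estimated_map_tasks(file_sizes_mb, block_size_mb=BLOCK_SIZE_MB):
    """Estimate map tasks: one split per block, and at least one split per file."""
    return sum(max(1, math.ceil(size / block_size_mb)) for size in file_sizes_mb)


# One million 1 MB part files, as an ingestion job might leave behind.
small_files = [1] * 1_000_000

# The same volume laid out in block-sized files (hypothetical, for comparison).
full_blocks, remainder_mb = divmod(sum(small_files), BLOCK_SIZE_MB)
block_sized_files = [BLOCK_SIZE_MB] * full_blocks + ([remainder_mb] if remainder_mb else [])

print(estimated_map_tasks(small_files))        # one task per tiny file
print(estimated_map_tasks(block_sized_files))  # far fewer tasks for the same data
```

Under these assumptions the task count follows the number of files rather than the volume of data, which is why many small part files hurt read performance even when the total size is modest.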

AI Support Bots Still Need That Human Touch

At the core of providing effective support for critical issues is

personalized, expedited service. In contrast to the wholesale outsourcing of

frontline support to bots with little documentation, a hybrid approach that

combines best practices, best-of-breed technology and a trained staff of experts

offers the best option for delivering and maintaining

mission-critical networks. When a network administrator has an issue that is

beyond the automated self-healing functions, the first call should be readily

available and start with a dedicated support expert who knows exactly how

the network is configured, knows its history, and has all of the

pertinent incident data at their proverbial fingertips. Issues can then be

quickly resolved and in the event that something unexpected pops up, it can be

handled without having to start all over again. Today, networks are being

stressed like never before with remote work, IoT, cloud migration, and so

forth, spawning novel, unforeseen issues that cannot be handled by limited

AI-based tools. During these periods, accessing an engineer with intimate

knowledge of the system and configuration on-site will be the lifeline network

teams need to help diagnose and resolve these types of challenges. Is this

simply a luddite view of Artificial Intelligence?

Hidden Dangers of Microsoft 365's Power Automate and eDiscovery Tools

Power Automate and eDiscovery Compliance Search, application tools embedded in

Microsoft 365, have emerged as valuable targets for attackers. The Vectra

study revealed that 71% of the accounts monitored showed suspicious

activity using Power Automate, and 56% of accounts revealed similarly

suspicious behavior using the eDiscovery tool. A follow-up study revealed that

suspicious Azure Active Directory (AD) Operation and Power Automate Flow

Creation occurred in 73% and 69%, respectively, of monitored environments. ...

Microsoft Power Automate is the new PowerShell, designed to automate mundane,

day-to-day user tasks in both Microsoft 365 and Azure, and it is enabled by

default in all Microsoft 365 applications. This tool can reduce the time and

effort it takes to accomplish certain tasks — which is beneficial for users

and potential attackers. With more than 350 connectors to third-party

applications and services available, there are vast attack options for

cybercriminals who use Power Automate. The malicious use of Power Automate

recently came to the forefront when Microsoft announced it found advanced

threat actors in a large multinational organization that were using the tool

to automate the exfiltration of data. This incident went undetected for over

200 days.

The ‘It’ Factors in IT Transformation

Shadow IT has been the bane of many a CIO for as long as I can remember. But

how many organizations focus on complete business IT alignment where the

operating model supports proactively eliminating business operation

disruptions as opposed to meeting internal IT SLAs? The best way to generate

this elusive value from an IT revamp is to use existing concepts and add vital

new ones to get transformational results. And the outcome? A business that can

comfortably jump barriers and leapfrog competitors for whom IT is an

afterthought. So, let’s break this down a bit. What are the “it” factors that

separate a successful IT transformation from the ones with relegated outcomes?

For starters, in the former, IT leaders address every critical part of the

whole and the framework encourages C-level executives to take the plunge.

Enterprise executives sometimes get cornered by organizational dynamics into

playing it safe, into taking baby steps. Unfortunately, though, as former

British Prime Minister David Lloyd George so appropriately put it, “You can’t

cross a chasm in two small jumps.” Committing to a well-planned yet courageous

leap is critical for success from the very onset.

Organizations can no longer afford a reactive approach to risk management

“Business leaders must be vigilant in scanning for emerging issues and make

actionable plans to adjust their strategies and business models while being

authentic in fostering a trust-based, innovative culture and the

organizational resilience necessary to successfully navigate disruptive

change. Digitally mature companies with an agile workforce were ready when

COVID-19 hit and are the ones best positioned to continue to ride the wave of

rapid acceleration of digitally driven change through the pandemic and

beyond.” Consistent with the survey’s findings in previous years, data

security and cyber threats again rank in the top 10 risks for both 2021 and

2030. The continuously evolving nature of cyber and privacy risks underscores

the need for a secure operating environment in which nimble workforces can

regularly refresh the technology and skills in their arsenal to remain

competitive. “If there’s any risk that all organizations across industries and

geographies must maintain focus on, it’s cybersecurity and privacy,” said

Patrick Scott, executive VP, Industry Programs, Protiviti. “While the areas

that businesses will need to address may change as they transform their

business models and increase their resiliency to face the future confidently,

cybersecurity and privacy threats will remain a constant and should be at or

near the top of the list.”

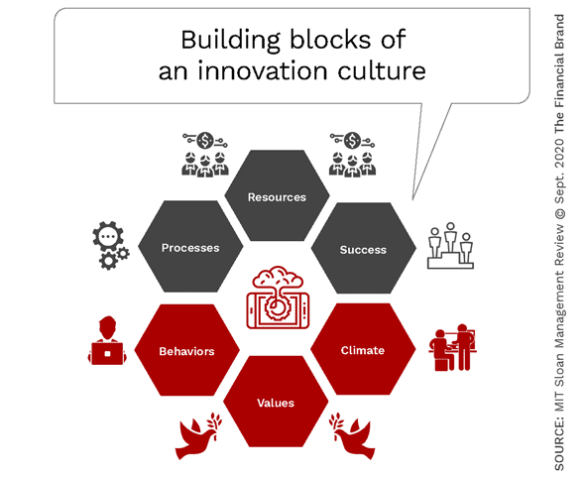

Digital Transformation Demands a Culture of Innovation

Research done over the past five years by the Digital Banking Report finds

that corporate culture is much more important than the size of the company,

level of investment, geographic location or even regulatory environment. The

question becomes: How can leaders build and reinforce an innovation culture

within their organization? According to research by Jay Rao and Joseph

Weintraub, professors at Babson College, published in the MIT Sloan

Management Review, an innovative culture rests on a foundation of six building

blocks. These include resources, processes, values, behavior, climate and

success. These building blocks are dynamically linked. The research by

the professors is aligned with insights found recently by the Digital Banking

Report, which show that increasing investment, changing processes and

measuring success are imperative … but not enough. Organizations must also

focus on the overarching company values, the actions of people within the

organization (behaviors), and the internal environment (climate). These are

much less tangible and harder to measure and manage, but just as important to

the success of innovation and the ability to create a sustained competitive

advantage.

How Enterprise AI Use Will Grow in 2021: Predictions from Our AI Experts

Hillary Ashton, the chief product officer at data analytics vendor Teradata,

said that AI will be helpful in 2021 for many companies as businesses look

toward reopening and recouping sufficient revenue streams as the COVID-19

pandemic slowly releases its grip on the world. “They’ll need to leverage

smart technologies to gather key insights in real-time that allow them to do

so,” said Ashton. “Adopting AI technologies can help guide companies to

understand if their strategies to keep customers and employees safe are

working, while continuing to foster growth. As companies recognize the unique

abilities of AI to help ease corporate policy management and compliance,

ensure safety and evolve customer experience, we'll see boosted rates of AI

adoption across industries.” That will also involve using AI to boost safety

and compliance measures inside offices, she said. “As companies look to

eventually return in some form to the office, we'll see investments in AI rise

across the board. AI-driven algorithms can scour meeting invites, email

traffic, business travel and GPS data from employer-issued computers and cell

phones to give businesses advance warnings of certain danger zones or to

quickly halt a potential outbreak at a location. ...”

Quote for the day:

"A casual stroll through the lunatic

asylum shows that faith does not prove anything." --

Friedrich Nietzsche
