5 Reasons Why You Should Code Daily As A Data Scientist

By coding on a daily basis, you will gain the ability to integrate Data Science

or other elementary concepts into your programming of developmental projects.

The best part about practicing coding each day is that it allows you, the coder, to

subconsciously develop an innovative skill where you can solve computational

tasks in the form of code. By gaining more information and learning more

details, you can use code blocks more effectively and efficiently to solve

complex programming architectures. You can even find solutions to Data Science

tasks that you might have otherwise struggled with because you are in touch with

the subject. And it also helps you gather discrete and more innovative ideas for

implementation faster. This point is quite self-explanatory. Coding daily will

sharpen your deduction abilities and turn you into a better programmer. By

following sound coding principles, you can take your skills to the

next level and become a much stronger programmer. For

example, let us take into consideration the Python programming language, which

is most commonly used for Data Science. By developing your coding skills in

Python, you are also advancing your skill level of Data Science to code complex

Data Science projects.

Privacy and Security Are No Longer One-Size-Fits-All

Historically, cross-divisional relationships between

InfoSec, IT, Legal, and business or product teams have been challenging, in the sense that the internal

conversations and ongoing education required for these teams to sync on

protocols and security measures can be extensive. Moreover, policy enforcement

between these teams without proper technical safeguards can result in the

accidental (or, in rare instances, malicious) leakage of data that would put

an individual’s or business’s reputation at risk. By taking a more contextual,

technically enforced approach to data governance, enterprises can eliminate the

all-too-common risks associated with human-led processes and, in turn, improve

collaboration and enforcement between these internal teams. When the right

technical controls are in place based on the data context, using

privacy-enhancing techniques or in partnership with a reliable technology

partner, enterprises no longer have to rely on the manual human processes that

may lead to sensitive data leakage or re-identification risk. Only this

alignment between internal teams will allow an enterprise’s overall data

strategy to flourish. Many of the world’s most successful companies, such as

Amazon or Unilever, have gained sizable market share through data collaboration,

starting within their own enterprise.

Providing Customer-Driven Value With A TOGAF® Based Enterprise Architecture

The concept of value needs to be mastered and used by enterprise architects in

a customer-driven enterprise, as shown in Figure 1 above. An organization

usually provides several value propositions to its different customer segments

(or personas) and partners that are delivered by value streams made of several

value stages. Value stages have internal stakeholders, external stakeholders,

and often the customer as participants. Value stages enable customer journey

steps, are enabled by capabilities and operationalized by processes (level 2

or 3 usually). The TOGAF® Business Architecture: Value Stream Guide video

provides a very clear and simple explanation, should you want to know more.

Customer journeys are not, strictly speaking, part of business architecture, but

they can still be very useful for interfacing with business stakeholders. These value

streams/stages cannot be realized out of thin air. An organization must have

the ability to achieve a specific purpose, which is to provide value to the

triggering stakeholder, in this case the customer. This ability is an

enabling business capability. Without this capability, the organization cannot

provide value to triggering stakeholders (customers). A capability enables a

value stage and is operationalized by a business process.

A Brief History of Metadata

A primary goal of metadata is to assist researchers in finding relevant

information and discovering resources. Keywords used in the descriptions are

called “meta tags.” Metadata is also used in organizing electronic

resources, providing digital identification, and supporting the preservation

and archiving of data. Metadata assists researchers in discovering

resources by locating relevant criteria and providing location information.

In terms of digital marketing, metadata can be used to organize and display

content, maximizing marketing efforts. Metadata increases brand visibility

and improves “findability.” Different metadata standards are used for

different disciplines (such as digital audio files, websites, or museum

collections). A web page, for example, may contain metadata describing the

software language, the tools used to create it, and the location of more

information on the subject. ... Metadata found online and in digital

marketing is a crucial tool for modern marketing. Metadata can help people

find a website. It makes web content more searchable, and when used

efficiently, metadata can increase the number of visits.
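
As a rough illustration of the meta tags described above, the sketch below reads the metadata out of a small web page using Python's standard `html.parser` module. The sample page, its tag values, and the `MetaTagParser` class name are all made up for this example; real pages vary widely in which tags they include.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collects <meta name="..." content="..."> pairs from an HTML page."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        name = attr_map.get("name")
        content = attr_map.get("content")
        # Skip meta tags without a name/content pair (e.g. charset declarations).
        if name and content is not None:
            self.meta[name.lower()] = content


# Hypothetical page used only for illustration.
SAMPLE_PAGE = """
<html><head>
  <title>A Brief History of Metadata</title>
  <meta name="description" content="How metadata helps people find resources.">
  <meta name="keywords" content="metadata, findability, archiving">
  <meta name="generator" content="ExampleSiteBuilder 1.0">
</head><body></body></html>
"""

parser = MetaTagParser()
parser.feed(SAMPLE_PAGE)
page_meta = parser.meta

for name, content in page_meta.items():
    print(f"{name}: {content}")
```

Here the `description` and `keywords` entries are the kind of metadata that supports findability, while `generator` records the tool used to create the page.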

Center for Applied Data Ethics suggests treating AI like a bureaucracy

People privileged enough to be considered the default by data scientists and

who are not directly impacted by algorithmic bias and other harms may see

the underrepresentation of race or gender as inconsequential. Data Feminism

authors Catherine D’Ignazio and Lauren Klein describe this as “privilege

hazard.” As Alkhatib put it, “other people have to recognize that race,

gender, their experience of disability, or other dimensions of their lives

inextricably affect how they experience the world.” He also cautions against

uncritically accepting AI’s promise of a better world. “AIs cause so much

harm because they exhort us to live in their utopia,” the paper reads.

“Framing AI as creating and imposing its own utopia against which people are

judged is deliberately suggestive. The intention is to square us as

designers and participants in systems against the reality that the world

that computer scientists have captured in data is one that surveils,

scrutinizes, and excludes the very groups that it most badly misreads. It

squares us against the fact that the people we subject these systems to

repeatedly endure abuse, harassment, and real violence precisely because

they fall outside the paradigmatic model that the state — and now the

algorithm — has constructed to describe the world.”

Can IoT accelerate a return to offices?

Thanks to recent developments, both the Internet of Things (IoT) and the

Industrial Internet of Things (IIoT) are making Internet-enabled devices an

increasingly common feature in business. This trend was already on the

upswing prior to the global lockdown, with 46% of IT, telecommunications,

and business managers saying their organizations had already invested in IoT

applications and/or services, while another 30% were readying to invest in

the next 12-24 months. But with millions of workers now working and

communicating virtually from remote locations, the role of IoT in helping to

realize the vision of the smart enterprise has drawn even greater interest.

For one thing, IoT has gained traction as an enabler of productive remote

work and unhindered team collaboration across industries, said Igor Efremov,

the head of HR at Itransition, a Denver, US-based software development

company. Sectors that formerly could not continuously function without human

labor, such as manufacturing, can now rely on IIoT infrastructure including

sensors, cameras, and endpoints to allow technicians to monitor and maintain

asset performance in real time, without actually being physically

present.

Is there a human consequence to WFH?

There is no such thing as a perfect work/life balance, of course. And while

some employers understand the stress faced by their workers, not all do:

Switched-on employers who care about their staff provide employee choice

schemes, target-related bonuses, personal support, subsidized Wi-Fi, and a

judgement-free attitude toward sick days. Those who don’t, insist workers

stay on camera all day and refuse to accept excuses for absence on the basis

of child care or any other need, while indulging in fire and re-hire

policies. To a great extent, corporate responsibility around employee care

in this environment has effectively been outsourced to employees themselves,

even as productivity (and working hours) increase. And while Apple’s devices

and third-party apps can help remote workers manage time more effectively,

the need for all parties to develop new ways of working that don’t impact

personal space remains challenging. This isn’t a platform-specific matter,

of course: Windows or Mac, Android or iPhone, iPad or some other tablet,

enterprise workers of every stripe face complex challenges as they juggle

work and personal responsibilities.

You Should Master Data Analytics First Before Becoming a Data Scientist

As a Data Scientist, you will have to perform feature engineering, where you

will isolate key features that contribute to the prediction of your model.

In school or wherever you learned Data Science, you may have a perfect

dataset that is already made for you, but in the real world, you will have

to use SQL to query your database to start finding the necessary data. In

addition to the columns that you already have in your tables, you will have

to make new ones — usually, these are new features that can be aggregated

metrics like clicks per user, for example. As a Data Analyst, you will

practice SQL the most. As a Data Scientist, it can be frustrating if all

you know is Python or R: you cannot rely on Pandas all the time, and

as a result, you cannot even start the model-building process without

knowing how to efficiently query your database. Similarly, the focus on

analytics can allow you to practice creating subqueries and metrics like the

one stated above, so that you can add anywhere from a few to, say, 100 new

features created entirely by you, which could be more important

than the base data that you have now. ... A Data Analyst usually will master

visualizations because they have to present findings in a way that is easily

digestible for others in the company.
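
To make the "clicks per user" idea above concrete, here is a minimal sketch of building aggregated features with SQL. It uses Python's built-in `sqlite3` module and an in-memory database; the `clicks` table, its columns, and the sample rows are assumptions invented for this example, not part of any real schema.

```python
import sqlite3

# Hypothetical click log: one row per click event.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE clicks (user_id INTEGER, page TEXT)")
conn.executemany(
    "INSERT INTO clicks (user_id, page) VALUES (?, ?)",
    [
        (1, "home"),
        (1, "pricing"),
        (2, "home"),
        (1, "docs"),
        (3, "home"),
        (3, "home"),
    ],
)

# Aggregate the raw events into one feature row per user.
query = """
    SELECT user_id,
           COUNT(*)             AS clicks_per_user,
           COUNT(DISTINCT page) AS distinct_pages
    FROM clicks
    GROUP BY user_id
    ORDER BY user_id
"""
features = conn.execute(query).fetchall()
conn.close()

for user_id, clicks_per_user, distinct_pages in features:
    print(user_id, clicks_per_user, distinct_pages)
```

Each resulting row is a new per-user feature set that did not exist as a column in the base table, which is the kind of derived metric the paragraph above describes.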

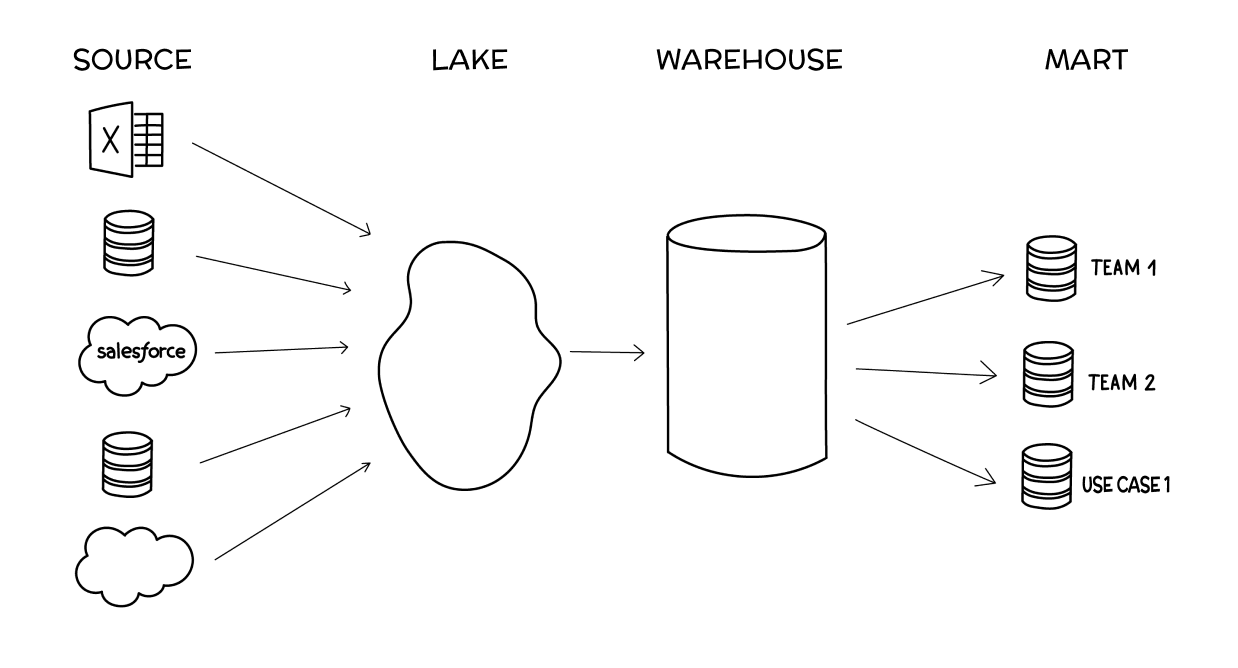

Introduction - The 4 Stages of Data Sophistication

Companies today are quite good at collecting data - but still very poor at

organizing and learning from it. Setting up a proper Data Governance

organization, workflow, tools, and an effective data stack are essential

tasks if a business wants to gain from its information. This book is for

organizations of all sizes that want to build the right data stack for them,

one that is both practical and enables them to be as informed as possible.

It is a continually improving, community-driven book teaching modern data

governance techniques for companies at different levels of data

sophistication. In it we will progress from the starting setup of a new

startup to a mature data-driven enterprise, covering architectures, tools,

team organizations, common pitfalls and best practices as data needs expand.

The structure and original chapters of this book were written by the

leadership and Data Advisor teams at Chartio, sharing our experiences

working with hundreds of companies over the past decade. Here we’ve compiled

our learnings and open sourced them in a free, open book.

Data Residency Compliance with Distributed Cloud Approach

Rather than providing a centralized solution for everything, a distributed

cloud can meet each specific customer and country requirement. It also gives

enterprises the ability to properly use their original investments in

existing central clouds while executing a unified cloud strategy for

location-based data needs. This is especially important when customers are

looking to utilize SaaS solutions that depend on central clouds since they

often can’t easily localize data independently. Hybrid clouds were

originally intended to enable a unified strategy. Yet, enterprises continue

to struggle to get the level of value they initially expected out of their

private cloud deployments, especially in compliance-centric use cases where

ongoing research and expertise are needed. However, enterprises can now

consider a distributed cloud-based offering such as ‘Data

Residency-as-a-Service’ to meet global compliance standards. As businesses

look to expand into new countries that require data sets to be localized in

different regions, it’s essential to stay ahead of these challenges or they

risk losing business in the region altogether.

Quote for the day:

"Brilliant strategy is the best

route to desirable ends with available means." -- Max McKeown
