Wi-Fi 6 will slowly gather steam in 2020

Making sure devices are compliant with modern Wi-Fi standards will be crucial in the future, though it shouldn’t be a serious issue that requires a lot of device replacement outside of fields that are using some of the aforementioned specialized endpoints, like medicine. Healthcare, heavy industry and the utility sector all have much longer-than-average expected device lifespans, which means that some may still be on 802.11ac. That’s bad, both in terms of security and throughput, but according to Shrihari Pandit, CEO of Stealth Communications, a fiber ISP based in New York, 802.11ax access points could still prove an advantage in those settings thanks to the technology that underpins them. “Wi-Fi 6 devices have eight radios inside them,” he said. “MIMO and beamforming will still mean a performance upgrade, since they’ll handle multiple connections more smoothly.” A critical point is that some connected devices on even older 802.11 versions – n, g, and even b in some cases – won’t be able to connect to 802.11ax radios, so they won’t be able to benefit from the numerous technological upsides of the new standard. Making sure that a given network is completely cross-compatible will be a central issue for IT staff looking to upgrade access points that service legacy gear.

Cloud storage solutions gaining momentum through disruption by traditional vendors

This disruption, where traditional on-prem vendors have brought their offerings out into the public cloud, has led to the emergence of more innovative cloud storage solutions. “This has given customers flexibility in how they approach storage in a cloud environment, with more enterprise-style services being offered,” continued Beale. On-prem vendors want to have storage apps in the cloud. In part, this is a marketing positioning exercise where they want to be seen as new and innovative vendors by working in the cloud. But there’s a second, more technical and practical reason for this changing cloud storage landscape. “Around 80% of the organisations that we speak to have some sort of cloud presence. That means they’re using cloud-based technologies and at some point, multiple cloud providers, along with an on-prem solution,” explained Beale. Having a ubiquitous big data plane across an organisation is appealing to customers, because they don’t have to spend a lot of time, money or resources on disparate platforms across multiple vendors.

Why flexible work and the right technology may just close the talent gap

Increasingly what we see is that freelancers become full-time freelancers, meaning it’s their primary source of income. Usually, as a result of that, they tend to move. And when they move it is out of big cities like San Francisco and New York. They tend to move to smaller cities where the cost of living is more affordable. And so that’s true for the freelance workforce, if you will, and that’s pulling the rest of the workforce with it. What we see increasingly is that companies are struggling to find talent in the top cities where the jobs have been created. Because they already use freelancers anyway, they are also allowing their full-time employees to relocate to other parts of the country, as well as hiring people away from their headquarters, people who essentially work from home as full-time employees, remotely. ... And along the way, companies realized two things. Number one, they needed different skills than they had internally. So the idea of the contingent worker or freelance worker who has that specific expertise becomes increasingly vital.

Life on the edge: A new world for data

For many CIOs, a strategy for edge computing will be entirely new. Sunil urges CIOs to assess what parts of edge computing can be achieved in-house and what should be done through a consulting firm. “A system integrator will play a big role in bringing it all together,” he says. Chris Lloyd-Jones, emerging technology, product and engineering lead at Avanade, says large enterprises are starting to build IoT platforms to centrally manage edge computing devices and provide connectivity across geographic regions. “Edge computing is no longer just about an on-board computer where data from the device is uploaded via a USB cable,” he says. “Edge computing now handles 4G and 5G connectivity with periodic connectivity, and support for full-scale machine learning and computationally intensive workloads. Data can be transmitted to and from the cloud. This provides centralised management.” Lloyd-Jones says the cloud can be used to train machine learning models, which can then be deployed to edge devices and managed like any other IT equipment.

Microsoft: RDP brute-force attacks last 2-3 days on average

Usually, these attacks use combinations of usernames and passwords that have been leaked online after breaches at various online services, or are simplistic in nature, and easy to guess. Microsoft says that the RDP brute-force attacks it recently observed last 2-3 days on average, with about 90% of cases lasting for one week or less, and less than 5% lasting for two weeks or more. The attacks lasted days rather than hours because attackers were trying to avoid getting their attack IPs banned by firewalls. Rather than try hundreds or thousands of login combos at a time, they were trying only a few combinations per hour, prolonging the attack across days, at a much slower pace than RDP brute-force attacks have been observed before. "Out of the hundreds of machines with RDP brute force attacks detected in our analysis, we found that about .08% were compromised," Microsoft said. "Furthermore, across all enterprises analyzed over several months, on average about 1 machine was detected with high probability of being compromised resulting from an RDP brute force attack every 3-4 days," the Microsoft research team added.
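The slow pace described above is what lets these attacks slip past short-window rate limits. As a rough sketch of that reasoning (not Microsoft's detection method), the Python below counts failed logins per source IP over a multi-day window instead of per hour. The function names, the `(timestamp, source_ip, success)` event shape, the 3-day window, the threshold of 100 and the sample IP addresses are all illustrative assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def max_failures_in_window(stamps, window):
    """Largest number of timestamps that fall inside any span shorter than `window`."""
    stamps = sorted(stamps)
    best = 0
    first = 0  # index of the oldest timestamp still inside the window
    for last, ts in enumerate(stamps):
        while ts - stamps[first] >= window:
            first += 1
        best = max(best, last - first + 1)
    return best


def flag_low_and_slow(events, window=timedelta(days=3), threshold=100):
    """Return source IPs whose failed logins reach `threshold` within `window`.

    `events` is an iterable of (timestamp, source_ip, success) tuples.
    The window and threshold are assumed values, chosen only for illustration.
    """
    failures = defaultdict(list)
    for ts, ip, success in events:
        if not success:
            failures[ip].append(ts)
    return {
        ip
        for ip, stamps in failures.items()
        if max_failures_in_window(stamps, window) >= threshold
    }


# Hypothetical sample data: one source trying 3 logins per hour for 48 hours,
# and one source with a handful of ordinary mistyped passwords.
t0 = datetime(2020, 1, 1)
slow = [(t0 + timedelta(minutes=20 * i), "203.0.113.7", False) for i in range(144)]
noise = [(t0 + timedelta(hours=i), "198.51.100.9", False) for i in range(5)]
events = slow + noise

suspects = flag_low_and_slow(events)
hourly_peak = max_failures_in_window([ts for ts, _, _ in slow], timedelta(hours=1))
```

In this sample the slow source never exceeds three failures in any one hour, so an hourly threshold of, say, ten would not trigger, while the multi-day count does flag it.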

AI, privacy and APIs will mold digital health in 2020

Interoperability is a major player in health tech innovation: patients will always receive care across multiple venues, and secure data exchange is key to providing continuity of care. Standardized APIs can provide the technological foundations for data sharing, extending the functionality of EHRs and other technologies that support connected care. Platforms like Validic Inform leverage APIs to share patient-generated data from personal health devices to providers, while giving them the ability to configure data streams to identify actionable data and automate triggers. In the upcoming year, look for major players like Apple and Google to make strides toward interoperability and breaking down data silos. Apple’s Health app already is capable of populating with information from other apps on your phone. Add your calorie intake to a weight loss app? Time your miles with a running app? Monitor your bedtime habits with a sleep tracking app? You’ll find that info aggregating in your Health app. Apple is uniquely positioned to be the driver of interoperability, and Google is not far behind.

Capitalizing on the promise of artificial intelligence

Remarkably, a majority of early adopters within each country believe that AI will substantially transform their business within the next three years. However, as pointed out in “Is the window for AI competitive advantage closing for early adopters?”, part of Deloitte’s Thinking Fast series of quick insights, the early adopters also believe that the transformation of their industry is following close on the heels of their own AI-powered business transformation. Globally, there’s a sense of urgency among adopters that now is the time to capitalize on AI, before the window for competitive advantage closes. However, comparing AI adopters across countries reveals notable differences in AI maturity levels and urgency. While many nations regard AI as crucial to their future competitiveness, these comparisons indicate that some countries are adopting AI aggressively, while others are proceeding with considerable caution and may be at risk of being left behind. Consider Canada.

Building the ‘Intelligent Bank’ of the Future

Of high awareness within the banking industry, but not yet understood by consumers, is the evolving nature of open banking, which has been proceeding in stages in Europe and elsewhere, but not yet in the U.S. From the consumer perspective, people want easier ways to manage their money and make their daily life easier. Many financial institutions, on the other hand, are somewhat overwhelmed by the prospects of delivering on the open banking promise. The paradox exists between the desire to deliver more integrated solutions while being transparent around the sharing of data between multiple organizations. Most of the concerns around open banking revolve around the collection and sharing of data with third parties and the inherent risks around such sharing. There is also the need to educate both the consumer and the employee on data security. The end result is less-than-clear regulations around open banking, and very few organizations actually being prepared to deliver on what has been promised to consumers. That said, it is interesting that more than four in ten financial institutions (41%) are looking beyond just offering banking products in the future.

Backdoors and Breaches incident response card game makes tabletop exercises fun

Unlike some tabletop exercises that can take months to prepare and last for days, Backdoors and Breaches makes it simple to role-play thousands of possible security incidents, and to do so even as a weekly exercise. The game can be played just by blue teamers but could also involve a member of the legal team, management, or a member of the public relations team. The ideal game involves no more than six players to ensure that everyone is engaged and participating. "This game can be played every Thursday at lunch," Blanchard tells CSO. If the upside of the B&B card deck is the ability to instantly create thousands of scenarios from generic attack methods, the downside is that it lacks cards for specific industries, or company-specific issues. Black Hills plans for expansion decks in 2020, including one for industrial control system (ICS) security and another for web application security. The B&B deck launched at DerbyCon 2019, and Blanchard says they plan to give away free decks at every infosec conference they attend in 2020. The decks are also available on Amazon for $10 plus shipping, which, he says, just covers their costs.

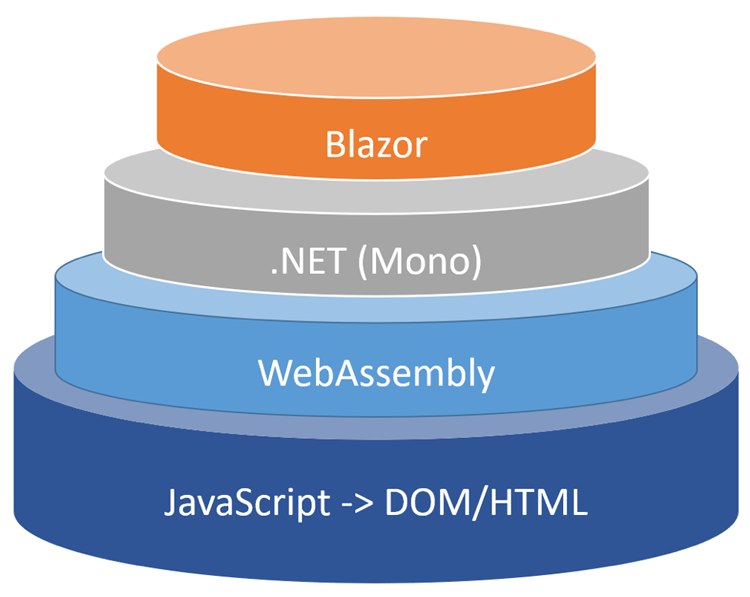

An Introduction to Blazor and Web Assembly

Blazor is a new framework that lets you build interactive web UIs using C# instead of JavaScript. It is an excellent option for .NET developers looking to take their skills into web development without learning an entirely new language. Currently, there are two ways to work with Blazor: running on an ASP.NET Core server with a thin-client, or completely on the client’s web browser using WebAssembly instead of JavaScript. ... UI component libraries were created long before Blazor. Most existing frameworks that target web applications are based on JavaScript. They are still compatible with Blazor due to its ability to interoperate with JavaScript. Components that are primarily based on JavaScript are called wrapped JavaScript controls, as opposed to components written entirely in Blazor, which are referred to as native Blazor controls. Native Blazor controls parse the Razor syntax to generate a render tree that represents the UI and behavior of that control. The render tree is why it’s possible to run server-side Blazor. The tree is parsed and used to generate HTML on the server that’s sent to the client for rendering. In the case of Blazor WebAssembly, the render tree is parsed and rendered entirely in the client.
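To make the render tree idea concrete, here is a language-neutral sketch in Python. It is a deliberate simplification and does not reflect Blazor's actual data structures or API: the `Node` class, the `render` function and the `counter` component are hypothetical names invented for this illustration. It only shows the general pattern the excerpt describes, where a component produces a tree describing its UI and a separate step turns that tree into HTML.

```python
from dataclasses import dataclass, field
from html import escape


@dataclass
class Node:
    """One element in a simplified render tree (hypothetical, not Blazor's type)."""
    tag: str
    attrs: dict = field(default_factory=dict)
    children: list = field(default_factory=list)  # items are Node or str


def render(node):
    """Walk the tree depth-first and produce an HTML string."""
    if isinstance(node, str):
        return escape(node)  # text nodes are HTML-escaped
    attrs = "".join(
        f' {name}="{escape(str(value), quote=True)}"'
        for name, value in node.attrs.items()
    )
    inner = "".join(render(child) for child in node.children)
    return f"<{node.tag}{attrs}>{inner}</{node.tag}>"


def counter(count):
    """A toy 'component': builds a tree from its current state."""
    return Node("div", {"class": "counter"}, [
        Node("p", {}, [f"Current count: {count}"]),
        Node("button", {}, ["Click me"]),
    ])


html_output = render(counter(3))
```

Because the component only describes a tree, the rendering step can in principle run wherever that tree is available, which is the property the excerpt credits for making both the server-side and the WebAssembly hosting models possible.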

Quote for the day:

"No great manager or leader ever fell from heaven; it's learned, not inherited." -- Tom Northup
