Breaking Barriers: The Power of Cross-Departmental Collaboration in Modern Business

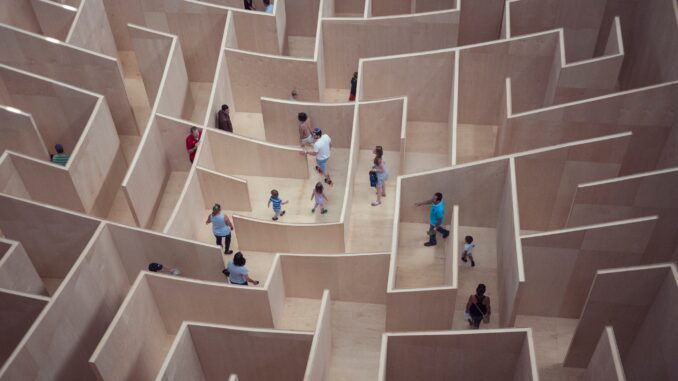

In an era of rapid change and increasing complexity, cross-departmental collaboration is no longer a luxury but a necessity. By dismantling silos, fostering trust, and leveraging technology, organizations can unlock their full potential, drive innovation, and enhance customer satisfaction. Industry leaders have shown the way, but building a truly collaborative culture requires sustained effort and adaptation. Creating such a culture is like building a bridge between departments: it requires strong foundations, continuous maintenance, and a shared vision. To begin, organizations must make collaboration a core value, invest in leadership development, empower employees, leverage technology, and measure progress. By doing so, they can create a culture where innovation thrives, employees are engaged, and customers benefit from better products and services. Looking ahead, successful organizations will not only embrace collaboration but also anticipate how it will evolve in response to trends such as remote work, artificial intelligence, and data privacy. By addressing these challenges and opportunities proactively, businesses can position themselves as leaders in the collaborative economy.

Singapore releases guidelines for securing AI systems and prohibiting deepfakes in elections

"AI systems can be vulnerable to adversarial attacks, where malicious actors intentionally manipulate or deceive the AI system," said Singapore's Cyber Security Agency (CSA). "The adoption of AI can also exacerbate existing cybersecurity risks to enterprise systems, [which] can lead to risks such as data breaches or result in harmful, or otherwise undesired model outcomes." "As such, AI should be secure by design and secure by default, as with all software systems," the government agency said. ... "The Bill is scoped to address the most harmful types of content in the context of elections, which is content that misleads or deceives the public about a candidate, through a false representation of his speech or actions, that is realistic enough to be reasonably believed by some members of the public," Teo said. "The condition of being realistic will be objectively assessed. There is no one-size-fits-all set of criteria, but some general points can be made." These encompass content that "closely match[es]" the candidates' known features, expressions, and mannerisms, she explained. The content also may use actual persons, events, and places, so it appears more believable, she added.

2025 and Beyond: CIOs' Guide to Stay Ahead of Challenges

As enterprises move beyond the "experiment" or "proof of concept" stage, it is time to design and formalize a well-thought-out AI strategy tailored to their unique business needs. According to Gartner, while 92% of CIOs anticipate AI will be integrated into their organizations by 2025 (driven largely by increasing pressure from CEOs and boards), 49% of leaders admit their organizations struggle to assess and showcase AI's value. That's where strategy comes in. ... Forward-looking CIOs are focused on using data for decision-making while tackling challenges related to its quality and availability. Data governance is a crucial part of this, and as data systems become more interconnected, managing their complexity becomes essential. Going forward, CIOs will have to focus on optimizing current systems, building a high level of data literacy, managing complexity, and establishing strong governance. Shifting IT from a cost center to a profit driver depends on focusing on data-driven revenue generation, said Eric Johnson ... CIOs should be able to communicate the strategic use of IT investment and present it as a core enabler of competitiveness.

5 Ways to Reduce SaaS Security Risks

It's important to understand which corporate assets are visible to attackers externally and could therefore be a target. Arguably, the SaaS attack surface extends to every SaaS, IaaS and PaaS application, account, user credential, OAuth grant, API, and SaaS supplier used in your organization, managed or unmanaged. Monitoring this attack surface can feel like a Sisyphean task, given that any user with a credit card, or even just a corporate email address, can expand the organization's attack surface in a few clicks. ... Single sign-on (SSO) provides a centralized place to manage employees' access to enterprise SaaS applications, which makes it an integral part of any modern SaaS identity and access governance program. Most organizations strive to ensure that all business-critical applications (i.e., those that handle customer data, financial data, source code, etc.) are enrolled in SSO. However, when new SaaS applications are introduced outside of IT governance processes, it becomes difficult to assess SSO coverage accurately. ... Multi-factor authentication (MFA) adds an extra layer of security to protect user accounts from unauthorized access. By requiring more than one factor for verification, such as a password and a unique code sent to a mobile device, MFA significantly reduces the chances of attackers gaining access to sensitive information.
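One common way to produce such one-time codes is the time-based one-time password (TOTP) scheme of RFC 6238, which authenticator apps compute locally on the phone rather than receiving over SMS. The following is a minimal sketch built only on Python's standard library (`hmac`, `hashlib`, `struct`); the function names, the 30-second step, and the one-step drift window are illustrative assumptions rather than any vendor's implementation, and a production system would also need rate limiting, replay protection, and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 of an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte select a 4-byte window
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF  # clear the top bit
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, timestamp: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of `step`-second intervals since the Unix epoch."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(secret, int(timestamp // step), digits)


def verify(secret: bytes, submitted: str, timestamp: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or up to `window` steps either side (clock drift)."""
    if timestamp is None:
        timestamp = time.time()
    for drift in range(-window, window + 1):
        expected = totp(secret, timestamp + drift * step, step, len(submitted))
        # Constant-time comparison avoids leaking how many leading digits matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

With the RFC 6238 SHA-1 test secret `b"12345678901234567890"` at Unix time 59, `totp(secret, timestamp=59, digits=8)` returns `"94287082"`, matching the published test vector.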

World’s smallest quantum computer unveiled, solves problems with just 1 photon

In the new study, the researchers implemented Shor’s algorithm using a single photon by encoding and manipulating 32 time-bin modes within its wave packet. This achievement highlights the strong information-processing capabilities of a single photon in high dimensions. According to the team, with commercially available electro-optic modulators capable of 40 GHz bandwidth, it is feasible to encode over 5,000 time-bin modes on long single photons. While managing high-dimensional states can be more challenging than working with qubits, this work demonstrates that these time-bin states can be prepared and manipulated efficiently using a compact programmable fiber loop. Additionally, high-dimensional quantum gates can enhance manipulation, and multiple photons can be used for scalability. Reducing the number of single-photon sources and detectors can also improve the efficiency of counting coincidences relative to accidental counts. Research indicates that high-dimensional states are more resistant to noise in quantum channels, making time-bin-encoded states of long single photons promising for future high-dimensional quantum computing.
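Because an n-qubit register spans a state space of dimension 2^n, the mode counts above can be translated into qubit-equivalents with a base-2 logarithm. This back-of-the-envelope comparison is only an illustration, not a figure reported by the researchers, and it counts state-space dimension alone; it says nothing about gate fidelity, noise, or how easily each encoding can be manipulated.

```latex
\begin{aligned}
\dim \mathcal{H}_{n\text{ qubits}} &= 2^{n} \;\Longrightarrow\; n_{\text{equiv}} = \log_2 d \\
d = 32 = 2^{5} &\;\Longrightarrow\; n_{\text{equiv}} = 5 \\
d = 5000 &\;\Longrightarrow\; n_{\text{equiv}} = \log_2 5000 \approx 12.3
\quad \left(2^{12} = 4096 < 5000 < 8192 = 2^{13}\right)
\end{aligned}
```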

Google creates the Mother of all Computers: One trillion operations per second and a mystery

The capability of exascale computing to handle massive amounts of data and run complex simulations has opened new avenues for scientific modeling. From simulating black holes and the birth of galaxies to developing new treatments and diagnoses through personalized genome mapping around the world, this technology has the potential to open new frontiers of knowledge. Computations that would take current supercomputers years will become feasible on exascale machines, paving the way to previously uncharted areas of knowledge. In astrophysics, for instance, exascale computing holds the prospect of modeling phenomena such as star and galaxy formation with higher accuracy. These simulations could yield new insights into the fundamental laws of physics and help answer questions about the universe’s formation. In fields like particle physics, researchers could analyze data from high-energy experiments far more efficiently and perhaps discover more about the nature of matter in the universe. AI is another area that stands to gain a major performance boost from exascale computing: present AI models are very efficient, but current computing hardware constrains them.
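For a sense of scale, exascale means on the order of 10^18 operations per second, a million times the "one trillion" (10^12) in the headline above. The sketch below converts a hypothetical workload of 10^24 operations into idealized run times at each scale; the workload size and the assumption that a machine sustains its rate with no communication or I/O overhead are illustrative simplifications, so real run times would be longer.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY  # Julian year

# Sustained operations per second at each scale (illustrative round numbers).
SCALES = {
    "terascale (one trillion)": 1e12,
    "petascale": 1e15,
    "exascale": 1e18,
}


def runtime_seconds(total_ops: float, ops_per_second: float) -> float:
    """Idealized wall-clock time, assuming the rate is sustained with zero overhead."""
    return total_ops / ops_per_second


if __name__ == "__main__":
    workload = 1e24  # hypothetical simulation needing 10^24 operations
    for name, rate in SCALES.items():
        secs = runtime_seconds(workload, rate)
        print(f"{name:>26}: {secs / SECONDS_PER_DAY:,.1f} days "
              f"({secs / SECONDS_PER_YEAR:,.2f} years)")
```

Under these assumptions the same workload takes about 11.6 days at exascale, roughly 31.7 years at petascale, and on the order of 31,700 years at one trillion operations per second.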

Taming the Perimeter-less Nature of Global Area Networks

The availability of data and intelligence from across the network's global span is highly effective in helping ITOps teams understand all the component services and providers their business is exposed to or relies on. It means being able to pinpoint an impending problem or the root cause of a developing issue within their global area network and to pursue remediation with the right third-party provider ... Certain traffic engineering actions taken on owned infrastructure can alter connectivity and performance by changing the path that traffic takes through the unowned portion of the global area network. Think of these actions as adjustments to a network segment that is within your control, such as a network prefix change or a BGP route change to bypass a route hijack happening downstream in the unowned, Internet-based segment. These traffic engineering actions are manageable tasks that ITOps teams or their automated systems can execute within a global area network setup. While they are implemented in the parts of the network directly controlled by ITOps, their impact is designed to span the entire service delivery chain and its performance.
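One traffic engineering action of this kind is announcing more-specific prefixes to win back traffic from a hijack, which works because routers forward along the longest matching prefix. The sketch below models only that forwarding decision using Python's standard `ipaddress` module; the prefixes and the "owner"/"hijacker" labels are hypothetical, and real BGP route selection also weighs attributes such as local preference and AS-path length when prefix lengths are equal. Many networks also filter IPv4 announcements longer than /24, which limits how far this de-aggregation can go.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Route(NamedTuple):
    prefix: ipaddress.IPv4Network
    origin: str  # hypothetical label for who announced the prefix


def make_route(prefix: str, origin: str) -> Route:
    return Route(ipaddress.ip_network(prefix), origin)


def best_route(routes: List[Route], destination: str) -> Optional[Route]:
    """Longest-prefix match: among routes covering the destination, pick the most specific."""
    addr = ipaddress.ip_address(destination)
    candidates = [r for r in routes if addr in r.prefix]
    if not candidates:
        return None
    return max(candidates, key=lambda r: r.prefix.prefixlen)


if __name__ == "__main__":
    # The legitimate owner announces a /16; a hijacker announces a more specific /17.
    table = [make_route("10.1.0.0/16", "owner"), make_route("10.1.0.0/17", "hijacker")]
    print(best_route(table, "10.1.5.5").origin)  # hijacker wins on prefix length

    # Mitigation: the owner announces two /18s, each more specific than the /17.
    table += [make_route("10.1.0.0/18", "owner"), make_route("10.1.64.0/18", "owner")]
    print(best_route(table, "10.1.5.5").origin)    # owner
    print(best_route(table, "10.1.100.1").origin)  # owner
```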

Firms use AI to keep reality from unreeling amid ‘global deepfake pandemic’

Seattle-based Nametag has announced the launch of its Nametag Deepfake Defense product. A release quotes security technologist and cryptography expert Bruce Schneier, who says “Nametag’s Deepfake Defense engine is the first scalable solution for remote identity verification that’s capable of blocking the AI deepfake attacks plaguing enterprises.” And make no mistake, says Nametag CEO Aaron Painter: “we’re facing a global deepfake pandemic that’s spreading ransomware and disinformation.” The company cites numbers from Deloitte showing that over 50 percent of C-suite executives expect an increase in the number and size of deepfake attacks over the next 12 months. Deepfake Defense consists of three core proprietary technologies: Cryptographic Attestation, Adaptive Document Verification and Spatial Selfie. The first “blocks digital injection attacks and ensures data integrity using hardware-backed keystore assurance and secure enclave technology from Apple and Google.” The second “prevents ID presentation attacks using proprietary AI models and device telemetry that detect even the most sophisticated digital manipulation or forgery.”

Evolving Data Governance in the Age of AI: Insights from Industry Experts

While evolving existing data governance (DG) to meet AI needs is crucial, many organizations need to advance their DG first, before delving into AI governance. Existing data quality practices do not cover AI requirements. As mentioned in the previous section, DG programs currently enforce roles, procedures and tools for some structured data throughout the company. Yet AI models learn from and use very large data sets containing both structured and unstructured data. All of this data needs to be of good quality too, so that the AI model can respond accurately, completely, consistently, and relevantly. Companies frequently struggle to determine whether their unstructured data, including videos and PowerPoint slides, is of sufficient quality for AI training and implementation. If organizations don’t address this issue, Haskell said, they “throw dollars at AI and AI tools,” because poor-quality data going in produces poor-quality results coming out. For this reason, data quality fundamentals and clean-up take priority over the drive to implement AI. O’Neal likened AI and its governance to an iceberg: the CEO and senior management see only the tip, visible with all of AI’s promise and reward.
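As a minimal sketch of what a basic quality gate for unstructured text might check before data reaches model training, the function below drops empty records, records below a length threshold, and exact duplicates after whitespace and case normalization. The function name `quality_gate` and the 50-character threshold are hypothetical choices for illustration; real AI data governance would add far richer checks (accuracy, provenance, bias, freshness) and separate handling for media such as videos and slides.

```python
import hashlib
from collections import Counter
from typing import List, Tuple


def quality_gate(documents: List[str], min_chars: int = 50) -> Tuple[List[str], Counter]:
    """Hypothetical pre-training filter: returns kept documents and per-reason rejection counts."""
    kept: List[str] = []
    rejected: Counter = Counter()
    seen: set = set()
    for doc in documents:
        # Collapse runs of whitespace and lowercase so trivial variants count as duplicates.
        normalized = " ".join(doc.split()).lower()
        if not normalized:
            rejected["empty"] += 1
            continue
        if len(normalized) < min_chars:
            rejected["too_short"] += 1
            continue
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            rejected["duplicate"] += 1
            continue
        seen.add(digest)
        kept.append(doc)  # keep the original text, not the normalized form
    return kept, rejected
```

The `rejected` counter gives a per-reason tally that a data steward could report alongside the fraction of records retained.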

On the Road to 2035, Banking Will Walk One of These Three Paths

Economist Impact’s latest report walks through three potential scenarios the banking sector could settle into by 2035. Each paints a vivid picture of how technological advancements, shifting consumer expectations and evolving global dynamics could reshape the financial world as we know it. ... Digital transformation will be central to banking’s future, regardless of which scenario unfolds. Banks that fail to innovate and adapt to new technologies risk becoming obsolete. Trust will be a critical currency in the banking sector of 2035. Whether it’s through enhanced data protection, ethical AI use, or a commitment to sustainability, banks must find ways to build and maintain customer trust in an increasingly complex world. The role of banks is likely to expand beyond traditional financial services. In all scenarios, we see banks taking on new responsibilities, whether it’s driving sustainable development, bridging geopolitical divides, or serving as the backbone for broader digital ecosystems. Flexibility and adaptability will be crucial for success. The future is uncertain and potentially fragmented, requiring banks to be agile in their strategies and operations to thrive in various possible environments.

Quote for the day:

"The secret of my success is a two-word answer: Know people." -- Harvey S. Firestone
