The Power of Going Opposite: Unlocking Hidden Opportunities in Business

It is more than just going in the opposite direction. It is rooted in a principle I call the Law of Opposites: look in the opposite direction from where everyone else is looking, and you will find unique opportunities in the most inconspicuous places. By leveraging this law, business leaders and whole organizations can uncover hidden opportunities and create a significant competitive advantage they would otherwise miss. Initially, many fear that looking in the opposite direction will needlessly leave them in uncharted territory. For instance, why would a restaurant look at what is currently going on in the auto industry? That seems like it would do the opposite of helping, right? In practice, going opposite has the reverse effect of that fear: it reveals unexpected opportunities! By placing an organization on the opposite side of conventional thinking, you gain a new viewpoint. ... Leveraging the Law of Opposites and putting in the effort to go opposite has two critical benefits for organizations. For one, when faced with what appears to be insurmountable competition, it allows organizations to leap ahead by seeing what others miss and pivoting their offerings accordingly.

Top 5 Container Security Mistakes and How to Avoid Them

Before containers are deployed, you need assurance that they don’t contain vulnerabilities right from the start. Unfortunately, many organizations fail to scan container images during the build process, which leaves serious risks lurking unseen. Unscanned images allow vulnerabilities and malware to slip easily into production environments, creating significant security issues down the road. ... Far too often, developers demand (and receive) excessive permissions for container access, which creates unnecessary risk. If compromised or misused, overprivileged containers can lead to devastating security incidents. ... Threat prevention shouldn’t stop once a container launches, either, but some organizations forget to extend protection into the runtime phase. If a container left unprotected at runtime is compromised, an adversary can use it to move laterally across environments. ... Unprotected container registries are juicy targets. After all, compromise the registry, and you hold the keys to infect every image inside it. An unsecured registry puts your entire container pipeline in jeopardy if it is accessed maliciously. ... You can’t protect what you can’t see. Monitoring gives visibility into container health events, network communications, and user actions.
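To make the overprivileged-container point concrete, here is a minimal Python sketch of a pre-deployment check. It assumes a Kubernetes-style pod spec already parsed into a dict and inspects only the container-level `securityContext` fields `privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`, and `capabilities.add`. The function name `find_overprivileged` is hypothetical, and a real policy engine would also weigh pod-level settings and much more.

```python
from typing import Any


def find_overprivileged(pod_spec: dict[str, Any]) -> list[str]:
    """Flag risky container-level securityContext settings in a Kubernetes-style pod spec."""
    findings: list[str] = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext") or {}
        if ctx.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        # Unset means escalation is allowed, so only an explicit False passes.
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: allows privilege escalation")
        # Unset means the image's default user (often root) is used.
        if not ctx.get("runAsNonRoot", False):
            findings.append(f"{name}: may run as root")
        added = (ctx.get("capabilities") or {}).get("add") or []
        if added:
            findings.append(f"{name}: adds capabilities {sorted(added)}")
    return findings


if __name__ == "__main__":
    spec = {
        "containers": [
            {"name": "web", "securityContext": {"privileged": True}},
            {"name": "worker", "securityContext": {"runAsNonRoot": True,
                                                   "allowPrivilegeEscalation": False}},
        ]
    }
    for finding in find_overprivileged(spec):
        print(finding)
```

Running a check like this in the build pipeline, next to the image scan, lets a build fail before an overprivileged container ever reaches production.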

Why you should want face-recognition glasses

Under the right circumstances, we can easily exchange business-card-type information simply by holding two phones near each other. To give a business card is to grant the receiver permission to possess the personal information on it. It would be trivial to add a small bit of code to grant permission for face recognition as well. Each user could grant that permission with a checkbox in the contacts app; exchanging contact details would then automatically share both the permission and a profile photo. Face-recognition permission should be grantable and revocable at any time on a person-by-person basis. Ten years from now (when most people will be wearing AI glasses), you could be alerted at conferences and other business events about everyone you’ve met before, complete with their name, occupation, and history of interaction. Collecting such data on family and friends throughout one’s life would also be a huge benefit to older people suffering from age-related dementia or simply a naturally failing memory. Shaming AI glasses as a face-recognition privacy risk is the wrong tactic, especially when the glasses are being used only as a camera. Instead, we should recognize that permission-based face-recognition features in AI glasses would radically improve our careers and lives.
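The per-person, revocable permission described above could be modeled as a small consent registry on the receiving phone. This is a hypothetical Python sketch, not any vendor's API: `FaceRecognitionConsent` and its methods are invented for illustration. The key design assumption is that revocation deletes the shared photo, so the recognizer can only ever match people who currently consent.

```python
class FaceRecognitionConsent:
    """Hypothetical per-person consent registry kept by the receiving contacts app."""

    def __init__(self) -> None:
        # contact ID -> profile photo that the contact chose to share
        self._photos: dict[str, bytes] = {}

    def grant(self, contact_id: str, profile_photo: bytes) -> None:
        """Record a grant received when the contact ticks the checkbox and shares a card."""
        self._photos[contact_id] = profile_photo

    def revoke(self, contact_id: str) -> None:
        """Honor a revocation by deleting the photo itself, not just flipping a flag."""
        self._photos.pop(contact_id, None)

    def may_recognize(self, contact_id: str) -> bool:
        return contact_id in self._photos

    def reference_photos(self) -> dict[str, bytes]:
        """Only photos of currently consenting contacts are handed to the face matcher."""
        return dict(self._photos)


if __name__ == "__main__":
    consent = FaceRecognitionConsent()
    consent.grant("alice", b"<alice photo>")
    consent.grant("bob", b"<bob photo>")
    consent.revoke("bob")  # Bob changes his mind
    print(sorted(consent.reference_photos()))
```

Because the matcher only ever receives `reference_photos()`, a revocation takes effect on the next lookup without any separate cleanup step.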

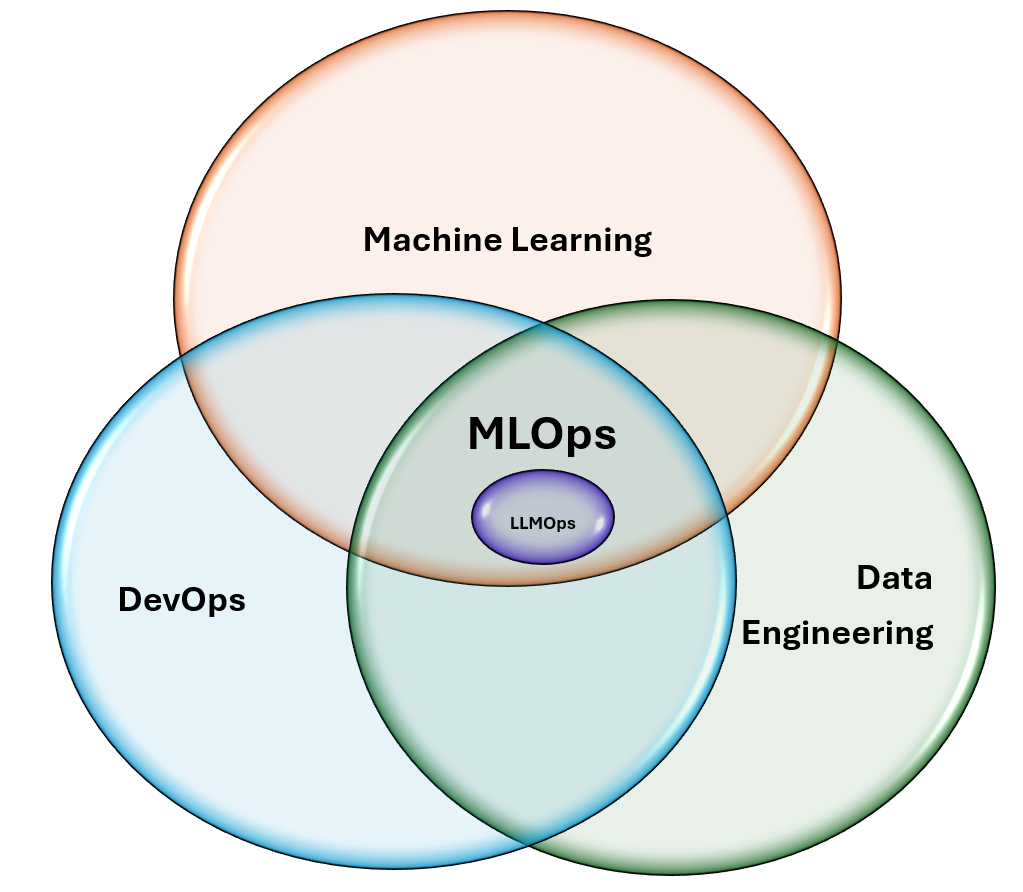

Operationalize a Scalable AI With LLMOps Principles and Best Practices

The recent rise of generative AI, most commonly in the form of large language models (LLMs), prompted us to consider how MLOps processes should be adapted to this new class of AI-powered applications. LLMOps (Large Language Model Operations) is a specialized subset of MLOps (Machine Learning Operations) tailored to the efficient development and deployment of large language models. By providing infrastructure and tools, LLMOps ensures that model quality stays high and that data quality is maintained throughout data science projects. Use a consolidated MLOps and LLMOps platform to enable close interaction between data science and IT DevOps teams, increasing productivity and getting more models into production faster. Together, MLOps and LLMOps bring agility to AI innovation in a project. ... Evaluating LLMs is a challenging and evolving domain, primarily because LLMs often demonstrate uneven capabilities across different tasks. LLMs can be sensitive to prompt variations, showing high proficiency on a task with one prompt but faltering when the prompt deviates slightly. Because most LLMs output free-form natural language, their outputs are very difficult to evaluate with traditional natural language processing metrics.
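One way to quantify the prompt sensitivity described above is to score several phrasings of the same prompt on the same test cases and compare the results. The Python sketch below assumes a hypothetical `model` callable (here `toy_model`, a stand-in for a real LLM client) and uses crude exact-match scoring, which is exactly the kind of traditional metric that struggles with free-form output; in practice you would swap in a better judge.

```python
from typing import Callable


def prompt_sensitivity(
    model: Callable[[str], str],
    templates: list[str],
    cases: list[tuple[str, str]],
) -> dict[str, float]:
    """Exact-match accuracy of each prompt template over the same (question, expected) cases."""
    scores: dict[str, float] = {}
    for template in templates:
        hits = sum(
            model(template.format(question=q)).strip().lower() == expected.strip().lower()
            for q, expected in cases
        )
        scores[template] = hits / len(cases)
    return scores


def spread(scores: dict[str, float]) -> float:
    """Gap between the best and worst template; a large gap signals prompt sensitivity."""
    return max(scores.values()) - min(scores.values())


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM that only answers well when asked to answer briefly."""
    answers = {"2+2": "4", "capital of France": "Paris"}
    if prompt.startswith("Answer briefly:"):
        for key, answer in answers.items():
            if key in prompt:
                return answer
    return "I'm not sure."


if __name__ == "__main__":
    templates = ["Answer briefly: {question}", "Q: {question}\nA:"]
    cases = [("What is 2+2?", "4"), ("What is the capital of France?", "Paris")]
    scores = prompt_sensitivity(toy_model, templates, cases)
    print(scores, spread(scores))
```

Reporting the spread alongside the per-template scores keeps a single lucky phrasing from hiding how brittle the model is.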

Using Chrome's accessibility APIs to find security bugs

Chrome exposes all of its UI controls to assistive technology. Chrome goes to great lengths to ensure that its entire UI is exposed to screen readers, braille devices, and other assistive tech. This tree of controls includes all the toolbars, menus, and the structure of the page itself. This structural definition of the browser user interface is already used in other contexts, for example by some password managers, which demonstrates that investing in accessibility benefits all users. We’re now taking that investment and leveraging it to find security bugs, too. ... Fuzzers are unlikely to stumble across these control names by chance, even with instrumentation applied to string comparisons. In fact, this by-name approach turned out to be only 20% as effective as picking controls by ordinal. To resolve this, we added a custom mutator that is smart enough to insert control names and roles that are known to exist. We randomly use either this mutator or the standard libprotobuf-mutator to get the best of both worlds. This approach has proven to be about 80% as quick as the original ordinal-based mutator, while providing stable test cases.
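The mixed-mutator strategy can be illustrated with a toy Python sketch. The real fuzzer works on protobuf test cases through libprotobuf-mutator; here a test case is simply assumed to be a list of `(role, name)` actions, `KNOWN_CONTROLS` is a hypothetical list of controls known to exist, and the 50/50 split between the two mutators (`p_custom`) is an assumption rather than Chrome's actual setting.

```python
import random

Action = tuple[str, str]  # (role, name) of the UI control an action targets

# Hypothetical (role, name) pairs known to exist in the browser UI.
KNOWN_CONTROLS: list[Action] = [
    ("button", "Back"),
    ("button", "Reload"),
    ("menuitem", "New tab"),
]


def generic_mutate(actions: list[Action], rng: random.Random) -> list[Action]:
    """Structure-unaware stand-in: inserts a random letter into one control name."""
    out = list(actions)
    i = rng.randrange(len(out))
    role, name = out[i]
    pos = rng.randrange(len(name) + 1)
    letter = chr(rng.randrange(ord("a"), ord("z") + 1))
    out[i] = (role, name[:pos] + letter + name[pos:])
    return out


def known_control_mutate(actions: list[Action], rng: random.Random) -> list[Action]:
    """Custom mutator: swaps one action's target for a control that is known to exist."""
    out = list(actions)
    out[rng.randrange(len(out))] = rng.choice(KNOWN_CONTROLS)
    return out


def mutate(actions: list[Action], rng: random.Random, p_custom: float = 0.5) -> list[Action]:
    """Randomly pick the custom or the generic mutator for each mutation."""
    if not actions:
        return [rng.choice(KNOWN_CONTROLS)]
    if rng.random() < p_custom:
        return known_control_mutate(actions, rng)
    return generic_mutate(actions, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    case: list[Action] = [("button", "Back")]
    for _ in range(5):
        case = mutate(case, rng)
        print(case)
```

Mixing the two keeps the generic mutator's ability to explore unexpected inputs, while the custom one ensures that a useful share of test cases target controls that actually resolve by name.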

Investing in Privacy by Design for long-term compliance

Many organizations still approach principles like Privacy by Design with prejudice that stems from a lack of knowledge and awareness. A lot of organizations that handle sensitive personal data have a dedicated Data Protection Officer (DPO) only on paper, and the person performing the DPO role is often poorly trained and misinformed about the subject. When the GDPR came into force, companies underwent a shallow transformation and defined roles and responsibilities, often led by external consultants, and now the DPOs in those organizations are just trying to meet the minimum requirements and hoping everything turns out for the best. Most legacy systems were ‘taken care of’ during these transformations: impact assessments were made, and that was the end of the discussion about related risks. For Privacy by Design and Security by Design to be implemented adequately, the whole organization has to be aware that this work must be done, and support from all stakeholders needs to be secured. Implementing Privacy by Design correctly means identifying privacy risks at the beginning of a project, managing them carefully until its end, and then reassessing them periodically.

Benefits of a Modern Data Historian

With Industry 4.0, data historians have advanced significantly. They now pull in data from IoT devices and cloud platforms and handle larger, more complex datasets. Modern historians use AI and real-time analytics to optimize operations across entire businesses, and they are more scalable, more secure, and better integrated with other digital systems, which makes them a natural fit for the connected nature of today’s industries. Traditional data historians were limited in scalability and integration capabilities, often relying on manual processes and statistical methods of data collection and storage. Modern data historians, particularly those built on a time series database (TSDB), offer significant improvements in the speed and ease of data processing and aggregation. One such foundation for a modern data historian is InfluxDB. ... Visualizing data is crucial for effective decision-making because it transforms complex datasets into intuitive, easily understandable formats. This lets stakeholders quickly grasp trends, identify anomalies, and derive actionable insights. InfluxDB integrates with visualization tools such as Grafana, which is renowned for its powerful, interactive dashboards.
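Downsampling raw readings into fixed time windows is the kind of aggregation a TSDB performs natively (in InfluxDB, for example, through Flux's `aggregateWindow()` or InfluxQL's `GROUP BY time()`). To show what that computation does without relying on any client library, here is a plain-Python sketch; `downsample_mean` is a hypothetical helper and assumes timezone-aware UTC timestamps.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def downsample_mean(
    points: list[tuple[datetime, float]], window: timedelta
) -> dict[datetime, float]:
    """Average (timestamp, value) points within fixed, epoch-aligned windows."""
    buckets: dict[datetime, list[float]] = defaultdict(list)
    for ts, value in points:
        # Floor the timestamp to the start of its window.
        start = EPOCH + ((ts - EPOCH) // window) * window
        buckets[start].append(value)
    # Emit windows in chronological order, each with its mean value.
    return {start: sum(vals) / len(vals) for start, vals in sorted(buckets.items())}


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
    readings = [
        (t0, 10.0),
        (t0 + timedelta(seconds=30), 20.0),
        (t0 + timedelta(minutes=1), 40.0),
    ]
    for start, mean in downsample_mean(readings, timedelta(minutes=1)).items():
        print(start.isoformat(), mean)
```

A dashboard tool such as Grafana would typically request data already aggregated this way, so it plots one point per window instead of every raw reading.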

Beyond Proof of Concepts: Will Gen AI Live Up to the Hype?

Two years after ChatGPT's launch, the experimental stage is largely behind CIOs and tech leaders. What once required discretionary funding approval from CFOs and CEOs has evolved into a clear recognition that gen AI could be a game changer. But scaling this technology across multiple business use cases while aligning them with strategic objectives, without overwhelming users, is a more practical approach. Still, nearly 90% of gen AI projects remain stuck in the pilot phase, and many of them are rudimentary. According to Gartner, one major hurdle is justifying the significant investment in gen AI, particularly when the benefits are framed merely as productivity enhancements, which may not always translate into tangible financial gains. "Many organizations leverage gen AI to transform their business models and create new opportunities, yet they continue to struggle with realizing value," said Rita Sallam, distinguished vice president analyst at Gartner. ... In another IBM survey, tech leaders revealed that half of their IT budgets will be allocated to AI and cloud over the next two years. This shift suggests that gen AI is transitioning from the "doubt" phase to the "confidence" phase.

Microsoft’s Take on Kernel Access and Safe Deployment Following CrowdStrike Incident

This was discussed at some length at the Microsoft Virus Initiative (MVI) summit. “We face a common set of challenges in safely rolling out updates to the large Windows ecosystem, from deciding how to do measured rollouts with a diverse set of endpoints to being able to pause or rollback if needed. A core SDP principle is gradual and staged deployment of updates sent to customers,” Weston commented in a blog post on the summit. “This rich discussion at the Summit will continue as a collaborative effort with our MVI partners to create a shared set of best practices that we will use as an ecosystem going forward,” he wrote. Separately, he elaborated to SecurityWeek: “We discussed ways to de-conflict the various SDP approaches being used by our partners, and to bring everything together as a consensus on the principles of SDP. We want everything to be transparent, but then we want to enforce this standard as a requirement for working with Microsoft.” Agreeing on and requiring a minimum set of safe deployment practices from partners is one thing; ensuring that those partners actually follow the agreed SDP is another. “Technical enforcement would be a challenge,” he said. “Transparency and accountability seem to be the best methodology for now.”
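The "gradual and staged deployment" principle, with the ability to pause or roll back, can be sketched as a ring-based rollout. This Python sketch is illustrative only and is not Microsoft's SDP: the ring names and the `deploy`, `healthy`, and `rollback` callbacks are hypothetical hooks that a vendor would supply.

```python
from typing import Callable


def staged_rollout(
    rings: list[str],
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> str:
    """Deploy ring by ring, pausing at the first unhealthy ring and rolling back."""
    updated: list[str] = []
    for ring in rings:
        deploy(ring)
        updated.append(ring)
        # Gate: only widen the rollout if this ring's health signal is good.
        if not healthy(ring):
            # Undo in reverse order, newest ring first.
            for done in reversed(updated):
                rollback(done)
            return f"halted at {ring}, rolled back {len(updated)} ring(s)"
    return "completed"


if __name__ == "__main__":
    log: list[str] = []
    result = staged_rollout(
        ["canary", "early", "broad"],
        deploy=lambda r: log.append(f"deploy {r}"),
        healthy=lambda r: r != "early",  # simulate a crash signal in the second ring
        rollback=lambda r: log.append(f"rollback {r}"),
    )
    print(result)
    print(log)
```

The point of the ordering is that a faulty update reaches only the small rings before the health gate stops it, rather than every endpoint at once.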

What Hybrid Quantum-Classic Computing Looks Like

Because both classical and quantum computers have limitations, the two are being combined as a hybrid solution. For example, a quantum computer can serve as an accelerator for a classical computer, and classical computers can control quantum systems. However, there are challenges. One is that classical and quantum computers operate at different ambient temperatures: a classical computer can’t run in a near-zero-Kelvin environment, nor can a quantum computer operate in a classical environment. Therefore, the two must be kept separate. Another challenge is that quantum computers are very noisy and therefore error-prone. To address that issue, Noisy Intermediate-Scale Quantum (NISQ) computing emerged. The assumption is that one must simply accept the errors and create variational algorithms. In this vein, one guesses what a solution looks like and then tweaks its parameters using something like stochastic gradient descent, the technique used to train neural networks. In a hybrid system, the process is iterative: the classical computer measures the state of the qubits, analyzes the results, and sends instructions for what to do next. This, at a high level, is how the hybrid classical-quantum iteration loop works.
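The hybrid loop described above can be simulated on a laptop. In this Python sketch, `measure_expectation` stands in for the quantum device by sampling measurement shots of a single qubit after an RY(θ) rotation, for which ⟨Z⟩ = cos θ. The classical side estimates the gradient with the parameter-shift rule (a standard technique for variational circuits, chosen here as an assumption) and applies a noisy gradient-descent step. All names are hypothetical, and finite-shot noise is the only noise modeled.

```python
import math
import random


def measure_expectation(theta: float, rng: random.Random, shots: int = 1000) -> float:
    """Simulated device: estimate <Z> after RY(theta)|0> from a finite number of shots."""
    p0 = math.cos(theta / 2) ** 2  # probability of reading |0>
    zeros = sum(rng.random() < p0 for _ in range(shots))
    return (2 * zeros - shots) / shots  # <Z> = P(0) - P(1), noisy because shots are finite


def variational_loop(steps: int = 100, lr: float = 0.2, seed: int = 0) -> tuple[float, float]:
    """Classical optimizer that tunes theta to minimize the measured <Z>."""
    rng = random.Random(seed)
    theta = 0.1  # initial guess at the solution's parameter
    for _ in range(steps):
        # Parameter-shift rule: two device runs give the gradient of <Z> w.r.t. theta.
        plus = measure_expectation(theta + math.pi / 2, rng)
        minus = measure_expectation(theta - math.pi / 2, rng)
        grad = (plus - minus) / 2
        theta -= lr * grad  # noisy gradient-descent step
    return theta, measure_expectation(theta, rng)


if __name__ == "__main__":
    theta, energy = variational_loop()
    print(f"theta = {theta:.3f}, <Z> = {energy:.3f}")
```

After enough iterations θ settles near ±π, where ⟨Z⟩ is close to its minimum of -1; each step mirrors the measure, analyze, and instruct cycle from the text.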

Quote for the day:

"Facing difficult circumstances does not determine who you are. They simply bring to light who you already were." -- Chris Rollins
