Throw out all those black boxes and say hello to the software-defined car

Software-defined vehicles might give automakers more flexibility in terms of the

features and functions they can create, but it comes with some headaches on

their end, including ensuring that a car works in each market where it's

offered. "All the requirements are different for each region, and the complexity

is so high. And from my perspective, this is the biggest challenge for

engineers. Complexity is so high, especially if you sell cars worldwide. It is

not easy. So in the past, we had this world car, so you bring one car for each

market. We are not able to bring this world car for all regions anymore,"

Hoffmann told me. "In the past, it was not easy, but it was very clear—more

performance, more efficiency, focus on design. And now that's changed

dramatically. So software became very important; you have to focus on the

ecosystem, and it is very, very complex. For each region you have, you have

dedicated and different ecosystems," he said. ... The move to software-defined

vehicles complicates this, as it applies to software as well as hardware. That

means each update needs to be signed off by a regulator before being sent out

over the air.

Staying ahead: How the best CEOs continually improve performance

Between three and five years into their tenure, the best CEOs typically combine

what they’ve gained from their expanded learning agenda and their self-directed

outsider’s perspective to form a point of view on what the next performance

S-curve is for their company. The concept of the S-curve is that, with any

strategy, there’s a period of slow initial progress as the strategy is formed

and initiatives are launched. That is followed by a rapid ascent from the

cumulative effect of initiatives coming to fruition and then by a plateau where

the value of the portfolio of strategic initiatives has largely been captured.

Dominic Barton, McKinsey’s former global managing partner, describes why

managing a series of S-curves is important: “No one likes change, so you need to

create a rhythm of change. Think of it as applying ‘heart paddles’ to the

organization.” Former Best Buy CEO Hubert Joly describes why and how he applied

heart paddles to his organization, moving from one S-curve to another: “We

started with a turnaround, something we called ‘Renew Blue.’

Cloud Security: Don’t Confuse Vendor and Tool Consolidation

Unfortunately, simply buying solutions from fewer vendors doesn’t necessarily

deliver operational efficiencies or efficacy of security coverage. That

entirely depends on the nature of those solutions, how integrated they are and

how good the user experience is that they provide. If you’re an in-the-trenches

application developer or security practitioner, consolidating cybersecurity-tool

vendors might not mean much to you. If the vendor that your business chooses

doesn’t offer an integrated platform, you’re still left juggling multiple tools.

You are constantly toggling between screens and dealing with the productivity

hit that comes with endless context switching. You have to move data manually

from one tool to another to aggregate, normalize, reconcile, analyze and archive

it. You have to sit down and think about which alerts to prioritize because each

tool is generating different alerts, and without tooling integrations, one tool

is incapable of telling you how an issue it has surfaced might (or might not) be

related to an alert from a different tool.
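
The manual work described above (normalizing alerts from separate tools and deciding which are related) can be pictured with a minimal Python sketch. Everything in it is an assumption for illustration: the two tools, their field names, and the severity scales are hypothetical and not taken from any real product.

```python
from collections import defaultdict

# Hypothetical raw alerts from two unintegrated tools; field names are invented.
SCANNER_ALERTS = [{"asset": "web-01", "sev": "HIGH", "rule": "outdated-openssl"}]
RUNTIME_ALERTS = [
    {"host": "web-01", "level": 3, "event": "unexpected-outbound-connection"},
    {"host": "db-02", "level": 1, "event": "config-drift"},
]

RUNTIME_LEVELS = {1: "low", 2: "medium", 3: "high"}
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2}


def normalize_scanner(alert):
    """Map the (hypothetical) scanner format onto a shared schema."""
    return {"resource": alert["asset"], "severity": alert["sev"].lower(),
            "source": "scanner", "detail": alert["rule"]}


def normalize_runtime(alert):
    """Map the (hypothetical) runtime-monitor format onto the same schema."""
    return {"resource": alert["host"], "severity": RUNTIME_LEVELS[alert["level"]],
            "source": "runtime", "detail": alert["event"]}


def correlate(alerts):
    """Group normalized alerts by resource, most severe resources first."""
    by_resource = defaultdict(list)
    for alert in alerts:
        by_resource[alert["resource"]].append(alert)
    return sorted(
        by_resource.items(),
        key=lambda item: max(SEVERITY_RANK[a["severity"]] for a in item[1]),
        reverse=True,
    )


normalized = [normalize_scanner(a) for a in SCANNER_ALERTS] + [
    normalize_runtime(a) for a in RUNTIME_ALERTS
]
for resource, related in correlate(normalized):
    print(resource, [(a["source"], a["severity"]) for a in related])
```

Once both feeds share one schema, alerts about the same resource land in the same group, which is the kind of cross-tool relationship an integrated platform would be expected to surface without this glue code.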

Deconstructing DevSecOps: Why A DevOps-Centric Approach To Security Is Needed In 2023

DevSecOps, in reality, is more of a bridge-building exercise: DevOps

are asked to be that bridge to the security teams. Yet, simultaneously, DevOps

are asked to enhance the technology used (for example, strong customer

authentication, or SCA for short) often without the full input of security

teams and so new potential for risk is introduced. These are DevOps security

tasks, in effect, rather than DevSecOps. These need to be approached from the

top down and bottom up: an organisational risk assessment to prioritise the

software security tasks, and then a bottom-up modelling of how to incorporate

something like SCA in our example. This is a DevOps-centric approach to

security rather than the commonly accepted DevSecOps one. ... Security risks

cover the entire software lifecycle from the initial open source building

blocks right through to deployment and production. Understanding this level

of maturity is essential to a DevOps-centric approach, with a shift right

being equally important to the shift-left focus of old. You can think of this

as modernising DevSecOps, reducing alert 'noise' within developer range, and

ensuring contextual threat levels are brought into focus.

Why 'Data Center vs. Cloud' Is the Wrong Way to Think

If you think in more nuanced ways about how data centers relate to the cloud,

you'll realize that terms like "data center vs. cloud" just don't make sense.

There are several reasons why. First and foremost, data centers are an

integral part of public clouds. If you move your workload to AWS, Azure, or

another public cloud, it's hosted in a data center. The difference between the

cloud and private data centers is that in the cloud, someone else owns and

manages the data center. ... A second reason why it's tricky to compare data

centers to the cloud is that not all workloads that exist outside of the

public cloud are hosted in private data centers dedicated to handling just one

business's applications and data. ... Another cause for blurred lines between

data centers and the cloud is that in certain cases, you can obtain services

inside private data centers that resemble those most closely associated with

the public cloud. I'm thinking here of offerings like Equinix Metal, which is

essentially an infrastructure-as-a-service (IaaS) solution that allows

companies to stand up servers on-demand inside colocation centers.

Tales of Kafka at Cloudflare: Lessons Learnt on the Way to 1 Trillion Messages

With an event-driven system, to avoid coupling, systems shouldn't be aware of

each other. Initially, we had no enforced message format and producer teams

were left to decide how to structure their messages. This can lead to

unstructured communication and pose a challenge if the teams don't have a

strong contract in place, with an increased number of unprocessable messages.

To avoid unstructured communication, the team searched for solutions within

the Kafka ecosystem and found two viable options, Apache Avro and protobuf,

with the latter being the final choice. We had previously been using JSON, but

found it difficult to enforce compatibility and the JSON messages were larger

compared to protobuf. ... Based on Kafka connectors, the framework enables

engineers to create services that read from one system and push it to another

one, like Kafka or Quicksilver, Cloudflare's Edge database. To simplify the

process, we use Cookiecutter to template the service creation, and engineers

only need to enter a few parameters into the CLI.
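
To illustrate why an enforced message format reduces unprocessable messages, here is a minimal Python sketch of a consumer that rejects anything outside an agreed contract. It is a stand-in only: it uses a plain dataclass over JSON rather than protobuf, and the `UserEvent` type and its fields are hypothetical, not Cloudflare's actual schema or tooling.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class UserEvent:
    """Hypothetical message contract shared by producer and consumer teams."""
    user_id: int
    action: str


def decode(raw: bytes) -> UserEvent:
    """Decode one message, raising ValueError if it breaks the contract."""
    data = json.loads(raw)  # malformed JSON raises a ValueError subclass
    if not isinstance(data, dict) or set(data) != {"user_id", "action"}:
        raise ValueError("message does not match the UserEvent fields")
    if type(data["user_id"]) is not int or not isinstance(data["action"], str):
        raise ValueError("a field has the wrong type")
    return UserEvent(**data)


# A conforming message decodes; a free-form one is rejected up front.
print(decode(b'{"user_id": 7, "action": "login"}'))
try:
    decode(b'{"user": "7"}')
except ValueError as err:
    print("rejected:", err)
```

A schema language such as protobuf moves this checking out of hand-written code and into generated classes, and also gives a compact binary encoding, which matches the size concern the excerpt raises about JSON.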

Agile & UX: a failed marriage?

Where should UX teams sit in an Agile organisation? I have worked with

companies where they’ve resided in engineering/technology, product, customer

experience, digital, and even their own vertical. The choice of where the

function sits should be based on organisational maturity; for example, newer

companies tend to have them bundled with engineering (and therefore the

designers tend to be UI designers who are helping the front end developers

code) and more mature ones might have them sit in either product or

standalone orgs. The challenge is what follows. Most companies that are Agile

tend to have cross-functional mission teams working on a product or feature.

In the case study, we saw that there were two distinct teams: first, the

business and architecture group and second the PO and their Agile delivery

squad. Hidden behind this seemingly simple structure is much more complexity.

For example, while UX teams work with the PO and their squad, they have a role

to play, arguably a fundamental one in helping the business and solution

architects understand the sort of experience that will emerge (and therefore

should be considered when estimating timeframes/investments).

Hybrid working: the new workplace normal

Some enterprises are allowing teams within the organization to decide whether

to continue to work from home or come back to the office for a few days a

week. But the transition is creating a new set of challenges: Since many

organizations reduced their office real estate footprint during the pandemic,

scheduling problems now crop up when multiple teams are doing “in-office” days

simultaneously and vying for space and resources such as meeting rooms and

videoconferencing equipment. The rise of this “hoteling” concept can create

new headaches for operations and IT teams. One constant among the attendees is

the technology gap increasingly associated with a hybrid or remote workforce.

Employees returning to the workplace are discovering that it is no longer a

plug-and-play environment. Downsizing, moving, and years of work-at-home

technology often lead to frustrating searches for the right cable to connect,

the right power adapter, and proper training for the new audioconferencing

bridge that they never learned how to use.

How generative AI regulation is shaping up around the world

Laws relating to regulation of AI in Canada are currently subject to a mixture

of data privacy, human rights and intellectual property legislation on a

state-to-state basis. However, an Artificial Intelligence and Data Act (AIDA)

is planned for 2025 at the earliest, with drafting having begun under the Bill

C-27, the Digital Charter Implementation Act, 2022. An in-progress framework

for managing the risks and pitfalls of generative AI, as well as other areas

of this technology across Canada, aims to encourage responsible adoption, with

consultations reportedly planned with stakeholders. ... The Indian

government announced in March 2021 that it would apply a “light touch” to AI

regulation with the aim of maintaining innovation across the country, with no

immediate plans for specific regulation currently. Opting against regulation

of AI growth, this area of tech was identified by the Ministry of Electronics

and IT as “significant and strategic”, but the agency stated that it would put

in place policies and infrastructure measures to help combat bias,

discrimination and ethical concerns.

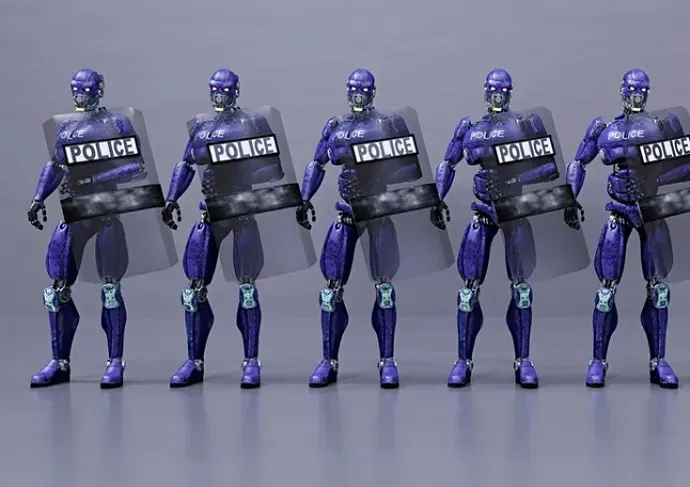

Good Cop, Bad Cop: Investigating AI for Policing

On a brighter note, police departments with real-time crime centers, as well

as regional intelligence centers, can benefit from AI technology due to the

massive amounts of data pouring in from multiple sources. AI can effectively

sort through and prioritize such data in real time to allow faster and more

targeted responses to unfolding situations. Perhaps most critically, law

enforcement agencies can turn to AI for assistance during unfolding incidents.

“A 911 dispatching system, emergency management watch center, or real-time

crime center embedded with assistive AI can analyze data from multiple

sources, such as cameras, sensors and databases, to gain insights that might

otherwise go unseen during a fast-moving situation or investigation,” Sims

says. Hara notes that AI is already playing an important role in several key

law enforcement areas. He points to crowd management as an example. “AI will

understand how many people are expected at a location and alert officials to a

variance,” Hara says. AI can also play a critical role in school safety,

taking advantage of the surveillance cameras many schools have already

installed.

Why the Document Model Is More Cost-Efficient Than RDBMS

A common objection from customers before they try a NoSQL database like

MongoDB Atlas is that their developers already know how to use RDBMS, so it is

easy for them to “stay the course.” Believe me when I say that nothing is

easier than storing your data the way your application actually uses it. A

proper document data model mirrors the objects that the application uses. It

stores data using the same data structures already defined in the application

code using containers that mimic the way the data is actually processed. There

is no abstraction layer over the physical storage and no added time complexity in

the query. The result is less CPU time spent processing the queries that

matter. One might say this sounds a bit like hard-coding data structures into

storage like the HMS systems of yesteryear. So what about those OLAP queries

that RDBMS was designed to support? MongoDB has always invested in APIs that

allow users to run the ad hoc queries required by common enterprise

workloads.
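
The claim that a document mirrors the application's objects can be sketched in a few lines of Python. This is an in-memory illustration under stated assumptions, not MongoDB's API: the order data, the table layout, and both loader functions are hypothetical.

```python
# Hypothetical order object as the application uses it, stored as one document.
ORDER_DOCUMENT = {
    "order_id": 1,
    "customer": "Ada",
    "items": [
        {"sku": "A-100", "qty": 2},
        {"sku": "B-200", "qty": 1},
    ],
}

# The same data normalized into two relational-style tables (lists of rows).
ORDERS = [{"order_id": 1, "customer": "Ada"}]
ORDER_ITEMS = [
    {"order_id": 1, "sku": "A-100", "qty": 2},
    {"order_id": 1, "sku": "B-200", "qty": 1},
]


def load_order_relational(order_id):
    """Reassemble the application object by joining the two tables."""
    order = next(row for row in ORDERS if row["order_id"] == order_id)
    items = [
        {"sku": row["sku"], "qty": row["qty"]}
        for row in ORDER_ITEMS
        if row["order_id"] == order_id
    ]
    return {**order, "items": items}


def load_order_document(order_id):
    """The document already has the application's shape; no join is needed."""
    return ORDER_DOCUMENT if ORDER_DOCUMENT["order_id"] == order_id else None


# Both paths yield the same object; only the relational one has to rebuild it.
print(load_order_relational(1) == load_order_document(1))
```

The relational path does extra work on every read to rebuild a shape the document path already stores, which is the per-query cost the excerpt is pointing at.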

Quote for the day:

“You never know how strong you are

until being strong is the only choice you have.” -- Bob Marley
