2023 could be the breakthrough year for quantum computing

Despite progress on short-term applications, 2023 will not see error

correction disappear. Far from it, the holy grail of quantum computing will

continue to be building a machine capable of fault tolerance. 2023 may create

software or hardware breakthroughs that will show how we’re closer than we

think, but otherwise, this will continue to be something that is achieved far

beyond 2023. Despite it being everything to some quantum companies and

investors, the future corporate users of quantum computing will largely see it

as too far off the time horizon to care much. The exception will be government

and anyone else with a significant, long-term interest in cryptography.

Despite those long time horizons, 2023 will define clearer blueprints and

timelines for building fault-tolerant quantum computers for the future.

Indeed, there is also an outside chance that next year will be the year when

quantum rules out the possibility of short-term applications for good, and

doubles down on the 7- to 10-year journey towards large-scale fault-tolerant

systems.

Technical Debt is a Major Threat to Innovation

The challenge is that instead of trying to keep the proverbial IT lights on during

the COVID-19 era, IT teams are now being asked to innovate to advance digital

business transformation initiatives, said Orlandini. A full 87% of survey

respondents cited modernizing critical applications as a key success driver.

As a result, many organizations are embracing platform engineering to bring

more structure to their DevOps processes, he noted. The challenge, however, is

striking a balance between a more centralized approach to DevOps and

maintaining the ability of developers to innovate, said Orlandini. The issue,

of course, is that in addition to massive technical debt, the latest

generation of applications is more distributed than ever. The survey found

91% of respondents now rely on multiple public cloud providers for different

workloads, with 54% of data residing on a public cloud. However, the survey

also found on-premises IT environments are still relevant, with 20% planning

to repatriate select public cloud workloads to an on-premises model over the

next 12 months.

What’s Going Into NIST’s New Digital Identity Guidelines?

Both government and private industries have been collecting and using facial

images for years. However, critics of facial recognition technology accuse it

of racial, ethnic, gender and age-based biases, as it struggles to properly

identify people of color and women. The algorithms in facial recognition tend

to perpetuate discrimination in a technology meant to add security rather than

add risk. The updated NIST digital guidelines will directly address the

struggles of facial recognition in particular, and biometrics overall. “The

forthcoming draft will include biometric performance requirements designed to

make sure there aren’t major discrepancies in the tech’s effectiveness across

different demographic groups,” FCW reported. Rather than depend on digital

photos for proof, NIST will add more options to prove identity. Lowering risk

is as important to private industries as it is to federal agencies. Therefore,

it would behoove enterprises to take steps to rethink their identity proofing.

The Past and Present of Serverless

As a new computing paradigm in the cloud era, Serverless architecture is a

naturally distributed architecture. Its working principle differs slightly

from that of traditional architectures. In the traditional architecture,

developers need to purchase virtual machine services, initialize the running

environment, and install the required software (such as database software and

server software). After preparing the environment, they need to upload the

developed business code and start the application. Then, users can access the

target application through network requests. However, if the number of

application requests is too large or too small, developers or O&M

personnel need to scale the relevant resources according to the actual number

of requests and add corresponding policies to the load balancing and reverse

proxy module to ensure the scaling operation takes effect promptly. At the same

time, when doing these operations, it is necessary to ensure online users will

not be affected. Under the Serverless architecture, the entire application

release process and the working principle will change to some extent.

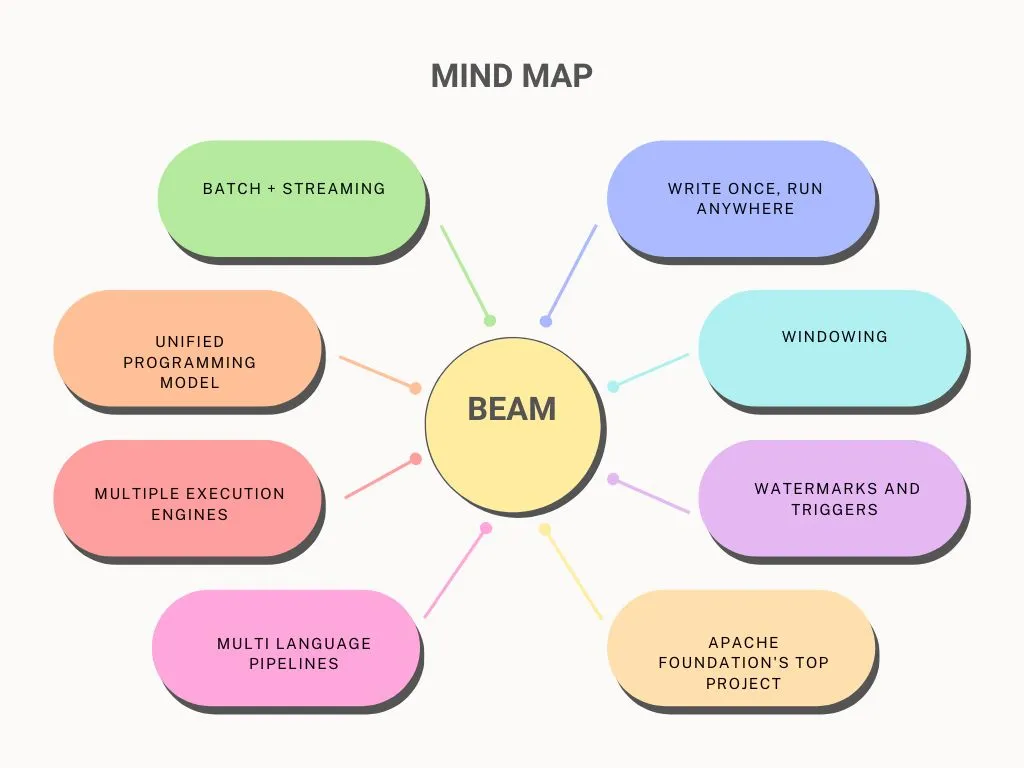
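
The manual scaling step described for the traditional architecture can be sketched roughly as follows. This is an illustrative sketch only: the function names, the per-instance capacity figure, and the instance limits are all assumptions made for the example and do not come from the original article or from any particular cloud provider.

```python
import math


def desired_instances(requests_per_second: float,
                      capacity_per_instance: float,
                      min_instances: int = 1,
                      max_instances: int = 20) -> int:
    """Estimate how many instances the current load needs (hypothetical policy)."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Round up so that a partially loaded instance still counts as a full one.
    needed = math.ceil(requests_per_second / capacity_per_instance)
    # Clamp to the allowed range so the fleet never drops to zero or grows unbounded.
    return max(min_instances, min(max_instances, needed))


def scaling_action(current: int, desired: int) -> str:
    """Describe the change an operator would have to apply by hand."""
    if desired > current:
        return f"scale out by {desired - current}"
    if desired < current:
        return f"scale in by {current - desired}"
    return "no change"


if __name__ == "__main__":
    current = 3
    for load in (450, 120, 10_000):
        target = desired_instances(load, capacity_per_instance=100)
        print(load, target, scaling_action(current, target))
        current = target
```

In the traditional model, someone has to run this kind of calculation and then update the load balancing and reverse proxy configuration to match. Under a Serverless architecture, the platform is expected to take over that responsibility.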

Why Apache Beam is the next big thing in big data processing

It’s a portable, unified programming model for writing big data processing

pipelines. What does that mean exactly? First, let’s understand

the use cases for big data processing pipelines. Batch processing: Batch

processing is a data processing technique used in big data pipelines to

analyze and process large volumes of data in batches or sets. In batch

processing, data is collected over a period of time, and then the entire batch

of data is processed together. Stream processing: Processing data as it is

generated. It is a data processing technique to process data in real-time as

it is generated, rather than in batches. In stream processing, data is

processed continuously, as it flows through the pipeline. ... Beam offers

multi-language pipelines, where each pipeline is constructed

using one Beam SDK language and incorporates one or more transforms from

another Beam SDK language. The transforms from the other SDK language are

known as cross-language transforms.
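
To make the batch versus stream distinction above concrete, here is a minimal sketch in plain Python. It deliberately does not use the Beam SDK. The function names and the sample data are assumptions chosen for illustration, and the shared `parse_amount` step only hints at the idea of reusing the same logic in both modes.

```python
from typing import Iterable, Iterator, List


def parse_amount(record: str) -> int:
    """Shared step used by both modes: extract the amount from 'user,amount'."""
    return int(record.split(",")[1])


def batch_total(records: List[str]) -> int:
    """Batch: the whole collected data set is processed together, once."""
    return sum(parse_amount(record) for record in records)


def stream_totals(records: Iterable[str]) -> Iterator[int]:
    """Stream: emit an updated running total as each record arrives."""
    total = 0
    for record in records:
        total += parse_amount(record)
        yield total


if __name__ == "__main__":
    collected = ["ann,3", "bob,1", "ann,4", "cy,1", "bob,5"]
    # One result, available only after the full batch has been collected.
    print(batch_total(collected))
    # One result per record, available while data is still flowing in.
    for running_total in stream_totals(iter(collected)):
        print(running_total)
```

The batch function needs the complete list before it can answer, while the streaming generator produces a fresh result after every record. A unified model aims to let the same pipeline definition cover both cases.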

The Use of ChatGPT in the Cyber Security Industry

ChatGPT has also been useful in cyber defense. It can be asked to create a Web

Application Firewall (WAF) rule to detect a specific type of attack. In a

threat hunting scenario, it may be able to produce a machine learning model in

a language such as Python to analyze the network traffic captured in a .pcap

file and identify possible malicious behavior. Examples include a network

connection to a known malicious IP address, which may indicate that a device

is compromised, and an unusual increase in brute-force attempts to access the

network, among other possibilities.
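
The two indicators mentioned above, connections to known malicious IP addresses and a spike in brute-force attempts, can be illustrated with a small sketch. Note the assumptions: this is a simple rule-based check rather than the machine learning model the text mentions, it works on already-parsed connection records instead of reading a .pcap file, and the record layout, function names, and threshold are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

# Hypothetical, already-parsed record: (source_ip, destination_ip, login_failed).
# Extracting these fields from a .pcap file is out of scope for this sketch.
Connection = Tuple[str, str, bool]


def connections_to_known_bad(connections: List[Connection],
                             known_bad_ips: Set[str]) -> List[Connection]:
    """Return connections whose destination is on the known-malicious list."""
    return [conn for conn in connections if conn[1] in known_bad_ips]


def brute_force_suspects(connections: List[Connection],
                         threshold: int) -> Dict[str, int]:
    """Return sources with at least `threshold` failed login attempts."""
    failures = Counter(src for src, _dst, failed in connections if failed)
    return {src: count for src, count in failures.items() if count >= threshold}


if __name__ == "__main__":
    # Addresses come from ranges reserved for documentation and private use.
    sample: List[Connection] = [
        ("10.0.0.5", "203.0.113.9", False),
        ("198.51.100.7", "10.0.0.2", True),
        ("198.51.100.7", "10.0.0.2", True),
        ("198.51.100.7", "10.0.0.2", True),
        ("10.0.0.6", "192.0.2.10", True),
    ]
    print(connections_to_known_bad(sample, {"203.0.113.9"}))
    print(brute_force_suspects(sample, threshold=3))
```

A real deployment would need a maintained threat intelligence feed for the address list and a time window for the failure count. Both are left out here to keep the example short.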

... This is worrying to the point of schools in New York City blocking access to

ChatGPT due to concern about the negative impacts this can generate on the

students’ learning process, since in most cases, depending on the question,

the answer is already provided without any effort or without having to

study.

Is quantum machine learning ready for primetime?

Hopkins disagrees. “We are trying to apply [quantum ML] already,” he says,

joining up with multiple clients to explore practical applications for such

methods on a timescale of years and not decades, as some have ventured.

... “You’re not going to fit that on a quantum computer with only 433

qubits,” says Hopkins – sufficient progress is being made each year to expand

the possible number of quantum ML experiments that could be run. He also

predicts that we will see quantum ML models become more generalisable. Schuld,

too, is hopeful that the quantum ML field will directly benefit from recent

and forthcoming advances on the hardware side. It’ll be at this point, she

predicts, when researchers can begin testing quantum ML models on realistic

problem sizes, and when we’re likely to see what she describes as a ‘smoking

gun’ revealing a set of overarching principles in general quantum ML – one

that reveals just how much we do and don’t know about the mysteries of

applying these algorithms to complex, real-world problems.

Cyber Resilience Act: A step towards safe and secure digital products in Europe

Cybersecurity threats are global and continually evolving. They are targeting

complex, interdependent systems that are hard to secure as threats can come

from many places. A product that had strong security yesterday can have weak

security tomorrow as new vulnerabilities and attack tactics are discovered.

Even with a manufacturer appropriately mitigating risks, a product can still

be compromised through supply chain attacks, the underlying digital

infrastructure, an employee or many other ways. Microsoft alone analyzes 43

trillion security signals daily to better understand and protect against

cyberthreats. Staying one step ahead requires speed and agility. Moreover,

addressing digital threats requires a skilled cybersecurity workforce that

helps organizations prepare and helps authorities ensure adequate enforcement.

However, in Europe and across the world there is a shortage of skilled staff.

Over 70% of businesses cannot find staff with the required digital

skills.

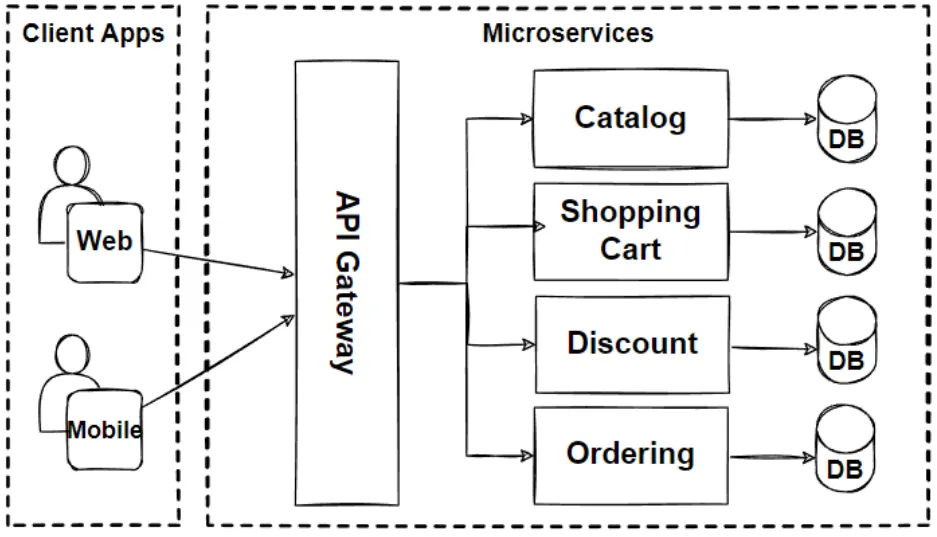

Microservices Architecture for Enterprise Large-Scaled Application

Microservices architecture is a good choice for complex, large-scale

applications that require a high level of scalability, availability, and

agility. It can also be a good fit for organizations that need to integrate

with multiple third-party services or systems. However, microservices

architecture is not a one-size-fits-all solution, and it may not be the best

choice for all applications. It requires additional effort in terms of

designing, implementing, and maintaining the services, as well as managing the

communication between them. Additionally, the overhead of coordinating between

services can result in increased latency and decreased performance, so it may

not be the best choice for applications that require high performance or low

latency. ... Microservices architecture is a good choice for organizations

that require high scalability, availability, and agility, and are willing to

invest in the additional effort required to design, implement, and maintain a

microservices-based application.

Developing a successful cyber resilience framework

The difference between cyber security and cyber resilience is key. Cyber

security focuses on protecting an organization from cyber attack. It involves

things such as firewalls, VPNs, anti-malware software, and hygiene, such as

patching software and firmware, and training employees about secure behavior.

On the other hand, “cyber resilience focuses on what happens when cyber

security measures fail, as well as when systems are disrupted by things such

as human error, power outages, and weather.” Resiliency takes into account

where an organization's operations are reliant on technology, where critical

data is stored, and how those areas can be affected by disruption. ...

Cyber resilience includes preparation for business continuity and involves not

just cyber attacks or data breaches, but other adverse conditions and

challenges as well. For example, if the workforce is working remotely due to a

catastrophic scenario, like the COVID-19 pandemic, but is still able to perform

business operations well and produce results in a cyber-secure habitat, the

company is demonstrating cyber resilience.

Quote for the day:

"The art of communication is the

language of leadership." -- James Humes
