Toward data dignity: Let’s find the right rules and tools for curbing the power of Big Tech

Enlightened new policies and legislation, building on blueprints like the

European Union’s GDPR and California’s CCPA, are a critical start to creating a

more expansive and thoughtful formulation for privacy. Lawmakers and regulators

need to consult systematically with technologists and policymakers who deeply

understand the issues at stake and the contours of a sustainable working system.

That was one of the motivations behind the creation of the Ethical

Tech Project: to gather like-minded ethical technologists, academics, and

business leaders to engage in that intentional dialogue with policymakers. We

are starting to see elected officials propose regulatory bodies akin to what the

Ethical Tech Project was designed to do—convene tech leaders to build standards

protecting users against abuse. A recently proposed federal watchdog would be a

step in the right direction to usher in proactive tech regulation and start a

conversation between the government and the individuals who have the know-how to

find and define the common-sense privacy solutions consumers need.

For HPC Cloud The Underlying Hardware Will Always Matter

For a large contingent of those ordinary enterprise cloud users, the belief is

that a major benefit of the cloud is not thinking about the underlying

infrastructure. But, in fact, understanding the underlying infrastructure is

critical to unleashing the value and optimal performance of a cloud deployment.

Even more so, HPC application owners need in-depth insight and, therefore, a

trusted hardware platform with co-design and portability built in from the

ground up and solidified through long-running cloud provider partnerships. ...

In other words, the standard lift-and-shift approach to cloud migration is not

an option. The need for blazing fast performance with complex parallel codes

means fine-tuning hardware and software. That’s critical for performance and for

cost optimization, says Amy Leeland, director of hyperscale cloud software and

solutions at Intel. “Software in the cloud isn’t always set by default to use

Intel CPU extensions or embedded accelerators for optimal performance, even

though it is so important to have the right software stack and optimizations to

unlock the potential of a platform, even on a public cloud,” she explains.
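As a rough illustration of the point about CPU extensions, the sketch below checks which instruction-set extensions a Linux cloud instance actually exposes before a tuned build is deployed. It assumes an x86 Linux guest where `/proc/cpuinfo` carries a `flags` line; the `WANTED` list and the helper names are hypothetical and not something the article prescribes.

```python
from pathlib import Path

# Hypothetical wish list: extensions a tuned HPC build might rely on.
# The names follow the flag spelling used in Linux /proc/cpuinfo on x86.
WANTED = ("avx2", "avx512f", "amx_tile")


def parse_cpu_flags(cpuinfo_text: str) -> set:
    """Return the feature flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()  # no 'flags' line found (e.g. non-x86 or empty input)


def missing_extensions(flags: set, wanted=WANTED) -> list:
    """List the wanted extensions that the instance does not advertise."""
    return [name for name in wanted if name not in flags]


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        flags = parse_cpu_flags(cpuinfo.read_text())
        print("missing extensions:", missing_extensions(flags))
```

A check like this only shows what the hardware advertises; whether a given library or binary actually uses those extensions still depends on how it was built and configured.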

NSA, CISA say: Don't block PowerShell, here's what to do instead

Defenders shouldn't disable PowerShell, a scripting language, because it is a

useful command-line interface for Windows that can help with forensics, incident

response and automating desktop tasks, according to joint advice from the US spy

service the National Security Agency (NSA), the US Cybersecurity and

Infrastructure Security Agency (CISA), and the New Zealand and UK national

cybersecurity centres. ... So, what should defenders do? Remove PowerShell?

Block it? Or just configure it? "Cybersecurity authorities from the United

States, New Zealand, and the United Kingdom recommend proper configuration and

monitoring of PowerShell, as opposed to removing or disabling PowerShell

entirely," the agencies say. "This will provide benefits from the security

capabilities PowerShell can enable while reducing the likelihood of malicious

actors using it undetected after gaining access into victim networks."

PowerShell's extensibility, and the fact that it ships with Windows 10 and 11,

give attackers a means to abuse the tool.

How companies are prioritizing infosec and compliance

“This study confirmed our long-standing theory that when security and

compliance have a unified strategy and vision, every department and employee

within the organization benefits, as does the business customer,” said

Christopher M. Steffen, managing research director of EMA. “Most organizations

view compliance and compliance-related activities as ‘the cost of business,’

something they have to do to conduct operations in certain markets.

Increasingly, forward-thinking organizations are looking for ways to maximize

their competitive advantage in their markets, and having a best-in-class data

privacy program or compliance program is something that more savvy customers

are interested in, especially in organizations with a global reach. Compliance

is no longer a ‘table stakes’ proposition: comprehensive compliance programs

focused on data security and privacy can be the difference in very tight

markets and are often a deciding factor for organizations choosing one vendor

over another.”

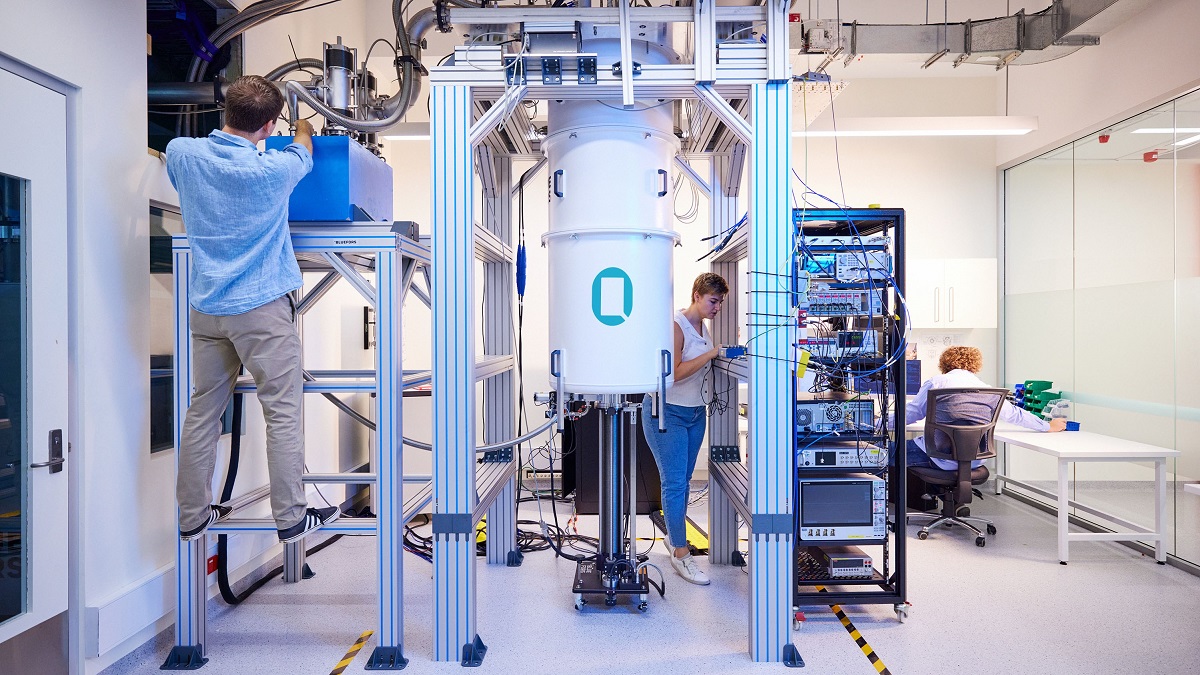

IDC Perspective on Integration of Quantum Computing and HPC

Quantum and classical hardware vendors are working to develop quantum and

quantum-inspired computing systems dedicated to solving HPC problems. For

example, using a co-design approach, quantum start-up IQM is mapping quantum

applications and algorithms directly to the quantum processor to develop an

application-specific superconducting computer. The result is a quantum system

optimized to run particular applications such as HPC workloads. In

collaboration with Atos, quantum hardware start-up Pasqal is working to

incorporate its neutral-atom quantum processors into HPC environments.

NVIDIA’s cuQuantum Appliance and cuQuantum software development kit provide

enterprises with the quantum simulation hardware and developer tools needed to

integrate and run quantum simulations in HPC environments. At a more global

level, the European High Performance Computing Joint Undertaking (EuroHPC JU)

announced its funding for the High-Performance Computer and Quantum Simulator

(HPCQS) hybrid project.

Australian researchers develop a coherent quantum simulator

“What we’re doing is making the actual processor itself mimic the single

carbon-carbon bonds and the double carbon-carbon bonds,” Simmons explains. “We

literally engineered, with sub-nanometre precision, to try and mimic those

bonds inside the silicon system. So that’s why it’s called a quantum analog

simulator.” Using the atomic transistors in their machine, the researchers

simulated the covalent bonds in polyacetylene. According to the SSH theory,

there are two different scenarios in polyacetylene, called “topological

states” – “topological” because of their different geometries. In one state,

you can cut the chain at the single carbon-carbon bonds, so you have double

bonds at the ends of the chain. In the other, you cut the double bonds,

leaving single carbon-carbon bonds at the ends of the chain and isolating the

two atoms on either end due to the longer distance in the single bonds. The

two topological states show completely different behaviour when an electrical

current is passed through the molecular chain. That’s the theory. “When we

make the device,” Simmons says, “we see exactly that behaviour. So that’s

super exciting.”
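The difference between the two chain terminations can be sketched numerically with the winding number of the standard SSH model. The code below is a classical toy calculation, not the researchers' method. It assumes the textbook two-band form h(k) = v + w·e^{ik}, where `v` is the hopping strength on the bond at the chain end and `w` the strength on the next bond. The numeric values are illustrative only, with the shorter double bond treated as the stronger hopping.

```python
import cmath
import math


def ssh_winding_number(v: float, w: float, steps: int = 2000) -> int:
    """Winding number of h(k) = v + w*exp(ik) around the origin, k in [0, 2*pi].

    Assumes v > 0, w > 0 and v != w (at v == w the gap closes and the
    winding number is undefined).
    """
    total = 0.0
    previous = cmath.phase(v + w)  # phase at k = 0
    for i in range(1, steps + 1):
        k = 2 * math.pi * i / steps
        current = cmath.phase(v + w * cmath.exp(1j * k))
        delta = current - previous
        # Unwrap jumps across the branch cut at +/- pi.
        if delta > math.pi:
            delta -= 2 * math.pi
        elif delta < -math.pi:
            delta += 2 * math.pi
        total += delta
        previous = current
    return round(total / (2 * math.pi))


# Chain ends on strong (double) bonds: trivial state, no isolated end atoms.
print(ssh_winding_number(v=1.0, w=0.5))
# Chain ends on weak (single) bonds: topological state with end-localized modes.
print(ssh_winding_number(v=0.5, w=1.0))
```

In this picture, a winding number of 1 corresponds to the termination that leaves weakly coupled atoms at the chain ends, and 0 to the termination that does not.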

Is Kubernetes key to enabling edge workloads?

Lightweight and deployed in milliseconds, containers enable compatibility

between different infrastructure environments and apps running across

disparate platforms. Isolating edge workloads in containers protects them from

cyber threats while microservices let developers update apps without worrying

about platform-level dependencies. Benefits of orchestrating edge containers

with Kubernetes include:

- Centralized Management: Users control the entire app deployment across on-prem, cloud, and edge environments through a single pane of glass.

- Accelerated Scalability: Automatic network rerouting and the capability to self-heal or replace existing nodes in case of failure remove the need for manual scaling.

- Simplified Deployment: Cloud-agnostic, DevOps-friendly, and deployable anywhere from VMs to bare metal environments, Kubernetes grants quick and reliable access to hybrid cloud computing.

- Resource Optimization: Kubernetes maximizes the use of available resources on bare metal and provides an abstraction layer on top of VMs optimizing their deployment and use.
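The self-healing behaviour described above rests on the idea of reconciling observed state toward a desired state. The sketch below is a toy model of that idea in plain Python and does not use the Kubernetes API. The function name, pod names, and node names are all hypothetical.

```python
from collections import Counter


def reconcile(desired: int, placements: dict, healthy_nodes: list) -> dict:
    """Return new pod -> node placements that satisfy the desired replica count.

    Pods on failed nodes are dropped and replacements are scheduled on the
    least-loaded healthy node. This is a simplification for illustration.
    """
    if not healthy_nodes:
        return {}  # nothing can be scheduled without a healthy node

    # Keep only pods whose node is still healthy, trimming any surplus.
    kept = {pod: node for pod, node in placements.items() if node in healthy_nodes}
    kept = dict(list(kept.items())[:desired])

    # Track how many pods each healthy node currently runs.
    load = Counter({node: 0 for node in healthy_nodes})
    load.update(kept.values())

    next_id = 0
    while len(kept) < desired:
        name = f"pod-{next_id}"
        next_id += 1
        if name in kept:
            continue  # name already taken by a surviving pod
        target = min(healthy_nodes, key=lambda node: load[node])
        kept[name] = target
        load[target] += 1
    return kept


# Node "edge-b" has failed; its two pods are replaced on the remaining nodes.
before = {"pod-0": "edge-a", "pod-1": "edge-b", "pod-2": "edge-b"}
after = reconcile(3, before, ["edge-a", "edge-c"])
print(after)
```

A real orchestrator runs this kind of comparison continuously and takes many more constraints into account, such as resource requests and affinity rules.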

Canada Introduces Infrastructure and Data Privacy Bills

The bill sets up a clear legal framework and details expectations for critical

infrastructure operators, says Sam Andrey, a director at think tank

Cybersecure Policy Exchange at Toronto Metropolitan University. The act also

creates a framework for businesses and government to exchange information on

the vulnerabilities, risks and incidents, Andrey says, but it does not address

some other key aspects of cybersecurity. The bill should offer "greater

clarity" on the transparency and oversight into what he says are "fairly

sweeping powers." These powers, he says, could perhaps be monitored by the

National Security and Intelligence Review Agency, an independent government

watchdog. The bill also lacks provisions to protect "good faith" researchers. "We would

urge the government to consider using this law to require government agencies

and critical infrastructure operators to put in place coordinated

vulnerability disclosure programs, through which security researchers can

disclose vulnerabilities in good faith," Andrey says.

Prioritize people during cultural transformation in 3 steps

Addressing your employees’ overall well-being is also critical. Many workers

who are actively looking for a new job say they’re doing so because their

mental health and well-being have been negatively impacted in their current

role. Increasingly, employees are placing greater value on their well-being

than on their salary and job title. This isn’t a new issue, but it’s taken on

a new urgency since COVID pushed millions of workers into the remote

workplace. For example, a 2019 Buffer study found that 19 percent of remote

workers reported feeling lonely working from home – not surprising, since most

of us were forced to severely limit our social interactions outside of work as

well. Leaders can help address this by taking actions as simple as introducing

more one-on-one meetings, which can boost morale. One-on-one meetings are

essential to promoting ongoing feedback. When teams worked together in an

office, communication was more efficient mainly because employees and managers

could meet and catch up organically throughout the day.

Pathways to a Strategic Intelligence Program

Strong data visualization capabilities can also be a huge boost to the

effectiveness of a strategic intelligence program because they help executive

leadership, including the board, quickly understand and evaluate risk

information. “There’s an overwhelming amount of data out there and so it’s

crucial to be able to separate the signal from the noise,” he says. “Good data

visualization tools allow you to do that in a very efficient, impactful and

cost-effective manner, and to communicate information to busy senior leaders

in a way that is most useful for them.” Calagna agrees that data visualization

tools play an important role in bringing strategic intelligence to life for

leaders across functions within any organization, helping them to understand

complex scenarios and insights more easily than narrative and other report

forms may permit. “By quickly turning high data volumes into complex analyses,

data visualization tools can enable organizations to relay near real-time

insights and intelligence that support better informed decision-making,” she

says. Data visualization tools can help monitor trends and assumptions that

impact strategic plans and market forces and shifts that will inform strategic

choices.

Quote for the day:

"Patience puts a crown on the head."

-- Ugandan Proverb
