Why data literacy needs to be part of a company's DNA

"Companies with lower levels of data literacy in the workforce will be at a competitive disadvantage," said Martha Bennett, vice president and principal analyst, Forrester. "It's also important to stress that different roles have different requirements for data literacy; advanced firms also understand that increasing data literacy is not a once-and-done training exercise, it's a continuous process." These days, everyone in an organization needs to be data literate, and the organization must establish a well-rounded data literacy program to ensure effective decision making. The programs must address the capacity to collect, analyze, and disseminate data tailored to the needs of diverse organizational roles. "Lack of data literacy puts you at a disadvantage, and can lead to potentially disastrous outcomes," Bennett said, "and we're not just talking about a business context here, the same applies in our personal lives." Numbers play a role in daily decisions, both in business and in our personal lives. Quantitative information must be evaluated, whether it's predicting an event, considering the increased risk of developing disease, how people lean politically, or how popular a product or service is.

5 reasons to choose PyTorch for deep learning

One of the primary reasons that people choose PyTorch is that the code they look at is fairly simple to understand; the framework is designed and assembled to work with Python instead of often pushing up against it. Your models and layers are simply Python classes, and so is everything else: optimizers, data loaders, loss functions, transformations, and so on. Because PyTorch runs in eager execution mode rather than building the static execution graph of traditional TensorFlow (yes, TensorFlow 2.0 does offer eager execution, but it’s a touch clunky at times), it’s very easy to reason about your custom PyTorch classes, and you can debug them with TensorBoard or with standard Python techniques, all the way from print() statements to generating flame graphs from stack trace samples. This all adds up to a very friendly welcome for those coming into deep learning from other data science frameworks such as Pandas or Scikit-learn. PyTorch also has the plus of a stable API that has only had one major change from the early releases to version 1.3 (that being the change of Variables to Tensors).
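To make the "everything is a Python class" point concrete, here is a minimal sketch rather than code from the original article. The `TinyClassifier` name, the layer sizes, and the random data are all hypothetical; the only PyTorch pieces used are `nn.Module`, `nn.Linear`, `nn.CrossEntropyLoss`, and `torch.optim.SGD`. The model is an ordinary class, and because execution is eager, a plain print() inside `forward` shows real values as soon as the line runs.

```python
import torch
from torch import nn


class TinyClassifier(nn.Module):
    """A hypothetical two-layer network, written as a plain Python class."""

    def __init__(self, in_features: int = 4, hidden: int = 8, classes: int = 3):
        super().__init__()
        self.hidden = nn.Linear(in_features, hidden)
        self.out = nn.Linear(hidden, classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = torch.relu(self.hidden(x))
        # Eager execution: this print runs immediately on a concrete tensor.
        print("hidden activations shape:", tuple(h.shape))
        return self.out(h)


torch.manual_seed(0)  # fixed seed so repeated runs behave the same

model = TinyClassifier()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # optimizer is an object too
loss_fn = nn.CrossEntropyLoss()                          # and so is the loss function

# Made-up data: 5 samples with 4 features each, and 3 possible classes.
inputs = torch.randn(5, 4)
targets = torch.tensor([0, 1, 2, 1, 0])

# One training step, written as ordinary Python statements.
logits = model(inputs)
loss = loss_fn(logits, targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print("loss:", loss.item())
```

Since every line executes as it is reached, you could drop a breakpoint or a standard Python debugger call inside `forward` and inspect `h` directly, which is the kind of debugging the paragraph above describes.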

AI: It's time to tame the algorithms and this is how we'll do it

To achieve this objective, the Commission wants to create an "ecosystem of trust" for AI. And it starts with placing a question mark over facial recognition. The organisation said it would consider banning the technology altogether. Commissioners are planning to launch a debate about "which circumstances, if any" could justify the use of facial recognition. The EU's white paper also suggests having different rules, depending on where and how an AI system is used. A high-risk system is one used in a critical sector, like healthcare, transport or policing, and which has a critical use, such as causing legal changes, or deciding on social-security payments. Such high-risk systems, said the Commission, should be subject to stricter rules, to ensure that the application doesn't transgress fundamental rights by delivering biased decisions. In the same way that products and services entering the European market are subject to safety and security checks, argues the Commission, so should AI-powered applications be controlled for bias. The dataset feeding the algorithm could have to go through conformity assessments, for instance. The system could also be required to be entirely retrained in the EU.

Why You Should Revisit Value Discovery

There are at least two reasons for the shift. The first is that we are in a digital world. Now the cost of creating new products can be extraordinarily low (a developer, a laptop). And the cost factor has given rise to new methodologies like Lean Startup and concepts like Fail Fast, Fail Cheap. As enterprises adopt these techniques, they push more projects into corporate innovation pipelines. More on the impact of that later. The second reason relates to software development and delivery methods. It is now possible, often necessary, to chunk software into smaller and smaller units of work and push these into a live test environment with users relatively quickly. Both of these approaches are creating problems. They reinforce the view that more is better. And both also reinforce a challenging proposition: enterprises can be experimental laboratories. Are you starting to get the picture? More ideas of dubious and yet-to-be-tested value find their way into your workflow! Perhaps enterprises can convert this negative into a positive, but doing so means stitching together a value discovery process with very good value management and delivery.

More And More Organizations Injecting Emotional Intelligence Into Their Systems

A growing number of organizations are injecting emotional intelligence into their systems. These include AI capabilities, such as machine learning and voice and facial recognition, which can better detect and appropriately respond to human emotion, according to Deloitte’s 11th annual Tech Trends 2020 report. The trends also indicate more and more organizations using digital twins, human experience platforms and new approaches to enterprise finance, which can redefine the future of tech innovation. Deloitte’s 11th annual Tech Trends 2020 report captures the intersection of digital technologies, human experiences, and increasingly sophisticated analytics and artificial intelligence technologies in the modern enterprise. The report explores digital twins, the new role technology architects play in business outcomes, and affective computing-driven “human experience platforms” that are redefining the way humans and machines interact. Tech Trends 2020 also shares key insights and prescriptive advice for business and technology leaders so they can better understand what technologies will disrupt their businesses during the next 18 to 24 months.

7 Tips to Improve Your Employees' Mobile Security

"A bit of a trade-off has to happen, as they're managing an aspect of something that is personally owned by the employee, and they're using it for all kinds of things besides work," says Sean Ryan, a Forrester analyst serving security and risk professionals. On nights and weekends, for example, employees are more likely to let their guards down and connect to public Wi-Fi or neglect security updates. Sure, some people are diligent about these things, while some "just don't care," Ryan adds. This attitude can put users at greater risk for phishing, which is a common attack vector for mobile devices, says Terrance Robinson, head of enterprise security solutions at Verizon. Employees are also at risk for data leakage and man-in-the-middle attacks, especially when they hop on public Wi-Fi networks or download apps without first checking requested permissions. Mobile apps are another hot attack vector for smartphones, used in nearly 80% of attacks. A major challenge in strengthening mobile device security is changing users' perception of it. Brian Egenrieder, chief risk officer at SyncDog, says he sees "negativity toward it, as a whole."

Recent ransomware attacks define the malware's new age

Over the past two years, however, ransomware has come back with a vengeance. Mounir Hahad, head of the Juniper Threat Labs at Juniper Networks, sees two big drivers behind this trend. The first has to do with the vagaries of cryptocurrency pricing. Many cryptojackers were using their victims' computers to mine the open source Monero currency; with Monero prices dropping, "at some point the threat actors will realize that mining cryptocurrency was not going to be as rewarding as ransomware," says Hahad. And because the attackers had already compromised their victims' machines with Trojan downloaders, it was simple to launch a ransomware attack when the time was right. "I was honestly hoping that that prospect would be two to three years out," says Hahad, "but it took about a year to 18 months for them to make that U-turn and go back to their original attack." The second driver is that more attacks have focused on striking production servers that hold mission-critical data. "If you get a random laptop, an organization may not care as much," says Hahad. "But if you get to the servers that fuel their day-to-day business, that has so much more grabbing power."

To Disrupt or Not to Disrupt?

First, consider the choice of technology. Clayton Christensen long distinguished between disruptive technologies (which initially perform worse than existing technologies on some dimension) and sustaining technologies (which do not). Most companies pursue sustaining technologies as a way of retaining existing customers and keeping a healthy profit margin. The reason to choose a technology that is “worse” initially is its potential to outperform older technologies in the relatively near future. Moreover, disruptive technologies tend to be what established companies either are not good at or do not want to adopt for fear of alienating their customer base. In other words, the very existence of disruptive technologies represents an opportunity for startups. Which brings us to the choice of customer for a disruptive entrepreneur. Christensen noted that, if you want to sell a product that underperforms existing products in some dimension (say, a laptop with less computing power), you need to find either a way of selling at a discount so that a lack of performance can be compensated for or a set of customers who do not strongly value that performance more than some other feature (for example, longer battery life).

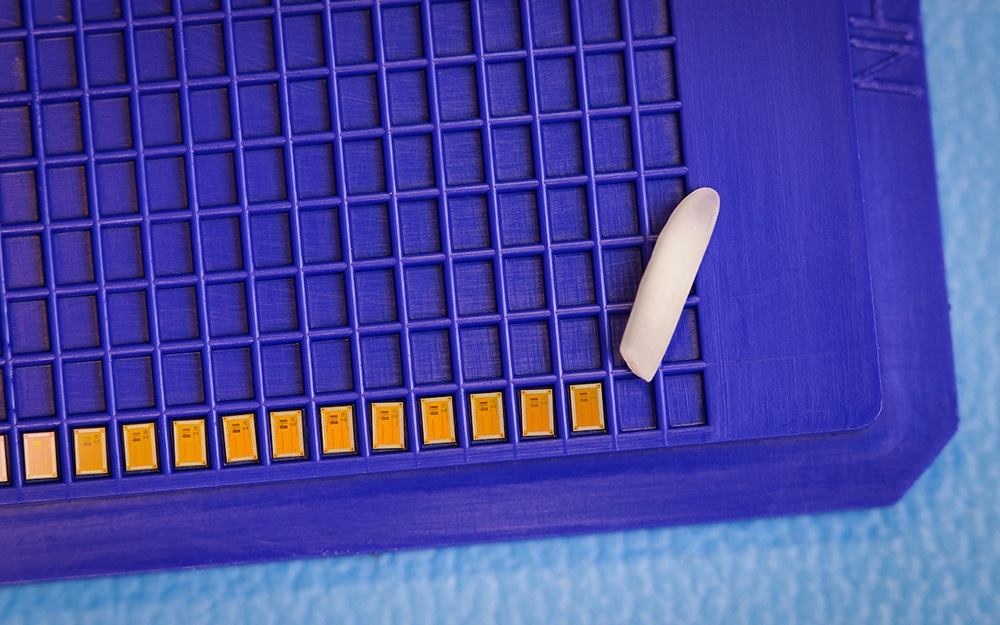

New Wi-Fi chip for the IoT devices consumes 5,000 times less energy

The invention is based on a technique called backscattering. The transmitter does not generate its own signal; instead, it takes incoming signals from nearby devices (like a smartphone) or a Wi-Fi access point, modifies them to encode its own data, and then reflects the new signals onto a different Wi-Fi channel to another device or access point. This approach requires much less energy and gives electronics manufacturers much more flexibility. With the tiny Wi-Fi chip, IoT devices will no longer need frequent charging or large batteries, and smart home devices could work completely wirelessly, in some cases even without batteries. The developers note that the new transmitter will significantly increase the operating time on a single charge of various battery-powered Wi-Fi sensors and IoT devices, including, for example, portable video cameras, smart voice speakers, and smoke detectors. In some cases, the reduced energy consumption will allow sensor manufacturers to make their devices even more compact by switching to lower-capacity batteries.

The importance of talent and culture in tech-enabled transformations

Many industrial companies may assume that top technology talent is out of reach and that their brand and even location might prevent them from attracting the kind of people they need. But technology professionals are less biased against industrial companies than might be expected. Only 7.4 percent of the respondents to a 2018 survey of technology professionals considered their employer’s industry important. Compensation, the work environment, and professional development—all factors within an industrial company’s control—were the factors that matter most to technology talent ... One leading North American industrial company looking to embark on a tech-enabled transformation prioritized bringing in a chief digital officer (CDO) who had credibility among technologists. The company hired a CDO who previously had led businesses at major technology companies and was able to attract three leading product managers and designers from similar organizations. The company used these new hires—who were intimately familiar with rapid, user-centric design—to signal its commitment to world-class digital development.

Quote for the day:

"If you care enough for a result, you will most certainly attain it." -- William James
