Debunking five myths about process automation

Software solutions can be costly, nearly clearing out entire IT budgets in one fell swoop and running up tabs for maintenance and service fees down the road. Often the change in productivity is not nearly enough to compensate for the forfeited capital, leaving management with a disappointing return on investment. Thankfully, not all systems carry a hefty price tag. Cloud-based apps have disrupted the pricing scale with rates so low that even small non-profit organizations can automate workflows without breaking the budget. SaaS models and cloud storage enable low, flexible monthly rates and only a small one-time start-up fee. ... Automation systems offering no-code platforms solve multiple problems at once. Since data is securely stored in the cloud, server space isn’t required. The tedious responsibilities of creating new versions, fixing bugs, and maintaining the software fall on the service provider and don’t incur additional expenses for the user; it’s all neatly packed into the monthly rate.

How to move to a disruptive network technology with minimal disruption

Start with the open source and open specification projects, suggests Amy Wheelus, network cloud vice president at AT&T. For the cloud infrastructure, the go-to open source project is OpenStack, with many operators and different use cases, including at the edge. For the service orchestration layer, ONAP is the largest project in open source, she notes. "At AT&T we have launched our mobile 5G network using several open source software components, OpenStack, Airship and ONAP." Weyrick recommends "canarying" traffic before relying on it in production. "Bringing up a new, unused private subnet on existing production servers alongside existing interfaces and transitioning less-critical traffic, such as operational metrics, is one method," he says. "This allows you to get experience deploying and operating the various components of the SDN, prove operational reliability and gain confidence as you increase the percentage of traffic being transited by the new stack."
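
The idea of raising the percentage of traffic on the new stack step by step can be illustrated with a minimal Python sketch. It is not how any particular SDN steers traffic: the function name `use_new_stack`, the flow identifiers, and the hash-based bucketing are all assumptions made for illustration. The point is only that a stable hash keeps each flow on the same path while the rollout percentage grows.

```python
import hashlib


def use_new_stack(flow_id: str, percent: int) -> bool:
    """Decide whether a flow should transit the new stack.

    Hypothetical helper: flow_id is any stable identifier (for example a
    metrics stream name) and percent is the share of flows to move over.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Hash the identifier so the same flow always lands in the same bucket.
    digest = hashlib.sha256(flow_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    # Buckets 0..percent-1 use the new stack; the rest stay on the old one.
    return bucket < percent


if __name__ == "__main__":
    flows = [f"metrics-stream-{i}" for i in range(1000)]
    for percent in (1, 10, 50, 100):
        moved = sum(use_new_stack(f, percent) for f in flows)
        print(f"{percent:3d}% target: {moved} of {len(flows)} flows on the new stack")
```

Because the bucket for a flow never changes, a flow that moved to the new stack at 10% stays there at 50%, which keeps the rollout from flapping traffic back and forth.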

Wearable technology in the workplace and data protection law

Wearable technology is not always quite as extreme, with many employees reaping the benefits of fitness bands and smart watches. Wearable technology can also be used to help keep employees safe. For example, Oxfordshire County Council recently announced that waste recycling teams will be fitted with body cameras to deter physical and verbal abuse from the public. Whatever the technology, there will always be arguments for and against the introduction of workplace accessories, with the importance of wellbeing, safety and productivity, balanced against the adverse costs, legitimate privacy concerns, risks of discrimination and potential staff morale issues. However, given the breadth of personal data the technologies are likely to obtain, and the real risk of over-collection or that the data is used for an illegitimate purpose, the biggest adversary for wearable technology in the workplace is likely to be data protection law.

What is BDD and What Does it Mean for Testers?

The BDD approach favors system testability. I dare to say that this scheme works better with microservice architecture than with monolithic systems because the former allows adding and working on all layers independently per feature. This scheme facilitates testing as we think about the test before any line of code, thus providing greater testability. This reminds me of the Scrum Master course I took by Peregrinus, where the instructor mentioned that in Agile, the important thing is to know how to "cut the cake." Think of a multi-layered cake. Each layer adds a new flavor that is to be combined with the other layers. If the cake is cut horizontally, some people may only get the crust, some a chocolate middle layer, another a layer of vanilla, or a mix of the two, etc. In this scenario, no one would get to taste the cake as it was fully meant to be enjoyed and no one could say they actually tasted the cake.
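
As a rough illustration of thinking about the test before the code, here is a small Python sketch of a scenario written in the Given/When/Then shape commonly used in BDD. The `Cart` class and its methods are hypothetical and exist only to give the scenario something to exercise; in practice the scenario would be written first and the class afterwards.

```python
class Cart:
    """Hypothetical shopping cart used only to illustrate the scenario."""

    def __init__(self) -> None:
        self._lines: list[tuple[str, int, int]] = []

    def add(self, name: str, price_cents: int, qty: int = 1) -> None:
        # Store each line as (name, unit price in cents, quantity).
        self._lines.append((name, price_cents, qty))

    def total_cents(self) -> int:
        # Sum unit price times quantity over all lines.
        return sum(price * qty for _, price, qty in self._lines)


def test_total_reflects_added_items() -> None:
    # Given an empty cart
    cart = Cart()
    # When the shopper adds two coffees at 3.50 each
    cart.add("coffee", 350, qty=2)
    # Then the total is 7.00
    assert cart.total_cents() == 700


if __name__ == "__main__":
    test_total_reflects_added_items()
    print("scenario passed")
```

The scenario describes behavior a user would recognize, from input to visible result, rather than the internals of any single layer.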

The danger of having a cloud-native IT policy

The core benefit is simplicity. Because you’re using only the native interfaces from a single cloud provider, there is no heterogeneous complexity to worry about for your security system, database, compute platforms, and so on; they are uniform and well-integrated because they sprang from the same R&D group at a single provider. As a result, the cloud services work well together. A cloud-native policy limits the cloud services you can use, in return for faster, easier deployment. The promise is better performance, better integration, and a single throat to choke when things go wrong. The downside is pretty obvious: Cloud-native means lock-in, and while some lock-in is unavoidable, the more you’re using cloud-native interfaces and APIs, the more you’re married to that specific provider. They got ya. In an era where IT is trying to get off of expensive, proprietary enterprise databases, platforms, and software systems, the lock-in aspect of cloud-native computing may be an understandably tough sell in most IT departments.

5G use cases for the enterprise: Better productivity and new business models

With 5G potentially allowing much faster download speeds and lower latency for users, he argued that a smartphone will have the same — if not better — potential for productivity as a PC. "With 5G, if you want to run something that requires a lot of processing power that's traditionally only on PCs, you'll be able to run that using the edge capability of the operator on 5G. So all of a sudden, your smartphone starts to become a more powerful platform for productivity," Amon said. Qualcomm's enthusiasm for 5G was unsurprisingly echoed by Huawei. "With a super-fast connection and with low latency, we could put a lot of things, heavy things in the cloud — in our hand," said Li Changzhu, VP of the handset product line at Huawei. The Chinese telecommunications company has used MWC to showcase its brand new Huawei Mate X foldable 5G smartphone. With a large fold-out screen and 5G connectivity, Huawei is positioning it at least in part as an enterprise productivity device.

Why AI and ML are not cybersecurity solutions--yet

Strong AI, capable of learning and solving virtually any set of diverse problems as an average human can, does not exist yet, and it is unlikely to emerge within the next decade. Frequently, when someone says AI, they mean Machine Learning. The latter can be very helpful for what we call intelligent automation: a reduction of human labor without loss of quality or reliability of the optimized process. However, the more complicated a process is, the more expensive and time-consuming it is to deploy a tenable ML technology to automate it. Often, ML systems merely assist professionals by taking care of routine and trivial tasks and empowering people to concentrate on more sophisticated tasks. ... Although application security best practice has been discussed for years, there are still regular horror stories in the media, often due to a failure in basic security measures. Why are the basics still not being followed by significant numbers of businesses?

Microsoft boosts HoloLens performance, seeks corporate users

Speaking during the product’s launch, Microsoft Chief Executive Officer Satya Nadella said Microsoft had a responsibility to be a “trusted partner” for companies using its products, such as HoloLens, and that businesses and institutions shouldn’t be dependent on the tech giant. “The defining technologies of our times, AI and mixed reality, cannot be the province of a few people, a few companies or even few countries,” he said. “They must be democratized so everyone can benefit.” Unlike virtual reality goggles, which block out a user’s surroundings, the augmented-reality HoloLens overlays holograms on a user’s existing environment, letting them see things like digital instructions on complex equipment. Microsoft is focusing on corporate customers with HoloLens, and is trying to make the devices more useful right out of the box with prepared applications, rather than require months to write customized programs, says spokesman Greg Sullivan.

Security is battling to keep pace with cloud adoption

The survey found that enterprises are inadvertently introducing complexity into their environments by deploying multiple systems on-premises as well as across multiple private and public clouds. That complexity is compounded by a lack of the integrated tools and training needed to manage and secure hybrid cloud environments. Respondents also cited a lack of integration across tools, and a shortage of qualified personnel or insufficient training for using the tools, as key roadblocks to achieving cross-environment security management. While 59% of respondents said they use two or more different firewalls in their environment and 67% said they are also using two or more public cloud platforms, only 28% said they are using tools that can work across multiple environments to manage network security.

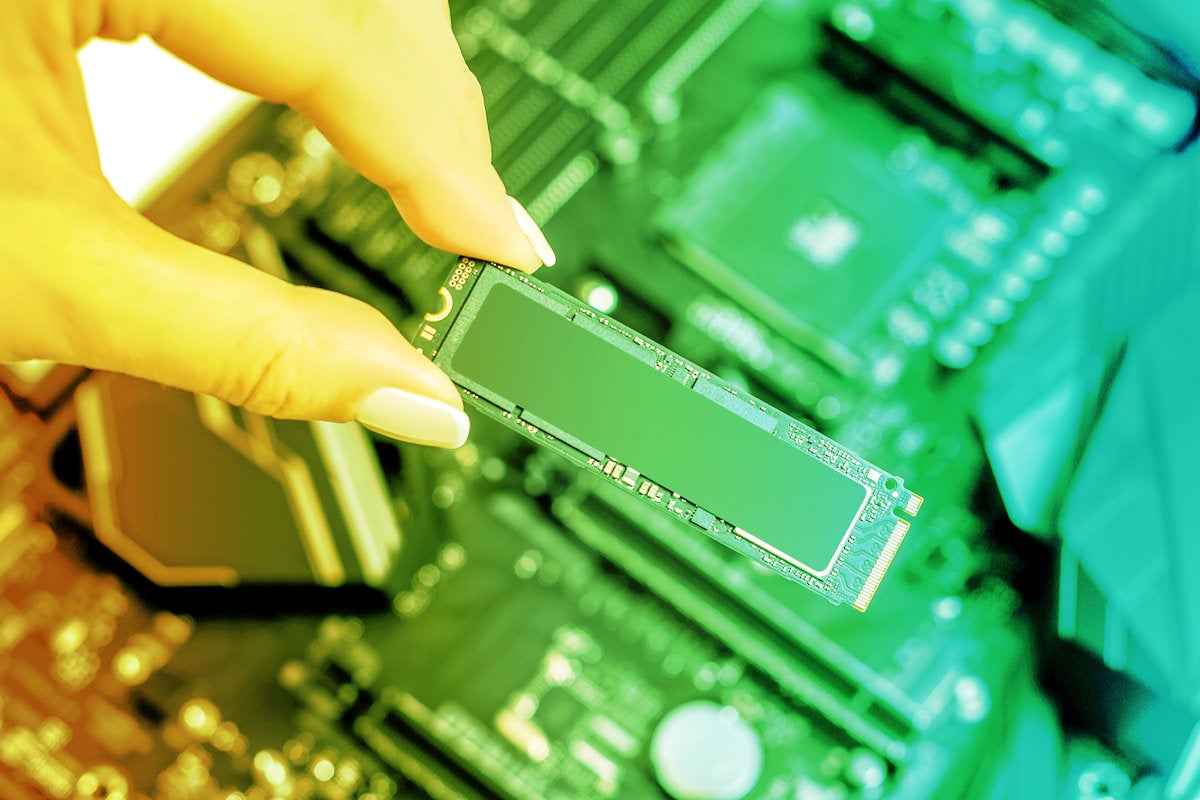

Western Digital launches SSDs for different enterprise use cases

The SN630 is a read-intensive drive rated for two drive writes per day, which means it has the endurance to write the full capacity of the drive two times per day. So a 1TB version can write up to 2TB per day. But these drives are smaller capacity, as WD traded capacity for endurance. The SN720 is a boot device optimized for edge servers and hyperscale cloud with a lot more write performance. Its random write speed is 10x that of the SN630, and the drive is optimized for fast sequential writes. Both use NVMe, which is predicted to replace the ageing SATA interface. SATA was first developed around the turn of the century as a replacement for the IDE interface and has its legacy in hard disk technology. It is the single biggest bottleneck in SSD performance. NVMe uses the much faster PCI Express protocol, which is much more parallel and has better error recovery. Rather than squeeze any more life out of SATA, the industry is moving to NVMe in a big way at the expense of SATA. IDC predicts SATA product sales peaked in 2017 at around $5 billion and are headed to $1 billion by 2022.
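
The drive-writes-per-day arithmetic above can be written out as a short Python sketch. The function names are hypothetical, and the five-year period in the usage example is an assumed figure for illustration, not a specification taken from the article or from WD.

```python
def daily_write_budget_tb(capacity_tb: float, dwpd: float) -> float:
    """Data that can be written per day at the rated endurance, in TB."""
    return capacity_tb * dwpd


def total_writes_tb(capacity_tb: float, dwpd: float, years: float) -> float:
    """Total data written over a period at the rated daily budget, in TB.

    The period is an input chosen by the caller (an assumption here);
    a 365-day year is used for simplicity.
    """
    return daily_write_budget_tb(capacity_tb, dwpd) * 365 * years


if __name__ == "__main__":
    # A 1TB drive rated at two drive writes per day, as in the text.
    print(daily_write_budget_tb(1.0, 2.0), "TB per day")
    # Assumed five-year period, purely illustrative.
    print(total_writes_tb(1.0, 2.0, 5), "TB over five years")
```

The daily budget scales linearly with capacity, so at the same rating a larger drive can absorb proportionally more writes per day.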

Quote for the day:

"It's not the size of the dog in the fight, it's the size of the fight in the dog." -- Mark Twain
