The role of open source in networking

The biggest gap in open source is probably management and support. Vendors keep adding to the code. For example, zero-touch provisioning is not part of the open source stack, but many SD-WAN vendors have added that capability to their products. Low-code/no-code development can also become a problem. Now that we have APIs, users are mixing and matching stacks rather than doing raw coding. We now have GUIs with various modules that communicate through a REST API. Essentially, you are taking open source modules and aggregating them. The problem with pure network function virtualization (NFV) is that many different software stacks are running on a common virtual hardware platform. The configuration, support, and logging for each stack still require quite a bit of integration work. Some SD-WAN vendors are taking a “single pane of glass” approach, where all network and security functions are administered from a common management view.

The Internet Has A New Problem: Repeating Random Numbers!

To understand the problem, it’s useful to take a 30-second tutorial on digital certificates. Those of you who managed to stay awake during math class will remember that RSA, a widely used form of asymmetric cryptography, uses two large prime numbers to create the public and private keys behind a digital certificate. The public key maps an input (that you want to keep secret) into a very large number space, while the private key reverses the transformation. The theory goes that since there are infinitely many prime numbers, there is a practically limitless supply of public/private key combinations (for a fixed key size the set is finite, but astronomically large). To make sure each key gets different primes, a random number generator (RNG) is used. Sounds pretty secure. Infinite is a big number. What could go wrong? Well, the real world is a bit different from math class. It seems the RNGs on many computing devices don’t actually draw from a practically limitless range; they produce a bounded set of values, which in turn generates a bounded set of primes and public/private key combinations. Once two devices land on the same prime, the keys of both can be broken.
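Why a repeated prime is fatal can be shown concretely. If two RSA moduli n1 = p*q1 and n2 = p*q2 share the prime p, then gcd(n1, n2) recovers p instantly, and dividing each modulus by p yields the other secret prime. The sketch below is a minimal illustration using tiny, hand-picked primes (real RSA primes are hundreds of digits long); the function name `find_shared_factors` is hypothetical. It checks every pair of keys, which is quadratic in the number of keys; large-scale scans of real keys have reportedly used a faster batch-GCD technique instead.

```python
import math
from itertools import combinations

# Toy primes for illustration only; real RSA primes are 1024 bits or more.
SHARED_P = 104_729      # a prime "reused" by two devices with poorly seeded RNGs
Q_A, Q_B = 7_919, 1_299_709


def find_shared_factors(moduli):
    """Return {(i, j): p} for every pair of moduli that share a nontrivial factor p."""
    shared = {}
    for (i, n1), (j, n2) in combinations(enumerate(moduli), 2):
        g = math.gcd(n1, n2)
        # g == 1: no common factor. g == n1 or n2: identical keys, gcd reveals nothing new.
        if g not in (1, n1, n2):
            shared[(i, j)] = g
    return shared


moduli = [
    SHARED_P * Q_A,           # device A's public modulus
    SHARED_P * Q_B,           # device B's public modulus (same p!)
    15_485_863 * 32_452_843,  # device C, generated with independent primes
]

for (i, j), p in find_shared_factors(moduli).items():
    # Knowing p, each cofactor (the other secret prime) follows by plain division.
    print(f"keys {i} and {j} share p={p}; q{i}={moduli[i] // p}, q{j}={moduli[j] // p}")
```

Only the public moduli are needed for this check, which is why repeated primes are exploitable by anyone who can collect certificates.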

Understanding the Darknet and Its Impact on Cybersecurity

Uses of the darknet are nearly as wide and diverse as those of the internet: everything from email and social media to file hosting and sharing, news websites, and e-commerce. Accessing it requires specific software, configurations, or authorization, often using nonstandard communication protocols and ports. Currently, two of the most popular ways to access the darknet are via overlay networks. The first is the aforementioned Tor; the second is I2P. Tor, originally an acronym for “The Onion Router,” is designed primarily to keep users anonymous. Like the layers of an onion, data is wrapped in multiple layers of encryption. Each relay removes one layer, which reveals the next relay, until the final layer is removed and the data is sent to its destination. Communication is bidirectional: data travels back and forth over the same circuit. On any given day, over one million users are active on the Tor network. I2P, which stands for the Invisible Internet Project, is designed for user-to-user (peer-to-peer) file sharing. It likewise encapsulates data within multiple layers of encryption.
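The layering described above can be illustrated with a toy model. In this hypothetical and deliberately insecure sketch, each relay shares a key with the sender, and the sender wraps the message once per relay, innermost (exit) layer first. Each relay can peel off only its own layer, and all it learns is the next hop. The XOR-with-SHA-256 keystream stands in for real encryption purely so the example needs only the standard library; actual Tor negotiates per-hop keys with each relay, uses real ciphers, and sends fixed-size cells, none of which is modeled here. The names `keystream_xor`, `wrap_onion`, and `peel` are illustrative.

```python
import hashlib
import json


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || offset). NOT secure.

    XOR is its own inverse, so the same call both encrypts and decrypts.
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        chunk = data[offset:offset + 32]
        out.extend(b ^ k for b, k in zip(chunk, pad))
    return bytes(out)


def wrap_onion(message: str, destination: str, route) -> str:
    """route: list of (relay_name, relay_key) from entry relay to exit relay."""
    next_hops = [name for name, _ in route[1:]] + [destination]
    data = message
    # Encrypt from the exit relay backward so the entry relay's layer is outermost.
    for (_, key), next_hop in reversed(list(zip(route, next_hops))):
        layer = json.dumps({"next": next_hop, "data": data}).encode()
        data = keystream_xor(key, layer).hex()
    return data


def peel(relay_key: bytes, onion_hex: str):
    """A relay removes its own layer, learning only the next hop and the inner payload."""
    layer = json.loads(keystream_xor(relay_key, bytes.fromhex(onion_hex)))
    return layer["next"], layer["data"]


route = [("guard", b"k1"), ("middle", b"k2"), ("exit", b"k3")]
current = wrap_onion("hello", "example.com", route)
for name, key in route:
    next_hop, current = peel(key, current)
    print(f"{name} forwards to {next_hop}")
```

Only the exit relay ever sees the plaintext and the destination, and only the entry relay knows who sent the onion; no single relay sees both.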

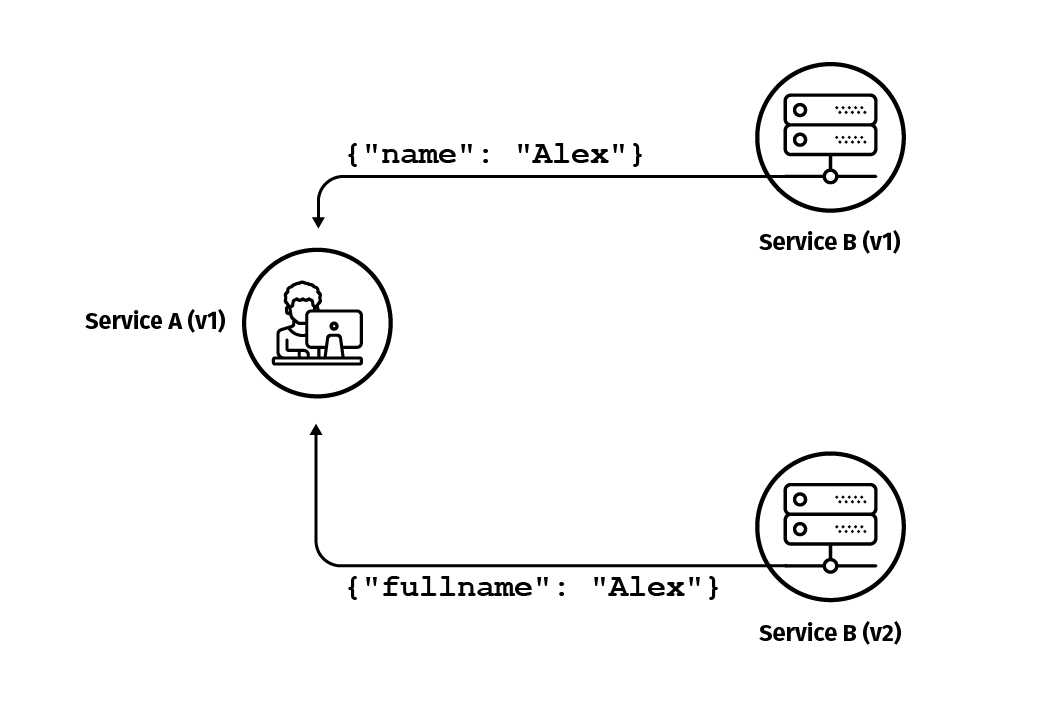

Tap compare is not meant to act as a direct substitute for any other testing technique; you will still need to write other kinds of tests, such as unit tests, component tests, or contract tests. However, it can help you detect regressions, so you can feel more confident about the quality of the new version of the service. More importantly, tap compare adds a new layer of quality assurance around your service. With unit tests, integration tests, and contract tests, the tests verify functionality based on your understanding of the system, so you supply the inputs and expected outputs during test development. Tap compare is different: the service is validated against production requests. You either capture a set of requests from the production environment and replay them against the new service, or you use traffic mirroring, where production traffic is sent to both the old version and the new version, and you compare the results.
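A minimal sketch of the comparison step follows, assuming the old and new versions can be called as plain functions that take a request dict and return a response dict (in practice they would be HTTP endpoints fed by captured or mirrored traffic). The names `tap_compare`, `old_version`, and `new_version` are hypothetical. The `ignore` parameter drops fields, such as request IDs or timestamps, that legitimately differ between runs and would otherwise show up as false regressions.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List

Handler = Callable[[dict], dict]


@dataclass
class Mismatch:
    request: dict
    old: dict
    new: dict


def tap_compare(requests: Iterable[dict], old: Handler, new: Handler,
                ignore: FrozenSet[str] = frozenset()) -> List[Mismatch]:
    """Send each recorded request to both versions and collect differing responses."""
    mismatches = []
    for req in requests:
        # Strip noisy fields before comparing so only meaningful differences remain.
        old_resp = {k: v for k, v in old(req).items() if k not in ignore}
        new_resp = {k: v for k, v in new(req).items() if k not in ignore}
        if old_resp != new_resp:
            mismatches.append(Mismatch(req, old_resp, new_resp))
    return mismatches


# Hypothetical services: the new version silently applies a bulk discount.
def old_version(req):
    return {"total": req["qty"] * 10, "request_id": "old-1"}


def new_version(req):
    unit = 9 if req["qty"] >= 100 else 10
    return {"total": req["qty"] * unit, "request_id": "new-1"}


captured = [{"qty": 1}, {"qty": 5}, {"qty": 150}]  # stands in for recorded production traffic
for m in tap_compare(captured, old_version, new_version, ignore=frozenset({"request_id"})):
    print(m)
```

Whether a reported mismatch is a regression or an intended change is still a human decision; the technique only surfaces the differences that real traffic exposes.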

Why are IoT platforms so darn confusing?

An Internet of Things (IoT) platform is the support software that connects edge hardware, access points, and data networks to other parts of the value chain (generally, the end-user applications). IoT platforms typically handle ongoing management tasks and data visualization, which allow users to automate their environment. You can think of these platforms as the middleman between the data collected at the edge and the user-facing SaaS or mobile application. That last point is key because, to me, an IoT platform is little more than a fancy name for the middleware that connects everything together. i-Scoop focuses on that aspect: “An IoT platform is a form of middleware that sits between the layers of IoT devices and IoT gateways (and thus data) on one hand and applications, which it enables to build, on the other.” Perhaps, though, IoT platform vendor KAA offers the most honest description. While acknowledging the middleware aspect, the vendor also allows that “an IoT platform can be wearing different hats depending on how you look at it.”
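To make the middleware framing concrete, here is a minimal in-process sketch in which devices publish readings and applications subscribe to topics, with a device registry standing in for the platform's management role. The class `IoTMiddleware` and its methods are hypothetical; production platforms typically build this layer on a messaging protocol such as MQTT, plus persistent storage, security, and device management far beyond what is shown.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Callback = Callable[[str, dict], None]


class IoTMiddleware:
    """Hypothetical middleware: routes readings from registered devices to subscribed apps."""

    def __init__(self) -> None:
        self._registry: Dict[str, str] = {}  # device_id -> topic (management data)
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def register_device(self, device_id: str, topic: str) -> None:
        self._registry[device_id] = topic

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, device_id: str, reading: dict) -> bool:
        """Deliver a reading to every subscriber of the device's topic.

        Returns False (and delivers nothing) for unregistered devices.
        """
        topic = self._registry.get(device_id)
        if topic is None:
            return False
        for callback in self._subscribers[topic]:
            callback(device_id, reading)
        return True


hub = IoTMiddleware()
hub.register_device("thermo-1", "building/temperature")

dashboard = []  # stands in for a user-facing SaaS or mobile app
hub.subscribe("building/temperature", lambda dev, r: dashboard.append((dev, r["celsius"])))

hub.publish("thermo-1", {"celsius": 21.5})   # delivered to the dashboard
hub.publish("rogue-9", {"celsius": 99.0})    # rejected: device not registered
```

Neither the device nor the application knows about the other; both only talk to the middle layer, which is the sense in which the platform is "middleware."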

Kaspersky Lab Launches New Threat Intelligence Service

Sergey Martsynkyan, Head of B2B Product Marketing at Kaspersky Lab, provided some insights into the new threat intelligence service. “Being aware of the most relevant zero-days, emerging threats and advanced attack vectors is key to an effective cybersecurity strategy,” he said. “However, manually collecting, analyzing and sharing threat data doesn’t provide the level of responsiveness required by an enterprise. There’s a need for a centralized point for accessible data sources and task automation.” According to Kaspersky Lab, one-third of enterprise CISOs feel overwhelmed by threat intelligence sources. They also tend to struggle to connect their threat intelligence with their SIEM solution. Kaspersky CyberTrace illustrates the blurring lines between different cybersecurity disciplines. The new service also highlights the growing importance of threat detection and remediation in the modern cybersecurity paradigm: a purely prevention-based model often allows cyber-attacks that get past its defenses to dwell on enterprise networks and wreak havoc behind the scenes.

RPA: the key players, and what’s unique about them

Of all the specialist players in RPA, Blue Prism is the only company listed on the stock market. We spoke to Pat Geary, the company’s Chief Evangelist. Pat has an interesting claim to fame in this space: it was he who first came up with the phrase RPA. Blue Prism puts quite a different emphasis on RPA. Indeed, Geary goes further and argues that many of the ‘claimed’ players in the RPA space are not actually RPA companies at all; rather, they sell what he calls RDA, robotic desktop automation. When we spoke to Mr Geary, he emphasized the word guardian: “Operational security guardians, resilience and backup guardians, audit guardians and governance guardians.” He says that what he calls RDA bypasses these guardians, “sneaking stuff in without passing the guardians.” He describes Blue Prism as providing a fortress RPA: “it’s absolutely bullet-proof,” he says. He likens the Blue Prism solution to a padded room, an area that is safe and allows for experiment, where the “business can do whatever they like in there, but they’re not going to break anything.”

Three Pillars with Zero Answers: Rethinking Observability with Ben Sigelman

The big challenge associated with metrics is dealing with high cardinality. Graphing metrics often gives humans the visibility to see that something is going wrong, and the associated metric can then be explored further by diving deeper into the data via an associated metadata tag, e.g. user ID, transaction ID, or geolocation. However, many of these tags have high cardinality (a very large number of distinct values), which makes it hard to query the data efficiently. The primary challenge with logging is the volume of data collected. Within a system based on a microservices architecture, the volume of logging is typically proportional to the number of services multiplied by the transaction rate. The total cost of maintaining the ability to query this data can be estimated by further multiplying the total number of transactions by the cost of networking and storage, and again by the number of weeks of retention required. For a large-scale system, this cost can be prohibitive.
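Both cost drivers are multiplicative, which is why they grow so quickly. The sketch below encodes them under stated assumptions: the number of distinct time series for one metric is bounded by the product of its tags' cardinalities, and logging cost follows the paragraph's model of services × transactions × per-line networking-and-storage cost × weeks of retention, assuming one log line per service per transaction by default. The function names and all figures are hypothetical.

```python
import math
from typing import Dict


def max_series(tag_cardinalities: Dict[str, int]) -> int:
    """Upper bound on distinct time series: product of each tag's distinct values."""
    return math.prod(tag_cardinalities.values())


def log_cost(services: int, tx_per_week: int, cost_per_line: float,
             retention_weeks: int, lines_per_service_tx: int = 1) -> float:
    """Rough logging cost: services x transactions x unit cost x weeks retained."""
    lines_per_week = services * tx_per_week * lines_per_service_tx
    return lines_per_week * cost_per_line * retention_weeks


tags = {"endpoint": 20, "region": 5}
print(max_series(tags))                          # -> 100
print(max_series({**tags, "user_id": 100_000}))  # -> 10000000

# Hypothetical: 50 services, 10M transactions/week, 0.000001 per line, 4 weeks retained.
print(log_cost(50, 10_000_000, 1e-6, 4))         # approximately 2000
```

Adding a single high-cardinality tag such as user ID multiplies the series count by that tag's number of distinct values, which is the effect the paragraph describes.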

Digital transformation in healthcare remains complex and challenging

Whilst progress has been made in digital healthcare, it hasn’t necessarily been transformational; in many cases it is a simple conversion from analogue to electronic. Certainly, the areas of eReferral, ePrescribing and eHealth Records haven’t undergone revolutionary change; they are simply the transfer of what were analogue forms and processes into electronic versions of the same. Across healthcare, many processes remain ripe for digital disruption. We’re heading into the post-digital era, in which healthcare organisations will need to adopt new and emerging technology. These new technologies will drive change in a sector that already has a multitude of existing digital tools. The new technologies already appearing in healthcare include artificial intelligence, distributed ledger technology, extended reality and quantum computing. Most industries that have undergone digital transformation have done so by adopting a data-driven approach, and in healthcare we’re entering an era where data will be generated at scale.

What You Need to Know about Modern Identity Security

At its core, a modern identity and access management (IAM) platform must handle provisioning, deprovisioning, and modifying user access from a central network location. Provisioning refers to granting initial permissions to employees when they first join your workforce. Deprovisioning, in turn, refers to removing all permissions from an employee’s account when they leave your employ. An IAM solution should also help you use role management to evaluate and adjust employees’ permissions as they change roles and positions within your enterprise. You should consider all three of these capabilities absolutely necessary for your enterprise. Limiting the permissions individual users possess often proves the best way to prevent a security threat from taking hold; it limits the damage a stolen password can do and reduces the likelihood of an insider threat. A modern identity security solution should also allow your IT security team to mandate a certain level of password complexity.
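The three lifecycle operations, plus a password rule, can be sketched in a few lines. This is a hypothetical, in-memory model, not any vendor's API: `ROLE_PERMISSIONS`, `IdentityStore`, and `meets_complexity` are illustrative names, and the specific complexity policy (at least 12 characters with lowercase, uppercase, digit, and symbol) is an assumption. Note that `change_role` replaces a user's permissions rather than adding to them, which is what keeps access limited as people move between roles.

```python
import re
from typing import Dict, Set

# Hypothetical role-to-permission mapping used for role management.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "engineer": {"repo:read", "repo:write"},
    "analyst": {"reports:read"},
}


class IdentityStore:
    """Hypothetical central store of each user's effective permissions."""

    def __init__(self) -> None:
        self.accounts: Dict[str, Set[str]] = {}

    def provision(self, user: str, role: str) -> None:
        # Grant only the permissions of the starting role.
        self.accounts[user] = set(ROLE_PERMISSIONS[role])

    def change_role(self, user: str, new_role: str) -> None:
        # Replace, rather than extend, old permissions so access does not accumulate.
        self.accounts[user] = set(ROLE_PERMISSIONS[new_role])

    def deprovision(self, user: str) -> None:
        # Remove every permission when the employee leaves.
        self.accounts.pop(user, None)

    def can(self, user: str, permission: str) -> bool:
        return permission in self.accounts.get(user, set())


def meets_complexity(password: str, min_length: int = 12) -> bool:
    """Assumed policy: minimum length plus lowercase, uppercase, digit, and symbol."""
    return (len(password) >= min_length
            and re.search(r"[a-z]", password) is not None
            and re.search(r"[A-Z]", password) is not None
            and re.search(r"\d", password) is not None
            and re.search(r"[^A-Za-z0-9]", password) is not None)


store = IdentityStore()
store.provision("alice", "engineer")
store.change_role("alice", "analyst")   # repo access is dropped, not kept
store.deprovision("alice")              # no access remains
```

Composition rules like these are only one way to define complexity; NIST SP 800-63B, for example, puts more weight on minimum length and on screening passwords against lists of known-compromised values.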

Quote for the day:

"Trust is the lubrication that makes it possible for organizations to work." -- Warren G. Bennis
