Quote for the day:

"Leaders are people who believe so passionately that they can seduce other people into sharing their dream." -- Warren G. Bennis

What comes after Design thinking

The first and most obvious one is that we can no longer afford to design things

solely for humans. We clearly need to think in non-human, non-monocentric terms

if we want to achieve real, positive, long-term impact. Second, HCD fell short

in making its practitioners think in systems and leverage the power of

relationships to really be able to understand and redesign what has not been

serving us or our planet. Lastly, while HCD accomplished great feats in

designing better products and services that solve today’s challenges, it fell

short in broadening horizons so that these products and systems could pave the

way for regenerative systems: the ones that go beyond sustainability and

actively restore and revitalize ecosystems, communities, and resources to create

lasting, positive impact. Now, everything that we put out in the world needs to

have an answer to how it is contributing to a regenerative future. And in order

to build a regenerative future, we need to start prioritizing something that is

integral to nature: relationships. We need to grow relational capacity, from

designing for better interpersonal relationships to establishing systems that

facilitate cross-organizational collaboration. We need to think about relational

networks and harness their power to recreate more just, trustful, and better

functioning systems. We need to think in communities.

FinOps automation: Raising the bar on lowering cloud costs

Successful FinOps automation requires strategies that exploit efficiencies

from every angle of cloud optimization. Good data management, negotiations,

data manipulation capabilities, and cloud cost distribution strategies are

critical to automating cost-effective solutions to minimize cloud spend. This

article focuses on how expert FinOps leaders have focused their automation

efforts to achieve the greatest benefits. ... Effective automation relies on

well-structured data. Intuit and Roku have demonstrated the importance of

robust data management strategies, focusing on AWS accounts and Kubernetes

cost allocation. Good data engineering enables transparency, visibility, and

accurate budgeting and forecasting. ... Automation efforts should focus

on areas with the highest potential for cost savings, such as prepayment

optimization and waste reduction. Intuit and Roku have achieved significant

savings by targeting these high-cost areas. ... Automation tools should be

accessible and user-friendly for engineers managing cloud resources. Intuit

and Roku have developed tools that simplify resource management and align

costs with responsible teams. Automated reporting and forecasting tools help

engineers make informed decisions.
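
Aligning costs with responsible teams can be sketched as a simple aggregation over billing line items. This is a minimal illustration, not the tooling Intuit or Roku built: the `team` tag key, the `unallocated` bucket, and the sample line items are all assumptions made for the example.

```python
from collections import defaultdict


def allocate_costs(line_items, tag_key="team"):
    """Sum cost per owning team; untagged spend lands in 'unallocated'."""
    totals = defaultdict(float)
    for item in line_items:
        # The tag key is a hypothetical convention, not a cloud-provider default.
        owner = item.get("tags", {}).get(tag_key, "unallocated")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing export rows.
line_items = [
    {"resource": "vm-1", "cost": 120.0, "tags": {"team": "payments"}},
    {"resource": "vm-2", "cost": 80.0, "tags": {"team": "search"}},
    {"resource": "bucket-1", "cost": 15.5, "tags": {}},
    {"resource": "vm-3", "cost": 40.0, "tags": {"team": "payments"}},
]

report = allocate_costs(line_items)
for owner, cost in sorted(report.items()):
    print(f"{owner}: {cost:.2f}")
```

Surfacing the `unallocated` total separately is a deliberate choice here: it makes gaps in tagging visible instead of hiding them inside a shared bucket.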

Why CISOs Must Think Clearly Amid Regulatory Chaos

At their core, CISOs are truth sayers — akin to an internal audit committee

that assesses risks and makes recommendations to improve an organization's

defenses and internal controls. Ultimately, though, it's the board and a

company's top executives who set policy and decide what to disclose in public

filings. CISOs can and should be a counselor for this group effort because

they have the understanding of security risk. And yet, the advice they can

offer is limited if they don't have full visibility into an organization's

technology stack. Many oversee a company's IT system, but not the products

the company sells. That's crucial when it comes to data-dependent systems and

devices that can provide network-access targets to cyber criminals. Those

might include medical devices, or sensors and other Internet of Things

endpoints used in manufacturing lines, electric grids, and other critical

physical infrastructure. In short: A company's defenses are only as strong as

the board and its top executives allow them to be. And if there is a breach, as

in the case of SolarWinds? CISOs do not determine the materiality of a

cybersecurity incident; a company's top executives and its board make that

call. The CISO's responsibilities in that scenario involve responding to the

incident and conducting the follow-up forensics required to help minimize or

avoid future incidents.

Building Secure Multi-Cloud Architectures: A Framework for Modern Enterprise Applications

Technical controls alone cannot secure multi-cloud environments.

Organizations must conduct cloud security architecture reviews before

implementing any multi-cloud solution. These reviews should focus on data

flow patterns between clouds, authentication and authorization requirements,

and compliance obligations across all relevant jurisdictions. Completing these

tasks thoroughly and diligently will ensure that multi-cloud security is baked

into the architectural layer between the clouds and in the clouds themselves.

While thorough architecture reviews establish the foundation, automation

brings these security principles to life at scale. Automation provides a major

advantage to security operations for multi-cloud environments. By treating

infrastructure and security as code, organizations can achieve consistent

configurations across clouds, implement automated security testing and enable

fast response to security events. This helps with the overall security and

operational overhead because it allows us to do more with less and to reduce

human error. Our security operations experienced a substantial enhancement

when we moved to automated compliance checks. Still, we did not just throw AWS

services at the problem. We engaged our security team deeply in the

process.
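
An automated compliance check of the kind described above can be sketched as a set of rules applied uniformly to a resource inventory from every cloud. This is only an illustration under stated assumptions: the inventory format, the field names `encrypted` and `public_access`, and the two rules are hypothetical and do not correspond to any provider's API.

```python
def check_resource(resource):
    """Return a list of findings for one resource (empty if compliant)."""
    findings = []
    # Hypothetical rule 1: storage must be encrypted at rest.
    if not resource.get("encrypted", False):
        findings.append("storage not encrypted at rest")
    # Hypothetical rule 2: nothing may be publicly accessible.
    if resource.get("public_access", False):
        findings.append("publicly accessible")
    return findings


def run_compliance_checks(inventory):
    """Apply the same rules to every resource, regardless of cloud."""
    report = {}
    for resource in inventory:
        findings = check_resource(resource)
        if findings:
            report[f'{resource["cloud"]}/{resource["name"]}'] = findings
    return report


# Hypothetical normalized inventory gathered from several clouds.
inventory = [
    {"cloud": "aws", "name": "logs-bucket", "encrypted": True, "public_access": False},
    {"cloud": "azure", "name": "backup-store", "encrypted": False, "public_access": False},
    {"cloud": "gcp", "name": "assets-bucket", "encrypted": True, "public_access": True},
]

violations = run_compliance_checks(inventory)
for resource_id, findings in violations.items():
    print(resource_id, "->", ", ".join(findings))
```

Normalizing each cloud's resources into one shape before checking is what keeps the rules consistent across providers; the normalization step itself is left out of this sketch.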

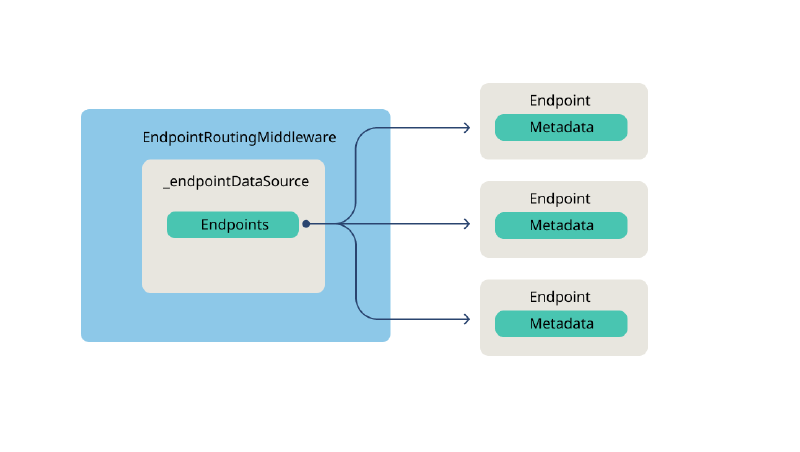

Scaling Dynamic Application Security Testing (DAST)

One solution is to monitor requests sent to the target web server and

extrapolate an OpenAPI Specification based on those requests in real-time.

This monitoring could be performed client-side, server-side, or in-between on

an API gateway, load-balancer, etc. This is a scalable, automatable solution

that does not require each developer’s involvement. Depending on how long it

runs, this approach can be limited in comprehensively identifying all web

endpoints. For example, if no users called the /logout endpoint, then the

/logout endpoint would not be included in the automatically generated OpenAPI

Specification. Another solution is to statically analyze the source code for a

web service and generate an OpenAPI Specification based on defined API

endpoint routes that the automation can glean from the source code. Microsoft

internally prototyped this solution and found it to be non-trivial to reliably

discover all API endpoint routes and all parameters by parsing abstract syntax

trees without access to a working build environment. This solution was also

unable to handle scenarios of dynamically registered API route endpoint

handlers. ... To truly scale DAST for thousands of web services, we need to

automatically, comprehensively, and deterministically generate OpenAPI

Specifications.
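
The traffic-monitoring approach and its coverage gap can be illustrated with a small sketch that derives a skeletal OpenAPI document from observed requests. It is not Microsoft's implementation: the `build_openapi` helper, the observed request list, and the placeholder response descriptions are assumptions made for the example, and parameters and schemas are ignored entirely.

```python
def build_openapi(observed_requests, title="inferred-api"):
    """Build a minimal OpenAPI 3.0 document from (method, path) pairs."""
    paths = {}
    for method, path in observed_requests:
        # Only endpoints that actually appear in traffic are recorded.
        paths.setdefault(path, {})[method.lower()] = {
            "responses": {"default": {"description": "observed in traffic"}}
        }
    return {
        "openapi": "3.0.3",
        "info": {"title": title, "version": "0.0.0"},
        "paths": paths,
    }


# Hypothetical traffic sample: nobody called /logout while monitoring ran.
observed = [
    ("POST", "/login"),
    ("GET", "/items"),
    ("POST", "/items"),
    ("GET", "/items"),
]

spec = build_openapi(observed)
print(sorted(spec["paths"]))
print("/logout" in spec["paths"])
```

Because `/logout` never appears in the sample, it is absent from the generated paths, which is exactly the limitation the excerpt describes for monitoring-based generation.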

Post-Quantum Cryptography 2025: The Enterprise Readiness Gap

"Quantum technology offers a revolutionary approach to cybersecurity,

providing businesses with advanced tools to counter emerging threats," said

David Close, chief solutions architect at Futurex. By using quantum machine

learning algorithms, organizations can detect threats faster and more

accurately. These algorithms identify subtle patterns that indicate

multi-vector cyberattacks, enabling proactive responses to potential breaches.

Innovations such as quantum key distribution and quantum random number

generators enable unbreakable encryption and real-time anomaly detection,

making them indispensable in fraud prevention and secure communications, Close

said. These technologies not only protect sensitive data but also ensure the

integrity of financial transactions and authentication protocols. A

cornerstone of quantum security is post-quantum cryptography (PQC). Unlike

traditional cryptographic methods, PQC algorithms are designed to withstand

attacks from quantum computers. Standards recently established by the National

Institute of Standards and Technology include algorithms such as Kyber,

Dilithium and SPHINCS+, which promise robust protection against future quantum

threats.

Tricking the bad guys: realism and robustness are crucial to deception operations

The goal of deception technology, also known as deception techniques,

operations, or tools, is to create an environment that attracts and deceives

adversaries to divert them from targeting the organization’s crown jewels.

Rapid7 defines deception technology as “a category of incident detection and

response technology that helps security teams detect, analyze, and defend

against advanced threats by enticing attackers to interact with false IT

assets deployed within your network.” Most cybersecurity professionals are

familiar with the current most common application of deception technology,

honeypots, which are computer systems sacrificed to attract malicious actors.

But experts say honeypots are merely decoys deployed as part of what should be

more overarching efforts to invite shrewd and easily angered adversaries to

buy elaborate deceptions. Companies selling honeypots “may not be thinking

about what it takes to develop, enact, and roll out an actual deception

operation,” Handorf said. “As I stressed, you have to know your

infrastructure. You have to have a handle on your inventory, the log analysis

in your case. But you also have to think that a deception operation is not a

honeypot. It is more than a honeypot. It is a strategy that you have to think

about and implement very decisively and with willful intent.”

Effective Techniques to Refocus on Security Posture

If you work in software development, then “technical debt” is a term that

likely triggers strong reactions. Foundationally, technical debt serves a

similar function to financial debt. When well-managed, both can be used as

leverage for further growth opportunities. In the context of engineering,

technical debt can help expand product offerings and operations, helping a

business grow faster than the cost of repaying the debt by using the

opportunities the leverage offers. On the other hand, debt carries risks, and the rate of

exposure is variable, dependent on circumstance. In the context of security,

acceptance of technical debt from End of Life (EoL) software and risky

decisions enables threats whose greatest advantage is time, the exact resource

that debt leverages. ... The trustworthiness of software is dependent on the

exploitable attack surface. Part of that attack surface is exploitable

vulnerabilities. If the outcome of the SBOM with a VEX attestation is a deeper

understanding of those applicable and exploitable vulnerabilities, coupling

that information with exploit predictive analysis like EPSS helps to bring

valuable information to decision-making. This type of assessment allows for

programmatic decision-making. It allows software suppliers to express risk in

the context of their applications and empowers software consumers to escalate

on problems worth solving.
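
The programmatic decision-making described above can be sketched as a filter and sort: keep only vulnerabilities whose VEX status says the product is affected, then rank them by EPSS probability. This is a hedged illustration, not a prescribed workflow. The record layout, the 0.1 threshold, and the placeholder identifiers are assumptions; the status strings follow the commonly used VEX values (`affected`, `not_affected`, `fixed`, `under_investigation`).

```python
def prioritize(vulnerabilities, epss_threshold=0.1):
    """Return affected vulnerabilities at or above the EPSS threshold, highest first."""
    actionable = [
        v for v in vulnerabilities
        if v["vex_status"] == "affected" and v["epss"] >= epss_threshold
    ]
    return sorted(actionable, key=lambda v: v["epss"], reverse=True)


# Hypothetical findings from an SBOM scan; the IDs are placeholders, not real CVEs.
findings = [
    {"id": "VULN-A", "vex_status": "affected", "epss": 0.42},
    {"id": "VULN-B", "vex_status": "not_affected", "epss": 0.91},
    {"id": "VULN-C", "vex_status": "affected", "epss": 0.03},
    {"id": "VULN-D", "vex_status": "affected", "epss": 0.77},
]

queue = prioritize(findings)
print([v["id"] for v in queue])
```

In this sample, VULN-B has the highest EPSS score but drops out because the supplier attests the product is not affected, which is the kind of context a raw scanner list lacks.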

Sustainability, grid demands, AI workloads will challenge data center growth in 2025

Uptime expects new and expanded data center developers will be asked to

provide or store power to support grids. That means data centers will need to

actively collaborate with utilities to manage grid demand and stability,

potentially shedding load or using local power sources during peak times.

Uptime forecasts that data center operators “running non-latency-sensitive

workloads, such as specific AI training tasks, could be financially

incentivized or mandated to reduce power use when required.” “The context for

all of this is that the [power] grid, even if there were no data centers,

would have a problem meeting demand over time. They’re having to invest at a

rate that is historically off the charts. It’s not just data centers. It’s

electric vehicles. It’s air conditioning. It’s decarbonization. But obviously,

they are also retiring coal plants and replacing them with renewable plants,”

Uptime’s Lawrence explained. “These are much less stable, more intermittent.

So, the grid has particular challenges.” ... According to Uptime,

infrastructure requirements for next-generation AI will force operators to

explore new power architectures, which will drive innovations in data center

power delivery. As data centers need to handle much higher power densities, it

will throw facilities off balance in terms of how the electrical

infrastructure is designed and laid out.

Is the Industrial Metaverse Transforming the E&U Industry?

One major benefit of the industrial metaverse is that it can monitor equipment

issues and hazardous conditions in real time so that any fluctuations in the

electrical grid are instantly detected. As they collect data and create

simulations, digital twins can also function as proactive tools by predicting

potential problems before they escalate. “You can see which components are in

early stages of failure,” a Hitachi Energy spokesperson notes in this article.

“You can see what the impact of failure is and what the time to failure is, so

you’re able to make operational decisions, whether it’s a switching operation,

deploying a crew, or scheduling an outage, whatever that looks like.” ...

Digital twins also make it possible for operators to simulate and test

operational changes in virtual environments before real-world implementation,

reducing excessive costs. “While it will not totally replace on-site testing,

it can significantly reduce physical testing, lower costs and contribute to an

increased quality of the protection system,” Andrea Bonetti, a power system

protection specialist at Megger, tells the Switzerland-based International

Electrotechnical Commission. Shell is one of several energy providers that use

digital twins to enhance operations, according to Digital Twin

Insider.
