Can I read your mind? How close are we to mind-reading technologies?

Artificial intelligence is already advancing rapidly,

so it doesn’t seem too farfetched. Humans have already developed

brain-computer interface (BCI) technologies that can safely be used on humans.

... How would the government play a role in these mind-reading technologies?

How would it affect eligibility to use the technology? Don’t you think

some unethical play would be prevalent? I sure do. I’m not inclined

to believe these companies aren’t sending our data to other

companies without our consent. I found this term “Neurorights” in a Vox

article, “Brain-reading tech is coming. The law is not ready to protect us”

written by Sigal Samuel. It’s a good read, and I think she explores in

depth how this would impact society from a privacy

standpoint. She discusses having four core new rights protected within the law:

the right to cognitive liberty, mental privacy, mental integrity, and

psychological continuity. She mentions, “brain data is the ultimate refuge of

privacy”. Once it’s collected, I believe you can’t get it back. There need to

be strict laws enforced if this becomes a ubiquitous technology.

It's The End Of Infrastructure-As-A-Service As We Know It: Here's What's Next

Containers are the next step in the abstraction trend. Multiple containers can

run on a single OS kernel, which means they use resources more efficiently than

VMs. In fact, on the infrastructure required for one VM, you could run a dozen

containers. However, containers do have their downsides. While they're more

space efficient than VMs, they still take up infrastructure capacity when idle,

running up unnecessary costs. To reduce these costs to the absolute minimum,

companies have another choice: Go serverless. The serverless model works best

with event-driven applications — applications where a finite event, like a user

accessing a web app, triggers the need for compute. With serverless, the company

never has to pay for idle time, only for the milliseconds of compute time used

in processing a request. This makes serverless very inexpensive when a company

is getting started at a small volume while also reducing operational overhead as

applications grow in scale. Transitioning to containerization or a serverless

model requires major changes to your IT teams' processes and structure and

thoughtful choices about how to carry out the transition itself.
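
The billing difference described above can be made concrete with a little arithmetic. The following is a minimal sketch, not a pricing model: the hourly rate, the per-millisecond rate, and the 100 ms request duration are all hypothetical numbers chosen only to show the shape of the trade-off, and the function names are made up for this example.

```python
def always_on_cost(hourly_rate: float, hours: float) -> float:
    """Cost of a VM or container that is billed whether or not it is busy."""
    return hourly_rate * hours


def serverless_cost(requests: int, ms_per_request: float, rate_per_ms: float) -> float:
    """Cost when only the compute time spent handling requests is billed."""
    return requests * ms_per_request * rate_per_ms


# Hypothetical prices, not taken from any real provider.
HOURS_PER_MONTH = 730
HOURLY_RATE = 0.05          # assumed price of one always-on instance
RATE_PER_MS = 0.00000002    # assumed price per millisecond of function time

for monthly_requests in (10_000, 1_000_000, 1_000_000_000):
    on = always_on_cost(HOURLY_RATE, HOURS_PER_MONTH)
    sl = serverless_cost(monthly_requests, ms_per_request=100, rate_per_ms=RATE_PER_MS)
    print(f"{monthly_requests:>13,} requests: always-on ${on:,.2f}, serverless ${sl:,.2f}")
```

Under these assumed rates the always-on instance costs the same at every volume, while the serverless bill grows with the number of requests, which is why the model is cheapest at small volume.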

9 Future of Work Trends Post-COVID-19

Before COVID-19, critical roles were viewed as roles with critical skills,

or the capabilities an organization needed to meet its strategic goals. Now,

employers are realizing that there is another category of critical roles —

roles that are critical to the success of essential workflows. To build the

workforce you’ll need post-pandemic, focus less on roles — which group

unrelated skills — than on the skills needed to drive the organization’s

competitive advantage and the workflows that fuel that advantage. Encourage

employees to develop critical skills that potentially open up multiple

opportunities for their career development, rather than preparing for a

specific next role. Offer greater career development support to employees in

critical roles who lack critical skills. ... After the global financial

crisis, global M&A activity accelerated, and many companies were

nationalized to avoid failure. As the pandemic subsides, there will be a

similar acceleration of M&A and nationalization of companies. Companies

will focus on expanding their geographic diversification and investment in

secondary markets to mitigate and manage risk in times of disruption. This

rise in complexity of size and organizational management will create

challenges for leaders as operating models evolve.

South African bank to replace 12m cards after employees stole master key

"According to the report, it seems that corrupt employees have had access to

the Host Master Key (HMK) or lower level keys," the security researcher

behind Bank Security, a Twitter account dedicated to banking fraud, told

ZDNet today in an interview. "The HMK is the key that protects all the keys,

which, in a mainframe architecture, could access the ATM pins, home banking

access codes, customer data, credit cards, etc.," the researcher told ZDNet.

"Access to this type of data depends on the architecture, servers and

database configurations. This key is then used by mainframes or servers that

have access to the different internal applications and databases with stored

customer data, as mentioned above." "The way in which this key and all the

other lower-level keys are exchanged with third party systems has different

implementations that vary from bank to bank," the researcher said. The

Postbank incident is one of a kind as bank master keys are a bank's most

sensitive secret and guarded accordingly, and are very rarely compromised,

let alone outright stolen.

What matters most in an Agile organizational structure

An Agile organizational strategy that works for one organization won't

necessarily work for another. The chapter excerpt includes a Spotify org

chart, which the authors describe as, "Probably the most frequently emulated

agile organizational model of all." But an Agile model that serves as a

standard of success won't necessarily replicate to another organization

well. Agile software developers aim to better meet customer needs. To do so,

they need to prioritize, release and adapt software products more easily.

Unlike the Spotify-inspired tribe structure, Agile teams should remain

located close to the operations teams that will ultimately support and

scale their work, according to the authors. This model, they argue in Doing

Agile Right, promotes accountability for change, and willingness to innovate

on the business side. Any Agile initiative should follow the sequence of

"test, learn, and scale." People at the top levels must accept new ideas,

which will drive others to accept them as well. Then, innovation comes from

the opposite direction. "Agile works best when decisions are pushed down the

organization as far as possible, so long as people have appropriate

guidelines and expectations about when to escalate a decision to a higher

level."

What is process mining? Refining business processes with data analytics

Process mining is a methodology by which organizations collect data from

existing systems to objectively visualize how business processes operate and

how they can be improved. Analytical insights derived from process mining

can help optimize digital transformation initiatives across the

organization. In the past, process mining was most widely used in

manufacturing to reduce errors and physical labor. Today, as companies

increasingly adopt emerging automation and AI technologies, process mining

has become a priority for organizations across every industry. Process

mining is an important tool for organizations that are committed to

continuously improving IT and business processes. Process mining begins by

evaluating established IT or business processes to find repetitive tasks

that can be automated using technologies such as robotic process automation

(RPA), artificial intelligence and machine learning. By automating

repetitive or mundane tasks, organizations can increase efficiency and

productivity — and free up workers to spend more time on creative or complex

projects. Automation also helps reduce inconsistencies and errors in process

outcomes by minimizing variances. Once an IT or business process is

developed, it’s important to consistently check back to ensure the process

is delivering appropriate outcomes — and that’s where process mining comes

in.
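
To make the idea of collecting data from existing systems to visualize a process more tangible, here is a minimal sketch of one common first step in process mining: counting which activity directly follows which within each case of an event log. The event log, activity names, and the `directly_follows` helper are all hypothetical and exist only for illustration; real tools work on much larger logs and do far more.

```python
from collections import Counter, defaultdict

# Hypothetical event log: (case id, activity, timestamp) rows.
EVENT_LOG = [
    ("order-1", "receive order", 1),
    ("order-1", "check credit", 2),
    ("order-1", "ship goods", 3),
    ("order-2", "receive order", 1),
    ("order-2", "check credit", 2),
    ("order-2", "check credit", 3),   # repeated step: possible rework
    ("order-2", "ship goods", 4),
    ("order-3", "receive order", 1),
    ("order-3", "ship goods", 2),     # credit check skipped
]


def directly_follows(event_log):
    """Count how often activity A is immediately followed by B within a case."""
    traces = defaultdict(list)
    # Order events by case and then by timestamp to rebuild each case's trace.
    for case_id, activity, _ in sorted(event_log, key=lambda row: (row[0], row[2])):
        traces[case_id].append(activity)
    counts = Counter()
    for activities in traces.values():
        counts.update(zip(activities, activities[1:]))
    return counts


for (first, second), n in directly_follows(EVENT_LOG).most_common():
    print(f"{first} -> {second}: {n}")
```

Even in this toy log, the counts surface a repeated credit check and a case that skipped it, which is the kind of variance the excerpt says process mining is meant to expose.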

How to improve cybersecurity for artificial intelligence

One of the major security risks to AI systems is the potential for

adversaries to compromise the integrity of their decision-making processes

so that they do not make choices in the manner that their designers would

expect or desire. One way to achieve this would be for adversaries to

directly take control of an AI system so that they can decide what outputs

the system generates and what decisions it makes. Alternatively, an attacker

might try to influence those decisions more subtly and indirectly by

delivering malicious inputs or training data to an AI model. For

instance, an adversary who wants to compromise an autonomous vehicle so that

it will be more likely to get into an accident might exploit vulnerabilities

in the car’s software to make driving decisions themselves. However,

remotely accessing and exploiting the software operating a vehicle could

prove difficult, so instead an adversary might try to make the car ignore

stop signs by defacing signs in the area with graffiti, so that the

computer vision algorithm would not be able to recognize them as stop signs.

This process by which adversaries can cause AI systems to make mistakes by

manipulating inputs is called adversarial machine learning.
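
The input-manipulation idea can be illustrated with a toy example. The sketch below is a digital stand-in for the graffiti scenario, not a real vision system: it assumes a made-up linear classifier with three hypothetical features, and nudges each feature against the sign of its weight (a sign-gradient step, which for a linear model is simply the sign of the weights). All weights, feature values, and function names are assumptions for illustration.

```python
import math


def score(weights, bias, features):
    """Linear decision score: positive means 'stop sign'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def classify(weights, bias, features):
    return "stop sign" if score(weights, bias, features) > 0 else "not a stop sign"


def perturb(weights, features, epsilon):
    """Move every feature by epsilon in the direction that lowers the score."""
    def sign(value):
        return 0.0 if value == 0 else math.copysign(1.0, value)
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical model and input, e.g. redness, octagon-likeness, clutter.
WEIGHTS = [0.9, 0.8, -0.5]
BIAS = -0.6
CLEAN = [0.8, 0.7, 0.1]

print(classify(WEIGHTS, BIAS, CLEAN))
print(classify(WEIGHTS, BIAS, perturb(WEIGHTS, CLEAN, epsilon=0.35)))
```

With these assumed numbers the clean input is classified as a stop sign and the perturbed one is not, even though no part of the model itself was touched.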

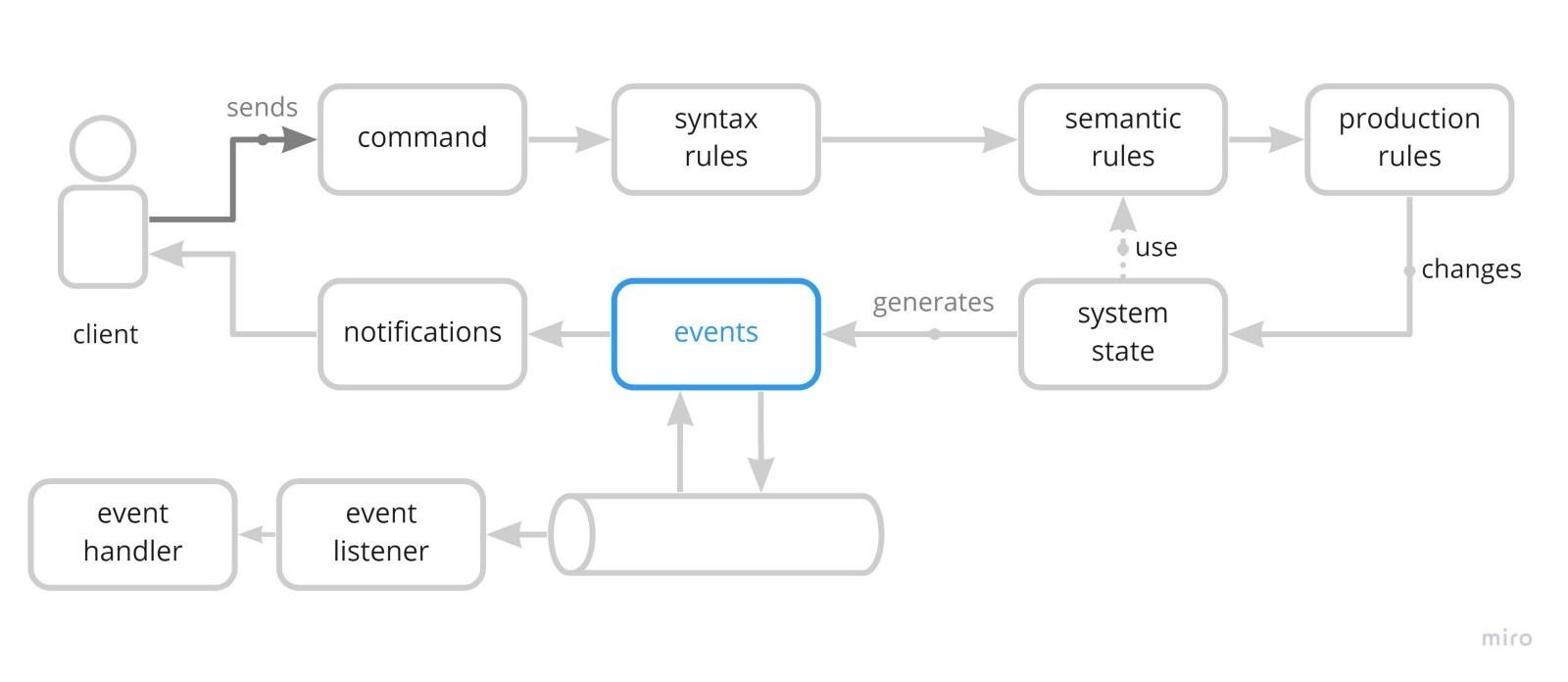

Using a DDD Approach for Validating Business Rules

For modeling commands that can be executed by clients, we need to identify

them by assigning them names. For example, it can be something like

MakeReservation. Notice that we are moving these design definitions towards

a middle point between software design and business design. It may sound

trivial, but when it’s specified, it helps us to understand a system design

more efficiently. The idea connects with the HCI (human-computer

interaction) concept of designing systems with a task in mind; the command

helps designers to think about the specific task that the system needs to

support. The command may have additional parameters, such as date, resource

name, and description of the usage. ... Production rules are the heart of

the system. So far, the command has traveled through different stages which

should ensure that the provided request can be processed. Production rules

specify the actions the system must perform to achieve the desired state.

They deal with the task a client is trying to accomplish. Using the

MakeReservation command as a reference, they make the necessary changes to

register the requested resource as reserved.
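
As a rough illustration of the pattern described above, the sketch below models `MakeReservation` as a named command carrying the date, resource name, and usage description, and applies a production rule that registers the resource as reserved. Only the command name and its three parameters come from the excerpt; the `ReservationBook` class, its methods, and the availability check standing in for the earlier validation stages are assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class MakeReservation:
    """Command: a client's named request to reserve a resource."""
    resource_name: str
    day: date
    description: str


class ReservationBook:
    """Hypothetical in-memory state that the production rule changes."""

    def __init__(self):
        self._reserved = {}  # (resource_name, day) -> description

    def is_reserved(self, resource_name, day):
        return (resource_name, day) in self._reserved

    def handle(self, command: MakeReservation) -> None:
        # Stand-in for the earlier stages: reject a request that cannot be processed.
        if self.is_reserved(command.resource_name, command.day):
            raise ValueError(f"{command.resource_name} is already reserved on {command.day}")
        # Production rule: register the requested resource as reserved.
        self._reserved[(command.resource_name, command.day)] = command.description


book = ReservationBook()
book.handle(MakeReservation("Room A", date(2020, 6, 22), "team meeting"))
print(book.is_reserved("Room A", date(2020, 6, 22)))
```

Keeping the command as an immutable, named object is one way to keep the design readable for both developers and business stakeholders, since the class name mirrors the task the client wants to accomplish.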

7 Ways to Reduce Cloud Data Costs While Continuing to Innovate

This is a difficult time for enterprises, which need to tightly control

costs amid the threat of a recession while still investing sufficiently in

technology to remain competitive. ... This is especially true of analytics

and machine learning projects. Data lakes, ideally suited for machine

learning and streaming analytics, are a powerful way for businesses to

develop new products and better serve their customers. But with data teams

able to spin up new projects in the cloud easily, infrastructure must be

managed closely to ensure every resource is optimized for cost and every

dollar spent is justified. In the current economic climate, no business can

tolerate waste. But enterprises aren’t powerless. Strong financial

governance practices allow data teams to control and even reduce their cloud

costs while still allowing innovation to happen. Creating appropriate

guardrails that prevent teams from using more resources than they need and

ensuring workloads are matched with the correct instance types to optimize

savings will go a long way to reducing waste while ensuring that critical

SLAs are met.

Who Should Lead AI Development: Data Scientists or Domain Experts?

To lead these efforts ethically and effectively, Chraibi suggested data

scientists such as himself should be the driving force. “The data scientists

will be able to give you an insight into how bad it will be using a

machine-learning model” if ethical considerations are not taken into

account, he said. But Paul Moxon, senior vice president for data

architecture at Denodo Technologies, said his experience working with AI

development in the financial sector has given him a different perspective.

“The people who raised the ethics issues with banks—the original ones—were

the legal and compliance team, not the technologists,” he said. “The

technologists want to push the boundaries; they want to do what they’re

really, really good at. But they don’t always think of the inadvertent

consequences of what they’re doing.” In Moxon’s opinion, data scientists and

other technology-focused roles should stay focused on the technology, while

risk-centric roles like lawyers and compliance officers are better suited to

considering broader, unintended effects. “Sometimes the data scientists

don’t always have the vision into how something could be abused. Not how it

should be used but how it could be abused,” he said.
