GitHub hit with the largest DDoS attack ever seen

GitHub explained how such an attack could generate vast amounts of traffic: "Spoofing of IP addresses allows memcached's responses to be targeted against another address, like ones used to serve GitHub.com, and send more data toward the target than needs to be sent by the unspoofed source. The vulnerability via misconfiguration described in the post is somewhat unique amongst that class of attacks because the amplification factor is up to 51,000, meaning that for each byte sent by the attacker, up to 51KB is sent toward the target," it said. GitHub said that, because of the scale of the attack, it decided to move traffic to Akamai, which could help provide additional edge network capacity. It said it is now investigating the use of its monitoring infrastructure to automate enabling DDoS mitigation providers and will continue to measure its response times to incidents like this -- with a goal of reducing mean time to recovery.
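To make the quoted amplification factor concrete, here is a small back-of-the-envelope sketch. It treats the 51,000x figure as a best case for the attacker and ignores packet and protocol overheads. The function names and the 1 Tbps target are hypothetical and chosen only for illustration.

```python
# Back-of-the-envelope sketch of memcached reflection amplification.
# Assumption: the 51,000x factor quoted by GitHub is an upper bound,
# and packet/protocol overheads are ignored.

AMPLIFICATION_FACTOR = 51_000  # bytes reflected per byte sent (upper bound)


def reflected_bytes(attacker_bytes: int, factor: int = AMPLIFICATION_FACTOR) -> int:
    """Upper bound on bytes the reflectors could send toward the target."""
    return attacker_bytes * factor


def attacker_bandwidth_needed(target_bps: float, factor: int = AMPLIFICATION_FACTOR) -> float:
    """Spoofed request bandwidth needed to reach target_bps at the given factor."""
    return target_bps / factor


if __name__ == "__main__":
    print(reflected_bytes(1))  # 51000 bytes, i.e. about 51 KB per byte sent
    # Hypothetical 1 Tbps flood: roughly 19.6 Mbps of spoofed requests suffices.
    print(round(attacker_bandwidth_needed(1e12) / 1e6, 1))
```

The ratio is the same whether you count bits or bytes, which is why a modest stream of spoofed requests can translate into an enormous flood at the target.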

Load Testing Tool Must-Haves

One of the most dangerous traps software developers and testers can fall into is a false sense of security: application features and performance levels meet expectations in pre-production, only to crash and burn when presented to real users in production. Similarly, if your organization has any kind of performance testing strategy, chances are you're conducting load testing. However, your load tests may not truly emulate the real-world behavior of your end users. Overlooking realism in load tests can cause a myriad of performance problems in production, and end users won't wait around. If your load testing isn't accurate and realistic, you risk revenue loss, brand damage and diminished employee productivity. The solution: cloud-based load testing. Right off the bat, the cloud provides two major advantages for load and performance testing that help teams better model realistic behavior: instant infrastructure and geographic location.

Building AI systems that work is still hard

Domain expertise, feature modeling and hundreds of thousands of lines of code can now be beaten with a few hundred lines of scripting (plus a decent amount of data). As mentioned above, that means proprietary code is no longer a defensible asset when it's in the path of the mainstream AI train. Significant contributions are very rare. Real breakthroughs or new developments, even a new combination of the basic components, are only possible for a very limited number of researchers. This inner circle is much smaller than you might think. Why is that? Maybe it's rooted in the field's core algorithm: backpropagation. Nearly every neural network is trained by this method. The simplest form of backpropagation can be formulated with first-semester calculus; there is nothing sophisticated about it at all. In spite of this simplicity, or maybe for that very reason, in more than 50 years of an interesting and colorful history only a few people have looked behind the curtain and questioned its main architecture.
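The claim that backpropagation needs only first-semester calculus can be made concrete. The following is a minimal sketch, assuming a hypothetical one-neuron network with a sigmoid activation and a squared-error loss. Backpropagation here is nothing more than the chain rule applied from the loss back to each parameter, and a finite-difference check confirms the hand-derived gradients. All function names and numbers are illustrative.

```python
import math

# Minimal sketch: backpropagation on a hypothetical one-neuron network
# a = sigmoid(w*x + b) with loss L = 0.5 * (a - y)^2.
# Every gradient below is just the chain rule from introductory calculus.


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss(w: float, b: float, x: float, y: float) -> float:
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2


def backprop(w: float, b: float, x: float, y: float) -> tuple[float, float]:
    """Return (dL/dw, dL/db) by chaining local derivatives backwards."""
    z = w * x + b
    a = sigmoid(z)
    dL_da = a - y              # derivative of 0.5 * (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)      # derivative of the sigmoid
    dL_dz = dL_da * da_dz      # chain rule
    return dL_dz * x, dL_dz    # dz/dw = x, dz/db = 1


def numerical_grad(w: float, b: float, x: float, y: float, eps: float = 1e-6) -> tuple[float, float]:
    """Central finite differences, used only to check backprop."""
    gw = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
    gb = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
    return gw, gb


def train(w: float, b: float, x: float, y: float, lr: float = 0.5, steps: int = 100) -> tuple[float, float]:
    """Plain gradient descent using the backprop gradients."""
    for _ in range(steps):
        gw, gb = backprop(w, b, x, y)
        w -= lr * gw
        b -= lr * gb
    return w, b


if __name__ == "__main__":
    w, b, x, y = 0.3, -0.1, 2.0, 1.0
    print(backprop(w, b, x, y))
    print(numerical_grad(w, b, x, y))  # should agree closely with the line above
    w2, b2 = train(w, b, x, y)
    print(loss(w, b, x, y), "->", loss(w2, b2, x, y))
```

Real networks stack many such layers, but each backward step is the same local-derivative-times-upstream-gradient pattern shown in `backprop`.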

Another massive DDoS internet blackout could be coming your way

While older, more established companies are still more likely to host their own DNS, the emergence of cloud as infrastructure means that newer companies are outsourcing everything to the cloud, including DNS. "The concentration of DNS services into a small number of hands...exposes single points of failure that weren't present under the more distributed DNS paradigm of yesteryear (one in which enterprises most often hosted their own DNS servers onsite)," John Bowers, one of the report's co-authors, tells CSO. "The Dyn attack offers a perfect illustration of this concentration of risk--a single DDoS attack brought down a significant fraction of the internet by targeting a provider used by dozens of high profile websites and CDNs [content delivery networks]." The shocking part of this report is that despite the clear danger this concentration poses, too few enterprises have bothered to implement any secondary DNS.
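The single-point-of-failure concern can be checked mechanically. The following is a minimal sketch, assuming you already have a domain's NS hostnames (for example from `dig NS example.com +short`). It groups them by a naive "provider domain" (the last two labels of each hostname) and flags domains whose nameservers all sit with one provider. The heuristic is an assumption: it mishandles multi-label public suffixes such as `co.uk`. The hostnames in the usage example are hypothetical.

```python
# Minimal sketch: does a domain's NS set span more than one DNS provider?
# Assumption: the "provider" is approximated by the last two labels of each
# nameserver hostname, which is naive for suffixes like co.uk.


def provider_domain(ns_host: str) -> str:
    """Approximate the operator of a nameserver from its hostname."""
    labels = ns_host.strip().rstrip(".").lower().split(".")
    return ".".join(labels[-2:])


def providers(ns_hosts: list[str]) -> set[str]:
    """Distinct approximate providers, skipping blank entries."""
    return {provider_domain(h) for h in ns_hosts if h.strip()}


def has_secondary_provider(ns_hosts: list[str]) -> bool:
    """True if the nameservers are spread across at least two providers."""
    return len(providers(ns_hosts)) >= 2


if __name__ == "__main__":
    single = ["ns1.dns-a.example.", "ns2.dns-a.example."]
    mixed = ["ns1.dns-a.example.", "ns1.dns-b.example."]
    print(has_secondary_provider(single))  # False: one provider, one point of failure
    print(has_secondary_provider(mixed))   # True
```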

Zero-Day Attacks Major Concern in Hybrid Cloud

Despite their growing reliance on containers, many businesses will continue to rely at least partially on legacy systems for years to come, he continues. Security becomes a challenge when multiple users are accessing multiple environments from many different locations. The biggest hybrid cloud security challenge is maintaining strong, consistent security across the enterprise data center and multiple cloud environments, says Cahill. Businesses want consistency; they want to be able to centralize policy and security controls across both. Security teams also struggle to keep pace with the cloud, an increasingly difficult challenge as cloud adoption continues to accelerate. It used to be that cloud adoption was slowed by security, Cahill points out. Now, containers are driven by the app development team. Security has to keep up. "One of the things we know about cloud computing in general, and about DevOps, is it's all about moving fast," he points out.

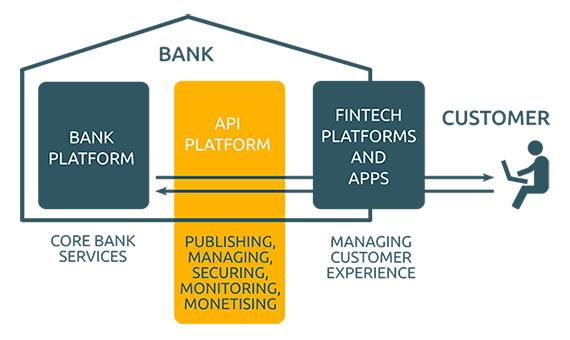

Can APIs Bridge the Gap between Banks and Fintechs?

Fintech companies are forcing banks to go beyond their comfort zone, innovate and accept change as a way of staying in business. With APIs handling the translation between legacy systems and new technologies, fintech companies can focus on providing more value to their clients instead of learning about obsolete systems. Adopting a client-centric vision helps both banks and fintech companies fulfill their goals. For example, a bank may not offer its corporate clients the ability to compare their yearly financial results with the industry average, but a fintech company can make that its value proposition and, by cooperating with the bank through an API, help those clients learn more about their results. For the bank, it doesn't make sense to create such a niche service, while the fintech's algorithm is useless without the proper big data input. International organizations and forums support this collaboration between banks and fintech companies since it brings added value to the client.

Cloud firms need $1bn datacentre investment a quarter to compete with AWS and co

“If companies can’t find at least a billion dollars per quarter for datacentre investments and back that up with an aggressive long-term management focus, then the best they can achieve is a tier-two status or a niche market position,” he said. Dinsdale’s comments coincide with the publication of Synergy’s research into how much capital expenditure (capex) the hyperscale cloud firms pumped into their operations in 2017. Its findings are based on an analysis of the capex and datacentre footprint of the world’s 24 biggest cloud and internet service firms. This reveals that the hyperscale community collectively spent $75bn in capex during 2017, which is 19% up on the previous year. Of that $75bn, $22bn was paid out in the fourth quarter alone. Most of the capex is channeled towards helping the hyperscale cloud firms expand and upgrade their datacentres, with Amazon, Apple, Facebook, Google and Microsoft name-checked by Synergy as the five biggest spenders, together accounting for more than 70% of capex in the fourth quarter.
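The Synergy figures can be cross-checked with simple arithmetic. This sketch uses the rounded figures as reported ($75bn, 19% growth, $22bn in Q4, "more than 70%" for the top five). The derived 2016 total and the per-firm average are approximations computed here, not figures published by Synergy.

```python
# Sanity-check arithmetic on the reported hyperscale capex figures.
# Inputs are the rounded numbers from the report; outputs are approximations.

CAPEX_2017_BN = 75.0     # total 2017 capex, $bn
GROWTH_2017 = 0.19       # "19% up on the previous year"
Q4_2017_BN = 22.0        # capex in Q4 2017, $bn
TOP5_Q4_SHARE = 0.70     # "more than 70%", so the top-five results are lower bounds

capex_2016_bn = CAPEX_2017_BN / (1 + GROWTH_2017)   # implied prior-year total
q4_share_of_year = Q4_2017_BN / CAPEX_2017_BN        # Q4 as a fraction of 2017
top5_q4_min_bn = Q4_2017_BN * TOP5_Q4_SHARE          # floor for the top five in Q4
avg_top5_q4_min_bn = top5_q4_min_bn / 5              # average per top-five firm in Q4

print(f"Implied 2016 capex: ~${capex_2016_bn:.1f}bn")
print(f"Q4 share of 2017:   {q4_share_of_year:.1%}")
print(f"Top five in Q4:     > ${top5_q4_min_bn:.1f}bn")
print(f"Top-five average:   > ${avg_top5_q4_min_bn:.1f}bn each (vs the $1bn/quarter bar)")
```

On these numbers, the top five averaged roughly three times Dinsdale's $1bn-per-quarter threshold in Q4 2017, which illustrates how high the bar is for would-be competitors.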

The Banking Industry Sorely Underestimates The Impact of Digital Disruption

Many organizations associate being a ‘Digital Bank’ with the development and deployment of their mobile banking application. Others look at digital transformation from a sales or marketing perspective. The reality is that digital transformation goes beyond the way a financial services organization deploys its services across digital devices. By 2025, more than 20 billion devices are expected to be connected, but the real power of these connections comes from the insight they produce. Using this data, combined with advanced analytics, can change the level of back-office automation, connectivity, decision making and existing business models. “Lacking a clear definition of digital, companies will struggle to connect digital strategy to their business, leaving them adrift in the fast-churning waters of digital adoption and change,” states McKinsey. “What’s happened with the smartphone over the past ten years should haunt everyone - since no industry will be immune.”

AI will create new jobs but skills must shift, say tech giants

“For sure there is some shift in the jobs. There’s lots of jobs which will. Think about flight attendant jobs before there was planes and commercial flights. ... So there are jobs which will be appearing of that type that are related to the AI,” he said. “I think the topic is a super important topic. How jobs and AI is related: I don’t think it’s one company or one country which can solve it alone. It’s all together we could think about this topic,” he added. “But it’s really an opportunity, it’s not a threat.” “From IBM’s perspective we firmly believe that every profession will be impacted by AI. There’s no question. We also believe that there will be more jobs created,” chimed in Bob Lord, IBM’s chief digital officer. “I firmly believe that augmenting someone’s intelligence is going to get rid of… the mundane jobs. And allow us to rise up a level. That we haven’t been able to do before and solve some really, really hard problems.”

How to build skills that stay relevant instead of chasing the latest tech trends

Knowledge about core functions of the software would eventually be available from a broad pool of people, driving down wages unless you were willing to participate in the "arms race" of always learning the latest and greatest. What quickly became apparent was that the people who succeeded in this area were those who were the most adaptable and able to sense where the market was going, so they could retool their skillset based on what was hot at any given time. The individual who was a supply chain specialist a couple of years ago might now be an accounts payable expert, based on the demand for a particular skillset. These individuals had developed a core talent, the ability to sense where the market for this software package was going, and combined it with an ability to rapidly learn and apply the new technical elements of that software. While those focused on deepening their skills saw the market pass them by, the talent-focused individuals happily abandoned and changed skills in order to stay relevant.

Quote for the day:

"Leaders are more powerful role models when they learn than when they teach." -- Rosabeth Moss Kanter
