Quote for the day:

"Success in almost any field depends more on energy and drive than it does on intelligence. This explains why we have so many stupid leaders." -- Sloan Wilson

Tiny AI: The new oxymoron in town? Not really!

Could SLMs and miniaturised models be the drink that would make today’s AI

small enough to walk through these future doors without AI bumping into

carbon-footprint issues? Would model compression tools like pruning,

quantisation, and knowledge distillation help to lift some weight off the

shoulders of heavy AI backyards? Lightweight models, edge devices that save

compute resources, smaller algorithms that do not put huge stress on AI

infrastructures, and AI that is thin on computational complexity: Tiny AI, as

an AI creation and adoption approach, sounds unusual and promising at the

outset. ... hardware innovations and new approaches to modelling that enable

Tiny AI can significantly ease the compute and environmental burdens of

large-scale AI infrastructures, avers Biswajeet Mahapatra, principal analyst

at Forrester. “Specialised hardware like AI accelerators, neuromorphic chips,

and edge-optimised processors reduces energy consumption by performing

inference locally rather than relying on massive cloud-based models. At the

same time, techniques such as model pruning, quantisation, knowledge

distillation, and efficient architectures like transformers-lite allow smaller

models to deliver high accuracy with far fewer parameters.” ... Tiny AI models

run directly on edge devices, enabling fast, local decision-making by

operating on narrowly optimised datasets and sending only relevant, aggregated

insights upstream, Acharya spells out.
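To make the compression techniques named above a little more concrete, here is a minimal sketch of two of them, magnitude pruning and symmetric 8-bit quantisation, applied to a plain list of weights. The function names and the threshold are illustrative assumptions, not taken from the article or from any particular framework; real toolchains work per tensor or per channel and handle many more details.

```python
def prune_by_magnitude(weights, threshold):
    """Zero out weights whose absolute value is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one shared scale.

    This is the simplest symmetric scheme: scale = max(|w|) / 127.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.003, 0.9]
    pruned = prune_by_magnitude(weights, threshold=0.01)
    q, scale = quantize_int8(pruned)
    print(q, scale, dequantize(q, scale))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half the scale per weight.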

Kali Linux vs. Parrot OS: Which security-forward distro is right for you?

The first thing you should know is that Kali Linux is based on Debian, which

means it has access to the standard Debian repositories, which include a

wealth of installable applications. ... There are also the 600+ preinstalled

applications, most of which are geared toward information gathering,

vulnerability analysis, wireless attacks, web application testing, and more.

Many of those applications include industry-specific modifications, such as

those for computer forensics, reverse engineering, and vulnerability

detection. And then there are the two modes: Forensics Mode for investigation

and "Kali Undercover," which makes the desktop look like Windows. ... Parrot OS (aka

Parrot Security or just Parrot) is another popular pentesting Linux

distribution that operates in a similar fashion. Parrot OS is also based on

Debian and is designed for security experts, developers, and users who

prioritize privacy. It's that last bit you should pay attention to. Yes,

Parrot OS includes a collection of tools similar to Kali Linux's, but it

also offers apps to protect your online privacy. To that end, Parrot is

available in two editions: Security and Home. ... What I like about Parrot OS

is that you have options. If you want to run tests on your network and/or

systems, you can do that. If you want to learn more about cybersecurity, you

can do that. If you want to use a general-purpose operating system that has

added privacy features, you can do that.

Bridging the AI Readiness Gap: Practical Steps to Move from Exploration to Production

To bridge the gap between AI readiness and implementation, organizations can

adopt the following practical framework, which draws from both enterprise

experience and my ongoing doctoral research. The framework centers on four

critical pillars: leadership alignment, data maturity, innovation culture, and

change management. When addressed together, these pillars provide a strong

foundation for sustainable and scalable AI adoption. ... This begins with a

comprehensive, cross-functional assessment across the four pillars of

readiness: leadership alignment, data maturity, innovation culture, and change

management. The goal of this assessment is to identify internal gaps that may

hinder scale and long-term impact. From there, companies should prioritize a

small set of use cases that align with clearly defined business objectives and

deliver measurable value. These early efforts should serve as structured

pilots to test viability, refine processes, and build stakeholder confidence

before scaling. Once priorities are established, organizations must develop an

implementation road map that achieves the right balance of people, processes,

and technology. This road map should define ownership, timelines, and

integration strategies that embed AI into business workflows rather than

treating it as a separate initiative. Technology alone will not deliver

results; success depends on aligning AI with decision-making processes and

ensuring that employees understand its value.

To bridge the gap between AI readiness and implementation, organizations can

adopt the following practical framework, which draws from both enterprise

experience and my ongoing doctoral research. The framework centers on four

critical pillars: leadership alignment, data maturity, innovation culture, and

change management. When addressed together, these pillars provide a strong

foundation for sustainable and scalable AI adoption. ... This begins with a

comprehensive, cross-functional assessment across the four pillars of

readiness: leadership alignment, data maturity, innovation culture, and change

management. The goal of this assessment is to identify internal gaps that may

hinder scale and long-term impact. From there, companies should prioritize a

small set of use cases that align with clearly defined business objectives and

deliver measurable value. These early efforts should serve as structured

pilots to test viability, refine processes, and build stakeholder confidence

before scaling. Once priorities are established, organizations must develop an

implementation road map that achieves the right balance of people, processes,

and technology. This road map should define ownership, timelines, and

integration strategies that embed AI into business workflows rather than

treating it as a separate initiative. Technology alone will not deliver

results; success depends on aligning AI with decision-making processes and

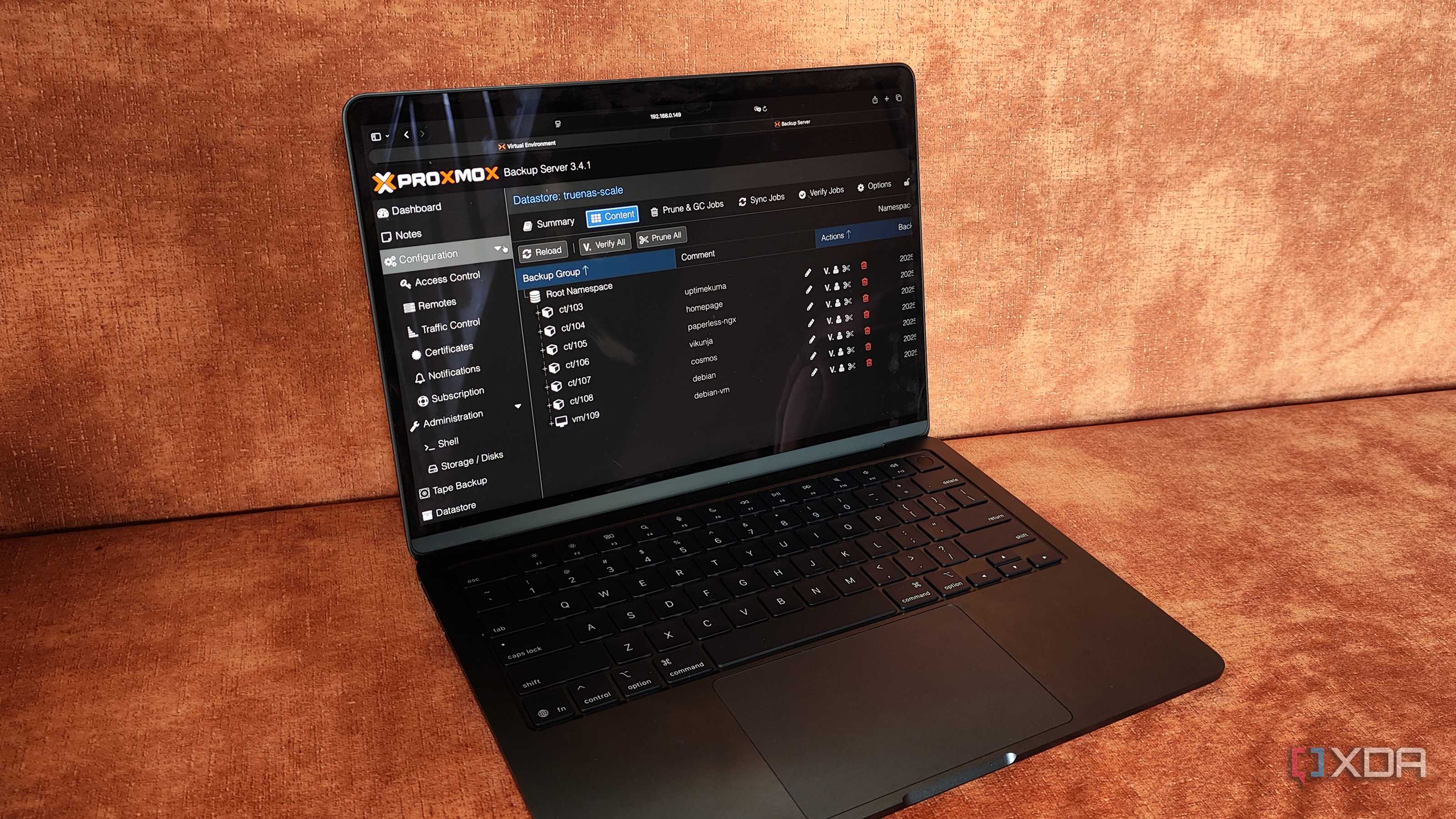

ensuring that employees understand its value. Proxmox's best feature isn't virtualization; it's the backup system

Because backups are integrated into Proxmox instead of being bolted on as some

third-party add-on, setting up and using backups is entirely seamless. Agents

don't need to be configured per instance. No extra management is required, and

no scripts need to be created to handle the running of snapshots and recovery.

The best part about this approach is that it ensures everything will continue

working with each OS update. Backups can be spotted per instance, too, so it's

easy to check how far you can go back and how many copies are available. The

entire backup strategy within Proxmox is snapshot-based, leveraging localised

storage when available. This allows Proxmox to create snapshots of not only

running Linux containers, but also complex virtual machines. They're reliable,

fast, and don't cause unnecessary downtime. But while they're powerful

additions to a hypervised configuration, the backups aren't difficult to use.

This is key since it would render the backups less functional if it proved

troublesome to use them when it mattered most. These backups don't have to use

local storage either. NFS, CIFS, and iSCSI can all be targeted as backup

locations. ... It can also be a mixture of local storage and cloud

services, something we recommend and push for with a 3-2-1 backup strategy.

But using Proxmox's snapshots and built-in tools is one thing, and Proxmox

Backup Server is a whole different ball game. With PBS, we've got

deduplication, incremental backups, compression, encryption, and

verification.
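As a rough illustration of why incremental, deduplicated backups with verification are attractive, here is a toy content-addressed chunk store. It is only a sketch of the general idea under simplifying assumptions (fixed-size chunks, everything in memory); the class and method names are hypothetical, and it does not reflect Proxmox Backup Server's actual format or API.

```python
import hashlib


class ChunkStore:
    """Toy backup store: data is split into chunks keyed by SHA-256 digest."""

    def __init__(self, chunk_size=4):
        self.chunk_size = chunk_size
        self.chunks = {}  # digest -> chunk bytes, shared across all backups

    def backup(self, data):
        """Store data; return (index, number of chunks newly written)."""
        index = []
        new_chunks = 0
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:
                # Only chunks not seen before cost storage (deduplication).
                self.chunks[digest] = chunk
                new_chunks += 1
            index.append(digest)
        return index, new_chunks

    def restore(self, index):
        """Rebuild the original bytes from a backup index."""
        return b"".join(self.chunks[digest] for digest in index)

    def verify(self, index):
        """Check that every referenced chunk exists and matches its digest."""
        return all(
            digest in self.chunks
            and hashlib.sha256(self.chunks[digest]).hexdigest() == digest
            for digest in index
        )


if __name__ == "__main__":
    store = ChunkStore()
    first, written_first = store.backup(b"AAAABBBBCCCC")
    second, written_second = store.backup(b"AAAABBBBDDDD")
    print(written_first, written_second, store.verify(second))
```

The second backup only writes the chunk that changed, which is what keeps repeated backups of a mostly unchanged VM small and fast.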

The Fintech Infrastructure Enabling AI-Powered Financial Services

AI is reshaping financial services faster than most realize. Machine learning

models power credit decisions. Natural language processing handles customer

service. Computer vision processes documents. But there’s a critical

infrastructure layer that determines whether AI-powered financial platforms

actually work for end users: payment infrastructure. The disconnect is

striking. Fintech companies invest millions in AI capabilities: recommendation

engines, fraud detection, personalization algorithms. ... From a technical

standpoint, the integration happens via API. The platform exposes user

balances and transaction authorization through standard REST endpoints. The

card provider handles everything downstream: card issuance logistics,

real-time currency conversion, payment network settlement, fraud detection at

the transaction level, dispute resolution workflows. This architectural

pattern enables fintech platforms to add payment functionality in 8-12 weeks

rather than the 18-24 months required to build from scratch. ... The

compliance layer operates transparently to end users while protecting

platforms from liability. KYC verification happens at multiple checkpoints.

AML monitoring runs continuously across transaction patterns. Reporting

systems generate required documentation automatically. The platform gets

payment functionality without becoming responsible for navigating payment

regulations across dozens of jurisdictions.
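The platform side of the integration described above, exposing balances and answering authorization requests, might look roughly like the following. This is a hypothetical sketch: the class, field names, and reason codes are assumptions for illustration and do not correspond to any real card provider's API. In practice these methods would sit behind REST endpoints that the card provider calls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuthorizationRequest:
    user_id: str
    amount_minor: int  # amount in minor units (e.g. cents) to avoid float errors
    currency: str


class PlatformLedger:
    """In-memory stand-in for the platform's balance and authorization logic."""

    def __init__(self, balances):
        # balances maps (user_id, currency) -> balance in minor units
        self._balances = dict(balances)

    def get_balance(self, user_id, currency):
        return self._balances.get((user_id, currency), 0)

    def authorize(self, request):
        """Approve and hold funds if the user can cover the transaction."""
        if request.amount_minor <= 0:
            return {"approved": False, "reason": "invalid_amount"}
        key = (request.user_id, request.currency)
        if self._balances.get(key, 0) < request.amount_minor:
            return {"approved": False, "reason": "insufficient_funds"}
        self._balances[key] -= request.amount_minor
        return {"approved": True, "reason": "ok"}


if __name__ == "__main__":
    ledger = PlatformLedger({("user-1", "USD"): 5_000})
    print(ledger.authorize(AuthorizationRequest("user-1", 1_250, "USD")))
    print(ledger.get_balance("user-1", "USD"))
```

Everything downstream of this decision (issuance, currency conversion, settlement, disputes) stays with the card provider, which is what keeps the platform's own surface this small.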

Context Engineering for Coding Agents

Context engineering is relevant for all types of agents and LLM usage, of course. My colleague Bharani Subramaniam’s simple definition is: “Context engineering is curating what the model sees so that you get a better result.” For coding agents, there is an emerging set of context engineering approaches and terms. The foundation is the set of configuration features offered by the tools, and the nitty-gritty part is how we conceptually use those features. ... One of the goals of context engineering is to balance the amount of context given: not too little, not too much. Even though context windows have technically gotten really big, that doesn’t mean that it’s a good idea to indiscriminately dump information in there. An agent’s effectiveness goes down when it gets too much context, and too much context is a cost factor as well. Some of this size management is up to the developer: how much context configuration we create, and how much text we put in there. My recommendation would be to build context like rules files up gradually, and not pump too much stuff in there right from the start. ... As I said in the beginning, these features are just the foundation; humans still have to do the actual work of filling them with reasonable context. It takes quite a bit of time to build up a good setup, because you have to use a configuration for a while to be able to say if it’s working well or not. There are no unit tests for context engineering. Therefore, people are keen to share good setups with each other.

Reimagining The Way Organizations Hire Cyber Talent

The way we hire cybersecurity professionals is fundamentally flawed. Employers

post unicorn job descriptions that combine three roles’ worth of

responsibilities into one. Qualified candidates are filtered out by automated

scans or rejected because their resumes don’t match unrealistic expectations.

Interviews are rushed, mismatched, or even faked—literally, in some cases. On

the other side, skilled professionals—many of whom are eager to work—find

themselves lost in a sea of noise, unable to connect with the opportunities that

align with their capabilities and career goals. Add in economic uncertainty, AI

disruption and changing work preferences, and it’s clear the traditional hiring

playbook simply isn’t working anymore. ... Part of fixing this broken system

means rethinking what we expect from roles in the first place. Jones believes

that instead of packing every security function into a single job description

and hoping for a miracle, organizations should modularize their needs. Need a

penetration tester for one month? A compliance SME for two weeks? A security

architect to review your Zero Trust strategy? You shouldn’t have to hire

full-time just to get those tasks done. ... Solving the cybersecurity workforce

challenge won’t come from doubling down on job boards or resume filters. But

organizations may be able to shift things in the right direction by reimagining

the way they connect people to the work that matters—with clarity, flexibility

and mutual trust.

News sites are locking out the Internet Archive to stop AI crawling. Is the ‘open web’ closing?

Publishers claim technology companies have accessed a lot of this content for free and without the consent of copyright owners. Some began taking tech companies to court, claiming they had stolen their intellectual property. High-profile examples include The New York Times’ case against ChatGPT’s parent company OpenAI and News Corp’s lawsuit against Perplexity AI. ... Publishers are also using technology to stop unwanted AI bots accessing their content, including the crawlers used by the Internet Archive to record internet history. News publishers have referred to the Internet Archive as a “back door” to their catalogues, allowing unscrupulous tech companies to continue scraping their content. ... The opposite approach, placing all commercial news behind paywalls, has its own problems. As news publishers move to subscription-only models, people have to juggle multiple expensive subscriptions or limit their news appetite. Otherwise, they’re left with whatever news remains online for free or is served up by social media algorithms. The result is a more closed, commercial internet. This isn’t the first time that the Internet Archive has been in the crosshairs of publishers, as the organisation was previously sued and found to be in breach of copyright through its Open Library project. ... Today’s websites become tomorrow’s historical records. Without the preservation efforts of not-for-profit organisations like the Internet Archive, we risk losing vital records.

Who will be the first CIO fired for AI agent havoc?

As CIOs deploy teams of agents that work together across the enterprise, there’s

a risk that one agent’s error compounds itself as other agents act on the bad

result, he says. “You have an endless loop they can’t get out of,” he adds. Many

organizations have rushed to deploy AI agents because of the fear of missing

out, or FOMO, Nadkarni says. But good governance of agents takes a thoughtful

approach, he adds, and CIOs must consider all the risks as they assign agents to

automate tasks previously done by human employees. ... Lawsuits and fines seem

likely, and plaintiffs will not need new AI laws to file claims, says Robert

Feldman, chief legal officer at database services provider EnterpriseDB. “If an

AI agent causes financial loss or consumer harm, existing legal theories already

apply,” he says. “Regulators are also in a similar position. They can act as

soon as AI drives decisions past the line of any form of compliance and safety

threshold.” ... CIOs will play a big role in figuring out the guardrails, he

adds. “Once the legal action reaches the public domain, boards want answers to

what happened and why,” Feldman says. ... CIOs should be proactive about agent

governance, Osler recommends. They should require proof for sensitive actions

and make every action traceable. They can also put humans in the loop for

sensitive agent tasks, design agents to hand off action when the situation is

ambiguous or risky, and they can add friction to high-stakes agent actions and

make it more difficult to trigger irreversible steps, he says.
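The governance steps recommended above (traceable actions, humans in the loop for sensitive tasks, friction on high-stakes steps) can be sketched as a small gate that every agent action passes through. The class name, the list of sensitive actions, and the approver callback are all hypothetical, chosen only to illustrate the pattern; a real deployment would persist the audit log and route approvals to actual people.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ActionGate:
    """Wraps agent actions with an audit trail and a human approval step."""

    # Called for sensitive actions; returns True only if a human approves.
    approver: Callable[[str, dict], bool]
    sensitive_actions: frozenset = frozenset({"delete_records", "transfer_funds"})
    audit_log: list = field(default_factory=list)

    def run(self, agent_id, action, params, handler):
        needs_approval = action in self.sensitive_actions
        approved = self.approver(action, params) if needs_approval else True
        # Every attempt is recorded, whether or not it is allowed to proceed.
        self.audit_log.append({
            "agent_id": agent_id,
            "action": action,
            "params": dict(params),
            "needs_approval": needs_approval,
            "approved": approved,
        })
        if not approved:
            return None  # hand off instead of acting
        return handler(**params)


if __name__ == "__main__":
    gate = ActionGate(approver=lambda action, params: False)
    print(gate.run("agent-1", "send_report", {"to": "ops"},
                   lambda to: f"sent to {to}"))
    print(gate.run("agent-1", "transfer_funds", {"amount": 100},
                   lambda amount: amount))
    print(len(gate.audit_log))
```

Routine actions run unimpeded, while anything on the sensitive list stops until a person signs off, and both paths leave a record a board can later inspect.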

