Quote for the day:

“The greatest leader is not necessarily the one who does the greatest things. He is the one that gets the people to do the greatest things.” -- Ronald Reagan

Rethinking Network Operations For Cloud Repatriation

Repatriation introduces significant network challenges, further amplified by

the adoption of disruptive technologies like SDN, SD-WAN, SASE and the rapid

integration of AI/ML, especially at the edge. While beneficial, these

technologies add complexity to network management, particularly in areas such

as traffic routing, policy enforcement, and handling the unpredictable

workloads generated by AI. ... Managing a hybrid environment spanning

on-premises and public cloud resources introduces inherent complexity. Network

teams must navigate diverse technologies, integrate disparate tools and

maintain visibility across a distributed infrastructure. On-premises networks

often lack the dynamic scalability and flexibility of cloud environments.

Absorbing repatriated workloads further complicates existing infrastructure,

making monitoring and troubleshooting more challenging. ... Repatriated

workloads introduce potential security vulnerabilities if not seamlessly

integrated into existing security frameworks. On-premises security stacks not

designed for the increased traffic volume previously handled by SASE services

can introduce latency and performance bottlenecks. Adjustments to SD-WAN

routing and policy enforcement may be necessary to redirect traffic to

on-premises security resources.

For the AI era, it’s time for BYOE: Bring Your Own Ecosystem

We can no longer limit user access to one or two devices — we must address the

entire ecosystem. Instead of forcing users down a single, constrained path,

security teams need to acknowledge that users will inevitably venture into

unsafe territory, and focus on strengthening the security of the broader

environment. In 2015, we as security practitioners could get by with placing

“do not walk on the grass” signs and ushering users down manicured pathways.

In 2025, we need to create more resilient grass. ... The risk extends beyond

basic access. Forty percent of employees download customer data to personal

devices, while 33% alter sensitive data, and 31% approve large financial

transactions. And, most alarming, 63% use personal accounts on their work

laptops — most commonly Google — to share work files and create documents,

effectively bypassing email filtering and data loss prevention (DLP) systems.

... Browser-based access exposes users to risks from malicious plugins,

extensions and post-authentication compromise, while the increasing reliance

on SaaS applications creates opportunities for supply chain attacks. Personal

accounts serve as particularly vulnerable entry points, allowing threat actors

to leverage compromised credentials or stolen authentication tokens to

infiltrate corporate networks.

DARPA continues work on technology to combat deepfakes

The rapid evolution of generative AI presents a formidable challenge in the

arms race between deepfake creators and detection technologies. As AI-driven

content generation becomes more sophisticated, traditional detection

mechanisms are at risk of quickly becoming obsolete. Deepfake detection relies

on training machine learning models on large datasets of genuine and

manipulated media, but the scarcity of diverse and high-quality datasets can

impede progress. Limited access to comprehensive datasets has made it

difficult to develop robust detection systems that generalize across various

media formats and manipulation techniques. To address this challenge, DARPA

puts a strong emphasis on interdisciplinary collaboration. By partnering with

institutions such as SRI International and PAR Technology, DARPA leverages

cutting-edge expertise to enhance the capabilities of its deepfake detection

ecosystem. These partnerships facilitate the exchange of knowledge and

technical resources that accelerate the refinement of forensic tools. DARPA’s

open research model also allows diverse perspectives to converge, fostering

rapid innovation and adaptation in response to emerging threats. Deepfake

detection also faces significant computational challenges. Training deep

neural networks to recognize manipulated media requires extensive processing

power and large-scale data storage.

AI Agents: Future of Automation or Overhyped Buzzword?

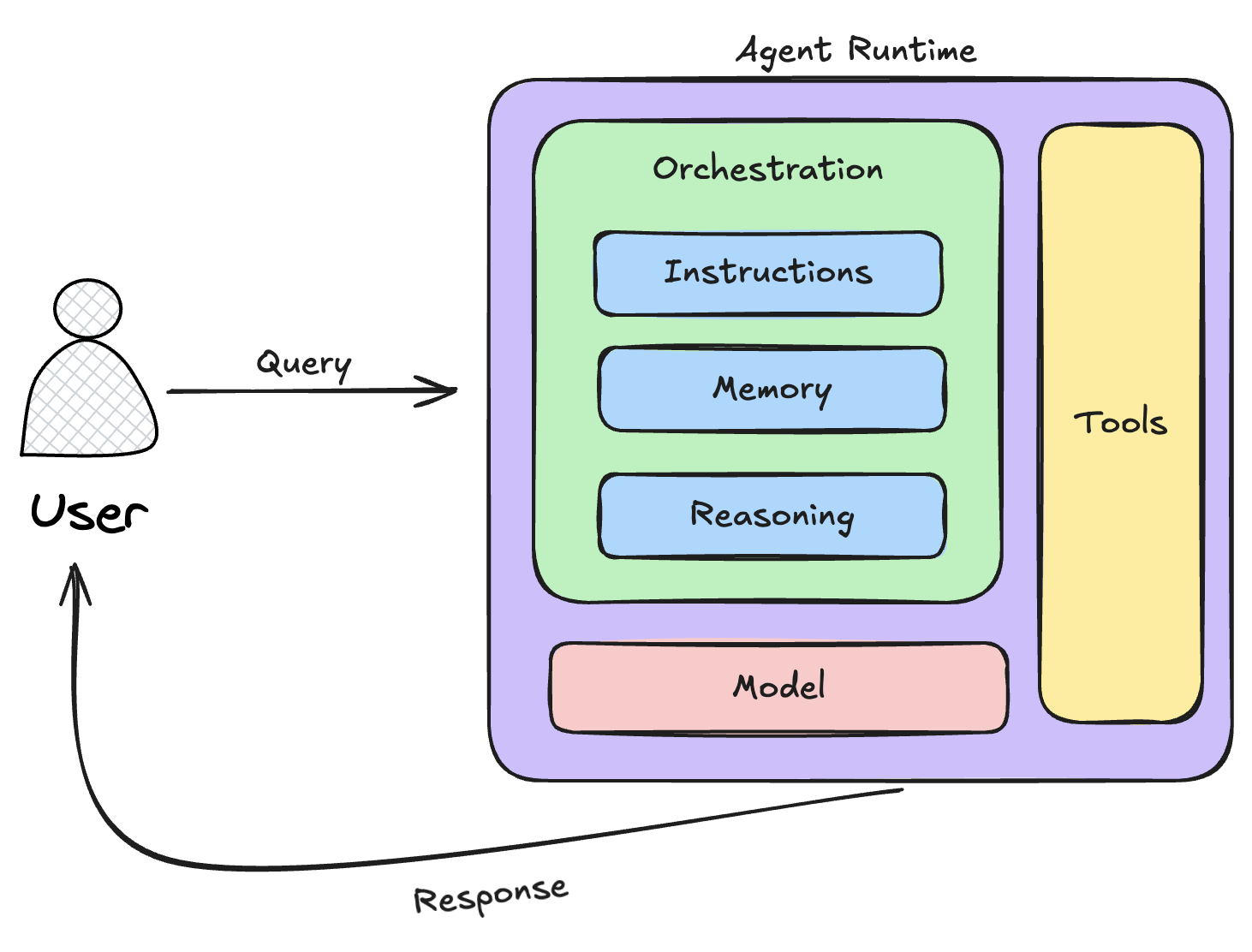

AI agents are not just an evolution of AI; they are a fundamental shift in IT

operations and decision-making. These agents are being increasingly integrated

into Predictive AIOps, where they autonomously manage, optimize, and

troubleshoot systems without human intervention. Unlike traditional

automation, which follows pre-defined scripts, AI agents dynamically predict,

adapt, and respond to system conditions in real time. ... AI agents are

transforming IT management and operational resilience. Instead of just

replacing workflows, they now optimize and predict system health,

automatically mitigating risks and reducing downtime. Whether it's

self-repairing IT infrastructure, real-time cybersecurity monitoring, or

orchestrating distributed cloud environments, AI agents are pushing technology

toward self-governing, intelligent automation. ... The future of AI agents is

both thrilling and terrifying. Companies are investing in large action models

— next-gen AI that doesn’t just generate text but actually does things. We’re

talking about AI that can manage entire business processes or run a company’s

operations without human intervention. ... AI agents aren’t just another

tech buzzword — they represent a fundamental shift in how AI interacts with

the world. Sure, we’re still in the early days, and there’s a lot of fluff in

the market, but make no mistake: AI agents will change the way we work, live,

and do business.

Optimizing Cloud Security: Managing Sprawl, Technical Debt, and Right-Sizing Challenges

Technical debt is the implied cost of future IT infrastructure rework caused

by choosing expedient IT solutions like shortcuts, software patches or

deferred IT upgrades over long-term, sustainable designs. It’s easily accrued

when under pressure to innovate quickly but leads to waste and security gaps

and vulnerabilities that compromise an organization’s integrity, making

systems more susceptible to cyber threats. Technical debt can also be costly

to eradicate, with companies spending an average of 20-40% of their IT budgets

on addressing it. ... Cloud sprawl refers to the uncontrolled proliferation of

cloud services, instances, and resources within an organization. It often

results from rapid growth, lack of visibility, and decentralized

decision-making. At Surveil, we have over 2.5 billion data points to lean on

to identify trends and we know that organizations with unmanaged cloud

environments can see up to 30% higher cloud costs due to redundant and idle

resources. Unchecked cloud sprawl can lead to increased security

vulnerabilities due to unmanaged and unmonitored resources. ... Right-sizing

involves aligning IT resources precisely with the demands of applications or

workloads to optimize performance and cost. Our data shows that organizations

that effectively right-size their IT estate can reduce cloud costs by up to

40%, unlocking business value to invest in other business priorities.
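
As a rough illustration of the right-sizing idea described above, the sketch below flags instances whose peak utilization stays well under capacity and estimates the saving from moving them to a smaller size. The instance records, the 40% utilization cutoff, and the 50% downsize factor are all hypothetical values chosen for the example; they are not Surveil's method or data.

```python
def rightsizing_candidates(instances, max_peak_cpu=0.4):
    """Return names of instances whose peak CPU stays below the cutoff.

    The 0.4 cutoff is an illustrative assumption, not an industry standard.
    """
    return [i["name"] for i in instances if i["peak_cpu"] < max_peak_cpu]


def projected_monthly_saving(instances, candidates, downsize_factor=0.5):
    """Estimate the saving if each candidate moves to a size costing
    (1 - downsize_factor) of its current price (hypothetical pricing)."""
    return sum(
        i["monthly_cost"] * downsize_factor
        for i in instances
        if i["name"] in candidates
    )


# Hypothetical inventory: name, monthly cost, and peak CPU as a fraction.
inventory = [
    {"name": "web-1", "monthly_cost": 400.0, "peak_cpu": 0.25},
    {"name": "db-1", "monthly_cost": 900.0, "peak_cpu": 0.85},
    {"name": "batch-1", "monthly_cost": 300.0, "peak_cpu": 0.10},
]

candidates = rightsizing_candidates(inventory)
print(candidates)                                       # ['web-1', 'batch-1']
print(projected_monthly_saving(inventory, candidates))  # 350.0
```

A real assessment would likely also weigh memory, I/O, and seasonal peaks before resizing anything.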

How businesses can avoid a major software outage

Software bugs and bad code releases are common culprits behind tech outages.

These issues can arise from errors in the code, insufficient testing, or

unforeseen interactions among software components. Moreover, the complexity of

modern software systems exacerbates the risk of outages. As applications

become more interconnected, the potential for failures increases. A seemingly

minor bug in one component can have far-reaching consequences, potentially

bringing down entire systems or services. ... The impact of backup failures

can be particularly devastating as they often come to light during already

critical situations. For instance, a healthcare provider might lose access to

patient records during a primary system failure, only to find that their

backup data is incomplete or corrupted. Such scenarios underscore the

importance of not just having backup systems, but ensuring they are fully

functional, up-to-date, and capable of meeting the organization's recovery

needs. ... Human error remains one of the leading causes of tech outages. This

can include mistakes made during routine maintenance, misconfigurations, or

accidental deletions. In high-pressure environments, even experienced

professionals can make errors, especially when dealing with complex systems or

tight deadlines.

Serverless was never a cure-all

Serverless architectures were originally promoted as a way for developers to

rapidly deploy applications without the hassle of server management. The allure

was compelling: no more server patching, automatic scalability, and the ability

to focus solely on business logic while lowering costs. This promise resonated

with many organizations eager to accelerate their digital transformation

efforts. Yet many organizations adopted serverless solutions without fully

understanding the implications or trade-offs. It became evident that while

server management may have been alleviated, developers faced numerous

complexities. ... The pay-as-you-go model appears attractive for intermittent

workloads, but it can quickly spiral out of control if an application operates

under unpredictable traffic patterns or contains many small components. The

requirement for scalability, while beneficial, also necessitates careful budget

management—this is a challenge if teams are unprepared to closely monitor usage.

... Locating the root cause of issues across multiple asynchronous components

becomes more challenging than in traditional, monolithic architectures.

Developers often spent the time they saved from server management struggling to

troubleshoot these complex interactions, undermining the operational

efficiencies serverless was meant to provide.

AI Is Improving Medical Monitoring and Follow-Up

Artificial intelligence technologies have shown promise in managing some of the

worst inefficiencies in patient follow-up and monitoring. From automated

scheduling and chatbots that answer simple questions to review of imaging and

test results, a range of AI technologies promise to streamline unwieldy

processes for both patients and providers. ... Adherence to medication regimens

is essential for many health conditions, both in the wake of acute health events

and over time for chronic conditions. AI programs can both monitor whether

patients are taking their medication as prescribed and urge them to do so with

programmed notifications. Feedback gathered by these programs can indicate the

reasons for non-adherence and help practitioners to devise means of addressing

those problems. ... Using AI to monitor the vital signs of patients suffering

from chronic conditions may help to detect anomalies -- and indicate adjustments

that will stabilize them. Keeping regular tabs on key indicators of health such

as blood pressure, blood sugar, and respiration can establish a baseline and

flag fluctuations that require follow-up treatment. These systems draw on both

personal and demographic data, such as age and sex, and compare it with

available data on similar patients.
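
The baseline-and-flag approach described above can be sketched in a few lines. This is a minimal illustration only: it assumes the first few readings form the patient's baseline and uses a simple standard-deviation threshold. The function name, window size, and threshold are hypothetical, and nothing here reflects a clinically validated method.

```python
from statistics import mean, stdev


def flag_anomalies(readings, baseline_size=5, threshold=3.0):
    """Return indices of readings that deviate from the baseline mean by
    more than `threshold` sample standard deviations.

    The first `baseline_size` readings are treated as the baseline.
    """
    baseline = readings[:baseline_size]
    mu = mean(baseline)
    sigma = stdev(baseline)
    flagged = []
    for idx in range(baseline_size, len(readings)):
        deviation = abs(readings[idx] - mu)
        if sigma == 0:
            # A perfectly flat baseline: any change at all is flagged.
            if deviation > 0:
                flagged.append(idx)
        elif deviation / sigma > threshold:
            flagged.append(idx)
    return flagged


# Hypothetical systolic blood pressure readings (mmHg).
systolic = [120, 122, 118, 121, 119, 120, 123, 150, 121]
print(flag_anomalies(systolic))  # [7]
```

A production system would presumably also compare against cohort data for similar patients, as the article notes, rather than relying on a single patient's history.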

IT infrastructure complexity hindering cyber resilience

Given the rapid evolution of cyber threats and continuous changes in corporate

IT environments, failing to update and test resilience plans can leave

businesses exposed when attacks or major outages occur. The importance of

integrating cyber resilience into a broader organizational resilience strategy

cannot be overstated. With cybersecurity now fundamental to business operations,

it must be considered alongside financial, operational, and reputational risk

planning to ensure continuity in the face of disruptions. ... Leaders also

expect to face adversity in the near future with 60% anticipating a significant

cybersecurity failure within the next six months, which reflects the sheer

volume of cyber attacks as well as a growing recognition that cloud services are

not immune to disruptions and outages. ... First and most importantly, it

removes IT and cybersecurity complexity–the key impediment to enhancing cyber

resilience. Eliminating traditional security dependencies such as firewalls and

VPNs not only reduces the organization’s attack surface, but also streamlines

operations, cuts infrastructure costs, and improves IT agility. ... The second

big win is the inability of attackers to move laterally should a compromise at

an endpoint occur. Users are verified and given the lowest privileges necessary

each time they access a corporate resource, meaning ransomware and other

data-stealing threats are far less of a concern.

Is subscription-based networking the future?

There are several factors making NaaS an attractive proposition. One of the most

significant is the growing demand for flexibility. Traditional networking models

often require upfront investments and long-term commitments, which are

restrictive for organisations that need to scale their infrastructure quickly or

adapt to changing needs. In contrast, a subscription model allows businesses to

pay only for what they use, making it easier to adjust capacity and features as

needed. Cost efficiency is another big driver. With networking delivered as a

service, organisations can move away from large capital expenditures toward

predictable, operational costs. This helps IT teams manage budgets more

effectively while reducing the need to maintain and upgrade hardware. It also

enables companies to access new technologies without costly refresh cycles.

Security and compliance are becoming increasingly complex, especially for

companies handling sensitive data. NaaS solutions often come with built-in

security updates, compliance tools, and proactive monitoring, helping businesses

stay ahead of emerging threats. Instead of managing security in-house, IT teams

can rely on service providers to ensure their networks remain protected and up

to date. Additionally, the rise of cloud computing and hybrid work has

accelerated the need for more agile and scalable networking solutions.
