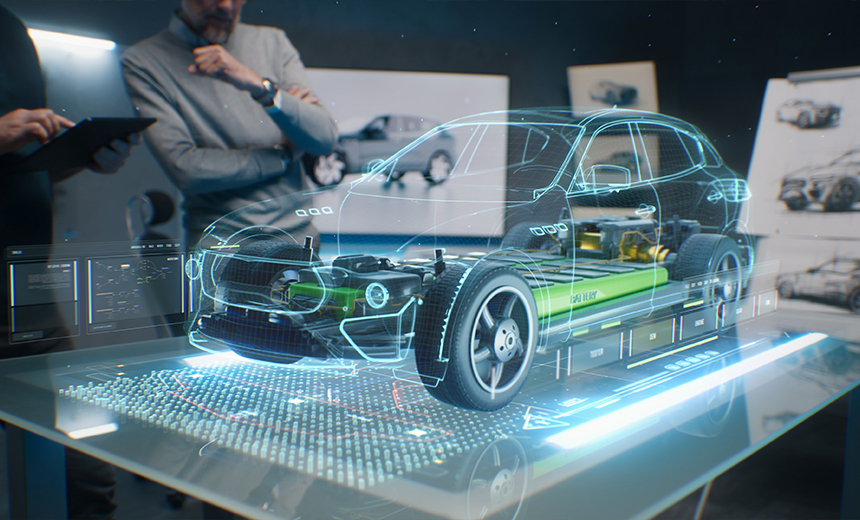

What Does the Car of the Future Look Like?

Enabled with IoT, the vehicles stay in sync with their environments. The

ConnectedDrive feature, for example, enables predictive maintenance by using IoT

sensors to monitor vehicle health and performance in real time and notify

drivers about upcoming maintenance needs. IoT also paves the way for

vehicle-to-everything, or V2X, communication, which enables BMW cars to

interact with traffic lights, road signs and other vehicles. But a smart car is

more than just internet-connected. ... The next leap in sensor technology is

quantum sensing. Image generation systems based on infrared, ultrasound and

radar are already in use. But with multisensory systems, BMW vehicles will not

only be able to detect potential hazards more accurately but also predict and

prevent damage - a capability crucial for automated and autonomous driving

systems. These sensors will allow vehicles to "feel" their surroundings,

enabling more refined surface control and the ability to perform complex tasks,

such as the automated assembly of intricate components. Predictive maintenance,

powered by multisensory input, will serve as an early warning system in

production, reducing downtime.

NIST Cybersecurity Framework (CSF) and CTEM – Better Together

CSF's core functions align well with the CTEM approach, which involves

identifying and prioritizing threats, assessing the organization's vulnerability

to those threats, and continuously monitoring for signs of compromise. Adopting

CTEM empowers cybersecurity leaders to significantly mature their organization's

NIST CSF compliance. Prior to CTEM, periodic vulnerability assessments and

penetration testing to find and fix vulnerabilities were considered the gold

standard for threat exposure management. The problem was, of course, that these

methods only offered a snapshot of security posture – one that was often

outdated before it was even analyzed. CTEM has come to change all this. The

program delineates how to achieve continuous insights into the organizational

attack surface, proactively identifying and mitigating vulnerabilities and

exposures before attackers exploit them. To make this happen, CTEM programs

integrate advanced tech like exposure assessment, automated security

validation, attack surface management, and risk prioritization.
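
To make the risk prioritization step concrete, here is a minimal sketch. The `Exposure` fields, the multiplicative scoring formula, and the sample data are all assumptions made for illustration; neither CTEM nor NIST CSF prescribes a particular formula.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    """A hypothetical exposure record; every field is illustrative."""
    name: str
    severity: float           # 0-10, e.g. a CVSS-like base score
    exploitability: float     # 0-1, how likely an attacker can reach and use it
    asset_criticality: float  # 0-1, business importance of the affected asset


def risk_score(e: Exposure) -> float:
    # Assumed formula: severity weighted by reachability and business impact.
    return e.severity * e.exploitability * e.asset_criticality


def prioritize(exposures: list[Exposure]) -> list[Exposure]:
    # Highest risk first, so remediation effort goes where it matters most.
    return sorted(exposures, key=risk_score, reverse=True)


exposures = [
    Exposure("legacy VPN gateway", severity=9.8, exploitability=0.9, asset_criticality=0.8),
    Exposure("internal wiki XSS", severity=6.1, exploitability=0.3, asset_criticality=0.2),
    Exposure("exposed storage bucket", severity=7.5, exploitability=0.7, asset_criticality=0.9),
]

for e in prioritize(exposures):
    print(f"{e.name}: {risk_score(e):.2f}")
```

In a continuous program, a ranking like this would be recomputed whenever the attack surface inventory changes rather than once per assessment cycle.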

Leveling Up to Responsible AI Through Simulations

This simulation highlighted the challenges and opportunities involved in

embedding responsible AI practices within Agile development environments. The

lessons learned from this exercise are clear: expertise, while essential, must

be balanced with cross-disciplinary collaboration; incentives need to be aligned

with ethical outcomes; and effective communication and documentation are crucial

for ensuring accountability. Moving forward, organizations must prioritize the

development of frameworks and cultures that support responsible AI. This

includes creating opportunities for ongoing education and reflection, fostering

environments where diverse perspectives are valued, and ensuring that all

stakeholders—from engineers to policymakers—are equipped and incentivized to

navigate the complexities of responsible Agile AI development. Simulations like

the one we conducted are a valuable tool in this effort. By providing a

realistic, immersive experience, they help professionals from diverse

backgrounds understand the challenges of responsible AI development and prepare

them to meet these challenges in their own work. As AI continues to evolve and

become increasingly integrated into our lives, the need for responsible

development practices will only grow.

What software supply chain security really means

Upon reflection, the “supply chain” aspect of software supply chain security

suggests the crucial ingredient of an improved definition. Software producers,

like manufacturers, have a supply chain. And software producers, like

manufacturers, require inputs and then perform a manufacturing process to build

a finished product. In other words, a software producer uses components,

developed by third parties and themselves, and technologies to write, build, and

distribute software. A vulnerability or compromise of this chain, whether done

via malicious code or via the exploitation of an unintentional vulnerability, is

what defines software supply chain security. I should mention that a similar,

rival data set maintained by the Atlantic Council uses this broader

definition. I admit to still having one general reservation about this

definition: It can feel like software supply chain security subsumes all of

software security, especially the sub-discipline often called application

security. When a developer writes a buffer overflow in the open source software

library your application depends upon, is that application security? Yep! Is

that also software supply chain security?

Data privacy and security in AI-driven testing

As the technology has become more accepted and widespread, the focus has

shifted from disbelief in its capabilities to a deep concern for how it

handles sensitive data. At Typemock, we’ve adapted to this shift by ensuring

that our AI-driven tools not only deliver powerful testing capabilities but

also prioritize data security at every level. ... While concerns about IP

leakage and data permanence are significant today, there is a growing shift in

how people perceive data sharing. Just as people now share everything online,

often too loosely in my opinion, there is a gradual acceptance of data sharing

in AI-driven contexts, provided it is done securely and transparently. Greater

Awareness and Education: In the future, as people become more educated about

the risks and benefits of AI, the fear surrounding data privacy may diminish.

However, this will also require continued advancements in AI security measures

to maintain trust. Innovative Security Solutions: The evolution of AI

technology will likely bring new security solutions that can better address

concerns about data permanence and IP leakage. These solutions will help

balance the benefits of AI-driven testing with the need for robust data

protection.

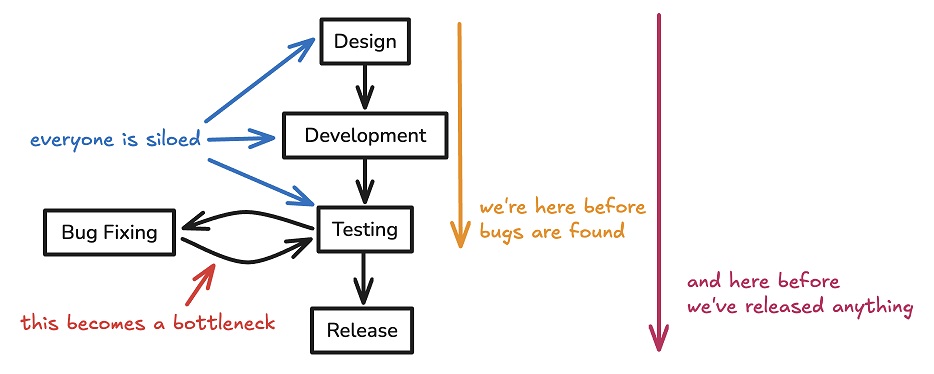

QA's Dead: Where Do We Go From Here?

Developers are now the first line of quality control. This is possible through

two initiatives. First, iterative development. Agile methodologies mean teams

now work in short sprints, delivering functional software more frequently.

This allows for continuous testing and feedback, catching issues earlier in

the process. It also means that quality is no longer a final checkpoint but an

ongoing consideration throughout the development cycle. Second, tooling.

Automated testing frameworks, CI/CD pipelines, and code quality tools have

allowed developers to take on more quality control responsibilities without

risking burnout. These tools allow for instant feedback on code quality,

automated testing on every commit, and integration of quality checks into the

development workflow. ... The first opportunity is down the stack, moving into

more technical roles. QA professionals can leverage their quality-focused

mindset to become automation specialists or DevOps engineers. Their expertise

in thorough testing can be crucial in developing robust, reliable automated

test suites. The concept that "flaky tests are worse than no tests" becomes

even more critical when the tests are all that stop an organization from

shipping low-quality code.
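
One way to act on the "flaky tests" point is to rerun each test several times and flag any test whose outcome changes between runs. The sketch below assumes tests are plain callables that raise `AssertionError` on failure; the `classify_test` helper and the sample tests are hypothetical, not part of any particular framework.

```python
from typing import Callable


def classify_test(test: Callable[[], None], runs: int = 5) -> str:
    """Run a test several times and report 'pass', 'fail', or 'flaky'."""
    outcomes = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add(True)
        except AssertionError:
            outcomes.add(False)
    if outcomes == {True}:
        return "pass"
    if outcomes == {False}:
        return "fail"
    return "flaky"  # mixed results: the test cannot be trusted as a release gate


def stable_test() -> None:
    assert 1 + 1 == 2


calls = {"n": 0}


def state_dependent_test() -> None:
    # Deterministic stand-in for flakiness: fails on every second call.
    calls["n"] += 1
    assert calls["n"] % 2 == 1


print(classify_test(stable_test))
print(classify_test(state_dependent_test))
```

A test classified as flaky would typically be quarantined and fixed rather than left in the pipeline, where it either blocks good commits or trains the team to ignore red builds.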

Serverless Is Trending Again in Modern Application Development

A better definition has emerged as serverless becomes a path to developer

productivity. The term "serverless" was always a misnomer and, even among end

users and vendors, tended to mean different things depending on product and

use case. Just as the cloud is someone else's computer, serverless is still

someone else's server. Today, things are much clearer. A serverless

application is a software component that runs inside of an environment that

manages the underlying complexity of deployment, runtimes, protocols, and

process isolation so that developers can focus on their code. Enterprise

success stories delivered proven, repeatable use case solutions. The initial

hype around serverless centered on fast development cycles and back-end

use cases where serverless functions acted as the glue between disparate cloud

services. ... Since then, we've seen many more enterprise customers taking

advantage of serverless. An expanded ecosystem of ancillary services drives

emerging use cases. The core use case of serverless remains building

lightweight, short-running ephemeral functions.
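
A lightweight, short-running function of this kind might look like the sketch below. The `handler(event, context)` shape follows a convention common on function-as-a-service platforms; the event fields and the response format are assumptions for illustration and will differ by provider.

```python
import json


def handler(event: dict, context: object = None) -> dict:
    """A stateless function: read the input, do one thing, return a result."""
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation; on a real platform the runtime would call handler() itself.
response = handler({"name": "serverless"})
print(response["statusCode"], response["body"])
```

The function keeps no state between calls, which is what lets the platform start, scale, and discard instances without the developer managing deployment or process isolation.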

New AI standards group wants to make data scraping opt-in

The Dataset Providers Alliance, a trade group formed this summer, wants to

make the AI industry more standardized and fair. To that end, it has just

released a position paper outlining its stances on major AI-related issues.

The alliance is made up of seven AI licensing companies, including music

copyright-management firm Rightsify, Japanese stock-photo marketplace Pixta,

and generative-AI copyright-licensing startup Calliope Networks. ... The DPA

advocates for an opt-in system, meaning that data can be used only after

consent is explicitly given by creators and rights holders. This represents a

significant departure from the way most major AI companies operate. Some have

developed their own opt-out systems, which put the burden on data owners to

pull their work on a case-by-case basis. Others offer no opt-outs whatsoever.

The DPA, which expects members to adhere to its opt-in rule, sees that route

as the far more ethical one. “Artists and creators should be on board,” says

Alex Bestall, CEO of Rightsify and the music-data-licensing company Global

Copyright Exchange, who spearheaded the effort. Bestall sees opt-in as a

pragmatic approach as well as a moral one: “Selling publicly available

datasets is one way to get sued and have no credibility.”
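
The difference between the two consent models can be shown in a few lines. This is a sketch only: the `Work` record and its three-state `consent` field are hypothetical and do not come from the DPA position paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Work:
    title: str
    # True = opted in, False = opted out, None = the owner was never asked
    consent: Optional[bool] = None


def usable_under_opt_in(works: list[Work]) -> list[Work]:
    # Opt-in: only works with explicit consent may be used.
    return [w for w in works if w.consent is True]


def usable_under_opt_out(works: list[Work]) -> list[Work]:
    # Opt-out: everything is usable unless the owner explicitly refused.
    return [w for w in works if w.consent is not False]


catalog = [Work("Song A", True), Work("Photo B", None), Work("Essay C", False)]

print([w.title for w in usable_under_opt_in(catalog)])
print([w.title for w in usable_under_opt_out(catalog)])
```

The two models diverge only on works whose owners were never asked: opt-in excludes them by default, while opt-out includes them until the owner objects.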

AI potential outweighs deepfake risks only with effective governance: UN

“AI must serve humanity equitably and safely,” Guterres says. “Left unchecked,

the dangers posed by artificial intelligence could have serious implications

for democracy, peace and stability. Yet, AI has the potential to promote and

enhance full and active public participation, equality, security and human

development. To seize these opportunities, it is critical to ensure effective

governance of AI at all levels, including internationally.” ... The flurry of

laws also concerns worker protections – which in Hollywood means protecting

actors and voice actors from being replaced with deepfake AI clones. Per AP,

the measure mirrors language in the deal SAG-AFTRA made with movie studios

last December. The state is also to consider imposing penalties on those who

clone the dead without obtaining consent from the deceased’s estate – a

bizarre but very real concern, as late celebrities begin popping up in studio

films. ... If you find yourself suffering from deepfake despair, Siddharth

Gandhi is here to remind you that there are remedies. Writing in ET Edge, the

COO of 1Kosmos for Asia Pacific says strong security is possible by pairing

liveness detection with device-based algorithmic systems that can detect

injection attacks in real-time.

Red Hat delivers AI-optimized Linux platform

RHEL AI helps enterprises get away from the “one model to rule them all”

approach to generative AI, which is not only expensive but can lock

enterprises into a single vendor. There are now open-source large language

models available that rival those available from the commercial vendors in

performance. “And there are smaller models,” Katarki adds, “which are truly

aligned to your specific use cases and your data. They offer much better ROI

and much better overall costs compared to large language models in general.”

And not only the models themselves but the tools needed to train them are also

available from the open-source community. “The open-source ecosystem is really

fueling generative AI, just like Linux and open source powered the cloud

revolution,” Katarki says. In addition to allowing enterprises to run

generative AI on their own hardware, RHEL AI also supports a “bring your own

subscription” for public cloud users. At launch, RHEL AI supports AWS and the

IBM cloud. “We’ll be following that with Azure and GCP in the fourth quarter,”

Katarki says. RHEL AI also has guardrails and agentic AI on its roadmap.

“Guardrails and safety are one of the value-adds of InstructLab and RHEL AI,”

he says.

Quote for the day:

"Without continual growth and

progress, such words as improvement, achievement, and success have no

meaning." -- Benjamin Franklin
