AI, Software Architecture and New Kinds of Products

Although AI won’t change the practice of software architecture, AI will make a

big change in what software architects architect. The first generation of

AI-enabled applications will be similar to what we have now: for example,

generative AI integrated into word processing and spreadsheet applications

(as Microsoft and Google are doing) or tools for AI-assisted programming (like

GitHub Copilot and others). But before long, we will be building different

kinds of software. ... Architects will also play a role in evaluating an AI’s

performance. Evals determine whether the application’s performance is

acceptable. But what does “acceptable” mean in the application’s context?

That’s an architectural question. In an autonomous vehicle, misidentifying a

pedestrian isn’t acceptable; picking a suboptimal route is tolerable. In a

recommendation engine, poor recommendations aren’t a problem as long as a

reasonable number are good. What’s “reasonable”? That’s an architectural

question. Evals also give us our first glimpses of what running the

application in production will be like. Is the performance acceptable? Is the

cost of running it acceptable?
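
One way to make "acceptable" concrete is to attach a tolerated failure rate to each kind of error and check eval results against it. The following is a minimal sketch, not a method from the article: the category names, the `Tolerance` type, and the thresholds are hypothetical and chosen only to mirror the pedestrian and routing examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tolerance:
    """Hypothetical per-category limit on the share of failed eval cases."""
    category: str
    max_failure_rate: float  # 0.0 means no failure is tolerated


def evaluate(results, tolerances):
    """Return {category: acceptable?} for a list of (category, passed) results.

    A category with no eval cases is reported as not acceptable, because
    there is no evidence either way.
    """
    totals, failures = {}, {}
    for category, passed in results:
        totals[category] = totals.get(category, 0) + 1
        if not passed:
            failures[category] = failures.get(category, 0) + 1

    verdict = {}
    for tol in tolerances:
        n = totals.get(tol.category, 0)
        if n == 0:
            verdict[tol.category] = False
            continue
        rate = failures.get(tol.category, 0) / n
        verdict[tol.category] = rate <= tol.max_failure_rate
    return verdict


if __name__ == "__main__":
    tolerances = [
        Tolerance("pedestrian_detection", 0.0),  # assumed: zero tolerance
        Tolerance("route_quality", 0.25),        # assumed: some slack allowed
    ]
    results = (
        [("pedestrian_detection", True)] * 50
        + [("route_quality", True)] * 8
        + [("route_quality", False)] * 2
    )
    print(evaluate(results, tolerances))
```

Choosing the numbers in `tolerances` is the architectural decision; the code only enforces whatever was decided.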

The AI Power Paradox

Wellise notes that AI technologies may also help data centers to manage their

energy consumption by monitoring environmental conditions and adjusting use of

resources accordingly. “In one of our Frankfurt data centers, we deployed the

use of AI to create digital twins where we model data associated with climate

parameters,” he explains. AI can also help tech companies that operate data

centers in different areas to allocate their resources according to the

availability of renewables. If it is sunny in California and solar energy is

available to a data center there, models can ramp up their training at that

location and ramp it down in cloudy Virginia, Demeo says. “Just because

they’re there doesn't mean they have to run at full capacity.” Data center

customers, too, can have an impact. They can stipulate that they will only use

a data center’s services under certain circumstances. “They will use your data

center only until a certain price. Beyond that, they will not use it,”

Chaudhuri relates. Though application of even the most moderate of these

technologies is not yet widespread, advocates claim that these experimental

setups may eventually be more widely applicable.
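
The two ideas in this excerpt, shifting training load toward sites with renewable power and customers capping the price they will pay, can be sketched in a few lines. This is an illustrative toy, not how any operator named above does it: the site names, capacities, renewable fractions, and the greedy strategy are all assumptions.

```python
def allocate_load(total_load, sites):
    """Greedily place load on the sites with the highest renewable share first.

    `sites` is a list of dicts with hypothetical keys:
    name, capacity, renewable_fraction (0.0 to 1.0).
    Returns (allocation by site name, load that could not be placed).
    """
    allocation = {site["name"]: 0.0 for site in sites}
    remaining = total_load
    ranked = sorted(sites, key=lambda s: s["renewable_fraction"], reverse=True)
    for site in ranked:
        if remaining <= 0:
            break
        share = min(remaining, site["capacity"])
        allocation[site["name"]] = share
        remaining -= share
    return allocation, remaining


def accepts_price(price, price_cap):
    """Customer-side rule: use the data center only up to a certain price."""
    return price <= price_cap


if __name__ == "__main__":
    sites = [
        {"name": "virginia", "capacity": 100, "renewable_fraction": 0.2},    # cloudy
        {"name": "california", "capacity": 60, "renewable_fraction": 0.9},   # sunny
    ]
    allocation, unplaced = allocate_load(80, sites)
    print(allocation, unplaced)
    print(accepts_price(price=0.12, price_cap=0.10))
```

A real scheduler would also have to weigh data transfer costs, checkpointing, and forecast uncertainty, none of which this sketch models.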

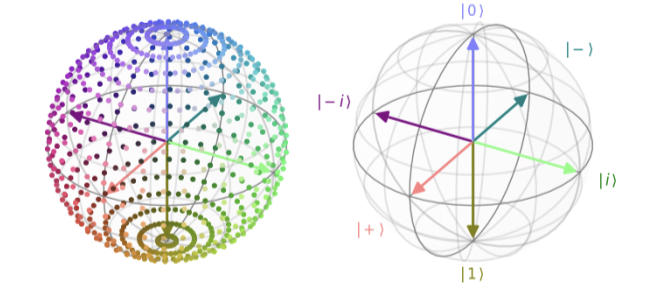

Quantinuum Unveils First Contribution Toward Responsible AI

This research has significant implications for the future of AI and quantum

computing. One of the most notable outcomes is the potential to use quantum AI

for interpretable models. In current large language models (LLMs) like GPT-4,

decisions are often made in a “black box” fashion, making it difficult for

researchers to understand how or why certain outputs are generated. In

contrast, the QDisCoCirc model allows researchers to inspect the internal

quantum states and the relationships between different words or sentences,

providing insights into the decision-making process. In practical terms, this

could have wide-reaching applications in areas such as question answering

systems, also referred to as ‘classification’ challenges, where understanding

how a machine reaches a conclusion is as important as the answer itself. By

offering an interpretable approach, quantum AI using compositional methods

could be applied in sectors such as law, medicine, and finance, where

accountability and transparency in AI systems are critical. The study also

showed that compositional generalization—the ability of the model to

generalize from smaller training sets to larger and more complex inputs—was

successful.

Differential privacy in AI: A solution creating more problems for developers?

Differential privacy protects personal data by adding random noise, making it

harder to identify individuals while preserving the dataset. The fundamental

concept revolves around a parameter, epsilon (ε), which acts as a privacy knob. A lower epsilon value results in stronger

privacy but adds more noise, which in turn reduces the usefulness of the data.

A developer at a major fintech company recently voiced frustration over

differential privacy’s effect on their fraud detection system, which needs to

detect tiny anomalies in transaction data. “When noise is added to protect

user data,” they explained, “those subtle signals disappear, making our model

far less effective.” Fraud detection thrives on spotting minute deviations,

and differential privacy easily masks these critical details. The stakes are

even higher in healthcare. For instance, AI models used for breast cancer

detection rely on fine patterns in medical images. Adding noise to protect

privacy can blur these patterns, potentially leading to misdiagnoses. This

isn’t just a technical inconvenience—it can put lives at risk.
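
The epsilon trade-off described above can be seen with the Laplace mechanism, a standard way to make a numeric query differentially private. The sketch below is an assumption-laden illustration, not the fintech or healthcare systems mentioned: it uses a simple count query with sensitivity 1, and the function names are hypothetical. Noise is drawn with scale `sensitivity / epsilon`, so a lower epsilon means larger noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(epsilon, true_count=100, trials=2000, seed=0):
    """Average distance between the noisy and true count over many releases."""
    rng = random.Random(seed)
    total = sum(
        abs(private_count(true_count, epsilon, rng) - true_count)
        for _ in range(trials)
    )
    return total / trials


if __name__ == "__main__":
    for eps in (0.1, 1.0, 10.0):
        print(f"epsilon={eps}: mean absolute error ~ {mean_abs_error(eps):.2f}")
```

The expected absolute error of this mechanism is `sensitivity / epsilon`, which is why a signal of only a few transactions can be swamped at small epsilon values.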

Thinking of building your own AI agents? Don’t do it, advisors say

Large companies may be tempted to roll their own highly customized agents, he

says, but they can get tripped up by fragmented internal data, by

underestimating the resources needed, and by lacking in-house expertise.

“While some companies may achieve success, it’s common for these projects to

spiral out of control in terms of cost and complexity,” Ackerson says. “In

many cases, buying a solution from a trusted partner can help organizations

avoid the pitfalls of builder’s remorse and accelerate their path to success.”

AlphaSense has trained its own AI agents, but many companies lack internal

expertise, he says. In addition, organizations may project the development

costs but ignore the cost of ongoing maintenance, he adds. “This is the

largest cost, as maintaining AI systems over time can be complex and

resource-intensive, requiring constant updates, monitoring, and optimization

to ensure long-term functionality,” Ackerson says. Partnering with an AI

provider can give companies access to proven, ready-made agents that have been

tested and refined by thousands of users, he contends. “It’s faster to

implement, less resource-intensive, and comes with the added benefit of

ongoing updates and support — freeing companies to focus on other critical

areas of their business,” he says.

Building an Enterprise Data Strategy: What, Why, How

After completing the assessment of your current data management efforts and

defining your objectives and priorities, you can begin to assemble the data

governance framework by defining roles, responsibilities, and procedures for

the entire data lifecycle. This includes data ownership, access controls,

security, and compliance as well as data consistency, accountability, and

integrity. The next step is to establish the processes and tools used to

manage data quality, which include data profiling, cleansing, standardization,

and validation. Determine the mechanisms for integrating data to create a

seamless and coherent data environment encompassing the entire enterprise.

Data lifecycle management covers data retention policies, archival storage,

and data purging to ensure efficient storage management. The glue that keeps

the many moving pieces of an enterprise data strategy working together

harmoniously is your company’s culture of data literacy and empowerment.

Employees and managers must be trained to recognize the value of data to the

organization, and the importance of maintaining its quality and

security.
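
The data quality steps named above (profiling, cleansing, standardization, and validation) can be sketched as small functions over records. This is a minimal illustration under stated assumptions: the `email` and `country` fields and the rules applied to them are hypothetical, and real pipelines would normally use dedicated tooling.

```python
def cleanse(record):
    """Trim whitespace from string values and turn empty strings into None."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip() or None
        cleaned[key] = value
    return cleaned


def standardize(record):
    """Apply one canonical form per field (assumed conventions)."""
    out = dict(record)
    if out.get("email"):
        out["email"] = out["email"].lower()
    if out.get("country"):
        out["country"] = out["country"].upper()
    return out


def validate(record):
    """Return the names of fields that break the (assumed) rules."""
    errors = []
    email = record.get("email")
    if not email or "@" not in email:
        errors.append("email")
    country = record.get("country")
    if not country or len(country) != 2 or not country.isalpha():
        errors.append("country")
    return errors


def profile(records, field):
    """Summarize one field: row count, missing values, distinct values."""
    values = [r.get(field) for r in records]
    present = [v for v in values if v is not None]
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
    }


if __name__ == "__main__":
    raw = [
        {"email": "  Ann@Example.COM ", "country": "us"},
        {"email": "", "country": "Germany"},
    ]
    records = [standardize(cleanse(r)) for r in raw]
    print(profile(records, "email"))
    for r in records:
        print(r, validate(r))
```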

Beware the Great AI Bubble Popping

This does not mean that the technology will never make money. Early stages of

evolution in any tech usually involve trying products in the market by making

them as accessible as possible and monetizing the solutions when there's

clarity on use cases, sizable adoption, dependency and demand. Generative AI

will take a while longer to get there. The Great Popping will also lead to the

ecosystem thinning. Startups with speculative or unsustainable business models

will shutter shop as funding decreases. The most likely future scenario is

that the AI landscape will shift to make room for a small number of long-term

players that focus on practical applications, while the rest go bust. Despite

sharing similarities with the dot-com bubble, the residue of the AI one will

likely differ in that entire companies, especially the OpenAIs and the

Anthropics, probably won't shut down completely. They may close down

money-guzzling units, rejigger focus or even pivot entirely, but they are

unlikely to vanish off the face of the earth as their dot-com counterparts

did. Job losses are likely, and few firms will hire the

laid-off employees.

Why Jensen Huang and Marc Benioff see ‘gigantic’ opportunity for agentic AI

In the future, Huang noted, there will be AI agents that understand subtleties

and that can reason and collaborate. They’ll be able to find other agents to

“work together, assemble together,” while also talking to humans and

soliciting feedback to improve their dialogue and outputs. Some will be

“excellent” at particular skills, while others will be more general purpose,

he noted. “We’ll have agents working with agents, agents working with us,”

said Huang. “We’re going to supercharge the ever-loving daylights of our

company. We’re going to come to work and a bunch of work we didn’t even

realize needed to be done will be done.” Adoption needs to be demystified, he

and Benioff agreed, with Huang noting that “it’s going to be a lot more like

onboarding employees.” Benioff, for his part, underscored the importance of

people being able to “actually understand” how agents work and what their

purpose is, adding that they “need to get their hands in the soil.” ... Huang pointed out that the

challenges we have in front of us are “many.” Some of these include

fine-tuning and guardrailing, but scientists are making advancements in these

areas every day.

Navigating a Security Incident – Best Practices for Engaging Service Providers

Organizations experiencing a security incident must grapple with numerous

competing issues simultaneously, usually under a very tight timeframe and the

pressure of significant business disruption. Engaging qualified service

providers is often critical to successfully resolving and minimizing the

fall-out of the incident. These providers include forensic firms, public

relations firms, restoration experts, and notification and call center

vendors. Due to the nature of these services, they can have access to or even

generate additional personal and sensitive information relevant to the

incident. Protecting this information from third-party or unauthorized

disclosures during litigation, discovery, or otherwise, via the application of

attorney-client privilege and the work product doctrine, is essential. While

there is no bright-line, uniform rule about how and under what circumstances

these privileges attach to forensic reports and other information prepared by

incident response providers, recent case law offers guidance as to how

organizations can maximize the prospect that their assessments will remain

shielded by the work product doctrine and/or the attorney-client privilege.

AWS claims customers are packing bags and heading back on-prem

You read that correctly – customers are finding that moving their IT back

on-premises is so attractive compared with remaining on AWS that they are

prepared to do this despite the significant effort involved. Hardly a

resounding endorsement of the benefits of the cloud. AWS also says that

customers may switch back to on-premises for a number of reasons, including

"to reallocate their own internal finances, adjust their access to technology

and increase the ownership of their resources, data and security." In fact,

there have been a growing number of cases of companies moving some or even all

their workloads back from the cloud – so-called cloud repatriation – and cost

often seems to be a factor. ... Andrew Buss, IDC senior research director for

EMEA, told The Register that while cloud repatriation is becoming more common,

"we'd put the share of companies actively repatriating public cloud workloads

in the single digit percentage sphere." Organizations are more likely to move

to another public cloud provider if the incumbent is not meeting their needs,

he said, and they have got more used to the cost economics of public cloud and

can compare it to the long-term costs of running private IT infrastructure.

Quote for the day:

"Without initiative, leaders are

simply workers in leadership positions." -- Bo Bennett
