Why AI Won’t Take Over The World Anytime Soon

The majority of AI systems we encounter daily are examples of "narrow AI." These

systems are masters of specialization, adept at tasks such as recommending your

next movie on Netflix, optimizing your route to avoid traffic jams or even more

complex feats like writing essays or generating images. Despite these

capabilities, they operate under strict limitations, designed to excel in a

particular arena but incapable of stepping beyond those boundaries. Even the

generative AI tools that are dazzling us with their ability to create content

across multiple modalities. They can draft essays, recognize elements in

photographs, and even compose music. However, at their core, these advanced AIs

are still just making mathematical predictions based on vast datasets; they do

not truly "understand" the content they generate or the world around them.

Narrow AI operates within a predefined framework of variables and outcomes. It

cannot think for itself, learn beyond what it has been programmed to do, or

develop any form of intention. Thus, despite the seeming intelligence of these

systems, their capabilities remain tightly confined.

Establishing a security baseline for open source projects

Transparency is in the spirit of open-source, and enhancing it within the

community is a key goal of our organization. Currently, every OpenSSF project is

required to have a security policy that provides clear directions on how

vulnerabilities should be reported and how they will be responded to. The

security baseline also requires that. The OpenSSF Best Practice Badge program

and Scorecard report if a project has a vulnerability disclosure policy. The

badge program passing level has been used by other Linux Foundation open-source

projects as a criteria to become generally available. Open-source communities

have been pushing the boundaries on SBOM to increase transparency in both

open-source and closed source software. However, there have been challenges with

SBOM consumption due to data quality and interoperability issues. Recently,

OpenSSF, along with CISA and DHS S&T, took steps to address this challenge

by releasing Protobom, an open-source software supply chain tool that enables

all organizations, including system administrators and software development

communities, to read and generate SBOMs and file data, as well as translate this

data across standard industry SBOM formats.

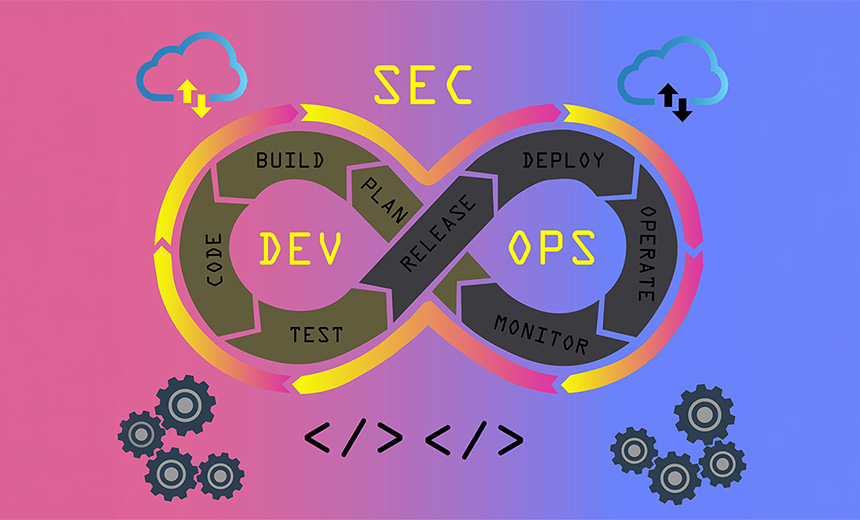

Overcoming Resistance to DevOps Adoption

A main challenge in transitioning from traditional software development

approaches is establishing a DevOps culture. For years, development teams have

worked in siloes, leading to bureaucracy and departmental barriers that hindered

agility and collaboration. These teams are required to learn new tools and

processes as part of adopting agile development methodologies, creating a

cultural shift and resistance to change. Most practitioners have cited cultural

change as a barrier to DevOps adoption. Soumik Mukherjee, senior manager,

platform engineering (global), Ascendion, said he confronted these challenges by

starting small with manageable projects, celebrating early wins to build

momentum, and fostering open communication and collaboration across teams. "We

invest in upskilling our employees and continuously track progress to identify

and address any bottlenecks. By breaking down silos and building a shared

understanding, we create a collaborative environment where teams work together

efficiently and effectively," Mukherjee said. Debashis Singh, CIO at Persistent

Systems, said "Fostering a DevOps and DevSecOps culture and establishing a clear

vision is akin to setting the North Star for everyone in the organization.

Charting India’s AI trajectory: Insights from World Economic Forum

The overarching theme for this year’s WEF focused on “Rebuilding Trust”, though

the topic extends beyond fighting corruption in public institutions. Trust is

critical to AI. Without trust in AI and its outputs, our goal of transforming

economies with AI will be hard to achieve. The foundation of this trust starts

with high-fidelity, trusted, and secure input data. We must center security and

compliance when developing AI applications to combat these concerns. Adoption

will naturally accelerate when leaders can trust that AI applications are secure

and compliant. No company can risk missing out on the productivity gains that AI

offers. As Sam Altman said at Davos, “[GenAI] will change the world much less

than we all think and it will change jobs much less than we all think. We will

all operate at a… higher level of abstraction… [and] have access to a lot more

capability.” India’s AI journey and progress took the spotlight at WEF and was

center stage at various bustling technology discussions. The country’s

unwavering commitment to driving innovation and fostering growth is evident in

the many success stories and examples shared at the forum.

Don’t overlook the impact of AI on data management

While many organizations already understand the power of having clean data and

clean ways of inputting that data, many fail to grasp that tools ready to help

them with this process already exist and are already doing wonders for peers in

your industry. One emerging tool for inputting data, that may surprise, is

generative AI chatbots. With the advent of gen AI, a new breed of chatbots has

emerged — ones that can conduct high-level conversations, resembling human

interactions more closely than ever before. Not only can they understand

customer queries, but they can input and collect data directly with business

systems, efficiently handling forms and personalizing client profiles.

Integrating such AI-driven chatbots isn’t just about cutting costs — it’s about

revolutionizing customer engagement and driving new insights from every

interaction. If the first step is automating data capture, chatbots can directly

collect and process data from customers without human intervention. The chatbots

can not only collect the data but they can also use it for cross

selling.

Linux backdoor threat is a wake-up call for IoT

This hack should serve as a wake-up call that not every device warrants Linux.

Basic devices like sensors or monitors – and, yes, even doorbells – usually

serve one function at a time. They can therefore benefit from the resource

efficiency and focused functionality of RTOS. In Linux and other general-purpose

operating systems, programs are loaded dynamically after boot, often with the

ability to run in separate memory and file spaces under different user accounts.

This isolation is beneficial when running multiple applications concurrently on

a shared server, as one user’s programs cannot interfere with another’s, and

hardware access is shared equally through the operating system. In contrast,

RTOS operates by compiling applications and tasks directly into the system with

minimal separation between memory spaces and hardware. Since the primary goal of

an IoT device is typically to serve a single application, possibly divided into

multiple tasks, this lack of separation is not an issue. Additionally, because

the application is compiled into the RTOS, it is ready to run after a very short

boot and initialization process.

AI At The Edge Is Different From AI In The Data Center

In manufacturing, locally run AI models can rapidly interpret data from sensors

and cameras to perform vital tasks. For example, automakers scan their assembly

lines using computer vision to identify potential defects in a vehicle before it

leaves the plant. In a use case like this, very low latency and always-on

requirements make data movement throughout an extensive network impractical.

Even small amounts of lag can impede quality assurance processes. On the other

hand, low-power devices are ill-equipped to handle beefy AI workloads, such as

training the models that computer vision systems rely on. Therefore, a holistic,

edge-to-cloud approach combines the best of both worlds. Backend cloud instances

provide the scalability and processing power for complex AI workloads, and

front-end devices put data and analysis physically close together to minimize

latency. For these reasons, cloud solutions, including those from Amazon,

Google, and Microsoft, play a vital role. Flexible and performant instances with

purpose-built CPUs, like the Intel Xeon processor family with built-in AI

acceleration features, can tackle the heavy lifting for tasks like model

creation.

Ask a Data Ethicist: What Happens When Language Becomes Data?

Natural language processing involves turning language into formats a machine

can understand (numbers), before turning it back into our desired human output

(text, code, etc). One of the first steps in the process of “datafying”

language is to break it down into tokens. Tokens are typically a single word,

at least in English – more on that in a minute. ... Tokens are important

because they not only drive performance of the model they also drive training

costs. AI companies charge developers by the token. English tends to be the

most token-efficient language, making it economically advantageous to train on

English language “data” versus, say, Burmese. This blog post by data scientist

Yennie Jun goes into further details about how the process works in a very

accessible way, and this tool she built allows you to select different

languages along with different tokenizers to see exactly how many tokens are

needed for each of the languages selected. NLP training techniques used in

LLMs privilege the English language when it turns it into data for training,

and penalize other languages, particularly low-resource languages.

AI-powered XDR: The Answer to Network Outages and Security Threats

To overcome the limitations of standard XDR, organizations can choose XDR

capabilities integrated within a SASE architecture. SASE consolidates all

networking and security functions into a cohesive whole with

single-pane-of-glass visibility. SASE-based, next-gen XDR can leverage SASE’s

telemetry to inform an organization’s incident detection and response

workflows. By leveraging native sensors, like NGFW, advanced threat

prevention, SWG, and ZTNA (zero trust network architecture), that feed data

into a unified data lake, SASE eliminates the need for data integration and

normalization. It allows XDR to analyze raw data, which eliminates

inaccuracies and gaps. ... AI and machine learning play a pivotal role in XDR

capabilities. Advanced algorithms trained on vast amounts of data enable more

accurate incident detection and correlation. However, only comprehensive,

consistent, and high-quality data and events can train AI/ML algorithms to

create quality XDR incidents and perform root-cause analysis. SASE converges

petabytes of data from various native sensors into a single data lake for

training advanced AI/ML models.

Is an AI Bubble Inevitable?

Forward-looking enterprise AI adopters are already hedging their bets by

ensuring they have interpretable AI and traditional analytics on hand while

they explore newer AI technologies with appropriate caution, Zoldi says. He

notes that many financial services organizations have already pulled back from

using GenAI, both internally and for customer-facing applications. "The fact

that ChatGPT, for example, doesn't give the same answer twice is a big

roadblock for banks, which operate on the principle of consistency." ... In

the event of a market drawback, AI customers may revert to less sophisticated

approaches instead of reevaluating their AI strategies, Amorim warns. "This

could result in a setback for businesses that have invested heavily in AI,

since they may be less inclined to explore its full potential or adapt to

changing market dynamics." Just as the dot-com failure didn't permanently

destroy the web, an AI industry collapse won't mark the end of AI. Zoldi

believes there will eventually be a return to normal. "Companies that had a

mature, responsible AI practice will come back to investing in continuing that

journey," he notes.

Quote for the day:

"Without continual growth and

progress, such words as improvement, achievement, and success have no

meaning." -- Benjamin Franklin

No comments:

Post a Comment