4 reasons firewalls and VPNs are exposing organizations to breaches

Unfortunately, the attack surface problems of perimeter-based architectures go well beyond the above, and that is because of firewalls and VPNs. These tools are the means by which castle-and-moat security models are supposed to defend hub-and-spoke networks but using them has unintended consequences. Firewalls and VPNs have public IP addresses that can be found on the public internet. This is by design so that legitimate, authorized users can access the network via the web, interact with the connected resources therein and do their jobs. However, these public IP addresses can also be found by malicious actors who are searching for targets that they can attack in order to gain access to the network. In other words, firewalls and VPNs give cybercriminals more attack vectors by expanding the organization’s attack surface. Ironically, this means that the standard strategy of deploying additional firewalls and VPNs to scale and improve security actually exacerbates the attack surface problem further. Once cybercriminals have successfully identified an attractive target, they unleash their cyberattacks in an attempt to penetrate the organization’s defenses.

CI/CD Observability: A Rich New Opportunity for OpenTelemetry

We deploy things, we see things catch on fire and then we try to mitigate the fire. But if we only observe the latest stages of the development and deployment cycle, it’s too late. We don’t know what happened in the build phase or the test phase, so root cause analysis is difficult, mean time to recovery increases and optimization opportunities are missed. We know our CI pipelines take a long time to run, but we don’t know what to improve if we want to make them faster. If we shift our observability focus to the left, we can address issues before they escalate, enhance efficiency by cutting problems in the process, increase the robustness and integrity of our tests, and minimize costs and expenses related to post-deployment and downtime. ... This is a really exciting time for the observability community. By getting data out of our CIs and integrating it with observability systems, we can trace back to the logs in builds and see important information from our CI, such as the first time something failed. From there, we can find out what’s producing errors and pinpoint the exact time of their origin.
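As a rough illustration of what CI data makes possible, the sketch below treats each pipeline stage as a span-like record and answers the two questions raised above: which stage is slowest, and where the first failure occurred. It uses only the Python standard library and is not the OpenTelemetry API; the `StageSpan` type, the stage names and the timings are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StageSpan:
    """Span-like record for one CI stage (hypothetical shape, not an OpenTelemetry type)."""
    name: str
    start_s: float  # seconds since the pipeline started
    end_s: float
    ok: bool

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def slowest_stage(spans: List[StageSpan]) -> StageSpan:
    """Return the stage with the longest duration: the first candidate for optimization."""
    return max(spans, key=lambda s: s.duration_s)


def first_failure(spans: List[StageSpan]) -> Optional[StageSpan]:
    """Return the earliest failed stage, or None if every stage passed."""
    failed = [s for s in spans if not s.ok]
    return min(failed, key=lambda s: s.start_s) if failed else None


# Made-up timings for a single pipeline run.
pipeline = [
    StageSpan("checkout", 0.0, 12.0, True),
    StageSpan("build", 12.0, 310.0, True),
    StageSpan("unit-tests", 310.0, 395.0, False),
    StageSpan("deploy", 395.0, 400.0, False),
]

print("slowest:", slowest_stage(pipeline).name)
print("first failure:", first_failure(pipeline).name)
```

In a real setup these records would presumably come from the CI system and be exported to an observability backend rather than built by hand.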

Cyber breach misinformation creates a haze of uncertainty

Fueling the rise of data breach misinformation is the speed at which fake data breach reports are spread online. In a recent blog post, Hunt wrote: “There are a couple of Twitter accounts in particular that are taking incidents that appear across a combination of a popular clear web hacking forum and various dark web ransomware websites and ‘raising them to the surface,’ so to speak. Incidents that may have previously remained on the fringe are being regularly positioned in the spotlight where they have much greater visibility.” “It’s getting very difficult at the moment because not only are there more breaches than ever, but there’s just more stuff online than ever,” Hunt says. ... “We need to get everything out from in the shadows,” Callow says. “Far too much happens in the shadows. The more light can be shone on it, the better. That would be great in multiple ways. It’s not just a matter of removing some of the leverage threat actors have. It’s also giving the cybersecurity community and the government access to better data. Far too much goes unreported.”

Making cybersecurity more appealing to women, closing the skills gap

From my perspective, having consistently sought industry expertise for guidance and mentorship in cyber security, there is currently both a good news and a bad news story. There are some strong examples out there, like Women in Cybersecurity, but I think women can be reluctant to join them because they don’t want to be different to their male counterparts and want to be part of an inclusive operating structure such as Tech Channel Ambassadors, recently established to address this significant gap in the sector. Personal mentorship can drive really positive change, and it’s certainly had a strong influence on my career. There’s still a shortfall in organised mentor programmes with businesses, but I see a lot of talented people identifying that gap and reaching out to support those starting out in their careers more proactively, which is fantastic. It’s important to realise that mentors don’t need to be within the same company or even the same industry. I’m currently mentoring one person within Sapphire and six others outside the company. Meanwhile, I’ve had four incredible mentors myself, and one of them is a CEO in the fashion industry.

The power of persuasion: Google DeepMind researchers explore why gen AI can be so manipulative

Persuasion can be rational or manipulative, the difference being the underlying intent. The end game for both is delivering information in a way that will likely shape, reinforce or change a person’s behaviors, beliefs or preferences. But while rational gen AI delivers relevant facts, sound reasons or other trustworthy evidence with its outputs, manipulative gen AI exploits cognitive biases, heuristics and other misrepresenting information to subvert free thinking or decision-making, according to the DeepMind researchers. ... AI can build trust and rapport when models are polite, sycophantic and agreeable, praise and flatter users, engage in mimicry and mirroring, express shared interests or relational statements, or adjust responses to align with users’ perspectives. Outputs that seem empathetic can fool people into thinking AI is more human or social than it really is. This can make interactions less task-based and more relationship-based, the researchers point out. “AI systems are incapable of having mental states, emotions or bonds with humans or other entities,” they emphasize.

Federal Privacy Bill Aims To Consolidate US Privacy Law Patchwork

Although it is not yet law, many observers are optimistic that the APRA will move forward due to its bipartisan support and the compromises it reaches on the issues of preemption and private rights of action, which have stalled prior federal privacy bills. The APRA contains familiar themes that largely mirror comprehensive state privacy laws, including the rights it provides to individuals and the duties it imposes on Covered Entities. This article discusses key departures from state privacy laws and new concepts introduced by the APRA. ... The APRA follows most state privacy laws with a broad definition of Covered Data, including any information that “identifies or is linked or reasonably linkable, alone or in combination with other information, to an individual or a device that identifies or is linked or reasonably linkable to one or more individuals.” The APRA would exclude employee information, de-identified data and publicly available information. Only the California Consumer Privacy Act (CCPA) currently includes employee information in its scope of covered data.

How cloud cost visibility impacts business and employment

What rings true is that engineers are on the frontline in controlling cloud costs. Indeed, they drive most overspending (or underspending) on cloud services and the associated fees. However, they are the most often overlooked asset when it comes to dealing with these costs. The main issue is that the cloud cost management team, including those responsible for managing finops programs, often lacks an understanding of engineers’ work and how it can affect costs. They assume that everything engineers do is necessary and unavoidable without considering there could be potential cost inefficiencies. When I work with a company to optimize their cloud costs, I often ask how they collaborate with their cloud engineers. I’m mostly met with questioning stares. This indicates that they don’t interact with the engineers in ways that would help optimize cloud costs. Companies look at their cloud engineers as if they were pilots of passenger aircraft: well-trained, following all best practices and procedures, and already optimizing everything. Unfortunately, this is not always true.

The Impact of Generative AI on Data Science

Organizations will continue to need more data scientists to fill a void in the specialized knowledge, as described above. To do so, enterprises may think to turn to the businessperson: say, the sales professional who automates quarterly financial reports. Berthold described this worker as one who can build a Microsoft Excel workflow and reliably do the data aggregation, avoiding errors. That employee can use an AI chat assistant to “upskill their Data Literacy,” says Berthold. They can come to the LLM with their “own perspective and vocabulary,” and learn. For example, they can get help on how to use Excel’s Visual Basic (VB) code to look up data and see what functions will be available from there. ... So, AI and Data Governance need to put controls and safeguards in place. Berthold sees that a commercial platform, like his own company, can come in handy in supporting these requirements. He said, “Organizations need governance programs or IT departments to only allow access to the AI tools their workers use. Companies need to ensure they know what happens to the data before it gets sent to another person or system.”

NIST 2.0 as a Framework for All

Importantly, it’s not prescriptive in nature but focuses purely on what desirable outcomes are. This flexible approach, focused on high-level outcomes instead of prescriptive solutions, sends a clear message to security practitioners globally: tailor the policies, controls and procedures to the specific outcomes that are relevant for your organisational context. The new version retains the five core functions of the original, i.e. identify, protect, detect, respond and recover. ‘Identify’ catalogues the assets of the organisation and related cybersecurity risks, and ‘Protect’ covers the safeguards and measures that can be taken to protect those assets. ‘Detect’ focuses on the means the organisation has to find and analyse anomalies, indicators of compromise and events, and ‘Respond’ covers the process that then happens when a threat is detected. Finally, ‘Recover’ looks at the capability of the organisation to restore and resume normal operations. However, under NIST 2.0 there is now a sixth function: ‘Govern’. ‘Govern’ is an overarching function that encompasses the other five and ensures that the cybersecurity risk management strategy, expectations and policy are established, communicated, and monitored.
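One way to picture the outcome-focused approach is to tag an organisation’s existing controls with the function each one supports and then list the functions that nothing covers yet. The sketch below is purely illustrative: the six function names come from the text above, while the control names and the `uncovered_functions` helper are hypothetical and not part of the framework.

```python
from typing import Dict, List

# The six functions named in the text, with 'Govern' listed first as the overarching one.
CSF_FUNCTIONS = ("govern", "identify", "protect", "detect", "respond", "recover")


def uncovered_functions(controls: Dict[str, str]) -> List[str]:
    """Return the functions that no control in the mapping is tagged with."""
    covered = set(controls.values())
    return [f for f in CSF_FUNCTIONS if f not in covered]


# Hypothetical controls for an example organisation, each tagged with one function.
controls = {
    "asset inventory": "identify",
    "multi-factor authentication": "protect",
    "log anomaly alerts": "detect",
    "incident runbook": "respond",
}

print(uncovered_functions(controls))
```

For this made-up mapping the gaps are the governance and recovery functions, which is the kind of context-specific finding the framework leaves each organisation to act on.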

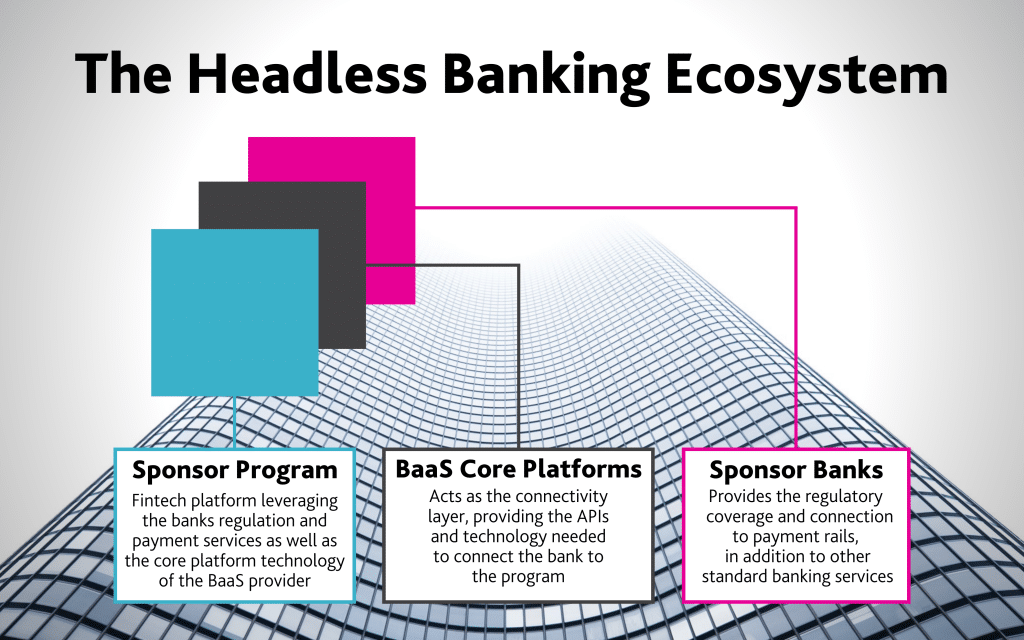

Is Headless Banking the Next Evolution of BaaS?

Not every financial institution can just flip a switch and immediately offer headless banking services. Headless banking relies on robust APIs to allow partners to access back-end data structures and business logic, says Ryan Christiansen, executive director of Stena Center for Financial Technology at the University of Utah. “Take the Column example,” Christiansen says. “This is a very innovative bank in that its entire tech stack is built around just having these APIs. Most banks have not built their technology on this modern tech stack, which is APIs.” ... Regulators have been turning up the heat on sponsored banks and BaaS firms as a whole, which also slows down, or impedes altogether, potential entrants, Stena Center’s Christiansen says. “It’s a full Category 5 storm in the regulatory space,” he says. “There’s consent orders being handed out to these sponsor banks on a very routine basis right now. Regulators have decided that there’s not enough oversight of their fintech clients.” Sponsored banks, for example, are still required to abide by “very strict” KYC guidelines when new accounts are opened, even if a fintech partner is the one handling the account opening, Christiansen says.

Quote for the day:

"Brilliant strategy is the best route to desirable ends with available means." -- Max McKeown
