Transforming transformation

Transformation has been a way of extracting value rather than re-invention.

Financial services companies are particularly guilty of this. For example, in

banking, digital has been a way of reducing costs by moving the “business of

banking” into the hands of the end customer – hence why we all do things

ourselves that the bank used to do for us. This focus on cost reduction has

meant that processes have been optimised for the digital age at the expense of

true innovation. The days of extracting value are almost over for the

financial services industry. There are not many places left to reduce costs.

So, they must become value creators, which means taking a leaf out of the

digital giants’ book and finding ways of identifying and solving problems. ...

But, according to Paul Staples, who was, until recently, head of embedded

banking for HSBC, success will not be determined by technology but by the

proposition, approach, and processes that the banks wrap around it. Pain

points and value must be identified up front, forming the basis of what gets

delivered.

Five Megatrends Impacting Banking Forever

The first megatrend impacting banking is the democratization of data and

insights. More than ever, data is being collected everywhere, and it is

the lifeblood of any financial institution. The democratization of data

and insights refers to the process of making data and insights accessible

to a wider audience, including both employees and customers. ... The

explosion of hyper-personalization is driven by the use of significantly

larger amounts of data, such as browsing and purchase history, interests

and preferences, demographics and even survey information. With advanced

technologies that include facial recognition, augmented reality and

conversational AI, it is now possible to also offer customers highly

personalized experiences that cater to their unique delivery preferences –

in near real-time. ... Traditionally, banks and credit unions have viewed

their relationship with consumers as a series of transactions. However, in

recent years, there has been an increasing focus on providing a seamless

and integrated engagement opportunity that can result in a more stable and

long-term relationship.

Understanding the Role of DLT in Healthcare

Finding actual healthcare circumstances where DLT could be useful and

relevant is crucial. Instead of implementing a solution without first

identifying a problem to solve, organizations must take into account

any current requirements or challenges that the technology may help

address. Organizations employing this technology must be aware of and

receptive to the new organizational paradigms that go along with these

solutions. Recognizing the paradigm shift to decentralized, distributed

solutions is essential to evaluating this technology. ... In shared

ledgers, the validity and consistency of which are maintained by nodes

using a variety of processes, including consensus mechanisms, protecting

the secrecy of data entails ensuring that only authorized access is granted

to data. Institutions are employing a multi-layered strategy for

blockchain in healthcare, using private blockchains where all of the

linked healthcare organizations are well-known and trusted.

Control the Future of Data with AI and Information Governance

“The average company manages hundreds of terabytes of data. For that data

to prove an asset rather than a liability, it must be located, classified,

cleansed, and monitored. With so much data entering the organization so

quickly from so many disparate sources, conducting those data tasks

manually is not feasible.” “For organizations to make accurate data-driven

decisions, decision makers need clean, reliable data. By the same token,

AI-powered analysis will only prove useful if based on complete and

accurate data sets. That requires visibility into all relevant data. And

it requires exhaustive checks for errors, duplicates, and outdated

information.” “An important aspect of information governance includes data

security. Privacy regulations, for example, require that organizations

take all reasonable measures to keep confidential data safe from

unauthorized access. This includes ensuring against inappropriate sharing

and applying encryption to sensitive information.”
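
The checks described above (errors, duplicates, outdated information) are the kind of task that is usually automated. A minimal sketch follows, assuming records are plain dictionaries with an `updated` date field; the function name `audit_records` and every field name are hypothetical, chosen only for illustration.

```python
from datetime import date, timedelta


def audit_records(records, key_fields, required_fields, max_age_days, today):
    """Return the indices of duplicate, incomplete and outdated records.

    Assumptions (not from the article): each record is a dict, duplicates are
    detected on a normalised key, and "outdated" means the hypothetical
    `updated` field is older than `max_age_days`.
    """
    seen_keys = set()
    report = {"duplicates": [], "incomplete": [], "outdated": []}
    cutoff = today - timedelta(days=max_age_days)

    for index, record in enumerate(records):
        # Normalise the key so "A@Example.com " and "a@example.com" collide.
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        if key in seen_keys:
            report["duplicates"].append(index)
        else:
            seen_keys.add(key)

        # A record is incomplete if any required field is missing or empty.
        if any(record.get(f) in (None, "") for f in required_fields):
            report["incomplete"].append(index)

        # A record is outdated if it was last updated before the cutoff.
        updated = record.get("updated")
        if updated is not None and updated < cutoff:
            report["outdated"].append(index)

    return report
```

A real governance pipeline would add classification and access controls on top of this; the sketch only shows why such checks scale better as code than as manual review.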

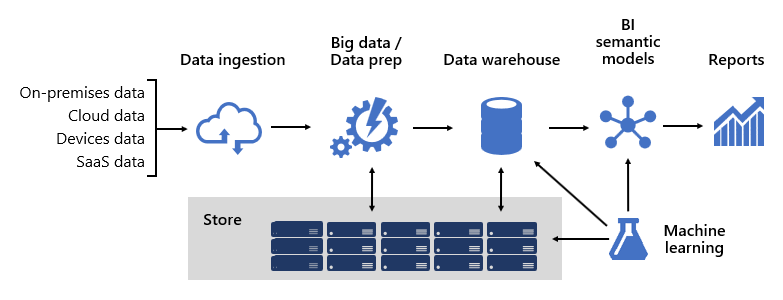

BI solution architecture in the Center of Excellence

Designing a robust BI platform is somewhat like building a bridge; a

bridge that connects transformed and enriched source data to data

consumers. The design of such a complex structure requires an engineering

mindset, though it can be one of the most creative and rewarding IT

architectures you could design. In a large organization, a BI solution

architecture can consist of: Data sources; Data ingestion; Big data /

data preparation; Data warehouse; BI semantic models;

and Reports. At Microsoft, from the outset we adopted a systems-like

approach by investing in framework development. Technical and business

process frameworks increase the reuse of design and logic and provide a

consistent outcome. They also offer flexibility in architecture leveraging

many technologies, and they streamline and reduce engineering overhead via

repeatable processes. We learned that well-designed frameworks increase

visibility into data lineage, impact analysis, business logic maintenance,

managing taxonomy, and streamlining governance.

When finops costs you more in the end

Don’t overspend on finops governance. Finops governance controls who can

allocate what resources and for what

purposes. In many instances, the cost of the finops governance tools

exceeds any savings from nagging cloud users into using fewer cloud

services. You saved 10%, but the governance systems, including human time,

cost way more than that. Also, your users are more annoyed as they are

denied access to services they feel they need, so you have a morale hit as

well. Be careful with reserved instances. Another thing to watch out for

is mismanaging reserved instances. Reserved instances are a way to save

money by committing to using a certain number of resources for a set

period. But if you’re not optimizing your use of them, you may end up

spending more than you need to. Again, the cure is worse than the disease.

You’ve decided that using reserved instances, say purchasing cloud storage

services ahead of time at a discount, will save you 20% each year.

However, you have little control over demand, and if you end up underusing

the reserved instances, you still must pay for resources that you didn’t

need.
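
The reserved-instance trade-off comes down to simple arithmetic. Here is a minimal sketch, assuming the commitment is billed in full whether or not it is used and that usage never exceeds the commitment; the function names and the unit price are illustrative, not taken from any cloud provider's billing model.

```python
def reserved_cost(committed_units: float, unit_price: float, discount: float) -> float:
    """Cost of a reservation: the whole commitment is billed, used or not."""
    return committed_units * unit_price * (1.0 - discount)


def on_demand_cost(used_units: float, unit_price: float) -> float:
    """Cost of paying list price only for what was actually consumed."""
    return used_units * unit_price


def reservation_savings(committed_units: float, used_units: float,
                        unit_price: float, discount: float) -> float:
    """Positive if the reservation was cheaper, negative if on-demand was.

    Assumes used_units <= committed_units (no overflow onto on-demand).
    """
    return (on_demand_cost(used_units, unit_price)
            - reserved_cost(committed_units, unit_price, discount))


def break_even_utilization(discount: float) -> float:
    """Fraction of the commitment that must be used for the discount to pay off."""
    return 1.0 - discount
```

Under these assumptions a 20% discount breaks even at 80% utilization: committing to 100 units and using only 70 costs more than paying on demand for those 70.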

Core Wars Shows the Battle WebAssembly Needs to Win

So the basics are that you have two or more competing programs, running in

a virtual space and trying to corrupt each other with code. In summary: The

assembler-like language is called Redcode. Redcode is run by a program

called MARS. The competitor programs are called “warriors” and are

written in Redcode, managed by MARS. The basic unit is not a byte,

but an instruction line. MARS executes one instruction at a time,

alternately for each “warrior” program. The core (the memory of the

simulated computer), or perhaps “battlefield”, is a continuous wrapping

loop of instruction lines, initially empty except for the competing

programs, which are set apart. Code is run and data stored directly on

these lines. Each Redcode instruction contains three parts: the operation

itself (OpCode), the source address and the destination address. ... While

in modern chips, code moves through parallel threads in mysterious ways,

the Core War setup is still pretty much the basics of how a computer

works. However code is written, we know it ends up as a set of machine

code instructions.
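
The setup described above can be sketched as a toy simulator. This is a heavily simplified illustration, not real Redcode or a standards-compliant MARS: it assumes only three operations (`MOV`, `JMP`, `DAT`), relative addressing only, one process per warrior, and that empty core cells behave like `DAT`, which kills whoever executes it. The names `Instr` and `run` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    op: str = "DAT"  # operation (OpCode); DAT is used here for "empty" cells
    a: int = 0       # source address, relative to this instruction
    b: int = 0       # destination address, relative to this instruction


def run(core_size, warriors, max_cycles=1000):
    """Run warriors in turn on a wrapping core.

    `warriors` is a list of (start_position, [Instr, ...]) pairs.
    Returns the index of the last surviving warrior, or None for a draw.
    """
    core = [Instr() for _ in range(core_size)]
    counters = []  # one program counter per warrior
    for start, program in warriors:
        for offset, ins in enumerate(program):
            core[(start + offset) % core_size] = Instr(ins.op, ins.a, ins.b)
        counters.append(start % core_size)

    alive = [True] * len(warriors)
    for _ in range(max_cycles):
        # One instruction per warrior, alternating between them.
        for w in range(len(warriors)):
            if not alive[w]:
                continue
            pc = counters[w]
            ins = core[pc]
            src = (pc + ins.a) % core_size  # addresses wrap around the core
            dst = (pc + ins.b) % core_size
            if ins.op == "MOV":
                # Copy a whole instruction line from source to destination.
                core[dst] = Instr(core[src].op, core[src].a, core[src].b)
                counters[w] = (pc + 1) % core_size
            elif ins.op == "JMP":
                counters[w] = src
            else:
                # DAT (or anything unknown) kills the executing warrior.
                alive[w] = False
            if sum(alive) == 1:
                return alive.index(True)
    return None
```

For example, a warrior made of `MOV 2, 4`, `JMP -1, 0` and `DAT` placed at position 0 copies its `DAT` line onto a one-line `JMP 0` warrior sitting at position 4, which then dies on its next turn. A lone `MOV 0, 1`, the classic "Imp", just copies itself forward around the core and in this toy model only ever forces a draw.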

Data Fear Looms As India Embraces ChatGPT

Considering the vast amounts of data that OpenAI has amassed without

permission—enough that there is a chance that ChatGPT will be trained on

blog posts, product reviews, articles and more—its privacy policy raises

legitimate concerns. The IP address of visitors, their browser’s type and

settings, and the information about how visitors interact with the

websites—such as the kind of content they engage with, the features they

use, and the actions they take—are all collected by OpenAI in accordance

with its privacy policy. Additionally, it compiles information on the

user’s website and time-based browsing patterns. OpenAI also states that

it may share users’ personal information with unspecified third parties

without informing them to meet its business objectives. The lack of clear

definitions for terms such as ‘business operation needs’ and ‘certain

services and functions’ in the company’s policies creates ambiguity

regarding the extent and reasoning for data sharing. To add to the

concerns, OpenAI’s privacy policy also states that the user’s personal

information may be used for internal or third-party research and could

potentially be published or made publicly available.

Booking.com's OAuth Implementation Allows Full Account Takeover

While researchers only divulged how they used OAuth to compromise

Booking.com in the report, they discovered other sites with risk from

improperly applying the authentication protocol, Balmas tells Dark

Reading. "We have observed several other instances of OAuth flaws on

popular websites and Web services," he says. "The implications of each

issue vary and depends on the bug itself. In our cases, we are talking

about full account takeovers across them all. And there are surely many

more that are yet to be discovered." OAuth gives site owners an easy way to

bypass the user login process, reducing friction around what is

a "long and frustrating" problem, Balmas says. However, though it seems

simple, implementing the technology securely is technically

very complicated, and a single

small wrong move can have a huge security impact, he says. "To put it in

other words — it is very easy to put a working social login functionality

on a website, but it is very hard to do it correctly," Balmas tells Dark

Reading.
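
The excerpt does not describe the flaw itself, so the following is not a reconstruction of the Booking.com issue. It is only a minimal sketch of two widely recommended safeguards for social login: exact matching of the redirect URI against a registered list, and a per-session `state` value checked on return. The URI and all function names are hypothetical.

```python
import hmac
import secrets

# Hypothetical registered callback; a real deployment would load this from config.
REGISTERED_REDIRECT_URIS = {"https://app.example.com/oauth/callback"}


def is_allowed_redirect(redirect_uri: str) -> bool:
    """Accept only an exact match: no prefix, wildcard or normalised comparison."""
    return redirect_uri in REGISTERED_REDIRECT_URIS


def new_state() -> str:
    """Unguessable value stored in the user's session before redirecting out."""
    return secrets.token_urlsafe(32)


def state_matches(expected: str, received: str) -> bool:
    """Constant-time comparison of the stored state with the one returned."""
    return hmac.compare_digest(expected.encode(), received.encode())
```

Small deviations, such as accepting any URI that merely starts with the registered one, are the sort of "single small wrong move" that can undermine an otherwise working login flow.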

More automation, not just additional tech talent, is what is needed to stay ahead of cybersecurity risks

Just over three-quarters of CISOs believe that their limited bandwidth and

lack of resources have led to important security initiatives falling by the

wayside, and nearly 80% claimed they have received complaints from board

members, colleagues or employees that security tasks are not being handled

effectively. ... Stress is also having an impact on hiring. 83% of the

CISOs surveyed admitted they have had to compromise on the staff they hire

to fill gaps left by employees who have quit their job. “I’ve never tried

harder in my career to keep people than I have in the past few years,”

said Rader. “It’s so key to hang onto good talent because without those

people you’re always going to be stuck focusing on operations instead of

strategy.” But there are solutions — and it’s not just finding more

talent, says George Tubin, director of product marketing at Cynet. He said

CISOs want more automated tools to manage repetitive tasks, better

training, and the ability to outsource some of their work.

Quote for the day:

"No great manager or leader ever fell from heaven, it's learned not

inherited." -- Tom Northup
