Quote for the day:

"Surround yourself with great people; delegate authority; get out of the way" -- Ronald Reagan

Data sovereignty: an existential issue for nations and enterprises

Law-making bodies have in recent years sought to regulate data flows to

strengthen their citizens’ rights – for example, the EU bolstering individual

citizens’ privacy through the General Data Protection Regulation (GDPR). This

kind of legislation has redefined companies’ scope for storing and processing

personal data. By raising the compliance bar, such measures are already

reshaping C-level investment decisions around cloud strategy, AI adoption and

third-party access to their corporate data. ... Faced with dynamic data

sovereignty risks, enterprises have three main approaches ahead of them:

First, they can take an intentional risk assessment approach. They can define

a data strategy addressing urgent priorities, determining what data should go

where and how it should be managed, based on key metrics such as data

sensitivity, the nature of personal data, downstream impacts, and the

potential for identification. Such a forward-looking approach will, however,

require a clear vision and detailed planning. Alternatively, the enterprise

could be more reactive and detach entirely from its non-domestic public cloud

service providers. This is riskier, given the likely loss of access to

innovation and, worse, the financial fallout that could undermine its

pursuit of key business objectives. Lastly, leaders may choose to do nothing

and hope that none of these risks directly affects them. This is the

highest-risk option, leaving no protection from potentially devastating

financial and reputational consequences of an ineffective data sovereignty

strategy.
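
The first approach, deciding where data should go from metrics such as sensitivity and the potential for identification, could be sketched roughly as follows. This is a minimal illustration, not a compliance tool: the `Dataset` fields, the 1 to 5 sensitivity scale, and the three placement tiers are all assumptions made for the example and do not come from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    sensitivity: int         # hypothetical scale: 1 (low) to 5 (high)
    has_personal_data: bool  # does the dataset contain personal data?
    identifiable: bool       # could individuals be identified from it?


def placement(ds: Dataset) -> str:
    """Return a hypothetical placement tier for a dataset."""
    # Personal data that can identify individuals gets the strictest tier.
    if ds.has_personal_data and ds.identifiable:
        return "in-region only"
    # Highly sensitive but non-identifying data is kept local where possible.
    if ds.sensitivity >= 4:
        return "in-region preferred"
    # Everything else may go to any region the enterprise has approved.
    return "any approved region"


if __name__ == "__main__":
    for ds in (
        Dataset("hr_records", 3, True, True),
        Dataset("pricing_models", 5, False, False),
        Dataset("public_catalog", 1, False, False),
    ):
        print(ds.name, "->", placement(ds))
```

A real assessment would weigh downstream impacts and jurisdiction-specific rules as well; the point here is only that the decision can be written down as explicit, reviewable rules.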

Verification Debt: When Generative AI Speeds Change Faster Than Proof

Software delivery has always lived with an imbalance. It is easier to change a

system than to demonstrate that the change is safe under real workloads, real

dependencies, and real failure modes. ... The risk is not that teams become

careless. The risk is that what looks correct on the surface becomes abundant

while evidence remains scarce. ... A useful name for what accumulates in the

mismatch is verification debt. It is the gap between what you released and

what you have demonstrated, with evidence gathered under conditions that

resemble production, to be safe and resilient. Technical debt is a bet about

future cost of change. Verification debt is unknown risk you are running right

now. Here, verification does not mean theorem proving. It means evidence from

tests, staged rollouts, security checks, and live production signals that is

strong enough to block a release or trigger a rollback. It is uncertainty

about runtime behavior under realistic conditions, not code cleanliness, not

maintainability, and not simply missing unit tests. If you want to spot

verification debt without inventing new dashboards, look at proxies you may

already track. ... AI can help with parts of verification. It can suggest

tests, propose edge cases, and summarize logs. It can raise verification

capacity. But it cannot conjure missing intent, and it cannot replace the need

to exercise the system and treat the resulting evidence as strong enough to

change the release decision. Review is helpful. Review is evidence of

readability and intent.
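
One way to picture evidence that is "strong enough to block a release or trigger a rollback" is a small release gate. The sketch below is only an illustration under stated assumptions: the names `Evidence`, `REQUIRED_SOURCES`, `verification_debt`, `can_release` and `should_roll_back`, and the 1% error-rate limit, are hypothetical and not taken from the article.

```python
from dataclasses import dataclass

# Hypothetical evidence sources, based on the kinds of evidence listed in the text.
REQUIRED_SOURCES = ("tests", "staged_rollout", "security_checks")


@dataclass(frozen=True)
class Evidence:
    source: str   # which check produced this evidence
    passed: bool  # whether the check passed under production-like conditions


def verification_debt(evidence: list[Evidence]) -> list[str]:
    """Return the required sources that have no passing evidence."""
    passing = {e.source for e in evidence if e.passed}
    return [s for s in REQUIRED_SOURCES if s not in passing]


def can_release(evidence: list[Evidence]) -> bool:
    """Any open verification debt blocks the release."""
    return not verification_debt(evidence)


def should_roll_back(error_rate: float, max_error_rate: float = 0.01) -> bool:
    """Live production signal: roll back when the error rate exceeds the limit."""
    return error_rate > max_error_rate


if __name__ == "__main__":
    gathered = [Evidence("tests", True), Evidence("staged_rollout", False)]
    print("open debt:", verification_debt(gathered))
    print("can release:", can_release(gathered))
```

The design choice worth noting is that missing evidence counts the same as failed evidence: a change that was never exercised is treated as unverified, not as safe.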

Executive-level CISO titles surge amid rising scope strain

Executive-level CISOs were more likely to report outside IT than peers with VP

or director titles, according to the findings. The report frames this as part

of a broader shift in how organisations place accountability for cyber risk

and oversight. The findings arrive as boards and senior executives assess

cyber exposure alongside other enterprise risks. The report links these

expectations to the need for security leaders to engage across legal, risk,

operations and other functions. ... Smaller organisations and industries with

leaner security teams showed the highest levels of strain, the report says. It

adds that CISOs warn these imbalances can delay strategic initiatives and push

teams towards reactive security operations. The report positions this issue as

a management challenge as well as a governance question. It links scope creep

with wider accountability and higher expectations on security leaders, even

where budgets and staffing remain constrained. ... Recruiters and employers

have watched turnover trends closely as demand for senior security leadership

has remained high across many sectors. The report suggests that title, scope

and reporting structure form part of how CISOs evaluate roles. ... "The

demand for experienced CISOs remains strong as the role continues to become

more complex and more 'executive'," said Martano. "Understanding how

organizations define scope, reporting structure, and leadership access and

visibility is critical for CISOs planning their next move and for companies

looking to hire or retain security leaders."

What’s in, and what’s out: Data management in 2026 has a new attitude

Data governance is no longer a bolt-on exercise. Platforms like Unity Catalog,

Snowflake Horizon and AWS Glue Catalog are building governance into the

foundation itself. This shift is driven by the realization that external

governance layers add friction and rarely deliver reliable end-to-end

coverage. The new pattern is native automation. Data quality checks, anomaly

alerts and usage monitoring run continuously in the background. ... Companies

want pipelines that maintain themselves. They want fewer moving parts and

fewer late-night failures caused by an overlooked script. Some organizations

are even bypassing pipes altogether. Zero ETL patterns replicate data from

operational systems to analytical environments instantly, eliminating the

fragility that comes with nightly batch jobs. ... Traditional enterprise

warehouses cannot handle unstructured data at scale and cannot deliver the

real-time capabilities needed for AI. Yet the opposite extreme has failed too.

The highly fragmented Modern Data Stack scattered responsibilities across too

many small tools. It created governance chaos and slowed down AI readiness.

Even the rigid interpretation of Data Mesh has faded. ... The idea of humans

reviewing data manually is no longer realistic. Reactive cleanup costs too

much and delivers too little. Passive catalogs that serve as wikis are

declining. Active metadata systems that monitor data continuously are now

essential.
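
As a rough illustration of a data quality check that runs continuously in the background, the sketch below flags a daily row count that deviates sharply from recent history. It is a generic statistical check written for this example, not the mechanism of any platform named above; the function name `is_anomalous` and the three-standard-deviation threshold are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, k: float = 3.0) -> bool:
    """Flag `latest` if it lies more than k sample standard deviations from the mean."""
    if len(history) < 2:
        # Not enough history to estimate spread, so do not raise an alert.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat history: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) > k * sigma


if __name__ == "__main__":
    daily_row_counts = [1000, 1010, 990, 1005, 995]  # made-up example values
    print(is_anomalous(daily_row_counts, 1003))  # close to the usual volume
    print(is_anomalous(daily_row_counts, 400))   # sudden drop in volume
```

An active metadata system would typically run a check like this on every load and attach the result to the dataset's catalog entry, instead of waiting for a person to notice the drop.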

Data governance is no longer a bolt-on exercise. Platforms like Unity Catalog,

Snowflake Horizon and AWS Glue Catalog are building governance into the

foundation itself. This shift is driven by the realization that external

governance layers add friction and rarely deliver reliable end-to-end

coverage. The new pattern is native automation. Data quality checks, anomaly

alerts and usage monitoring run continuously in the background. ... Companies

want pipelines that maintain themselves. They want fewer moving parts and

fewer late-night failures caused by an overlooked script. Some organizations

are even bypassing pipes altogether. Zero ETL patterns replicate data from

operational systems to analytical environments instantly, eliminating the

fragility that comes with nightly batch jobs. ... Traditional enterprise

warehouses cannot handle unstructured data at scale and cannot deliver the

real-time capabilities needed for AI. Yet the opposite extreme has failed too.

The highly fragmented Modern Data Stack scattered responsibilities across too

many small tools. It created governance chaos and slowed down AI readiness.

Even the rigid interpretation of Data Mesh has faded. ... The idea of humans

reviewing data manually is no longer realistic. Reactive cleanup costs too

much and delivers too little. Passive catalogs that serve as wikis are

declining. Active metadata systems that monitor data continuously are now

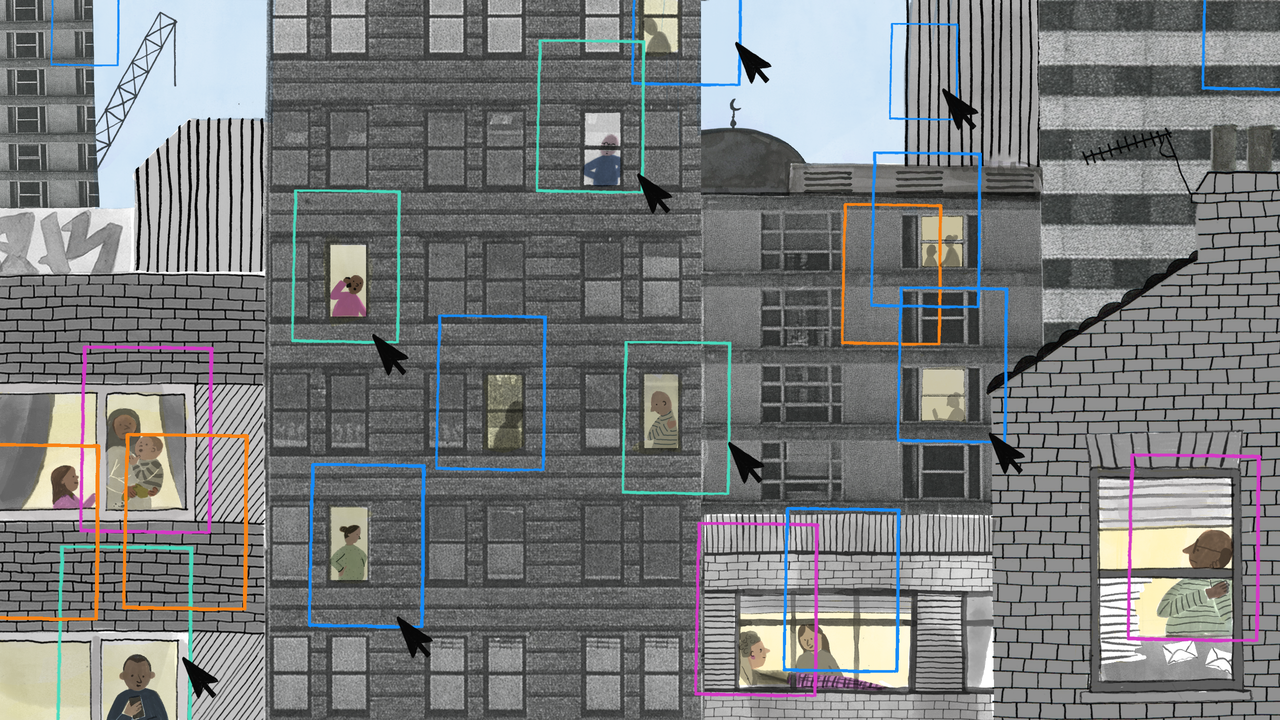

essential.How Algorithmic Systems Automate Inequality

The deployment of predictive analytics in public administration is usually

justified by the twin pillars of austerity and accuracy. Governments and

private entities argue that automated decision-making systems reduce

administrative bloat while eliminating the subjectivity of human caseworkers.

... This dynamic is clearest in the digitization of the welfare state. When

agencies turn to machine learning to detect fraud, they rarely begin with a

blank slate, training their models on historical enforcement data. Because

low-income and minority populations have historically been subject to higher

rates of surveillance and policing, these datasets are saturated with

selection bias. The algorithm, lacking sociopolitical context, interprets this

over-representation as an objective indicator of risk, identifying correlation

and deploying it as causality. ... Algorithmic discrimination, however, is

diffuse and difficult to contest. A rejected job applicant or a flagged

welfare recipient rarely has access to the proprietary score that disqualified

them, let alone the training data or the weighting variable—they face a black

box that offers a decision without a rationale. This opacity makes it nearly

impossible for an individual to challenge the outcome, effectively insulating

the deploying organisation from accountability. ... Algorithmic systems do not

observe the world directly; they inherit their view of reality from datasets

shaped by prior policy choices and enforcement practices. To assess such

systems responsibly requires scrutiny of the provenance of the data on which

decisions are built and the assumptions encoded in the variables selected.
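
The selection-bias mechanism described above can be made concrete with a small piece of arithmetic. In the sketch below every number is invented for illustration: two groups have the same true fraud rate, but one is audited six times as often, so it accumulates six times as many recorded cases in the historical data a model would be trained on.

```python
from fractions import Fraction


def recorded_cases(population: int, audit_rate: Fraction, true_rate: Fraction) -> Fraction:
    """Expected fraud cases that end up in the enforcement data.

    Only audited people can be recorded, so the count scales with the
    audit rate even when the underlying fraud rate is identical.
    """
    return population * audit_rate * true_rate


# Hypothetical figures: same size, same true fraud rate, different scrutiny.
TRUE_RATE = Fraction(2, 100)
heavily_audited = recorded_cases(10_000, Fraction(30, 100), TRUE_RATE)
lightly_audited = recorded_cases(10_000, Fraction(5, 100), TRUE_RATE)

if __name__ == "__main__":
    print("recorded cases, heavily audited group:", heavily_audited)
    print("recorded cases, lightly audited group:", lightly_audited)
    print("apparent risk ratio:", heavily_audited / lightly_audited)
```

A model trained on these counts, with no record of who was audited and who was not, would see the first group as several times riskier even though the behaviour of the two groups is identical by construction.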

DevSecOps for MLOps: Securing the Full Machine Learning Lifecycle

Autonomous Supply Chains: Catalyst for Building Cyber-Resilience

Autonomous supply chains are becoming essential for building resilience amid

rising global disruptions. Enabled by a strong digital core, agentic

architecture, AI and advanced data-driven intelligence, together with IoT and

robotics, they facilitate operations that continuously learn, adapt and

optimize across the value chain. ... Conventional thinking suggests that

greater autonomy widens the attack surface and diminishes human oversight,

turning it into a security liability. However, if designed with cyber

resilience at its core, an autonomous supply chain can act like a “digital immune

system,” becoming one of the most powerful enablers of security. ... As AI

operations and autonomous supply chains scale, a traditional perimeter simply

won’t work. Organizations must adopt a Zero Trust security model to eliminate

implicit trust at every access point. A Zero Trust model, centered on

AI-driven identity and access management, ensures continuous authentication,

network micro-segmentation and controlled access across users, devices and

partners. By enforcing “never trust, always verify,” organizations can

minimize breach impact and keep attackers from moving freely across

systems, maintaining control even in highly automated environments. ...

Autonomy in the supply chain thrives on data sharing and connectivity across

suppliers, carriers, manufacturers, warehouses and retailers, making

end-to-end visibility and governance vital for both efficiency and

security.
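
The "never trust, always verify" principle can be illustrated with a deny-by-default check that is evaluated on every request. This is a toy sketch under stated assumptions, not a Zero Trust product: the `Request` fields, the `POLICY` allow-list and the partner names in it are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    principal: str        # user, device or partner identity making the call
    authenticated: bool   # identity was verified for this request
    device_trusted: bool  # device posture check passed for this request
    segment: str          # network micro-segment the caller sits in
    resource: str         # what the caller wants to reach


# Hypothetical allow-list of (principal, segment, resource) combinations.
POLICY = {
    ("carrier-api", "logistics", "shipment-status"),
    ("warehouse-bot", "fulfilment", "inventory"),
}


def authorize(req: Request) -> bool:
    """Deny by default; allow only verified callers with an explicit rule."""
    if not (req.authenticated and req.device_trusted):
        return False
    return (req.principal, req.segment, req.resource) in POLICY


if __name__ == "__main__":
    ok = Request("carrier-api", True, True, "logistics", "shipment-status")
    lateral = Request("carrier-api", True, True, "logistics", "inventory")
    print(authorize(ok), authorize(lateral))
```

Because the segment is part of the rule, a caller that is valid in one segment gains nothing in another, which is the containment property the paragraph describes.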

When enterprise edge cases become core architecture

What matters most is not the presence of any single technology, but the

requirements that come with it. Data that once lived in separate systems now

must be consistent and trusted. Mobile devices are no longer occasional access

points but everyday gateways. Hiring workflows introduce identity and access

considerations sooner than many teams planned for. As those realities stack

up, decisions that once arrived late in projects are moving closer to the

start. Architecture and governance stop being cleanup work and start becoming

prerequisites. ... AI is no longer layered onto finished systems. Mobile is no

longer treated as an edge. Hiring is no longer insulated from broader

governance and security models. Each of these shifts forces organizations to

think earlier about data, access, ownership and interoperability than they are

used to doing. What has changed is not just ambition, but feasibility. AI can

now work across dozens of disparate systems in ways that were previously

unrealistic. Long-standing integration challenges are no longer theoretical

problems. They are increasingly actionable -- and increasingly unavoidable.

... As a result, integration, identity and governance can no longer sit

quietly in the background. These decisions shape whether AI initiatives move

beyond experimentation, whether access paths remain defensible and whether

risk stays contained or spreads. Organizations that already have a clear view

of their data, workflows and access models will find it easier to

adapt.

Why New Enterprise Architecture Must Be Built From Steel, Not Straw

Architecture must reflect future ambition. Ideally, architects build systems

with a clear view of where the product and business are heading. When a system

architecture is built for the present situation, it’s likely lacking in

flexibility and scalability. That said, sound strategic decisions should be

informed by well-attested or well-reasoned trends, not just present needs and

aspirations. ... Tech leaders should avoid overcommitting to unproven

ideas; that is, they should not get "caught up" in the hype. Safe experimentation frameworks

(from hypothesis to conclusion) reduce risk by carefully applying best practices

to testing out approaches. In a business context with something as important as

the technology foundation the organization runs on, do not let anyone

mischaracterize this as timidity. Critical failure is a career-limiting move,

and potentially an organizational catastrophe. ... The art lies in designing

systems that can absorb future shifts without constant rework. That comes from

aligning technical decisions not only with what the company is today, but also

what it intends to become. Future-ready architecture isn’t the comparatively

steady and predictable discipline it was before AI-enabled software features. As

a consequence, there’s wisdom in staying directional, rather than architecting

for the next five years. Align technical decisions with long-term vision but

build with optionality wherever possible.
