Quote for the day:

“Rarely have I seen a situation where doing less than the other guy is a good strategy.” -- Jimmy Spithill

Is Your Enterprise Architecture Ready for AI?

The old model of building, deploying, and governing apps is being reshaped into a composable enterprise blueprint. By abstracting complexity through visual models and machine intelligence, businesses are creating systems that are faster to adapt yet demand stronger governance, interoperability, and security. What emerges is not just acceleration but transformation at the foundation. ... With AI copilots spitting out code at scale, the traditional software development life cycle faces an existential test. Developers may not fully understand every line of AI-generated code, making manual reviews insufficient. The solution: automate aggressively. ... This new era also demands AI observability in the SDLC, tracking provenance, explainability, and liability. Provenance shows the chain of prompts and responses. Explainability clarifies decisions. Bias and drift monitoring ensure AI systems don’t quietly shift into harmful or unreliable patterns. Without these, enterprises risk blind trust in black-box code. ... The destination for enterprises is clear: AI-native enterprise architecture and composable enterprise blueprint strategies, where every capability is exposed as an API and orchestrated by low-code/no-code (LCNC) tools and AI. The road, however, is slowed by legacy monoliths in industries like banking and healthcare. These systems won’t vanish overnight. Instead, strategies like wrapping monoliths with APIs and gradually replacing components will define the journey.
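
The idea of wrapping a monolith with an API and replacing components gradually can be illustrated with a small routing facade. This is only a sketch under stated assumptions: `StranglerFacade`, `legacy_monolith`, `new_payments_service`, and the capability names are hypothetical and do not come from the article.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class StranglerFacade:
    """Single API entry point: routes a capability to its replacement
    service if one is registered, otherwise falls back to the monolith."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, capability: str, handler: Handler) -> None:
        # Register a new component that takes over one capability.
        self._migrated[capability] = handler

    def call(self, capability: str, request: dict) -> dict:
        handler = self._migrated.get(capability)
        if handler is None:
            # Not migrated yet: the wrapped monolith still serves it.
            return self._legacy({**request, "capability": capability})
        return handler(request)


def legacy_monolith(request: dict) -> dict:
    # Hypothetical stand-in for the legacy system behind the wrapper.
    return {"served_by": "monolith", "capability": request["capability"]}


def new_payments_service(request: dict) -> dict:
    # Hypothetical replacement component.
    return {"served_by": "payments-service", "capability": "payments"}


facade = StranglerFacade(legacy_monolith)
before = facade.call("payments", {"amount": 10})
facade.migrate("payments", new_payments_service)
after = facade.call("payments", {"amount": 10})
untouched = facade.call("statements", {})
```

Callers only ever talk to the facade, so each capability can be moved off the monolith one at a time without changing client code.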

After LLMs and agents, the next AI frontier: video language models

World models, which some refer to as video language models, are the new frontier in AI, following in the footsteps of the iconic ChatGPT and, more recently, AI agents. Current AI tech largely affects digital outcomes, but world models will allow AI to improve physical outcomes. World models are designed to help robots understand the physical world around them, allowing them to track, identify and memorize objects. On top of that, just like humans planning their future, world models allow robots to determine what comes next and plan their actions accordingly. ... Beyond robotics, world models simulate real-world scenarios. They could be used to improve safety features for autonomous cars or simulate a factory floor to train employees. World models pair human experiences with AI in the real world, said Deepak Seth, director analyst at Gartner. “This human experience and what we see around us, what’s going on around us, is part of that world model, which language models are currently lacking,” Seth said. ... World models are one of several tools that will be used to deploy robots in the real world, and they will continue to improve, said Kenny Siebert, AI research engineer at Standard Bots. But the models suffer from problems similar to those that affect the likes of ChatGPT and video generators: hallucinations and degradation. Moving hallucinations into the physical world could cause harm, so researchers are trying to solve those kinds of issues.

Hub & Spoke: The Operating System for AI-Enabled Enterprise Architecture

Today most enterprises still run on heroics, emails, slide decks, and

200-person conference calls. Even when a good repository and healthy

collaboration culture exist, nothing “sticks” without a mechanism that

relentlessly harvests reality, unifies understanding, and broadcasts the right

truth to the right person at the right moment. That mechanism is a new

application of hub-and-spoke – not just for data integration, but for

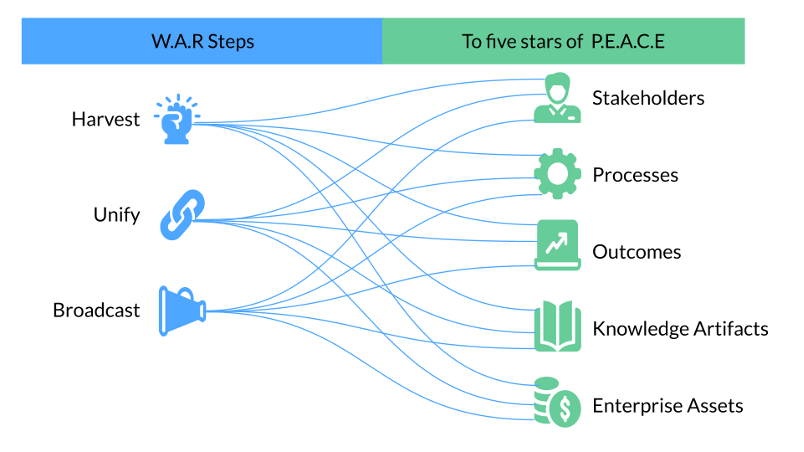

architecture governance itself. We call it simply Hub & Spoke. ... At the

centre runs a continuous cycle of three actions: Harvest – Ingest everything

that matters: scanner output, CI/CD metadata, application inventories, risk

registers, process models, meeting outcomes, human feedback, and

(increasingly) agentic AI crawls; Unify – Connect the dots. Establish

relationships, resolve duplicates, detect patterns and anti-patterns, and

maintain one coherent model of the enterprise; and Broadcast – Push the right

view, in the right language, through the right channel, at the right time. A

CIO sees strategic heatmaps; a developer receives contextual architecture

guardrails inside the IDE; a regulator gets a compliance report on demand. ...

To fully leverage the H.U.B. actions, we apply them to five fundamental

capabilities that drive any organisation, encapsulated in S.P.O.K.E.:

Stakeholders – who cares and who decides; Processes – sequences that

deliver value; Outcome – the why (always placed in the centre of the

model); Knowledge – codified artefacts (models, policies, decisions,

blueprints); and Enterprise Assets – systems, data, infrastructure,

contracts
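
The Harvest, Unify, Broadcast cycle described above could be sketched roughly as follows. This is an illustrative toy, not the authors' implementation: the `Hub` and `Observation` classes, the name-normalisation rule used to resolve duplicates, and the two audience views are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class Observation:
    source: str  # e.g. "scanner", "ci_cd", "inventory" (hypothetical labels)
    asset: str   # asset name exactly as the source reported it
    fact: str    # one statement about the asset


@dataclass
class Hub:
    model: Dict[str, Set[str]] = field(default_factory=dict)
    _inbox: List[Observation] = field(default_factory=list)

    def harvest(self, observations: Iterable[Observation]) -> None:
        # Harvest: ingest raw observations from any source.
        self._inbox.extend(observations)

    def unify(self) -> None:
        # Unify: resolve duplicates by normalising asset names (an assumed,
        # deliberately naive rule) and merge facts into one model.
        for obs in self._inbox:
            key = obs.asset.strip().lower()
            self.model.setdefault(key, set()).add(obs.fact)
        self._inbox.clear()

    def broadcast(self, audience: str) -> dict:
        # Broadcast: shape the same model differently per audience.
        if audience == "cio":
            return {asset: len(facts) for asset, facts in self.model.items()}
        return {asset: sorted(facts) for asset, facts in self.model.items()}


hub = Hub()
hub.harvest([
    Observation("scanner", "Billing-API", "runs on host-7"),
    Observation("inventory", " billing-api ", "owned by finance"),
])
hub.unify()
cio_view = hub.broadcast("cio")
developer_view = hub.broadcast("developer")
```

Here the two differently spelled asset names collapse into one entry, and the CIO view shows only a count per asset while the developer view lists the underlying facts.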

Orchestrating value: The new discipline of continuous digital transformation

The most important principle for any CIO today is deceptively simple: every transformation must begin with value and be engineered for agility. In a volatile and fast-moving environment, success depends not on how much technology you deploy, but on how effectively you align it to outcomes that matter. Every initiative should begin with clarity of purpose. What is the value hypothesis? What problem are we solving? Who owns the outcome, and when will impact be visible? ... Architecture then becomes the critical enabler. Agility must be built into the design, through modular platforms, adaptable processes, and feedback-driven operating models that allow business change, talent movement, and technological evolution to coexist seamlessly. Measurement turns agility from theory into discipline. Continuous value reviews, architectural checkpoints, and strategy resets ensure transformation remains evidence-led rather than aspirational. Every initiative must answer three questions: Why value? Why now? Why this architecture? In a world defined by velocity and volatility, transformation isn’t about doing more – it’s about doing what matters, faster, smarter, and with enduring value. ... Today’s CIOs also demand composable, interoperable platforms that integrate seamlessly into existing ecosystems, avoiding vendor lock-in while accelerating scale through APIs, microservices, and modular architectures. Partners must bring both agility and discipline – speed balanced with governance.

Why Integration Debt Threatens Enterprise AI and Modernization

AI agents rely on fast, trusted data exchanges across applications. However, point-to-point connectors often break under new query loads. Matt McLarty of MuleSoft states that integration challenges slow digital transformation. Integration Debt surfaces here as latent System Friction that derails AI pilots. Furthermore, developers spend 39% of their time writing custom glue code. Consequently, innovation budgets shrink while maintenance backlogs grow. Such opportunity cost defines Integration Debt in real dollars and morale. Disconnected integrations throttle AI benefits and drain talent. In contrast, scale introduces additional complexity exposed next. ... Effective governance establishes shared schemas, versioning, and certification for every API. Nevertheless, shadow IT and citizen developers complicate enforcement. Therefore, leading CIOs create integration review boards with quarterly scorecards. Accenture and Deloitte embed such controls in Modernization playbooks to prevent relapse. Additionally, companies publish portal dashboards that display live Integration Debt metrics to executives. ... The evidence is clear: disconnected architectures tax innovation, security, and profits. Ramsey Theory Group reminds leaders that random complexity often concentrates risk in surprising places. Similarly, unchecked System Friction erodes developer morale and board confidence. However, organizations that quantify debt, enforce governance, and adopt reusable APIs accelerate Modernization success.

The Widening AI Value Gap: Strategic Imperatives for Business Leaders

AI value creation in business settings extends far beyond narrow efficiency gains or cost reductions. Contemporary frameworks increasingly distinguish between three fundamental pathways through which AI generates economic returns: deploying efficiency-enhancing tools, reshaping existing workflows, and inventing entirely new business models. ... Reshaping represents a more ambitious approach, targeting core business workflows for end-to-end transformation. Rather than automating existing steps in isolation, reshaping asks: How would we design this workflow from scratch if AI capabilities were available from the outset? This might involve redesigning marketing campaign development to leverage AI-driven personalization at scale, restructuring supply chain management around predictive demand algorithms, or reimagining customer service through intelligent agent orchestration. ... Value measurement frameworks must capture both tangible and strategic dimensions. Tangible metrics include revenue increases (projected at 14.2% for future-built companies in areas where AI applies by 2028), cost reductions (9.6% for leaders), and measurable improvements in key performance indicators such as time-to-hire, customer satisfaction scores, and defect rates. ... The strategic implications extend beyond near-term financial performance. Organizations trailing in AI maturity face deteriorating competitive positions as digital-native competitors and AI-advanced incumbents reshape industry economics.

4 mandates for CIOs to bridge the AI trust gap

As a CIO, you must recognize that low trust in public AI eventually seeps into the enterprise. If your customers or employees see AI being used unethically in media scenarios through misinformation and bias, or in personal scenarios like cybercrime, their skepticism will bleed into your enterprise-grade CRM or HR systems. The recommendation is to build on the existing trust in the workplace. Use the enterprise as a model for responsible deployment. Document and communicate your internal AI usage policies with exceptional clarity, and allow this transparency to be your market differentiator. Show your customers and partners the standards you hold your internal AI to, and then extrapolate those standards to your external products. ... For CIOs in highly regulated industries such as finance and healthcare, the mandate is to not just maintain but elevate the current level of rigor. The existing regulatory compliance is the baseline, not the ceiling, and the market will punish the first major breach or bias incident, undoing years of consumer confidence. ... We must stop telling end users AI is trustworthy and start showing them through tangible experience. Trust is a feature that must be designed from the start, not something patched in later. The first step is to involve the customer. Implement co-design programs where the end users and customers, not just product managers, are involved in the design and testing phases of new AI applications.

The Enterprise “Anti-Cloud” Thesis: Repatriation of AI Workloads to On-Premises Infrastructure

Today, a new inflection point has arrived: the dawn of artificial intelligence and large-scale model training. Running in parallel is an observable and rapidly growing trend in which companies are repatriating AI workloads from the public cloud to on-premises environments. This “anti-cloud” thesis represents a readjustment, rather than a backlash, mirroring other historical shifts in leadership in which prescience reordered entire industries. As Gartner has remarked, “By 2025, 60% of organizations will use sovereignty requirements as a primary factor in selecting cloud providers.” ... Navigating this transition requires fundamentally different abilities, integrating deep technical fluency with disciplined strategic thinking. AI infrastructure differs sharply from other traditional cloud workloads in that it is compute-intensive, highly resource-intensive, latency-sensitive, and tightly connected with data governance. ... The repatriation of AI workloads brings several challenges: lack of AI infrastructure talent, high upfront GPU procurement costs, operational overhead, security risks, and sustainability concerns. Leaders must manage hardware supply chain volatility, model reliability, and energy efficiency. Lacking disciplined governance, repatriation creates a high risk of cost overruns and fragmentation. The central challenge is to balance innovation with control, calling for transparency of plans and scenario modeling.
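
As a rough illustration of what scenario modeling for repatriation might look like, the sketch below computes how many months it takes for on-premises savings to repay the upfront hardware spend. Every figure and scenario name is hypothetical and chosen only to make the arithmetic visible; real models would also need to cover energy, staffing, and hardware refresh cycles.

```python
import math
from typing import Dict, Optional, Tuple


def break_even_months(
    upfront_capex: float,
    onprem_monthly_opex: float,
    cloud_monthly_cost: float,
) -> Optional[int]:
    """Months until cumulative on-prem cost drops below cumulative cloud cost.

    Returns None when on-prem never pays back (no monthly saving).
    """
    monthly_saving = cloud_monthly_cost - onprem_monthly_opex
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_capex / monthly_saving)


# (upfront GPU capex, on-prem monthly opex, cloud monthly cost); all made up.
scenarios: Dict[str, Tuple[float, float, float]] = {
    "steady_high_utilisation": (1_200_000, 40_000, 90_000),
    "low_utilisation": (1_200_000, 40_000, 45_000),
    "cloud_cheaper": (1_200_000, 40_000, 35_000),
}

results = {name: break_even_months(*args) for name, args in scenarios.items()}
```

With these assumed inputs the steady scenario pays back in 24 months, the low-utilisation scenario in 240 months, and the last one never, which is the kind of spread that makes plans easier to compare openly.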

The Fragile Edge: Chaos Engineering For Reliable IoT

Chaos engineering is mostly used in cloud environments because it works very well there. However, it is more difficult to apply to IoT and edge computing systems. IoT devices are physical, often located in remote places and sometimes perform critical tasks. This makes managing them even more challenging. Restarting cloud servers using scripts is usually simple. But rebooting medical devices like pacemakers, industrial robots or warehouse sensors is much more complex and can be dangerous. Resetting edge devices also takes longer because system failures often have immediate physical outcomes. Chaos engineering in IoT systems has both benefits and challenges. Engineers need to design methods to test failures safely without harming devices. The testing process aims to detect equipment breakdowns while developing systems that function during actual operational conditions. The proven cloud software methods of chaos engineering enable organisations to meet the requirements of edge devices. ... The implementation of chaos engineering for IoT systems requires both strategic planning and innovative solutions. Engineers should perform system vulnerability tests, which ensure operational safety and reliability for real-world deployment. The risk assessment process needs tested and accurate methods to protect both system devices and their users from harm. ... Organisations need to maintain ethical standards when they use chaos engineering to safeguard their IoT systems. Engineers who want to perform IoT chaos testing need to follow established safety protocols.
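
One way to picture testing failures safely is a fault-injection experiment that refuses to touch safety-critical devices and always rolls the fault back. The sketch below runs against a simulated device only; `SimulatedSensor`, `run_uplink_drop`, the device names, and the fixed reading are all hypothetical and not taken from the article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SimulatedSensor:
    name: str
    safety_critical: bool
    uplink_up: bool = True
    buffered: int = 0

    def report(self) -> Optional[float]:
        """Send one reading; buffer it locally if the uplink is down."""
        if not self.uplink_up:
            self.buffered += 1
            return None
        return 21.5  # fixed dummy reading for the simulation


def run_uplink_drop(device: SimulatedSensor, ticks: int = 3) -> dict:
    # Guardrail: never inject faults into safety-critical devices.
    if device.safety_critical:
        raise PermissionError(f"refusing to inject faults into {device.name}")
    device.uplink_up = False  # inject the fault
    try:
        sent = [device.report() for _ in range(ticks)]
    finally:
        device.uplink_up = True  # always roll back, even if a step fails
    return {
        "lost_in_flight": sent.count(None),
        "buffered": device.buffered,
        "recovered": device.report() is not None,
    }


warehouse_sensor = SimulatedSensor("warehouse-temp-1", safety_critical=False)
report = run_uplink_drop(warehouse_sensor)

infusion_pump = SimulatedSensor("infusion-pump-1", safety_critical=True)
try:
    run_uplink_drop(infusion_pump)
    pump_blocked = False
except PermissionError:
    pump_blocked = True
```

The experiment checks that readings are buffered rather than lost while the uplink is down and that the device reports normally again afterwards, while the guard keeps the experiment away from anything marked safety-critical.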
