Quote for the day:

"The only way to achieve the impossible is to believe it is possible." -- Charles Kingsleigh

Way too complex: why modern tech stacks need observability

Recent outages have demonstrated that a heavy dependence on digital systems

can lead to cascading faults that can halt financial transactions, disrupt

public transportation and even bring airport operations to a standstill. ...

To operate with confidence, businesses must see across their entire digital

supply chain, which is not possible with basic monitoring. Unlike traditional

monitoring, which often focuses on siloed metrics or alerts, observability

provides a unified, real-time view across the entire technology stack,

enabling faster, data-driven decisions at scale. Implementing real-time,

AI-powered observability covers every component from infrastructure and

services to applications and user experience. ... Observability also enables

organizations to proactively detect anomalies before they escalate into

outages, quickly pinpoint root causes across complex, distributed systems and

automate response actions to reduce mean time to resolution (MTTR). The result

is faster, smarter and more resilient operations, giving teams the confidence

to innovate without compromising system stability, a critical advantage in a

world where digital resilience and speed must go hand in hand. Resilient

systems must absorb shocks without breaking. This requires both cultural and

technical investment, from embracing shared accountability across teams to

adopting modern deployment strategies like canary releases, blue/green

rollouts and feature flagging.
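
As a rough illustration of the "detect anomalies before they escalate" idea, here is a minimal Python sketch of a trailing-window z-score check on a latency series. It is not taken from the article: the function name, the window size, the threshold, and the sample latencies are all assumptions chosen for the example, and production observability tools use considerably richer models.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that sit more than `threshold` standard
    deviations away from the mean of the preceding `window` samples."""
    history = deque(maxlen=window)  # trailing window of recent samples
    anomalies = []
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip flat windows (sigma == 0) to avoid flagging everything.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Hypothetical request latencies in milliseconds, with one spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 250, 101, 100]
print(detect_anomalies(latencies_ms))  # [7]
```

Note that the spike itself enters the window afterwards and inflates the baseline for the next few samples, which is one reason real systems tend to use more robust statistics.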

Radical Empowerment From Your Leadership: Understood by Few, Essential for All

“Radical empowerment, for me, isn’t about handing people a seat at the table.

It’s about making sure they know the seat is already theirs,” said Trenika

Fields, Business Legal, AI Leader at Cisco, MIT Sloan EMBA Class of ’26. “I

set the vision and I trust my team to execute in ways that are anchored in the

mission and tied to real business outcomes. But trust without depth doesn’t

work. That’s where leading with empathy comes in. It’s my secret sauce, and it

has to be real. You can’t fake it. People know when it’s performative. Real

empathy builds confidence, and confidence fuels bold, decisive execution. When

people feel seen, trusted, and strategically aligned, they lead like builders,

not bystanders. Strip that trust and empathy away, and radical disempowerment

moves in fast. Voices go quiet. Momentum dies. Innovation flatlines. But when

you get it right, you don’t just build teams. You build powerhouses that set

the standard and raise the bar for everyone else.” Why, given how simple this

is, is it so hard for senior leadership to do versus say? I worked in an

environment years ago when “radical candor” was the theme du jour rather than

“radical empowerment.” An executive over an executive over my boss was

explaining radical candor, which, very simply put, means being constructive

and forthright with empathy to help others grow.

Banks Can Convert Messy Data into Unstoppable Growth

Banks recognize the potential in tapping a trove of customer data, much of it

unstructured, as a tool to personalize interactions and become more proactive.

They are sitting on a goldmine of unstructured information hidden in PDFs,

scanned forms, call notes and emails — data that, once cleaned and organized,

can unlock new business opportunities, says Drew Singer, head of product at

Middesk. ... The ability to successfully turn data into insights often depends

on clear parameters for how data is handled. This includes a shared

understanding of who owns the data, how it will be managed and stored, and a

defined governance structure — possibly through committees — for overseeing

its use, Deutsch says. "If you don’t set these rules, once data starts

flowing, you will lose control of it. You will most likely lose quality," he

says. ... With the data governance structure firmly in place, FIs are

positioned to use additional tools to garner action-oriented insights across

the organization. Truist Client Pulse, for example, uses AI and machine

learning to analyze customer feedback across channels. ... "We’ve got a

population of teammates using the tool as it stands today, to better

understand regional performance opportunities …what’s going well with certain

solutions that we have, and where there are areas of opportunity to enhance

experience and elevate satisfaction to drive to client loyalty," says

Graziano.

Securing Digital Supply Chains: Confronting Cyber Threats in Logistics Networks

Modern logistics networks are filled with connected devices — from IoT sensors

tracking shipments and telematics in trucks, to automated sorting systems and

industrial controls in smart warehouses and ports. This Internet of Things

(IoT) revolution offers incredible efficiency and real-time visibility, but it

also increases the attack surface. Each connected sensor, RFID reader, camera,

or vehicle telemetry unit is essentially an internet entry point that could be

exploited if not properly secured. The spread of IoT devices introduces new

vulnerabilities that must be managed effectively. For example, a hacker who

hijacks a vulnerable warehouse camera or temperature sensor might find a way

into the larger corporate network. ... The tightly interwoven nature of modern

supply chains amplifies the impact of any single cyber incident, highlighting

the importance of robust cybersecurity measures. Companies are now digitally

linked with vendors and logistics partners, sharing data and connecting

systems to improve efficiency. However, this interdependence means that a

security failure at one point can quickly spread outward. ... While large

enterprises may invest heavily in cybersecurity, they often depend on smaller

partners who might lack the same resources or maturity. Global supply chains

can involve hundreds of suppliers and service providers with varying security

levels.

For OT Cyber Defenders, Lack of Data Is the Biggest Threat

Data in the OT and ICS world is transient, said Lee. Instructions - legitimate,

or not - flow across the network. Once executed, they vanish. "If I don't

capture it during the attack, it's gone," Lee said. Post-incident forensics is

basically impossible without specialized monitoring tools already in place. "So

for the companies that aren't doing that data collection, that monitoring, prior

to the attacks, they have no chance at actually figuring out if a cyberattack

was involved or not." And that is a problem when nation-state adversaries have

pre-positioned themselves within the networks of critical infrastructure

providers, apparently ready to pivot to OT exploitation in time of conflict. ...

Even when critical infrastructure operators do capture OT monitoring data, the

sheer complexity of modern industrial processes means that finding out what went

wrong is difficult. The inability to make use of more detailed data is an

indicator of immaturity in the OT security space, Bryson Bort told Information

Security Media Group. "The way I summarize the OT space is, it's a generation

behind traditional IT," said Bort, a U.S. Army veteran and founder of the

non-profit ICS Village. Bort helps organize the annual Hack the Capitol event,

but he makes his living selling security services to critical infrastructure

owners and operators. Most operators still don't have visibility into the ICS

devices on their networks, Bort said. "What do I have? What assets are on my

network?"

Cross-Border Compliance: Navigating Multi-Jurisdictional Risk with AI

The digital age has turned global expansion from an aspiration into a necessity.

Yet, for companies operating across multiple countries, this opportunity comes

wrapped in a Gordian knot of cross-border compliance. The sheer volume,

complexity, and rapid change of multi-jurisdictional regulations—from GDPR and

CCPA on data privacy to complex Anti-Money Laundering (AML) and financial

reporting rules—pose an existential risk. What seems like a local detail in one

jurisdiction may spiral into a costly mistake elsewhere. ... AI helps with

cross-border compliance by automating risk management through real-time

monitoring, analyzing vast datasets to detect fraud, and keeping up with

constantly changing regulations. It navigates complex rules by using natural

language processing (NLP) to interpret regulatory texts and automating tasks

like document verification for KYC/KYB processes. By providing continuous,

automated risk assessments and streamlining compliance workflows, AI reduces

human error, improves efficiency, and ensures ongoing adherence to global

requirements. AI, specifically through technologies like Machine Learning (ML)

and Natural Language Processing (NLP), is the critical tool for cutting

compliance costs by up to 50% while drastically improving accuracy and speed. AI

and machine learning (ML) solutions, often referred to as RegTech, are

streamlining compliance by automating tasks, enhancing data analysis, and

providing real-time insights.

Best Practices for Building an AI-Powered OT Cybersecurity Strategy

One challenge in defending OT assets is that most industrial facilities still

rely on decades-old hardware and software systems that were not designed with

modern cybersecurity in mind. These legacy systems are often difficult to patch

and contain documented vulnerabilities. Sophisticated adversaries know this and

exploit these outdated systems as a point of entry. ... OT cybersecurity and

regulatory compliance are tightly linked in manufacturing, but not

interchangeable. Consider regulatory compliance the minimum bar you must clear

to stay legally and contractually safe. At the same time, cybersecurity is the

continuous effort you must take to protect your systems and operations.

Manufacturers increasingly must prove OT cyber resilience to customers,

partners, and regulators. A strong cybersecurity posture helps ensure

certifications are passed, contracts are won, and reputations are protected. ...

AI is a powerful tool for bolstering OT cybersecurity strategies by overcoming

the common limitations of traditional, rule-based defenses. AI, whether machine

learning, predictive AI, or agentic AI, provides advanced capabilities to help

defenders detect threats, automate responses, manage assets, and enhance

vulnerability management. ... Human oversight and expertise are vital for

ensuring AI quality and contextual accuracy, especially in safety-critical OT

environments.

Training Data Preprocessing for Text-to-Video Models

Getting videos ready for a dataset is not merely a checkbox task - it’s a

demanding, time-consuming process that can make or break the final model. At

this stage, you’re typically dealing with a large collection of raw footage with

no labels, no descriptions, and at best limited metadata like resolution or

duration. If the sourcing process was well-structured, you might have videos

grouped by domain or category, but even then, they’re not ready for training.

The problems are straightforward but critical: there’s no guiding information

(captions or prompts) for the model to learn from, and the clips are often far

too long for most generative architectures, which tend to work with a context

window (length of the video, like number of tokens for Large Language Models)

measured in tens of seconds, not minutes. ... It might seem like the fastest

approach is to label every scene you have. In reality, that’s a direct route to

poor results. After all the previous steps, a dataset is rarely clean: it almost

always contains broken clips, low-quality frames, and clusters of near-identical

segments. The filtering stage exists to strip out this noise, leaving the

model only with content worth learning from. This ensures that the model doesn’t

spend time on data that won’t improve its output. ... Building a proper

text-to-video dataset is an extremely complex task. However, it is impossible to

build a text-to-video generation model without a good dataset.
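
To make the splitting and filtering steps a little more concrete, here is a minimal Python sketch working on clip metadata only. Everything in it is an assumption made for illustration: the `Clip` fields, the 10-second window, the duration and resolution thresholds, and the idea of comparing a precomputed perceptual-hash fingerprint by Hamming distance. The article does not say which checks or tools its pipeline actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    path: str
    duration_s: float  # very short or zero durations are treated as broken
    height: int        # vertical resolution in pixels
    fingerprint: int   # hypothetical perceptual hash of a key frame


def split_into_windows(duration_s, max_len_s=10.0):
    """Cut a long video into consecutive (start, end) windows of at most
    `max_len_s` seconds, so each piece fits the model's context window."""
    windows = []
    start = 0.0
    while start < duration_s:
        end = min(start + max_len_s, duration_s)
        windows.append((start, end))
        start = end
    return windows


def hamming(a, b):
    """Number of differing bits between two integer fingerprints."""
    return bin(a ^ b).count("1")


def filter_clips(clips, min_duration_s=2.0, min_height=480, max_hamming=4):
    """Drop broken or low-resolution clips and near-duplicates of clips
    that were already kept."""
    kept = []
    for clip in clips:
        if clip.duration_s < min_duration_s or clip.height < min_height:
            continue  # broken or low-quality
        if any(hamming(clip.fingerprint, k.fingerprint) <= max_hamming for k in kept):
            continue  # near-identical to something we already have
        kept.append(clip)
    return kept


clips = [
    Clip("a.mp4", 8.0, 720, 0b11110000),
    Clip("b.mp4", 8.0, 720, 0b11110001),  # one bit away from a.mp4
    Clip("c.mp4", 0.0, 720, 0x123456),    # broken: zero duration
    Clip("d.mp4", 8.0, 240, 0xABCDEF),    # resolution too low
    Clip("e.mp4", 6.0, 1080, 0xFFFF00),
]
print(split_into_windows(25.0))                # [(0.0, 10.0), (10.0, 20.0), (20.0, 25.0)]
print([c.path for c in filter_clips(clips)])   # ['a.mp4', 'e.mp4']
```

The pairwise duplicate check is quadratic in the number of kept clips, which is fine for a sketch but would need an index or clustering step on a dataset of realistic size.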

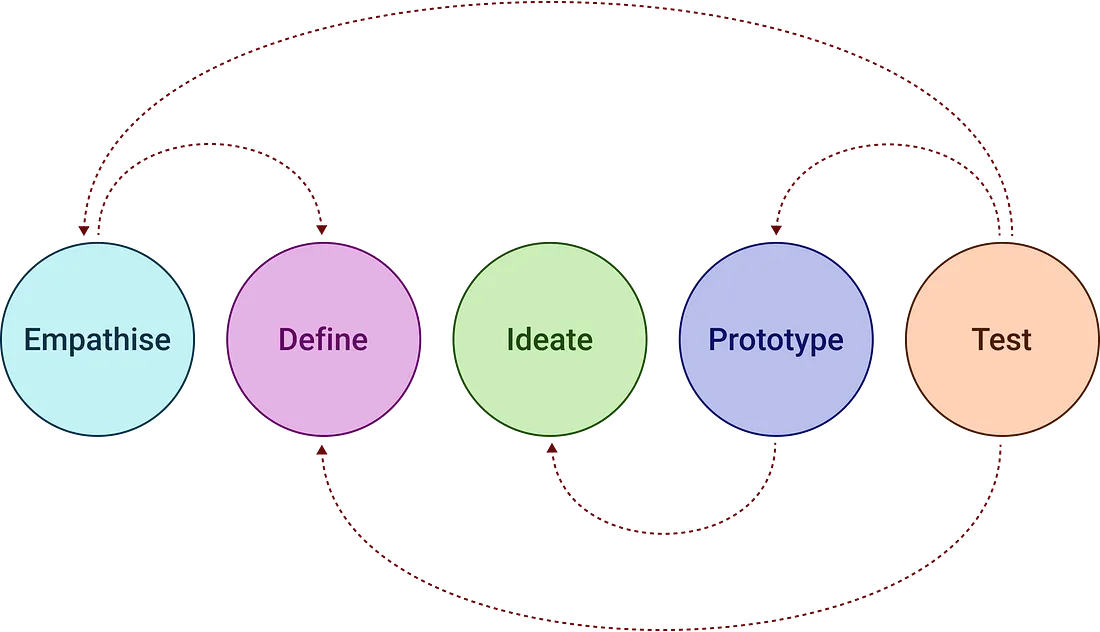

Putting Design Thinking into Practice: A Step-by-Step Guide

The key aim of this part of the design process is to frame your problem

statement. This will guide the rest of your process. Once you’ve gathered

insights from your users, the next step is to distil everything down to the real

issue. There are many ways to do this, but if you’ve spoken to several users,

start by analysing what they said to find patterns — what themes keep coming up,

and what challenges do they all seem to face? ... Once you’ve got your problem

statement, the next step is to start coming up with ideas. This is the fun part!

The aim of this part of idea generation is not to find the perfect idea straight

away, but to come up with as many ideas as possible. Start by brainstorming

everything that comes to mind, no matter how unrealistic it sounds. At this

point, quantity matters more than

quality — you can always refine later. Write your ideas down, sketch them, or

talk them through with friends or teammates. You might be surprised at how one

silly suggestion sparks a genuinely good idea. ... Testing is the “last” stage

of the design process. I say last with a bit of hesitation, because while it is

technically last on the diagram, you are guaranteed to get a lot of feedback

that will require you to go back to earlier stages of the design process and

revisit ideas.

Beyond Resilience: How AI and Digital Twin technology are rewriting the rules of supply chain recovery

For decades, supply chain resilience meant having backup plans, alternate

suppliers, safety stock, and crisis playbooks. That model doesn’t hold anymore.

In a post-pandemic world shaped by trade wars, climate volatility, and

technology shocks, disruptions are neither rare nor isolated. They’re

structural. ... The KPIs of resilience have evolved. In most companies,

traditional metrics like on-time delivery or supplier lead time fail to capture

the system’s true flexibility. Modern analytics teams are redefining the

measurement architecture around three key indicators: Mean time to recovery

(MTTR): the time between initial disruption and full operational

stability; Conditional value-at-risk (CVaR): a probabilistic measure of

financial exposure under extreme stress; Supply network resilience index (SNRI):

a composite score tracking substitution agility and cross-tier visibility. ... A

hidden benefit of this new approach is its environmental alignment. When

Schneider Electric built a multi-tier AI twin for its Asia-Pacific operations,

it discovered that optimizing for resilience (diversifying ports, balancing lead

times, and automating inventory allocation) also reduced carbon intensity per

unit shipped by 12%. This was not the goal, but it proved that sustainability

and resilience share a common denominator: efficiency. The smarter the network,

the smaller its waste footprint. In boardrooms today, that realization is

quietly rewriting ESG strategy.
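
For readers who want to see what the first two indicators look like as arithmetic, here is a small Python sketch. It assumes MTTR is the average gap between disruption and recovery across incidents, and it estimates CVaR empirically as the average of the worst fraction of simulated losses, which is one common estimator but not necessarily the one these analytics teams use. The incident times and loss figures are made up for the example. SNRI is left out because the article does not give its formula.

```python
import math


def mean_time_to_recovery(incidents):
    """Average of (recovered - disrupted) over a list of incidents,
    each given as a (disrupted_at, recovered_at) pair in hours."""
    durations = [recovered - disrupted for disrupted, recovered in incidents]
    return sum(durations) / len(durations)


def cvar(losses, tail_fraction=0.2):
    """Empirical conditional value-at-risk: the mean of the worst
    `tail_fraction` of the observed or simulated losses."""
    ordered = sorted(losses, reverse=True)  # largest losses first
    # The small tolerance guards against floating-point overshoot in n * fraction.
    tail_size = max(1, math.ceil(len(ordered) * tail_fraction - 1e-9))
    tail = ordered[:tail_size]
    return sum(tail) / len(tail)


# Hypothetical incidents: (hour disrupted, hour fully stable again).
incidents = [(0, 48), (10, 34), (5, 17)]
print(mean_time_to_recovery(incidents))  # 28.0

# Hypothetical simulated losses per scenario, in millions.
losses = [1, 2, 3, 4, 5, 6, 7, 8, 9, 40]
print(cvar(losses, tail_fraction=0.2))   # 24.5
```

The CVaR figure shows why the measure is useful for stress scenarios: the plain average loss here is 8.5, while the average of the worst 20% of scenarios is 24.5.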
