Post-Quantum Cryptography: The lynchpin of future cybersecurity

Since we are still at least a decade away from an ideal quantum computer, this may not seem like an imminent threat. That impression is misleading, because annealing quantum computers are already a reality. While they cannot run Shor’s algorithm, they can attack the factoring problem by formulating it as an optimization problem, and progress has already been made along these lines. Furthermore, there is the problem of “harvest now, decrypt later”: an attacker can steal encrypted data now, wait until quantum computers become a practical reality, and decrypt it then. Quantum computers therefore pose a very real threat today, even before capable machines exist. There is a distinct possibility that large amounts of data have already been compromised, so addressing this problem is an immediate concern, which is why incorporating PQC into current encryption protocols is imperative. For instance, according to IBM’s “Cost of a Data Breach Report 2023,” more than 95 percent of the organisations studied globally have experienced more than one data breach.
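
To make "formulating factoring as an optimization problem" concrete, here is a minimal sketch in Java. It assumes a cost function of the form (N - p*q)^2, which is zero exactly when p and q are factors of N. The class and method names are hypothetical, and the exhaustive search is only a classical stand-in for an annealer: it shows the shape of the objective, not how annealing hardware explores it.

```java
class FactoringAsOptimization {
    // Cost is zero exactly when p * q == n; larger otherwise.
    static long cost(long n, long p, long q) {
        long diff = n - p * q;
        return diff * diff;
    }

    // Exhaustively searches odd candidates below 2^bits for the pair
    // with the lowest cost. An annealer would search this landscape
    // physically; brute force is used here purely for illustration.
    static long[] minimize(long n, int bits) {
        long limit = 1L << bits;
        long bestCost = Long.MAX_VALUE;
        long[] best = {0, 0};
        for (long p = 3; p < limit; p += 2) {
            for (long q = p; q < limit; q += 2) {
                long c = cost(n, p, q);
                if (c < bestCost) {
                    bestCost = c;
                    best = new long[] {p, q};
                }
            }
        }
        return best;
    }

    public static void main(String[] args) {
        long[] factors = minimize(143, 4);
        System.out.println(factors[0] + " x " + factors[1]); // prints "11 x 13"
    }
}
```

The toy instance (143 with 4-bit candidates) is chosen only so the search space is tiny; real cryptographic moduli are far beyond this approach.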

Payments for net zero – How the payments industry can contribute towards decarbonisation

It is crucial to involve senior leaders in comprehending the compelling reasons

for both commercial and societal urgency to decarbonise. Furthermore, gaining

insight into how various stakeholders (ranging from employees, investors, and

regulators to civil society) are progressively aligning with the necessity for

businesses and society to undergo decarbonisation will fortify the approach.

This alignment creates a potent mandate and a unique opportunity for the payment

network to discern and investigate its distinct role in facilitating the

transition toward net zero. ... Payment networks & fintechs should allocate

sufficient resources to explore alignment between their core capabilities and

sectors/systems needing to decarbonise. This may involve investing in

sustainability and climate change expertise within core teams such as data,

product innovation, and strategy. Additionally, conducting robust research on

trends and carbon impacts in various economic sub-sectors can help overlay

payment networks’ capacities to pinpoint net-zero solutions. Engaging with

external stakeholders can also aid in identifying and testing potential

opportunity areas.

How AI-assisted code development can make your IT job more complicated

Increased use of AI will also mean personalization becomes an important skill

for developers. Today's applications "need to be more intuitive and built with

the individual user in mind versus a generic experience for all," says Lobo.

"Generative AI is already enabling this level of personalization, and most of

the coding in the future will be developed by AI." Despite the rise of

generative technology, humans will still be required at key points in the

development loop to assure quality and business alignment. "Traditional

developers will be relied upon to curate the training data that AI models use

and will examine any discrepancies or anomalies," Lobo adds. Technology managers

and professionals will need to assume more expansive roles within the business

side to ensure that the increased use of AI-assisted code development serves its

purpose. We can expect this focus on business requirements to lead to a growth

in responsibility via roles such as "ethical AI trainer, machine language

engineer, data scientist, AI strategist and consultant, and quality assurance,"

says Lobo.

The more the CIO can function as a centralized source for technology

resources, the better, says Ping Identity’s Cannava, who sees this transpiring

in three phases, depending on the maturity of the organization. In Phase 1,

the CIO is the clearinghouse for current technology projects, taking on the

traditional role as in-house consultant. In Phase 2, the CIO becomes the

clearinghouse for data within the organization. “In many cases, we are the

keepers of the keys to datasets,” he says. “We have the ability to bring

datasets together, and those insights could drive what the agenda is for the

business. They could show us where we have the opportunity to improve our

go-to-market. So having that access to the insights driving business

intelligence initiatives has allowed us to expand our seat at the table.” In

Phase 3, the CIO also becomes the clearinghouse for emerging technologies.

Because, he says, to truly unlock the potential of all that data, you need

artificial intelligence. And that raises some immediate questions for CIOs who

want to be orchestrators.

How DoorDash Migrated from Aurora Postgres to CockroachDB

Until the monolith was broken up, it offered a single view of the toll that

demand for the application was taking on the databases. But once that monolith

was broken into microservices, that visibility would disappear. “Our biggest

enemy was the single primary architecture of our database,” Salvatori said.

“And our North Star would be to move to a solution that offered multiple

writers.” In the meantime, the DoorDash team adopted a “poor man’s solution”

approach to dealing with its overmatched database architecture, Salvatori told

the Roachfest audience: building vertical federation of tables, while not

blocking microservices extractions. In this game of “whack-a-mole,” he said,

“Different tables would be able to get their own single writer and therefore

scale a little bit and allow us to keep the lights on for a little bit longer.

But we needed to take steps toward limitless horizontal scalability.”

CockroachDB, a distributed SQL database management system, seemed like the right

answer.

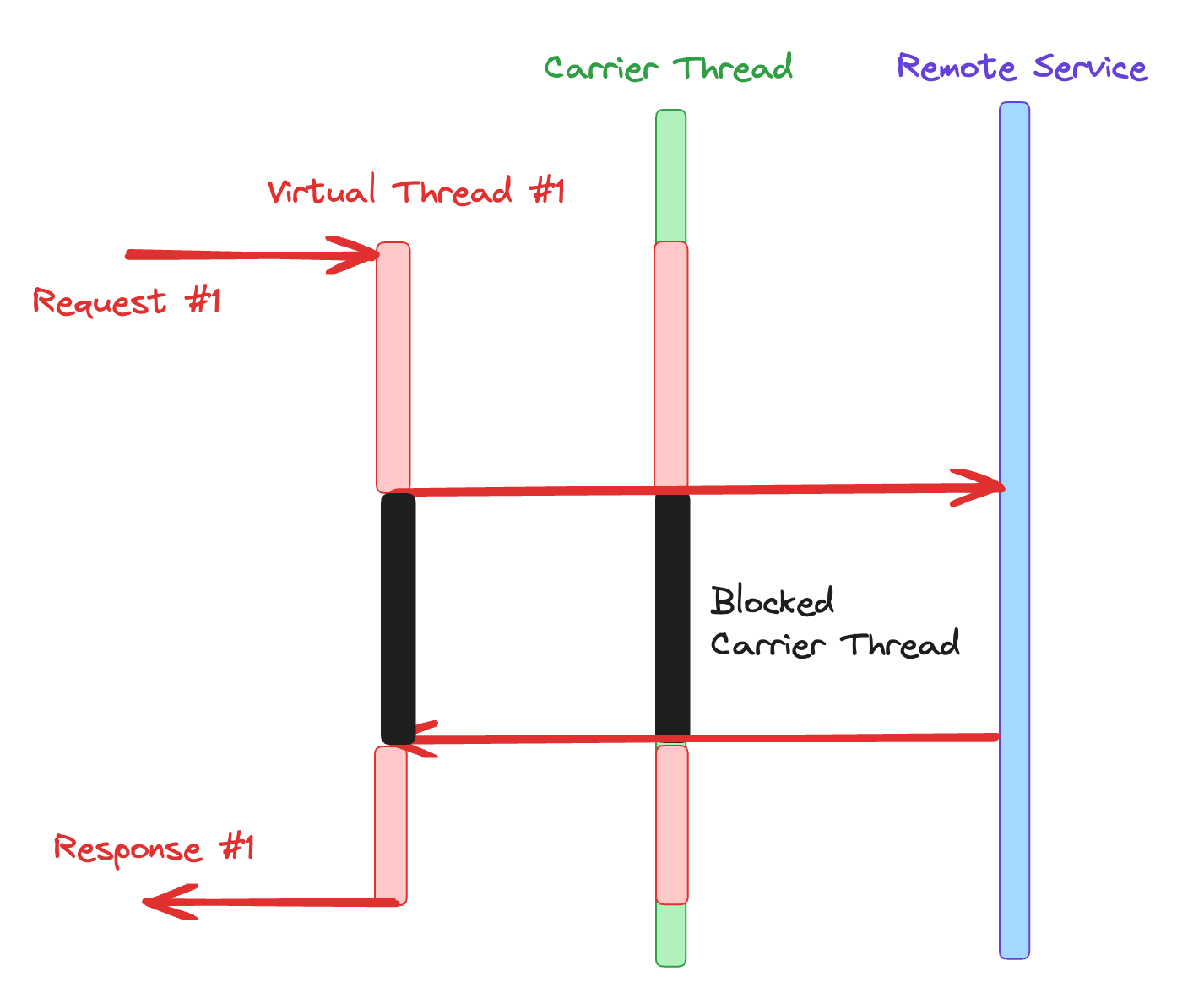

Taming the Virtual Threads: Embracing Concurrency With Pitfall Avoidance

When a virtual thread needs to process a long computation, the virtual thread

excessively occupies its carrier thread, preventing other virtual threads from

utilizing that carrier thread. For example, when a virtual thread repeatedly

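performs blocking operations, such as waiting for input or output, while it is

pinned to its carrier (in Java 21, inside a synchronized block or during a

native call), it cannot unmount and so

monopolizes the carrier thread, preventing other virtual threads from making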

progress. Inefficient resource management within the virtual thread can also

lead to excessive resource utilization, causing monopolization of the carrier

thread. Monopolization can have detrimental effects on the performance and

scalability of virtual thread applications. It can lead to increased

contention for carrier threads, reduced overall concurrency, and potential

deadlocks. To mitigate these issues, developers should strive to minimize

monopolization by breaking down lengthy computations into smaller, more

manageable tasks to allow other virtual threads to interleave and utilize the

carrier thread.
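
The advice about splitting lengthy computations can be sketched as follows. This is a minimal illustration, assuming Java 21 or later; the class name, method names, and the sum-of-squares workload are hypothetical placeholders for any CPU-bound job. Each chunk runs as its own short virtual thread, so the scheduler gets a chance to run other virtual threads between chunks instead of waiting for one long task to finish.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ChunkedComputation {
    // The CPU-bound work for one chunk: sum of i*i for from <= i < to.
    static long sumOfSquares(long from, long to) {
        long sum = 0;
        for (long i = from; i < to; i++) {
            sum += i * i;
        }
        return sum;
    }

    // Splits [0, n) into chunks and runs each chunk in its own virtual thread.
    static long chunkedSumOfSquares(long n, long chunkSize) {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<Long>> parts = new ArrayList<>();
            for (long start = 0; start < n; start += chunkSize) {
                final long from = start;
                final long to = Math.min(n, start + chunkSize);
                parts.add(executor.submit(() -> sumOfSquares(from, to)));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get(); // waits for that chunk to finish
            }
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("chunked computation failed", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(chunkedSumOfSquares(1_000, 100));
    }
}
```

The chunk size is a tuning knob: smaller chunks give the scheduler more opportunities to interleave other work, at the cost of more task overhead. For work that is purely CPU-bound, a conventional platform-thread pool may still be the better fit.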

The all-flash datacentre: Mirage or imminent reality?

The initial advantage of flash over HDDs was speed. Flash was adopted in

workstations and laptops, and in enterprise servers running

performance-critical and especially I/O-dependent applications. Flash’s

performance edge is greatest on random reads and writes. The gap is narrower

for sequential read/write operations. A well-configured HDD array with

flash-based caching comes close enough to all-flash speeds in real-world

environments. “It does depend what infrastructure you have and what

characteristics you are looking for from your storage,” says Roy Illsley,

chief analyst of IT operations at Omdia. “That includes performance on read,

on writes, capacity. The most appropriate [storage] for your needs could be

flash, or just as equally spinning media. All flash datacentres may be a

reality where workloads require the strength of flash, but I am not expecting

all-flash datacentres to become commonplace.” According to Rainer Kaise, senior

manager of business development at Toshiba Electronics Europe – a hard drive

manufacturer – 85% of the world’s online media is still stored on HDDs.

How cybersecurity teams should prepare for geopolitical crisis spillover

It is one thing to understand why geopolitical spillover impacts private

enterprise but another to be able to assign any kind of probability of risk to

it. Fortunately, research on global cyber conflict and enterprise

cybersecurity provides a reasonable starting point for dealing with this

uncertainty. Scholars and policy commentators are interested in linking the

realities of cyber operations to situational risk profiles, particularly for

non-degradation threats for which traditional security assessment processes

tend to be sufficient. Performative attacks come with perhaps the most obvious

set of threat indicators. Companies that are "named and shamed" during

geopolitical crisis moments tend to have one of two characteristics. First,

their symbolic profile is constitutionally indivisible in the context of the

current conflict. This means that a firm, through its statements, actions, or

productions, clearly underwrites one side in the conflict. Media organizations that

consistently toe a national line such as Russia's Pravda are an example of

this, but so are firms with leaders or major stakeholders belonging to ethnic,

religious, or linguistic backgrounds pertinent to a crisis.

Can cloud computing be truly federated?

The core idea is to save money, but it requires accepting that the physical

resources could be scattered in any system willing to be part of the federated

cloud. I’m not going to think about this in silly ways, in that we’re going to

take over someone’s smartwatch as a peer node, but there is a vast quantity of

underutilized hardware out there, still running and connected to a network in

an enterprise data center that could be leveraged for this model. The idea of

a federated public cloud service does exist today at varying degrees of

maturation, so please don’t send me an angry email telling me your product has

been doing this for years and that I’m somehow a bad person for not knowing it

existed. As I said, federation is an old architectural concept many have

adopted. What is new is bringing it to a widely used public cloud computing

platform, which we haven’t seen yet for the most part. In this approach, a

centralized system coordinates the provisioning of traditional cloud services

such as storage and computing between the requesting peer and a peer that

could provide that service.
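
The coordination model described above can be sketched in a few lines of Java. This is an illustration only, not any vendor's design: the `FederationCoordinator` class, the `Peer` record, and the first-fit matching rule are all assumptions made for the example. A central coordinator keeps a registry of peers with spare storage and matches each request to the first other peer that can serve it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

class FederationCoordinator {
    // A participating system and the storage it is willing to share.
    record Peer(String name, int freeStorageGb) {}

    private final List<Peer> peers = new ArrayList<>();

    void register(Peer peer) {
        peers.add(peer);
    }

    // First-fit matching: returns the first peer, other than the requester,
    // with enough spare storage, and deducts the amount from its capacity.
    Optional<Peer> provision(String requester, int storageGb) {
        for (int i = 0; i < peers.size(); i++) {
            Peer candidate = peers.get(i);
            if (!candidate.name().equals(requester)
                    && candidate.freeStorageGb() >= storageGb) {
                peers.set(i, new Peer(candidate.name(),
                        candidate.freeStorageGb() - storageGb));
                return Optional.of(candidate);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        FederationCoordinator coordinator = new FederationCoordinator();
        coordinator.register(new Peer("dc-a", 50));
        coordinator.register(new Peer("dc-b", 200));
        System.out.println(coordinator.provision("dc-a", 100)
                .map(Peer::name).orElse("no peer available"));
    }
}
```

A real service would also need metering, trust, and failure handling between peers; the sketch covers only the matching step.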

How AI is revolutionizing “shift left” testing in API security

SAST and DAST are well-established web application tools that can be used for

API testing. However, the complex workflows associated with APIs can result in

an incomplete analysis by SAST. At the same time, DAST cannot provide an

accurate assessment of the vulnerability of an API without more context on how

the API is expected to function correctly, nor can it interpret what

constitutes a successful business logic attack. In addition, while security

and AppSec teams are at ease with SAST and DAST, developers can find them

challenging to use. Consequently, we’ve seen API-specific test tooling gain

ground, enabling things like continuous validation of API specifications. API

security testing is increasingly being integrated into the API security

offering, translating into much more efficient processes, such as

automatically associating appropriate APIs with suitable test cases. A major

challenge with any application security test plan is generating test cases

tailored explicitly for the apps being tested before release.

Quote for the day:

"The first step toward success is

taken when you refuse to be a captive of the environment in which you first

find yourself." -- Mark Caine
