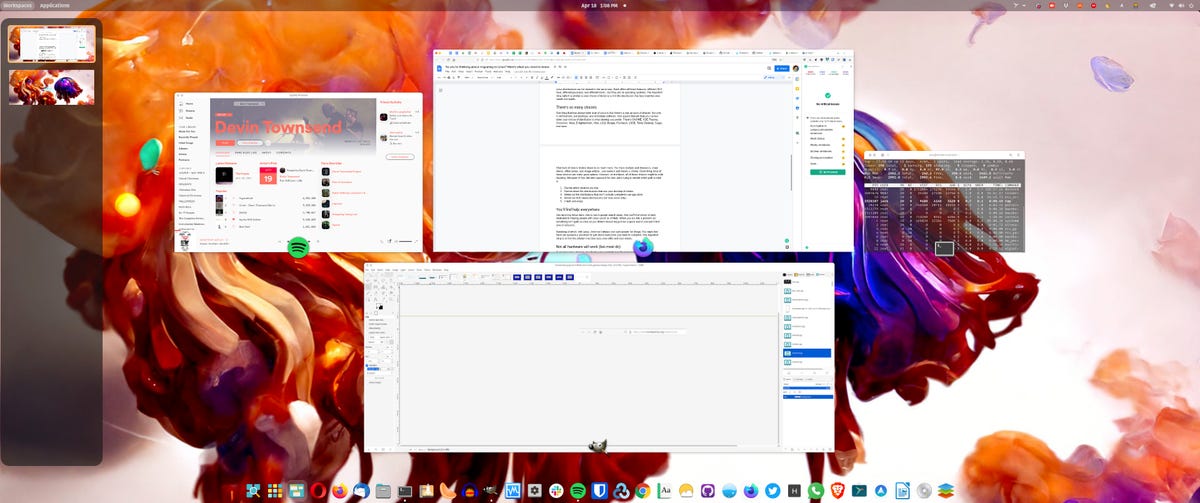

So you're thinking about migrating to Linux? Here's what you need to know

The Linux desktop is so easy. It really is. Developers and designers of most distributions have gone out of their way to ensure the desktop operating system is easy to use. Back in the early years of Linux, the command line was an absolute necessity. Today? Not so much. In fact, Linux has become so easy and user-friendly that you could go your entire career on the desktop and never touch the terminal window. That's right: today's Linux is all about the GUI, and the GUIs are good. If you can use macOS or Windows, you can use Linux. It doesn't matter how skilled you are with a computer; Linux is a viable option. In fact, I'd go so far as to say that the less skill you have with a computer, the better off you are with Linux. Why? Linux is far less "breakable" than Windows. You really need to know what you're doing to break a Linux system. One very quick way to start an argument within the Linux community is to say Linux isn't just a kernel. In a similar vein, a very quick way to confuse a new user is to tell them that Linux is only the kernel. ... Yes, Linux uses the Linux kernel. All operating systems have a kernel, but you never hear Windows or macOS users talk about which kernel they use.

Purpose is a two-way street

There’s a broader redefinition of purpose underway, for both organizations and individuals. Today, people don’t have just one career in a lifetime but five or six, and their goals and purpose vary at each stage. At the same time, organizations can’t address or engage the broad range of stakeholders they deal with through a single purpose. Together, these shifts are ushering in the concept of purpose as a “cluster” of goals and experiences, with different aspects resonating with different stakeholders at different times. The same cluster concept holds true for career paths. It is vital to expand the conversation about the varied, unique options people have to fulfill their goals. Companies must strive to make those options more transparent, individualized, and flexible, and less linear. For today’s employees, the point of a career path is not necessarily to climb a ladder with a particular end state in mind but to gain experience and pursue their own purpose, a purpose that may shift and evolve over time. To that end, it may make sense for organizations to create paths that allow employees to move within, across, and even outside the organization, not just up, to achieve their goals.

How algorithmic automation could manage workers ethically

Mewies says bias in automated systems creates significant risks for employers that use them to select people for jobs or promotion, because it may contravene anti-discrimination law. For projects involving systematic or potentially harmful processing of personal data, organisations have to carry out a privacy impact assessment, she says. “You have to satisfy yourself that where you were using algorithms and artificial intelligence in that way, there was going to be no adverse impact on individuals.” But even when it is not required, undertaking a privacy impact assessment is a good idea, says Mewies, adding: “If there was any follow-up criticism of how a technology had been deployed, you would have some evidence that you had taken steps to ensure transparency and fairness.” ... Antony Heljula, innovation director at Chesterfield-based data science consultancy Peak Indicators, says data models can exclude sensitive attributes such as race, but this is far from foolproof, as Amazon showed a few years ago when it built an AI CV-rating system trained on a decade of applications, only to find that it discriminated against women.
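Heljula's point that excluding a sensitive attribute is not foolproof can be illustrated with a toy example. The sketch below is entirely hypothetical: the data, the `mentions_womens_club` proxy feature and the function names are invented for illustration and do not describe Amazon's system or Peak Indicators' methods. The "model" only learns historical hire rates for each value of the proxy feature and never reads the gender column, yet an audit of its selections still shows a gap between genders.

```python
from collections import defaultdict

# Hypothetical historical applications: (gender, mentions_womens_club, hired).
# Past hiring decisions were skewed; gender is kept only for auditing outcomes.
HISTORY = [
    ("M", False, True), ("M", False, True), ("M", False, True), ("M", False, False),
    ("F", True, False), ("F", True, False), ("F", True, True), ("F", False, True),
]


def train_proxy_scorer(history):
    """Learn the historical hire rate for each value of the proxy feature.

    The gender column is deliberately ignored, mimicking a model that
    "excludes" the sensitive attribute.
    """
    counts = defaultdict(lambda: [0, 0])  # proxy value -> [hires, applications]
    for _gender, proxy, hired in history:
        counts[proxy][0] += int(hired)
        counts[proxy][1] += 1
    return {value: hires / total for value, (hires, total) in counts.items()}


def selection_rates(applicants, scorer, threshold=0.5):
    """Audit step: share of each gender selected when score >= threshold."""
    selected = defaultdict(int)
    totals = defaultdict(int)
    for gender, proxy in applicants:
        totals[gender] += 1
        if scorer[proxy] >= threshold:
            selected[gender] += 1
    return {g: selected[g] / totals[g] for g in totals}


scorer = train_proxy_scorer(HISTORY)  # {False: 0.8, True: 0.333...}
applicants = [("M", False), ("M", False), ("F", True), ("F", False)]
print(selection_rates(applicants, scorer))  # {'M': 1.0, 'F': 0.5}
```

Because the proxy feature correlates with gender in the historical data, the scorer selects women at half the rate of men without ever seeing gender. Auditing outcomes by group, rather than relying on feature exclusion alone, is one way such an effect can surface.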

The changing role of the CCO: Champion of innovation and business continuity

The best CCOs partner with the business to really understand how to place gates and controls that mitigate risk while still allowing the business to operate at maximum efficiency. One area of the business that is particularly valuable is the IT department, which can help CCOs maintain and provide systematic proof of adherence to both internal policies and the external laws, guidelines or regulations imposed on the company. With a dedicated IT resource, CCOs do not have to wait for the next programme increment (PI), sprint planning or IT resourcing availability. Instead, they can be agile and proactive in meeting business growth and revenue objectives. Technical resources can be used for project governance, systems review, data science, anti-money laundering (AML) and operational analytics, as well as to support audit and reporting with internal and external stakeholders, investors, regulators, creditors and partners. Ultimately, this partnership between IT and CCOs allows a business to make data-driven decisions that meet compliance as well as corporate growth mandates.

IT Admins Need a Vacation

An unhappy sysadmin can breed apathy, and an apathetic attitude is especially problematic when sysadmins are responsible for cybersecurity. Even in organizations where cybersecurity and IT are separate, sysadmins affect cybersecurity in some way, whether through patching, performing data backups, or reviewing logs. This problem is industry-wide, and it will take more than one person to solve it, but I’m in a unique position to talk about it. I’ve held sysadmin roles, and I’m the co-founder and CTO of a threat detection and response company where I oversee technical operations. One of my top priorities is building solutions that won’t tip over and require significant on-call support. The tendency to paper over a problem with round-the-clock human effort is a tragedy in the IT space, and it should be solved with technology wherever possible. As someone who manages on-call employees and is still on call myself, I need to be in tune with the mental health of my team members and support them to prevent burnout. I need to advocate for my employees to be compensated generously, and to appreciate and reward them for a job well done.

The steady march of general-purpose databases

Brian Goetz has a funny way of explaining the phenomenon, called Goetz’s Law: “Every declarative language slowly slides towards being a terrible general-purpose language.” Perhaps a more useful explanation comes from Stephen Kell, who argues that “the endurance of C is down to its extreme openness to interaction with other systems via foreign memory, FFI, dynamic linking, etc.” In other words, C endures because it takes on more functionality, allowing developers to use it for more tasks. That’s good, but I like Timothy Wolodzko’s explanation even more: “As an industry, we're biased toward general-purpose tools [because it’s] easier to hire devs, they are already widely adopted (because being general purpose), often have better documentation, are better maintained, and can be expected to live longer.” Some of this merely describes the results of network effects, but how being general-purpose enables those network effects is the more interesting observation. Similarly, one commenter on Bernhardsson’s post suggests, “It's not about general versus specialized” but rather “about what tool has the ability to evolve.”

Open-Source NLP Is A Gift From God For Tech Start-ups

Recently, however, open research efforts like EleutherAI have lowered the barriers to entry. A grassroots collective of AI researchers, EleutherAI aims to eventually deliver the code and datasets needed to run a model similar (though not identical) to GPT-3. The group has already released a dataset called ‘The Pile’ that is designed to train large language models to complete text and write code, among other things. (As it happens, Megatron 530B was trained on The Pile.) And in June, EleutherAI made available under the Apache 2.0 license GPT-Neo and its successor, GPT-J, a language model that performs nearly on par with an equivalently sized GPT-3 model. One of the startups serving EleutherAI’s models as a service is NLP Cloud, which was founded a year ago by Julien Salinas, a former developer at Hunter.io and the founder of money-lending service StudyLink.fr.

SQL and Complex Queries Are Needed for Real-Time Analytics

While taking the NoSQL road is possible, it’s cumbersome and slow. Take an individual applying for a mortgage. To analyze their creditworthiness, you would create a data application that crunches data such as the person’s credit history, outstanding loans and repayment history. To do so, you would need to combine several tables of data, some of which might be normalized and some of which are not. You might also analyze current and historical mortgage rates to determine what rate to offer. With SQL, you could simply join tables of credit histories and loan payments together and aggregate large-scale historical data sets, such as daily mortgage rates. However, using something like Python or Java to manually recreate the joins and aggregations would multiply the lines of code in your application tenfold or even a hundredfold compared to SQL. More application code not only takes more time to create, but it almost always results in slower queries. Without access to a SQL-based query optimizer, accelerating queries is difficult and time-consuming because there is no demarcation between the business logic in the application and the query-based data access paths used by the application.
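To make the comparison concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a real analytics database. The tables (`credit_history`, `loan_payments`, `mortgage_rates`), their columns and the sample rows are hypothetical. The SQL version declares the join and aggregations once and leaves the execution plan to the engine; `manual_applicant_summary` (also hypothetical) fetches raw rows and recreates the same inner join and aggregation in application code. Even at this toy scale the manual version is longer, though a sketch like this says nothing about query speed.

```python
import sqlite3
from collections import defaultdict


def build_db():
    """Create an in-memory database with small, hypothetical sample tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE credit_history (applicant_id INTEGER PRIMARY KEY, score INTEGER);
        CREATE TABLE loan_payments (applicant_id INTEGER, amount REAL, on_time INTEGER);
        CREATE TABLE mortgage_rates (day TEXT, rate REAL);
    """)
    conn.executemany("INSERT INTO credit_history VALUES (?, ?)", [(1, 720), (2, 640)])
    conn.executemany("INSERT INTO loan_payments VALUES (?, ?, ?)",
                     [(1, 500.0, 1), (1, 500.0, 1), (2, 300.0, 0), (2, 300.0, 1)])
    conn.executemany("INSERT INTO mortgage_rates VALUES (?, ?)",
                     [("2022-01-03", 3.25), ("2022-01-04", 3.75), ("2022-02-01", 4.0)])
    return conn


# Join credit histories with loan payments and aggregate per applicant, declaratively.
APPLICANT_SQL = """
    SELECT c.applicant_id, c.score, COUNT(*) AS payments, AVG(p.on_time) AS on_time_ratio
    FROM credit_history AS c
    JOIN loan_payments AS p ON p.applicant_id = c.applicant_id
    GROUP BY c.applicant_id, c.score
    ORDER BY c.applicant_id
"""

# Aggregate daily mortgage rates into monthly averages.
MONTHLY_RATE_SQL = """
    SELECT substr(day, 1, 7) AS month, AVG(rate) FROM mortgage_rates
    GROUP BY month ORDER BY month
"""


def manual_applicant_summary(conn):
    """Hand-written equivalent of APPLICANT_SQL: fetch raw rows, then join and aggregate."""
    scores = dict(conn.execute("SELECT applicant_id, score FROM credit_history"))
    totals = defaultdict(lambda: [0, 0])  # applicant_id -> [payment count, on-time count]
    for applicant_id, _amount, on_time in conn.execute("SELECT * FROM loan_payments"):
        if applicant_id in scores:  # inner-join semantics: skip payments with no credit record
            totals[applicant_id][0] += 1
            totals[applicant_id][1] += on_time
    return [(aid, scores[aid], n, on_n / n) for aid, (n, on_n) in sorted(totals.items())]


conn = build_db()
sql_rows = conn.execute(APPLICANT_SQL).fetchall()
assert sql_rows == manual_applicant_summary(conn)
print(sql_rows)  # [(1, 720, 2, 1.0), (2, 640, 2, 0.5)]
print(conn.execute(MONTHLY_RATE_SQL).fetchall())  # [('2022-01', 3.5), ('2022-02', 4.0)]
```

The manual function reproduces only one of the two queries; each further join, filter or aggregation would need more code of the same kind, whereas the SQL version changes only the query text.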

Lack of expertise hurting UK government’s cyber preparedness

In France, security pros tended to find tender and bidding processes more of an issue, but also cited a lack of trusted partners and budget, and ignorance of cyber among organisational leadership. German respondents also faced problems with tendering, as well as problems similar to those of both the British and French. From a technological perspective, UK-based respondents cited endpoint detection and response (EDR) and extended detection and response (XDR), and cloud security modernisation, as the most mature defensive solutions, with 37% saying they were “fully deployed” in this area. Zero trust trailed at 32%, and multi-factor authentication (MFA) was cited by 31%; Brits also tended to think MFA was more difficult than average to implement. The French, on the other hand, are doing much better on MFA, with 47% of respondents claiming full deployment, 35% saying they had fully deployed EDR-XDR, and 33% and 30% saying they had fully implemented cloud security modernisation and zero trust, respectively. By contrast, the Germans tended to be better on cloud security modernisation, which 40% claimed to have fully implemented, followed by zero trust at 32%, MFA at 30% and EDR-XDR at 27%.

Scrum Master Anti-Patterns

The reasons Scrum Masters violate the spirit of the Scrum Guide are multi-faceted. They range from ill-suited personal traits to pursuing their own agendas to frustration with the Scrum team. Some often-observed reasons are:

Ignorance or laziness: One size of Scrum fits every team. Your Scrum Master learned the trade in a specific context and is now rolling out precisely this pattern in whatever organization they are active in, no matter the context. Why go through the hassle of teaching, coaching, and mentoring if you can shoehorn the “right way” directly into the Scrum team?

Lack of patience: Patience is a critical resource that a successful Scrum Master needs in abundance. But, of course, there is no fun in readdressing the same issue several times, perhaps rephrasing it, if the solution is so obvious, at least from the Scrum Master’s perspective. So why not tell the team how to do it ‘right’ all the time, thus becoming more efficient? Too bad that Scrum cannot be pushed but needs to be pulled; that’s the essence of self-management.

Dogmatism: Some Scrum Masters believe in applying the Scrum Guide literally, which will unavoidably cause friction, as Scrum is a framework, not a methodology.

Quote for the day:

"No organization should be allowed near disaster unless they are willing to cooperate with some level of established leadership." -- Irwin Redlener
