Quote for the day:

"The litmus test for our success as Leaders is not how many people we are leading, but how many we are transforming into leaders" -- Kayode Fayemi

Why agentic AI and unified commerce will define ecommerce in 2026

Agentic AI and unified commerce are set to shape ecommerce in 2026 because the foundations are now in place: consumers are increasingly comfortable using AI tools, and retailers are under pressure to operate seamlessly across channels.

... When inventory, orders, pricing, and customer context live in disconnected systems, both humans and AI struggle to deliver consistent experiences. When those systems are unified, retailers can enable more reliable automation, better availability promises, and more resilient fulfillment, especially at peak.

... Unified commerce platforms matter because they provide a single operational framework for inventory, orders, pricing, and customer context. That coordination is increasingly critical as more interactions become automated or AI-assisted.

... The shift toward “agentic” happens when AI can safely take actions, like resolving a customer service step, updating a product feed, or proposing a replenishment recommendation, based on reliable data and explicit rules. That’s why unified commerce matters: it reduces the risk of automation acting on partial truth. Because ROI varies dramatically by category, maturity, and data quality, it’s safer to avoid generic percentage claims. The defensible message is: companies that pair AI with clean operational data and clear governance will unlock automation faster and with fewer reputational risks.

... Ultimately, success in 2026 will not be defined by how many AI features a retailer deploys, but by how well their systems can interpret context, act reliably, and scale under pressure.
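To make "reliable data and explicit rules" concrete, here is a minimal Python sketch of a guard that lets an agent promise availability only when every inventory source is fresh and the combined stock covers the request. Otherwise, it escalates instead of acting on partial truth. The `StockReading` record, the source names, and the 15-minute freshness window are hypothetical assumptions for illustration. They are not any commerce platform's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical stock reading reported by one system of record for one SKU.
@dataclass(frozen=True)
class StockReading:
    source: str           # illustrative source names, e.g. "warehouse", "store_pos"
    sku: str
    quantity: int
    observed_at: datetime

# Assumed freshness rule: readings older than this count as stale.
MAX_AGE = timedelta(minutes=15)

def can_promise_availability(readings: list[StockReading], sku: str,
                             requested: int, now: datetime) -> tuple[bool, str]:
    """Allow the agent to promise stock only if every source reporting the SKU
    is fresh and the combined quantity covers the request. Any failed rule
    returns (False, reason) so the action can be escalated instead of executed."""
    relevant = [r for r in readings if r.sku == sku]
    if not relevant:
        return False, "no data for SKU"
    stale = sorted(r.source for r in relevant if now - r.observed_at > MAX_AGE)
    if stale:
        return False, "stale sources: " + ", ".join(stale)
    total = sum(r.quantity for r in relevant)
    if total < requested:
        return False, f"insufficient stock: {total} < {requested}"
    return True, "ok"

now = datetime(2026, 1, 5, 12, 0, tzinfo=timezone.utc)
readings = [
    StockReading("warehouse", "SKU-1", 3, now - timedelta(minutes=5)),
    StockReading("store_pos", "SKU-1", 2, now - timedelta(hours=2)),
]
print(can_promise_availability(readings, "SKU-1", 4, now))
# (False, 'stale sources: store_pos')
```

Failing closed whenever one source is stale is a deliberately conservative choice. Summing quantities across sources is a simplification that ignores reservations and in-transit stock.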

EU's Digital Sovereignty Depends On Investment In Open-Source And Talent

We argue that Europe must think differently and invest where it matters, leveraging its strengths, and open technologies are the place to look. While Europe does not have the tech giants of the US and China, it possesses a huge pool of innovation and human capital, as well as a small army of capable and efficient technology service providers, start-ups, and SMEs.

... Recent data shows that while Europe accounts for a substantial share of global open source developers, its contribution to open source-derived infrastructure remains fragmented, with development concentrated in a small number of countries.

... Europe may not have a Silicon Valley, but it has something better: a robust open source workforce. We are beginning to recognize this through fora such as the recent European Open Source Awards, which celebrated European citizens and residents working on projects ranging from the Linux kernel and open office suites to open hardware and software preservation.

... Europe has a chance of succeeding. Historically, Europe has done a good job of making open source and open standards a matter of public policy. For example, the European Commission's DG DIGIT has an open source software strategy that is being renewed this year, and Europe has three European Standards Organizations: CEN, CENELEC, and ETSI. While China has an open source software strategy, Europe is arguably ahead of the US in harnessing the potential of open technologies as a matter of public and industrial policy, and it has a strong foundation for catching up to China.

Is artificial general intelligence already here? A new case that today's LLMs meet key tests

Approaching the AGI question from different disciplinary perspectives (philosophy, machine learning, linguistics, and cognitive science), the four scholars converged on a controversial conclusion: by reasonable standards, current large language models (LLMs) already constitute AGI. Their argument addresses three key questions: What is general intelligence? Why does this conclusion provoke such strong reactions? And what does it mean for ...

"There is a common misconception that AGI must be perfect (knowing everything, solving every problem), but no individual human can do that," explains Chen, the lead author. "The debate often conflates general intelligence with superintelligence. The real question is whether LLMs display the flexible, general competence characteristic of human thought. Our conclusion: insofar as individual humans possess general intelligence, current LLMs do too."

... "This is an emotionally charged topic because it challenges human exceptionalism and our standing as being uniquely intelligent," says Belkin. "Copernicus displaced humans from the center of the universe, Darwin displaced humans from a privileged place in nature; now we are contending with the prospect that there are more kinds of minds than we had previously entertained."

... "We're developing AI systems that can dramatically impact the world without being mediated through a human, and this raises a host of challenging ethical, societal, and psychological questions," explains Danks.

Biometrics deployments at scale need transparency to help businesses gain trust

As adoption invites scrutiny, more biometrics evaluations, completed assessments, and testing options become available. Communication is part of the same issue, with major projects like EES, U.S. immigration and protest enforcement, and more pedestrian applications like access control and mobile driver's licenses (mDLs) all taking off.

... Biometric physical access control is growing everywhere, but with some key sectoral and regional differences, Goode Intelligence Chief Analyst Alan Goode explains in a preview of his firm’s latest market research report on the latest episode of the Biometric Update Podcast. Imprivata could soon be on the market, with private equity owner Thoma Bravo working with JPMorgan and Evercore to begin exploring its options.

... A panel at the “Identity, Authentication, and the Road Ahead 2026” event looked at NIST’s work on a playbook to help businesses implement mDLs. Representatives from the NCCoE, Better Identity Coalition, PNC Bank, and AAMVA discussed the emerging situation, in which digital verifiable credentials are available, but people don’t know how to use them.

... DHS S&T found that 5 of 16 selfie biometrics providers, Shufti and Paravision among them, met the performance goals of its Remote Identity Validation Rally (RIVR). RIVR’s first phase showed that demographically similar impostors still pose a significant problem for many face biometrics developers.
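Why demographically similar impostors matter can be illustrated with a false match rate (FMR). FMR is the share of impostor comparisons (two different people) that score at or above the match threshold. The minimal Python sketch below computes FMR separately for demographically matched and unmatched impostor pairs. The scores, the 0.8 threshold, and the grouping are synthetic assumptions. This is not the RIVR test protocol, and it does not reproduce any vendor's results.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ImpostorPair:
    score: float             # similarity score from a face matcher (synthetic here)
    same_demographic: bool   # True if the two different people share demographics

def false_match_rate(pairs: list[ImpostorPair], threshold: float) -> float:
    """Fraction of impostor comparisons wrongly accepted as a match."""
    if not pairs:
        raise ValueError("need at least one impostor pair")
    return sum(1 for p in pairs if p.score >= threshold) / len(pairs)

def fmr_by_similarity(pairs: list[ImpostorPair], threshold: float) -> dict[str, float]:
    """Report FMR for demographically matched vs. unmatched impostor pairs."""
    matched = [p for p in pairs if p.same_demographic]
    unmatched = [p for p in pairs if not p.same_demographic]
    return {
        "matched": false_match_rate(matched, threshold),
        "unmatched": false_match_rate(unmatched, threshold),
    }

# Synthetic scores only, for illustration.
pairs = [
    ImpostorPair(0.85, True), ImpostorPair(0.62, True),
    ImpostorPair(0.81, True), ImpostorPair(0.40, True),
    ImpostorPair(0.30, False), ImpostorPair(0.55, False),
    ImpostorPair(0.82, False), ImpostorPair(0.20, False),
]
print(fmr_by_similarity(pairs, threshold=0.8))
# {'matched': 0.5, 'unmatched': 0.25}
```

Reporting FMR only over all impostor pairs can hide the problem. Splitting by demographic similarity shows whether look-alike impostors are accepted more often at the same threshold.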

The Invisible Labor Force Powering AI

A low-cost labor force is essential to how today’s AI models function. Human workers are needed at every stage of AI production for tasks like creating and annotating data, reinforcing models, and moderating content. “Today’s frontier models are not self-made. They’re socio-technical systems whose quality and safety hinge on human labor,” said Mark Graham, a professor at the Oxford Internet Institute, University of Oxford, and a director of the Fairwork project, which evaluates digital labor platforms. In their book Feeding the Machine: The Hidden Human Labor Powering AI (Bloomsbury, 2024), Graham and his co-authors illustrate that this global workforce is essential to making these systems usable. “Without an ongoing, large human-in-the-loop layer, current capabilities would be far more brittle and misaligned, especially on safety-critical or culturally sensitive tasks,” Graham said.

... The industry’s reliance on a distributed, gig-work model goes back years. Hung points to the creation of the ImageNet database around 2007 as the moment that set the referential data practices and work organization for modern AI training.

... However, cost is not the only factor. Graham noted that cost arbitrage plays a role but is not the whole explanation. AI labs, he said, need extreme scale and elasticity, meaning millions of small, episodic tasks that can be staffed up or down at short notice, as well as broad linguistic and cultural coverage that no single in-house team can reproduce.

Code smells for AI agents: Q&A with Eno Reyes of Factory

In order to build a good agent, you have to have one that's model-agnostic. It needs to be deployable in any environment, any OS, any IDE. A lot of the tools out there force you to make a hard trade-off that we felt wasn't necessary: you either have to vendor-lock yourself to one LLM or ask everyone at your company to switch IDEs. To build a truly model-agnostic, vendor-agnostic coding agent, you put in a lot of time and effort to figure out all the harness engineering that's necessary to make that succeed, which we think is a fairly different skillset from building models. And so that's why we think companies like us are actually able to build agents that outperform on most evaluations from our lab.

... All LLMs have context limits, so you have to manage that as the agent progresses through tasks that may take as long as eight to ten hours of continuous work. There are things like how you choose to instruct or inject environment information, and how you handle tool calls. The sum of all of these things requires attention to detail. There really is no individual secret, which is also why we think companies like us can actually do this. It's the sum of hundreds of little optimizations. The industrial process of building these harnesses is what we think is interesting or differentiated.

... Of course, end-to-end and unit tests. There are auto-formatters that you can bring in, SAST (static application security testing) tools and scanners: your Snyks of the world.
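Managing a finite context window over a long-running task is one of the harness details described in the interview. The Python sketch below shows one simple policy. It keeps the system prompt, then keeps the newest messages that still fit a token budget. The `Message` type, the budget, and the rough four-characters-per-token estimate are assumptions, and a real harness would use the model's own tokenizer. This is not Factory's implementation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Message:
    role: str      # "system", "user", "assistant", or "tool"
    content: str

def estimate_tokens(text: str) -> int:
    # Crude assumption: about 4 characters per token, rounded up.
    return max(1, (len(text) + 3) // 4)

def fit_to_budget(history: list[Message], budget: int) -> list[Message]:
    """Keep all system messages, then as many of the newest other messages
    as fit in `budget` estimated tokens, preserving chronological order."""
    system = [m for m in history if m.role == "system"]
    rest = [m for m in history if m.role != "system"]
    used = sum(estimate_tokens(m.content) for m in system)
    if used > budget:
        raise ValueError("system prompt alone exceeds the budget")
    kept: list[Message] = []
    for m in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(m.content)
        if used + cost > budget:
            break  # everything older than this is dropped
        kept.append(m)
        used += cost
    return system + list(reversed(kept))

history = [
    Message("system", "You are a coding agent."),
    Message("user", "a" * 40),
    Message("tool", "b" * 40),
    Message("assistant", "c" * 40),
]
print([m.role for m in fit_to_budget(history, budget=26)])
# ['system', 'tool', 'assistant']
```

Dropping old turns outright is the simplest policy. Harnesses may instead summarize or compress older turns, which this sketch does not attempt.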

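The checks mentioned at the end of the interview (tests, auto-formatters, and SAST scanners) can serve as feedback signals for an agent. Below is a minimal Python sketch of such a quality gate. It runs each check as a subprocess, treats a non-zero exit code as failure, and collects the output to hand back to the agent. The check names and commands are placeholders for your own test runner, formatter check mode, and scanner. Nothing here reflects a specific vendor's CLI.

```python
import subprocess
import sys
from dataclasses import dataclass

@dataclass(frozen=True)
class CheckResult:
    name: str
    passed: bool
    output: str

def run_checks(checks: dict[str, list[str]], timeout_s: float = 600.0) -> list[CheckResult]:
    """Run each named command; a non-zero exit code counts as a failure."""
    results = []
    for name, cmd in checks.items():
        try:
            proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)
            results.append(CheckResult(name, proc.returncode == 0, proc.stdout + proc.stderr))
        except subprocess.TimeoutExpired:
            results.append(CheckResult(name, False, f"timed out after {timeout_s}s"))
        except FileNotFoundError as exc:
            results.append(CheckResult(name, False, f"command not found: {exc}"))
    return results

def feedback_for_agent(results: list[CheckResult]) -> str:
    """Summarize only the failures, in a form the agent can act on."""
    failed = [r for r in results if not r.passed]
    if not failed:
        return "All checks passed."
    return "\n\n".join(f"[{r.name}] failed:\n{r.output.strip()}" for r in failed)

if __name__ == "__main__":
    # Placeholder commands standing in for a real test runner and SAST scanner.
    demo = {
        "unit-tests": [sys.executable, "-c", "print('3 passed')"],
        "sast-scan": [sys.executable, "-c", "import sys; print('1 finding'); sys.exit(1)"],
    }
    print(feedback_for_agent(run_checks(demo)))
```

Returning only the failing checks keeps the feedback short, which also helps with the context limits discussed above.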
Software-Defined Vehicles Transform Auto Industry With Four-Stage Maturity Framework For Engineers

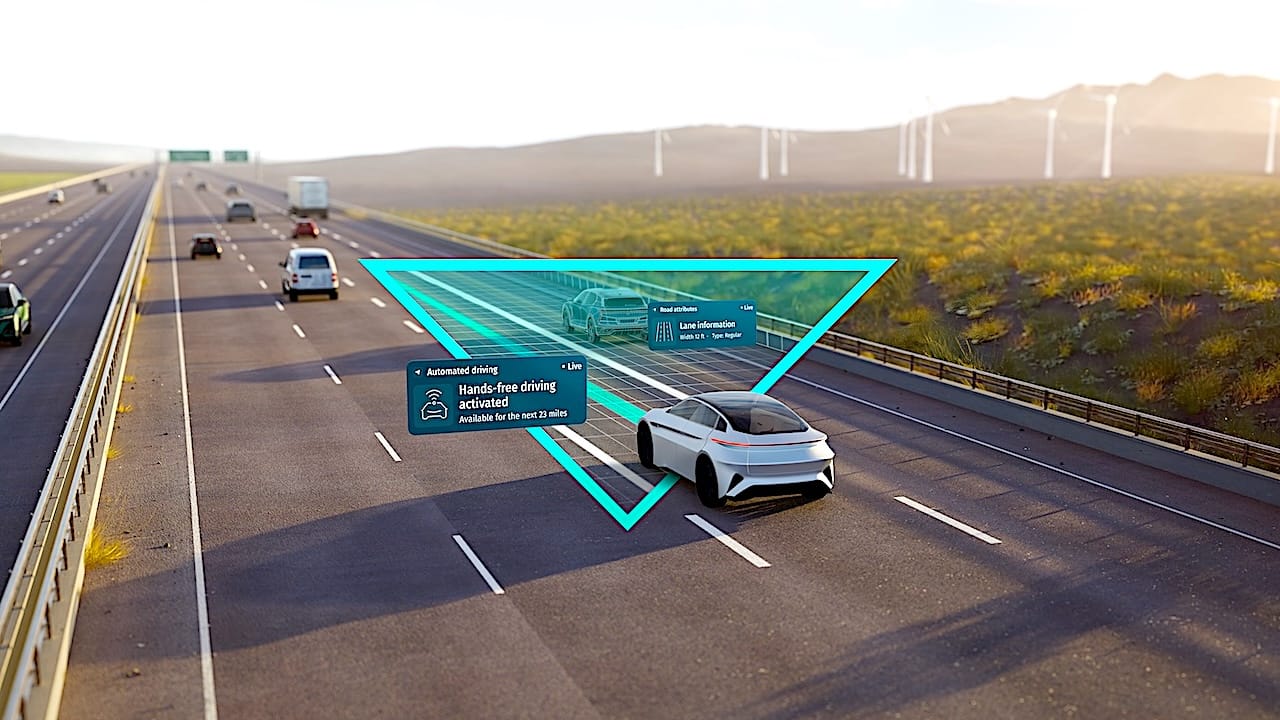

More refined software architectures in both edge and cloud enable the interpretation of real-time data for predictive maintenance, adaptive user interfaces, and autonomous driving functions, while cloud-based AI virtualized development systems enable continuous learning and updates. Electrification has further accelerated this evolution, as it opened the door for tech players from other industries to enter the automotive market. This represents an unstoppable trend, as customers now expect the same seamless digital experiences they enjoy on other devices.

... Legacy vehicle systems rely on dozens of electronic control units (ECUs), each managing isolated functions such as the powertrain or infotainment system. Software-defined vehicles (SDVs) consolidate these functions into centralized compute domains connected by high-speed networks. This architecture provides hardware and software abstraction, enabling over-the-air (OTA) updates, seamless cross-domain feature integration, and real-time data sharing, which are essential for continuous innovation.

... Processing sensor data at the edge, directly within the vehicle, enables highly personalized experiences for drivers and passengers. It also supports predictive maintenance, allowing vehicles to anticipate mechanical issues before they occur and proactively schedule service to minimize downtime and improve reliability. Equally important are abstraction layers that decouple software applications from underlying hardware.
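For predictive maintenance at the edge, one simple technique is to flag sensor readings that drift far from their recent history. The Python sketch below applies a rolling z-score test over a fixed window. The window size, the 3-sigma limit, and the vibration readings are assumptions for illustration. Production systems typically use richer models, and the article does not prescribe this method.

```python
from collections import deque
from statistics import mean, pstdev

class RollingAnomalyDetector:
    """Flags a reading whose z-score against the previous `window` readings
    exceeds `z_limit`, then adds the reading to the history."""

    def __init__(self, window: int = 20, z_limit: float = 3.0) -> None:
        self._history = deque(maxlen=window)
        self._z_limit = z_limit

    def update(self, value: float) -> bool:
        anomalous = False
        if len(self._history) == self._history.maxlen:  # wait for a full window
            mu = mean(self._history)
            sigma = pstdev(self._history)
            if sigma == 0:
                anomalous = value != mu  # flat history: any change stands out
            else:
                anomalous = abs(value - mu) / sigma > self._z_limit
        self._history.append(value)
        return anomalous

detector = RollingAnomalyDetector(window=5, z_limit=3.0)
readings = [1.0, 1.1, 0.9, 1.0, 1.0, 1.05, 4.0]  # synthetic vibration values
print([detector.update(r) for r in readings])
# [False, False, False, False, False, False, True]
```

A flagged reading would typically trigger a service recommendation rather than an immediate action. Because the detector needs only a small in-memory window, it is cheap enough to run on in-vehicle compute.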

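The idea of abstraction layers that decouple applications from hardware can be sketched in a few lines of Python. Application code depends only on an interface, and each hardware platform supplies its own implementation. The interface, the two back ends, and their unit conversions are hypothetical illustrations. They are not part of any automotive middleware or standard.

```python
from typing import Protocol

class WheelSpeedSensor(Protocol):
    """Hardware-agnostic interface the application is written against."""
    def speed_kph(self) -> float: ...

class LegacyEcuSensor:
    """Hypothetical back end: a dedicated ECU that reports raw pulse counts."""
    def __init__(self, raw_count: int, kph_per_count: float = 0.5) -> None:
        self._raw = raw_count
        self._scale = kph_per_count

    def speed_kph(self) -> float:
        return self._raw * self._scale

class CentralComputeSensor:
    """Hypothetical back end: a centralized compute domain that reports m/s."""
    def __init__(self, meters_per_second: float) -> None:
        self._mps = meters_per_second

    def speed_kph(self) -> float:
        return self._mps * 3.6

def speed_gap(sensor: WheelSpeedSensor, target_kph: float) -> float:
    """Application logic written once; it never touches hardware details."""
    return target_kph - sensor.speed_kph()

print(speed_gap(LegacyEcuSensor(180), 100.0))        # 10.0
print(speed_gap(CentralComputeSensor(25.0), 100.0))  # 10.0
```

Because `speed_gap` depends only on the interface, moving a feature from a legacy ECU to a centralized compute domain means writing a new back end, not rewriting the feature. That separation is also what makes OTA updates to application logic practical.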
Cybersecurity and Privacy Risks in Brain-Computer Interfaces and Neurotechnology

Neuromorphic computing, which replicates the human brain's neural architecture for efficient, low-power AI computation, is developing faster than predicted. As highlighted in talks around brain-inspired chips and meshing, these systems are blurring distinctions between biological and silicon-based computation. Meanwhile, brain-computer interfaces (BCIs), such as those being developed by businesses and research facilities, make bidirectional communication possible: they can read brain activity for feedback or control and possibly write signals back to affect cognition.

... Neural data is inherently personal. Breaches could expose memories, emotions, or subconscious biases. As AI decodes brain scans for "mind captioning" or talent uploading, adversaries may reverse-engineer intentions for coercion, fraud, or espionage.

... Compromised BCIs blur cyber-physical boundaries further than OT-IT convergence already has. A malevolent actor might damage medical implants, alter augmented reality overlays, or weaponize neurotech in national security scenarios.

... Implantable devices rely on worldwide supply chains prone to tampering. Neuromorphic hardware, while efficient, provides additional attack surfaces if not designed with zero-trust principles. Using AI to process neural signals can introduce biases, which may result in unfair treatment in brain-augmented systems.

Designing for Failure: Chaos Engineering Principles in System Design

To design for failure, we must understand how the system behaves when failure inevitably happens. What is the cost? What is the impact? How do we mitigate it? How do we still maintain over 99% uptime? This requires treating failure as a default state, not an exception.

... The first step is defining steady-state behavior. Without this, there is no baseline to measure against.

... Chaos experiments are most valuable in production. This is where real traffic patterns, real user behavior, and real data shapes exist. That said, experiments must be controlled.

... Chaos Engineering is not a one-off exercise. Systems evolve. Dependencies change. Teams rotate. Experiments should be automated, repeatable, and run continuously, either as scheduled jobs or integrated into CI/CD pipelines. Over time, experiments can be expanded to test higher-impact scenarios.

... Additional considerations include health checks, failover timing, and data consistency. Strong consistency simplifies reasoning but reduces availability. Eventual consistency improves availability but introduces complexity and potential inconsistency windows.

... Network failures are unavoidable in distributed systems. Latency spikes, packets get dropped, DNS fails, and sometimes the network splits entirely. Many system outages are caused not by servers crashing but by slow or unreliable communication between otherwise healthy components. This is where several of the classic fallacies of distributed computing show up, especially the assumptions that the network is reliable and that latency is zero.

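Keeping a production experiment controlled usually means limiting the blast radius and defining an abort condition in advance. The Python sketch below injects a simulated fault into a configurable fraction of calls. It stops injecting once a fixed number of faults have fired, which stands in for a real abort condition such as a steady-state breach. `FaultInjector`, its parameters, and the simulated `TimeoutError` are hypothetical. They are not the API of any chaos engineering tool.

```python
import random
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class FaultInjector:
    """Hypothetical wrapper that injects failures into a limited fraction of
    calls (the blast radius) and stops injecting once `abort_after` faults
    have fired (a stand-in abort condition)."""

    def __init__(self, fraction: float, abort_after: int,
                 rng: Optional[random.Random] = None) -> None:
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("fraction must be between 0 and 1")
        if abort_after < 1:
            raise ValueError("abort_after must be at least 1")
        self.fraction = fraction
        self.abort_after = abort_after
        self.injected = 0
        self.aborted = False
        self._rng = rng or random.Random()

    def call(self, fn: Callable[[], T]) -> T:
        if not self.aborted and self._rng.random() < self.fraction:
            self.injected += 1
            if self.injected >= self.abort_after:
                self.aborted = True  # later calls run normally
            raise TimeoutError("injected fault (chaos experiment)")
        return fn()

injector = FaultInjector(fraction=0.05, abort_after=3, rng=random.Random(42))
failures = 0
for _ in range(1_000):
    try:
        injector.call(lambda: "ok")
    except TimeoutError:
        failures += 1
print(failures, injector.aborted)  # 3 True
```

Seeding the random generator makes an experiment run reproducible, which matters once experiments are automated and repeated in CI/CD.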

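Because the network is neither reliable nor latency-free, remote calls usually need a bounded retry policy. The Python sketch below retries transient errors with exponential backoff and full jitter, meaning each wait is a random time up to a growing cap. `flaky_call`, the attempt limit, and the delay constants are assumptions for illustration. The `sleep` parameter is injectable only so that the example runs without real waiting. Retries should wrap only idempotent operations.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

def retry_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    retry_on: tuple[type[BaseException], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call `fn`, retrying transient network errors. After failed attempt n
    (counting from 0) it sleeps a random time in
    [0, min(max_delay, base_delay * 2**n)] so clients do not retry in lockstep."""
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0, cap))
    raise ValueError("max_attempts must be at least 1")

calls = {"n": 0}

def flaky_call() -> str:
    # Hypothetical remote call that fails twice, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("simulated dropped connection")
    return "response"

delays: list[float] = []
print(retry_with_backoff(flaky_call, sleep=delays.append, rng=random.Random(1)))  # response
print(calls["n"], len(delays))  # 3 2
```

Jitter spreads retries from many clients over time, which helps prevent a brief network blip from turning into a synchronized retry storm against a recovering service.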