Quote for the day:

"Don't worry about being successful but work toward being significant and the success will naturally follow." -- Oprah Winfrey

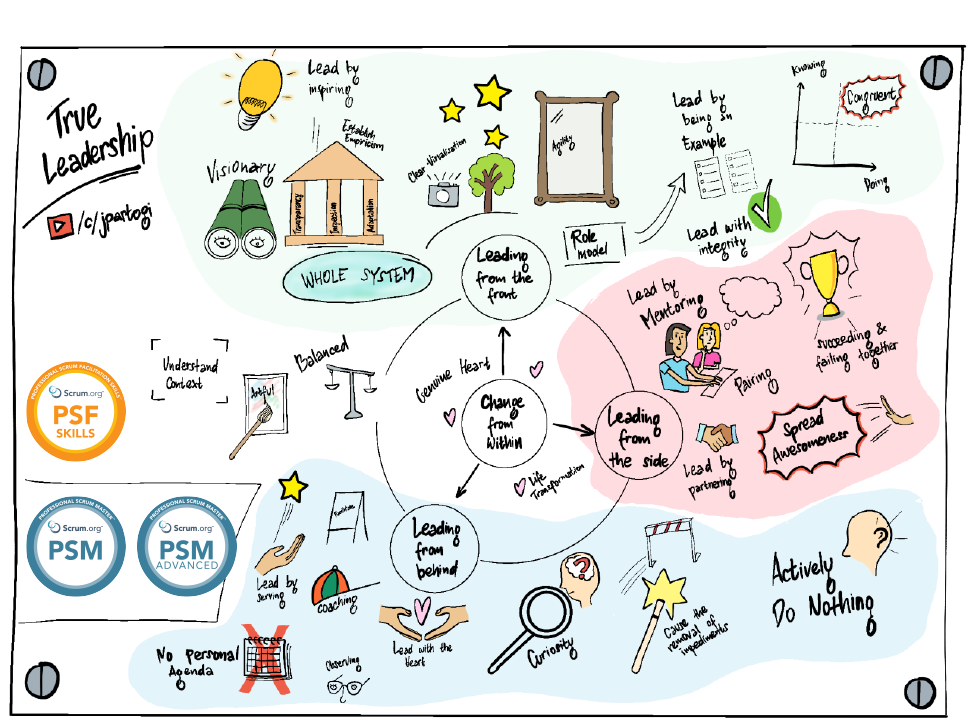

The Scrum Master: A True Leader Who Serves

Many people online claim that “Agile is a mindset” and that the mindset is more important than the framework. But let us be honest: the term “agile mindset” is very abstract. How do we know someone truly has it? We cannot open their brains to check. A mindset shows up as different behaviours depending on culture and context. In one place, “commitment” might mean fixed scope and fixed time. In another, it might mean working long hours. In yet another, it could mean delivering excellence within reasonable hours. Because of this complexity, simply saying “agile is a mindset” is not enough. What works better is modelling the behaviour. When people consistently see the Scrum Master demonstrating agility, those behaviours can become habits. ... Some Scrum Masters and agile coaches believe their job is to coach exclusively, asking questions without ever offering answers. While coaching is valuable, relying on it alone can be harmful when it is not relevant to the team’s context. Relevance is key to improving team effectiveness. At times, the Scrum Master needs to get their hands dirty. If a team has struggled with manual regression testing for twenty Sprints, do not just tell them to adopt Test-Driven Development (TDD). Show them. ... To be a true leader, the Scrum Master must be humble and authentic. You cannot fake true leadership. It requires internal transformation, a shift in character. As the saying goes, “Character is who we are when no one is watching.”
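What "show them" might look like at the keyboard: a minimal sketch of one red-green TDD cycle in Python, written as pytest-style test functions. The function `normalize_sku` and its rules are hypothetical, chosen only for illustration. The tests are written first and fail; then just enough code is added to make them pass.

```python
# Step 1 (red): write the tests first. Run them with `pytest`; both fail
# until normalize_sku exists. `normalize_sku` is a hypothetical example.
def test_trims_and_uppercases():
    assert normalize_sku("  ab12 ") == "AB12"


def test_replaces_inner_spaces_with_dashes():
    assert normalize_sku("ab 12  x") == "AB-12-X"


# Step 2 (green): the simplest implementation that makes both tests pass.
def normalize_sku(raw: str) -> str:
    """Trim whitespace, upper-case, and join the remaining words with dashes."""
    return "-".join(raw.strip().upper().split())
```

Pairing on a small cycle like this, then refactoring together, tends to teach more than a slide about TDD.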

Vendors Align IAM, IGA and PAM for Identity Convergence

The historic separation of identity governance and administration (IGA), privileged access management (PAM) and identity and access management (IAM) created inefficiencies and security blind spots, and attackers exploited inconsistencies in policy enforcement across layers, said Gil Rapaport, chief solutions officer at CyberArk. By combining governance, access and privilege in a single platform, the company could close the gaps between policy enforcement and detection, Rapaport said. "We noticed those siloed markets creating inefficiency in really protecting those identities, because you need to manage different type of policies for governance of those identities and for securing the identities and for the authentication of those identities, and so on," Rapaport told ISMG. "The cracks between those silos - this is exactly where the new attack factors started to develop." ... Enterprise customers that rely on different tools for IGA, PAM, IAM, cloud entitlements and data governance are increasingly frustrated because integrating those tools is time-consuming and error-prone, Mudra said. Converged platforms reduce integration overhead and allow vendors to build tools that communicate natively and share risk signals, he said. "If you have these tools in silos, yes, they can all do different things, but you have to integrate them after the fact versus a converged platform comes with out-of-the-box integration," Mudra said. "So, these different tools can share context and signals out of the box."
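A minimal sketch of what "sharing risk signals" can mean, assuming a single in-memory context that governance (IGA), access (IAM) and privilege (PAM) checks all read and write. Every name, weight and threshold here is hypothetical and is not modelled on CyberArk's or any other vendor's product. The point is only that a signal raised by one layer immediately changes decisions in the others, with no after-the-fact integration.

```python
from dataclasses import dataclass, field

# Illustrative weights only (hypothetical); a real platform would calibrate these.
SIGNAL_WEIGHTS = {"excess_entitlements": 2, "orphaned_account": 3, "impossible_travel": 5}


@dataclass
class IdentityContext:
    """Hypothetical shared store of risk signals, keyed by identity."""
    signals: dict[str, set[str]] = field(default_factory=dict)

    def raise_signal(self, identity: str, signal: str) -> None:
        self.signals.setdefault(identity, set()).add(signal)

    def risk_score(self, identity: str) -> int:
        return sum(SIGNAL_WEIGHTS.get(s, 1) for s in self.signals.get(identity, set()))


# Each function plays one former "silo", but all use the same context object.
def governance_review(ctx: IdentityContext, identity: str, entitlements: int, role_baseline: int) -> None:
    """IGA: flag identities holding more entitlements than their role needs."""
    if entitlements > role_baseline:
        ctx.raise_signal(identity, "excess_entitlements")


def access_requires_mfa(ctx: IdentityContext, identity: str) -> bool:
    """IAM: step up authentication once meaningful risk is present."""
    return ctx.risk_score(identity) >= 2


def privilege_grant_admin(ctx: IdentityContext, identity: str) -> bool:
    """PAM: grant an elevated session only to low-risk identities."""
    return ctx.risk_score(identity) < 2


ctx = IdentityContext()
print(privilege_grant_admin(ctx, "alice"))  # True: no signals yet
governance_review(ctx, "alice", entitlements=12, role_baseline=8)
print(access_requires_mfa(ctx, "alice"))    # True: the IGA finding now affects IAM
print(privilege_grant_admin(ctx, "alice"))  # False: and PAM
```

In a siloed setup, the governance finding would sit in one tool until someone wired it into the others; here the shared context makes that propagation the default.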

The Importance of Technology Due Diligence in Mergers and Acquisitions

The primary reason for conducting technology due diligence is to uncover any potential risks that could derail the deal or disrupt operations post-acquisition. This includes identifying outdated software, unresolved security vulnerabilities, and the potential for data breaches. By spotting these risks early, you can make informed decisions and create risk mitigation strategies to protect your company. ... A key part of technology due diligence is making sure that the target company’s technology assets align with your business’s strategic goals. Whether it’s cloud infrastructure, software solutions, or hardware, the technology should complement your existing operations and provide a foundation for long-term growth. Misalignment in technology can lead to inefficiencies and costly rework. ... Rank the identified risks based on their potential impact on your business and the likelihood of their occurrence. This will help prioritize mitigation efforts, so that you’re addressing the most critical vulnerabilities first. Consider both short-term risks, like pending software patches, and long-term issues, such as outdated technology or a lack of scalability. ... Review existing vendor contracts and third-party service provider agreements, looking for any liabilities or compliance risks that may emerge post-acquisition, especially those related to data access, privacy regulations, or long-term commitments. It’s also important to assess the cybersecurity posture of vendors and their ability to support integration.
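Ranking by impact and likelihood is often done with a simple risk matrix, where each risk's score is impact multiplied by likelihood. The sketch below assumes 1-to-5 scales for both and uses made-up findings. The scales, the scoring formula and the entries are illustrative assumptions, not a prescribed methodology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    horizon: str     # "short-term" or "long-term"

    @property
    def score(self) -> int:
        # Classic risk-matrix score: impact x likelihood, range 1..25.
        return self.impact * self.likelihood


def rank(risks: list[Risk]) -> list[Risk]:
    """Highest score first; ties broken by impact so severe risks surface earlier."""
    return sorted(risks, key=lambda r: (r.score, r.impact), reverse=True)


# Hypothetical findings from a technology due diligence review.
findings = [
    Risk("Pending security patches on public web servers", 4, 4, "short-term"),
    Risk("Core platform on end-of-life framework", 4, 3, "long-term"),
    Risk("Monolith cannot scale past current load", 3, 2, "long-term"),
    Risk("Vendor contract allows third-party data access", 5, 2, "short-term"),
]

for r in rank(findings):
    print(f"{r.score:>2}  {r.horizon:<10}  {r.name}")
```

With these inputs the patch backlog (score 16) comes first and the scalability issue (score 6) last. Keeping the horizon on each entry makes it easy to split the list into immediate fixes and longer-term remediation.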

From terabytes to insights: Real-world AI observability architecture

The challenge is not only the data volume, but the data fragmentation. According to New Relic’s 2023 Observability Forecast Report, 50% of organizations report siloed telemetry data, with only 33% achieving a unified view across metrics, logs and traces. Logs tell one part of the story, metrics another, traces yet another. Without a consistent thread of context, engineers are forced into manual correlation, relying on intuition, tribal knowledge and tedious detective work during incidents. ... In the first layer, we create contextual telemetry by embedding standardized metadata in telemetry signals such as distributed traces, logs and metrics. Then, in the second layer, the enriched data is fed into the MCP (Model Context Protocol) server, which indexes it, adds structure and gives clients API access to the context-enriched data. Finally, the AI-driven analysis engine uses the structured, enriched telemetry for anomaly detection, correlation and root-cause analysis to troubleshoot application issues. This layered design ensures that AI and engineering teams receive context-driven, actionable insights from telemetry data. ... Combining structured data pipelines with AI holds enormous promise for observability. By leveraging structured protocols such as MCP and AI-driven analysis, we can transform vast amounts of telemetry data into actionable insights, making systems proactive rather than reactive.
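The three layers can be sketched in a few lines of standard-library Python. This sketch assumes every log line and metric sample is stamped with the same standardized fields (`service`, `trace_id`), loosely in the spirit of OpenTelemetry attributes. It does not use the OpenTelemetry SDK or a real MCP server. A dictionary keyed by `trace_id` stands in for the indexing layer, and a plain z-score test stands in for the AI-driven analysis engine. All function names are hypothetical.

```python
import statistics
from collections import defaultdict


def enrich(record: dict, service: str, trace_id: str) -> dict:
    """Layer 1: stamp a raw log line or metric sample with shared context fields."""
    return {**record, "service": service, "trace_id": trace_id}


def index_by_trace(records: list[dict]) -> dict[str, list[dict]]:
    """Stand-in for layer 2: group every signal that shares a trace_id."""
    index: dict[str, list[dict]] = defaultdict(list)
    for rec in records:
        index[rec["trace_id"]].append(rec)
    return dict(index)


def zscore_anomalies(values: list[float], threshold: float = 3.0) -> list[int]:
    """Stand-in for layer 3: indexes of values more than `threshold` std devs from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]


# Usage: a log line and a metric sample from the same request now share a trace_id,
# so they can be correlated without manual detective work.
signals = [
    enrich({"kind": "log", "msg": "timeout calling payments"}, "checkout", "trace-42"),
    enrich({"kind": "metric", "latency_ms": 950.0}, "checkout", "trace-42"),
    enrich({"kind": "log", "msg": "ok"}, "cart", "trace-7"),
]
print(index_by_trace(signals)["trace-42"])
```

One caveat on the stand-in detector: with population standard deviation, no value in a window of n points can score above sqrt(n - 1), so a threshold of 3 needs at least 11 samples to ever fire. A real analysis layer would use longer baselines or learned models.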

MCP explained: The AI gamechanger

Instead of relying on scattered prompts, developers can now define and deliver context dynamically, making integrations faster, more accurate, and easier to maintain. By decoupling context from prompts and managing it like any other component, developers can, in effect, build their own personal, multi-layered prompt interface. This transforms AI from a black box into an integrated part of your tech stack. ... MCP is important because it extends this principle to AI by treating context as a modular, API-driven component that can be integrated wherever needed. Similar to microservices or headless frontends, this approach allows AI functionality to be composed and embedded flexibly across various layers of the tech stack without creating tight dependencies. The result is greater flexibility, better reusability, faster iteration in distributed systems and true scalability. ... As with any exciting disruption, the opportunity offered by MCP comes with its own set of challenges. Chief among them is poorly defined context. One of the most common mistakes is hardcoding static values; instead, context should be dynamic and reflect real-time system state. Supplying the model with too much, too little or irrelevant data is another pitfall, often leading to degraded performance and unpredictable outputs.
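A minimal sketch of the ideas in that passage, written in plain Python rather than against the official MCP SDKs. Context comes from small, swappable provider functions that read live state at request time instead of hardcoded values. Providers are filtered by relevance, and a size budget keeps excessive context out of the prompt. `ContextProvider`, `ContextAssembler`, the relevance tags and the character budget are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ContextProvider:
    name: str
    tags: set[str]            # topics this provider is relevant to (hypothetical)
    fetch: Callable[[], str]  # called on every build, so values reflect live state


class ContextAssembler:
    """Composes context from registered providers, decoupled from the prompt text."""

    def __init__(self, budget_chars: int = 500):
        self.providers: list[ContextProvider] = []
        self.budget_chars = budget_chars  # counts chunk characters, not separators

    def register(self, provider: ContextProvider) -> None:
        self.providers.append(provider)

    def build(self, topic: str) -> str:
        parts: list[str] = []
        used = 0
        for p in self.providers:
            if topic not in p.tags:
                continue  # skip irrelevant context instead of overloading the model
            chunk = f"[{p.name}] {p.fetch()}"
            if used + len(chunk) > self.budget_chars:
                continue  # skip chunks that would exceed the budget
            parts.append(chunk)
            used += len(chunk)
        return "\n".join(parts)


state = {"queue_depth": 3}
asm = ContextAssembler(budget_chars=200)
asm.register(ContextProvider("queue", {"ops"}, lambda: f"queue_depth={state['queue_depth']}"))
asm.register(ContextProvider("faq", {"support"}, lambda: "Refunds take 5 days."))
print(asm.build("ops"))  # [queue] queue_depth=3
state["queue_depth"] = 42
print(asm.build("ops"))  # [queue] queue_depth=42
```

Because providers are looked up at build time, swapping one out or adding another does not touch the prompt text, which is the decoupling the passage describes.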

AI is fueling a power surge - it could also reinvent the grid

Data centers themselves are beginning to evolve as well. Some forward-looking facilities are now being designed with built-in flexibility to contribute back to the grid or operate independently during times of peak stress. These new models, combined with improved efficiency standards and smarter site selection strategies, have the potential to ease some of the pressure being placed on energy systems. Equally important is the role of cross-sector collaboration. As the line between tech and infrastructure continues to blur, it’s critical that policymakers, engineers, utilities, and technology providers work together to shape the standards and policies that will govern this transition. That means not only building new systems, but also rethinking regulatory frameworks and investment strategies to prioritize resiliency, equity, and sustainability. Just as important as technological progress is public understanding. Educating communities about how AI interacts with infrastructure can help build the support needed to scale promising innovations. Transparency around how energy is generated, distributed, and consumed, and how AI fits into that equation, will be crucial to building trust and encouraging participation. ... To be clear, AI is not a silver bullet. It won’t replace the need for new investment or hard policy choices. But it can make our systems smarter, more adaptive, and ultimately more sustainable.

AI vs Technical Debt: Is This A Race to the Bottom?

Critically, AI-generated code can carry security liabilities. One alarming study analyzed code suggested by GitHub Copilot across common security scenarios and found that roughly 40% of Copilot’s suggestions had vulnerabilities. These included classic mistakes like buffer overflows and SQL injection holes. Why so high? The AI was trained on tons of public code, including insecure code, so it can regurgitate bad practices (like using outdated encryption or ignoring input sanitization) just as easily as good ones. If you blindly accept such output, you’re effectively inviting known bugs into your codebase. It doesn’t help that AI is notoriously bad at certain logical tasks (for example, complex math or subtle state logic), so it might write code that looks legit but is wrong in edge cases. ... In many cases, devs aren’t reviewing AI-written code as rigorously as their own, and a common refrain when something breaks is, “It is not my code,” implying they feel less responsible since the AI wrote it. That attitude is itself dangerous: if nobody feels accountable for the AI’s code, it slips through code reviews and testing more easily, leading to more bad deployments. The open-source world is also grappling with an influx of AI-generated “contributions” that maintainers describe as low-quality or even spam. Imagine running an open-source project and suddenly getting dozens of auto-generated pull requests that technically add a feature or fix but are riddled with style issues or bugs.
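SQL injection is a good example of a flaw that slips through when generated code is accepted without review. The sketch below uses Python's built-in `sqlite3` module with a hypothetical `users` table. The first function builds the query with string formatting (the vulnerable pattern); the second passes the value as a bound parameter, so the database never treats it as SQL.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # VULNERABLE: user input is spliced directly into the SQL text.
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # SAFE: the ? placeholder sends `name` as data, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "nobody' OR '1'='1"
print(find_user_unsafe(conn, payload))  # returns every row in the table
print(find_user_safe(conn, payload))    # returns []
```

Both functions "work" on ordinary input, which is exactly why the unsafe version can pass a casual review.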

The Future of Manufacturing: Digital Twin in Action

Process digital twins are often confused with traditional simulation tools, but there is an important distinction. Simulations are typically offline models used to test “what-if” scenarios, verify system behaviour, and optimise processes without impacting live operations. These models are predefined and rely on human input to set parameters and ask the right questions. A digital twin, on the other hand, comes to life when connected to real-time operational data. It reflects current system states, responds to live inputs, and evolves continuously as conditions change. This distinction between static simulations and dynamic digital twins is widely recognised across the industrial sector. While simulation still plays a valuable role in system design and planning, the true power of the digital twin lies in its ability to mirror, interpret, and influence operational performance in real time. ... When AI is added, the digital twin evolves into a learning system. AI algorithms can process vast datasets, far beyond what a human operator can manage, and detect early warning signs of failure. For example, if a transformer begins to exhibit subtle thermal or harmonic irregularities, an AI-enhanced digital twin doesn’t just flag it. It assesses the likelihood of failure, evaluates the potential downstream impact, and proposes mitigation strategies, such as rerouting power or triggering maintenance workflows.

Bridging the Gap: How Hybrid Cloud Is Redefining the Role of the Data Center

Today’s hybrid models involve more than merging public clouds with private data centers. They also involve specialized data center solutions like colocation, edge facilities and bare-metal-as-a-service (BMaaS) offerings. That’s the short version of how hybrid cloud and its relationship to data centers are evolving. ... Fast forward to the present, and the goals behind hybrid cloud strategies often look quite different. When businesses choose a hybrid cloud approach today, it’s typically not because of legacy workloads or sunk costs. It’s because they see hybrid architectures as the key to unlocking new opportunities. ... The proliferation of edge data centers has also enabled simpler, better-performing and more cost-effective hybrid clouds. The more locations businesses have to choose from when deciding where to place private infrastructure and workloads, the more opportunity they have to optimize performance relative to cost. ... Today’s data centers are no longer just a place to host whatever you can’t run on-prem or in a public cloud. They have evolved into providers of specialized services and capabilities that are critical for building high-performing, cost-effective hybrid clouds, but that aren’t available from public cloud providers and would be very costly and complicated for businesses to implement on their own.
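The idea that more locations give more room to optimize performance relative to cost can be made concrete with a toy placement rule: among candidate sites that meet a latency target, pick the cheapest. The site names, latencies and prices below are invented for illustration. Real placement decisions weigh many more factors, such as data residency, egress fees and capacity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Site:
    name: str
    kind: str            # e.g. "public cloud", "colocation", "edge", "BMaaS"
    latency_ms: float    # expected latency to the workload's users (hypothetical)
    monthly_cost: float  # cost of running the workload there (hypothetical)


def place(sites: list[Site], max_latency_ms: float) -> Optional[Site]:
    """Cheapest site that satisfies the latency target, or None if none qualifies."""
    eligible = [s for s in sites if s.latency_ms <= max_latency_ms]
    return min(eligible, key=lambda s: s.monthly_cost, default=None)


# Hypothetical candidates.
core = [
    Site("region-a", "public cloud", 45.0, 9_000.0),
    Site("colo-1", "colocation", 30.0, 7_500.0),
]
edge = [Site("edge-metro", "edge", 8.0, 8_200.0)]

print(place(core, max_latency_ms=20))         # None: no core site is fast enough
print(place(core + edge, max_latency_ms=20))  # the edge site qualifies
```

Adding the edge site turns an impossible latency target into a feasible one, and relaxing the target lets the cheaper colocation site win: each extra location widens the set of cost and performance trade-offs available.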

AI Agents: Managing Risks In End-To-End Workflow Automation

As CIOs map out their AI strategies, it’s becoming clear that agents will change how they manage their organization’s IT environment and how they deliver services to the rest of the business. Because agents can automate a broad swath of end-to-end business processes, learning and changing as they go, CIOs will have to oversee significant shifts in software development, IT operating models, staffing, and IT governance. ... Human-based checks and balances are vital for validating agent-based outputs and recommendations and, if needed, for manually changing course should unintended consequences, including hallucinations or other errors, arise. “Agents being wrong is not the same thing as humans being wrong,” says Elliott. “Agents can be really wrong in ways that would get a human fired if they made the same mistake. We need safeguards so that if an agent calls the wrong API, it’s obvious to the person overseeing that task that the response or outcome is unreasonable or doesn’t make sense.” These orchestration and observability layers will be increasingly important as agents are implemented across the business. “As different parts of the organization [automate] manual processes, you can quickly end up with a patchwork-quilt architecture that becomes almost impossible to upgrade or rethink,” says Elliott.
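One way to make a wrong API call obvious to the person overseeing the task is to route every proposed agent action through a guard. The guard checks the call against an allowlist and simple plausibility limits, and escalates anything unusual to a human instead of executing it. The tool names, the refund limit and the `Decision` values below are hypothetical. This is a sketch of the pattern, not any particular agent framework's API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    ESCALATE = "escalate"  # hold for human review
    REJECT = "reject"


@dataclass
class ToolCall:
    tool: str
    args: dict = field(default_factory=dict)


# Hypothetical policy: tools the agent may call, and what counts as "reasonable".
ALLOWED_TOOLS = {"issue_refund", "reset_password", "lookup_order"}
REFUND_AUTO_LIMIT = 100.0


def review(call: ToolCall) -> tuple[Decision, str]:
    """Decide whether an agent's proposed call runs, waits for a human, or is blocked."""
    if call.tool not in ALLOWED_TOOLS:
        return Decision.REJECT, f"tool '{call.tool}' is not on the allowlist"
    if call.tool == "issue_refund":
        amount = call.args.get("amount")
        if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount <= 0:
            return Decision.REJECT, f"implausible refund amount: {amount!r}"
        if amount > REFUND_AUTO_LIMIT:
            return Decision.ESCALATE, f"refund {amount} exceeds the auto-approval limit"
    return Decision.EXECUTE, "within policy"


for proposed in [
    ToolCall("issue_refund", {"amount": 40}),
    ToolCall("issue_refund", {"amount": 5000}),
    ToolCall("drop_table", {}),
]:
    decision, reason = review(proposed)
    print(f"{proposed.tool}: {decision.value} ({reason})")
```

Returning a reason string alongside each decision gives the human reviewer, and any observability layer logging these calls, a readable explanation of why an action was held or blocked.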
