Quote for the day:

"Act as if what you do makes a difference. It does." -- William James

What happens the day after superintelligence?

As context, artificial superintelligence (ASI) refers to systems that

can outthink humans on most fronts, from planning and reasoning to

problem-solving, strategic thinking and raw creativity. These systems will

solve complex problems in a fraction of a second that might take the smartest

human experts days, weeks or even years to work through. ... So ask yourself,

honestly, how will humans act in this new reality? Will we reflexively seek

advice from our AI assistants as we navigate every little challenge we

encounter? Or worse, will we learn to trust our AI assistants more

than our own thoughts and instincts? ... Imagine walking down the street in your

town. You see a coworker heading towards you. You can’t remember his name, but

your AI assistant does. It detects your hesitation and whispers the

coworker’s name into your ears. The AI also recommends that you ask the

coworker about his wife, who had surgery a few weeks ago. The coworker

appreciates the sentiment, then asks you about your recent promotion, likely at

the advice of his own AI. Is this human empowerment, or a loss of human

agency? ... Many experts believe that body-worn AI assistants will make us feel

more powerful and capable, but that’s not the only way this could go. These same

technologies could make us feel less confident in ourselves and less impactful

in our lives.

Confidential Computing: A Solution to the Uncertainty of Using the Public Cloud

Confidential computing is a way to ensure that no external party can look at

your data and business logic while they are executed. It aims to secure Data in

Use. Combined with the established ways of securing Data at Rest and Data in

Transit, it makes it very unlikely that any external party can access secured

data running in a confidential computing environment, wherever that may be. ...

To be able to execute services in the cloud, the

company needs to be sure that the data and the business logic cannot be

accessed or changed by third parties, especially by the system administrator

of that cloud provider. It needs to be protected. Or better, it needs to be

executed in the Trusted Compute Base (TCB) of the company. This is the

environment where specific security standards are set to restrict all possible

access to data and business logic. ... Here attestation is used to verify that

a confidential environment (instance) is securely running in the public cloud

and that it can be trusted to implement all the necessary security standards. Only

after successful attestation is the TCB extended into the public cloud to

incorporate the attested instances. One basic requirement of attestation is

that the attestation service is located independently of the infrastructure

where the instance is running.
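
As a rough illustration of the attestation step described above, the Python sketch below shows an independent verifier checking a report from a cloud instance before trusting it. It is a simplified stand-in, not a real attestation protocol: actual confidential computing platforms rely on hardware-rooted asymmetric signatures, whereas this sketch assumes a shared HMAC key, and all names (`measure`, `sign_report`, `verify_attestation`) are hypothetical.

```python
import hashlib
import hmac


def measure(image: bytes) -> str:
    """Hash of the workload image; stands in for a hardware measurement."""
    return hashlib.sha256(image).hexdigest()


def sign_report(measurement: str, nonce: str, key: bytes) -> str:
    """Sign measurement plus nonce (assumption: HMAC replaces a hardware signature)."""
    message = f"{measurement}:{nonce}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_attestation(report: dict, expected_measurement: str,
                       nonce: str, key: bytes) -> bool:
    """Run by a verifier located outside the infrastructure hosting the instance."""
    expected_signature = sign_report(report["measurement"], nonce, key)
    signature_ok = hmac.compare_digest(report["signature"], expected_signature)
    measurement_ok = hmac.compare_digest(report["measurement"], expected_measurement)
    return signature_ok and measurement_ok
```

The fresh nonce ties each report to one verification request, so an old report cannot simply be replayed. Only when both checks pass would the instance be admitted into the TCB.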

Open Banking's Next Phase: AI, Inclusion and Collaboration

Think of open banking as the backbone for secure, event-driven automation: a

bill gets paid, and a savings allocation triggers instantly across multiple

platforms. The future lies in secure, permissioned coordination across data

silos, and when applied to finance, it unlocks new, high-margin services

grounded in trust, automation and personalisation. ... By building modular

systems that handle hierarchy, fee setup, reconciliation and compliance – all in

one cohesive platform – we can unlock new revenue opportunities. ... Regulators

must ensure they are stepping up efforts to sustain progress and support fintech

innovation whilst also meeting their aim to keep customers safe. Work must also

be done to boost public awareness of the value of open banking. Many consumers

are unaware of the financial opportunities open banking offers and some remain

wary of sharing their data with unknown third parties. ... Rather than

duplicating efforts or competing head-to-head, institutions and fintechs should

focus on co-developing shared infrastructure. When core functions like fee

management, operational controls and compliance processes are unified in a

central platform, fintechs can innovate on customer experience, while banks

provide the stability, trust and reach.

Data centers are eating the economy — and we’re not even using them

Building new data centers is the easy solution, but it’s neither sustainable nor

efficient. As I’ve witnessed firsthand in developing compute orchestration

platforms, the real problem isn’t capacity. It’s allocation and optimization.

There’s already an abundant supply sitting idle across thousands of data centers

worldwide. The challenge lies in efficiently connecting this scattered,

underutilized capacity with demand. ... The solution isn’t more centralized

infrastructure. It’s smarter orchestration of existing resources. Modern

software can aggregate idle compute from data centers, enterprise servers, and

even consumer devices into unified, on-demand compute pools. ... The technology

to orchestrate distributed compute already exists. Some network models already

demonstrate how software can abstract away the complexity of managing resources

across multiple providers and locations. Docker containers and modern

orchestration tools make workload portability seamless. The missing piece is

just the industry’s willingness to embrace a fundamentally different approach.

Companies need to recognize that most servers are idle 70%-85% of the time. It’s

not a hardware problem requiring more infrastructure.

How an AI-Based 'Pen Tester' Became a Top Bug Hunter on HackerOne

While GenAI tools can be extremely effective at finding potential

vulnerabilities, XBOW's team found they weren't very good at validating the

findings. The trick to making a successful AI-driven pen tester, Dolan-Gavitt

explained, was to use something other than an LLM to verify the vulnerabilities.

In the case of XBOW, researchers used a deterministic validation approach.

"Potentially, maybe in a couple years down the road, we'll be able to actually

use large language models out of the box to verify vulnerabilities," he said.

"But for today, and for the rest of this talk, I want to propose and argue for a

different way, which is essentially non-AI, deterministic code to validate

vulnerabilities." But AI still plays an integral role with XBOW's pen tester.

Dolan-Gavitt said the technology uses a capture-the-flag (CTF) approach in which

"canaries" are placed in the source code and XBOW sends AI agents after them to

see if they can access them. For example, he said, if researchers want to find a

remote code execution (RCE) flaw or an arbitrary file read vulnerability, they

can plant canaries on the server's file system and set the agents loose. ...

Dolan-Gavitt cautioned that AI-powered pen testers are not a panacea. XBOW still

sees some false positives because some vulnerabilities, like business logic

flaws, are difficult to validate automatically.
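
The canary idea described above can be sketched in a few lines of Python. This is a minimal illustration of deterministic, non-AI validation, not XBOW's actual implementation; the function names and the `canary.txt` file name are assumptions made for the example.

```python
import secrets
from pathlib import Path


def plant_canary(directory: Path) -> str:
    """Write a random, unguessable token to the target's file system."""
    canary = "canary-" + secrets.token_hex(16)
    (directory / "canary.txt").write_text(canary)
    return canary


def is_validated(agent_evidence: str, canary: str) -> bool:
    """A finding counts only if the agent's evidence contains the exact token."""
    return canary in agent_evidence
```

Because the token is random, an agent cannot produce it by guessing or hallucinating: presenting it shows that the file was actually read, which is why a plain string check can stand in for an LLM's judgment here.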

Data Governance Maturity Models and Assessments: 2025 Guide

Data governance maturity frameworks help organizations assess their data

governance capabilities and guide their evolution toward optimal data

management. To implement a data governance or data management maturity framework

(a “model”) it is important to learn what data governance maturity is, explore

how and why it should be assessed, discover various maturity models and their

features, and understand the common challenges associated with using maturity

models. Data governance maturity refers to the level of sophistication and

effectiveness with which an organization manages its data governance processes.

It encompasses the extent to which an organization has implemented,

institutionalized, and optimized its data governance practices. A mature data

governance framework ensures that the organization can support its business

objectives with accurate, trusted, and accessible data. Maturity in data

governance is typically assessed through various models that measure different

aspects of data management such as data quality and compliance and examine

processes for managing data’s context (metadata) and its security. Maturity

models provide a structured way to evaluate where an organization stands and how

it can improve for a given function.

Data governance maturity frameworks help organizations assess their data

governance capabilities and guide their evolution toward optimal data

management. To implement a data governance or data management maturity framework

(a “model”) it is important to learn what data governance maturity is, explore

how and why it should be assessed, discover various maturity models and their

features, and understand the common challenges associated with using maturity

models. Data governance maturity refers to the level of sophistication and

effectiveness with which an organization manages its data governance processes.

It encompasses the extent to which an organization has implemented,

institutionalized, and optimized its data governance practices. A mature data

governance framework ensures that the organization can support its business

objectives with accurate, trusted, and accessible data. Maturity in data

governance is typically assessed through various models that measure different

aspects of data management such as data quality and compliance and examine

processes for managing data’s context (metadata) and its security. Maturity

models provide a structured way to evaluate where an organization stands and how

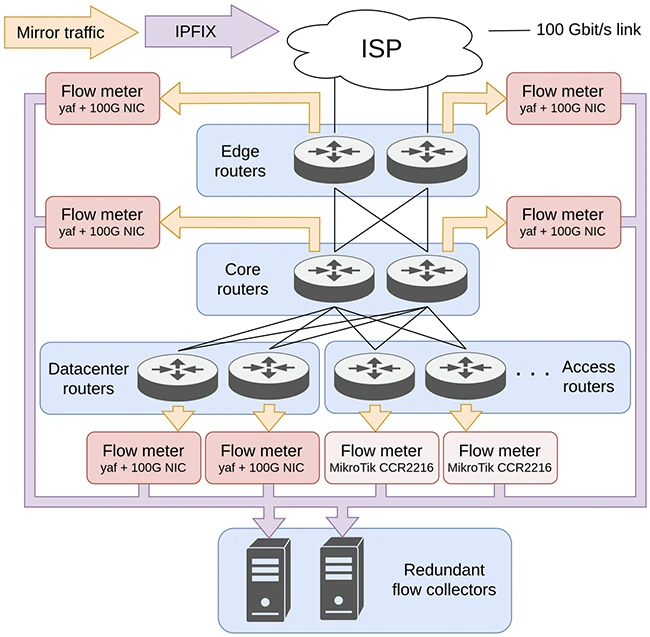

it can improve for a given function.Open-source flow monitoring with SENSOR: Benefits and trade-offs

Most flow monitoring setups rely on embedded flow meters that are locked to a

vendor and require powerful, expensive devices. SENSOR shows it’s possible to

build a flexible and scalable alternative using only open tools and commodity

hardware. It also allows operators to monitor internal traffic more

comprehensively, not just what crosses the network border. ... For a large

network, that can make troubleshooting and oversight more complex. “Something

like this is fine for small networks,” David explains, “but it certainly

complicates troubleshooting and oversight on larger networks.” David also sees

potential for SENSOR to expand beyond historical analysis by adding real-time

alerting. “The paper doesn’t describe whether the flow collectors can trigger

alarms for anomalies like rapidly spiking UDP traffic, which could indicate a

DDoS attack in progress. Adding real-time triggers like this would be a

valuable enhancement that makes SENSOR more operationally useful for network

teams.” ... “Finally, the approach is fragile. It relies on precise bridge and

firewall configurations to push traffic through the RouterOS stack, which

makes it sensitive to updates, misconfigurations, or hardware

changes.”
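
The real-time trigger David suggests could look roughly like the Python sketch below, which flags an interval whose UDP packet count far exceeds the recent average. It is an assumption-laden illustration and not part of SENSOR: the class name, window size, multiplier and minimum packet floor are all hypothetical choices.

```python
from collections import deque


class UdpSpikeDetector:
    """Flag an interval whose UDP packet count far exceeds the recent average."""

    def __init__(self, window: int = 5, factor: float = 3.0, min_packets: int = 1000):
        self.history = deque(maxlen=window)  # counts from recent intervals
        self.factor = factor                 # how many times the baseline counts as a spike
        self.min_packets = min_packets       # ignore spikes on near-idle links

    def observe(self, udp_packets: int) -> bool:
        """Record one interval's count and return True if it looks like a spike."""
        baseline = sum(self.history) / len(self.history) if self.history else None
        self.history.append(udp_packets)
        if baseline is None:
            return False  # nothing to compare against yet
        return udp_packets >= self.min_packets and udp_packets > self.factor * baseline
```

Note that a spike is itself added to the history, so a sustained flood raises the baseline and later intervals stop alerting; a production detector would likely need to handle that differently.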

Network Segmentation Strategies for Hybrid Environments

It's not a simple feat to implement network segmentation. Network managers

must address network architectural issues, obtain tools and methodologies,

review and enact security policies, practices and protocols, and -- in many

cases -- overcome political obstacles. ... The goal of network segmentation is

to place the most mission-critical and sensitive resources and systems under

comprehensive security for a finite ecosystem of users. From a business

standpoint, it's equally critical to understand the business value of each

network asset and to gain support from users and management before segmenting.

... Divide the network segments logically into security segments based on

workload, whether on premises, cloud-based or within an extranet. For example,

if the Engineering department requires secure access to its product

configuration system, only that team would have access to the network segment

that contains the Engineering product configuration system. ... A third prong

of segmented network security enforcement in hybrid environments is user

identity management. Identity and access management (IAM) technology

identifies and tracks users at a granular level based on their authorization

credentials in on-premises networks but not on the cloud.
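
The Engineering example above amounts to a default-deny mapping from segments to the teams allowed into them. A minimal Python sketch, with a hypothetical segment name and policy table chosen for illustration:

```python
# Hypothetical policy table: segment name -> teams allowed to reach it.
SEGMENT_ACCESS = {
    "engineering-product-config": {"engineering"},
}


def can_access(team: str, segment: str) -> bool:
    """Default deny: unknown segments and unlisted teams get no access."""
    return team in SEGMENT_ACCESS.get(segment, set())
```

In practice this policy would be enforced by firewalls, VLANs or cloud security groups rather than application code; the sketch only shows the shape of the rule.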

Convergence of AI and cybersecurity has truly transformed the CISO’s role

The most significant impact of AI in security at present is in automation and predictive analysis. Automation, especially when enhanced with AI, for example by integrating models like Copilot Security with tools like Microsoft Sentinel, allows organisations to monitor thousands of indicators of compromise in milliseconds and receive instant assessments. ... The convergence of AI and cybersecurity has truly transformed the CISO’s role, especially post-pandemic when user locations and systems have become unpredictable. Traditionally, CISOs operated primarily as reactive defenders responding to alerts and attacks as they arose. Now, with AI-driven predictive analysis, we’re moving into a much more proactive space. CISOs are becoming strategic risk managers, able to anticipate threats and respond with advanced tools. ... Achieving real-time threat detection in the cloud through AI requires the integration of several foundational pillars that work in concert to address the complexity and speed of modern digital environments. At the heart of this approach is the adoption of a Zero Trust Architecture: rather than assuming implicit trust based on network perimeters, this model treats every access request, whether to data, applications, or infrastructure, as potentially hostile, enforcing strict verification and comprehensive compliance controls.

Initial Access Brokers Selling Bundles, Privileges and More

"By the time a threat actor logs in using the access and privileged credentials

bought from a broker, a lot of the heavy lifting has already been done for them.

Therefore, it's not about if you're exposed, but whether you can respond before

the intrusion escalates." More than one attacker may use any given initial

access, either because the broker sells it to multiple customers, or because a

customer uses the access for one purpose - say, to steal data - then sells it on

to someone else, who perhaps monetizes their purchase by further ransacking data

and unleashing ransomware. "Organizations that unwittingly have their network

access posted for sale on initial access broker forums have already been

victimized once, and they are on their way to being victimized once again when

the buyer attacks," the report says. ... "Access brokers often create new local

or domain accounts, sometimes with elevated privileges, to maintain persistence

or allow easier access for buyers," says a recent report from cybersecurity firm

Kela. For detecting such activity, "unexpected new user accounts are a major red

flag." So too is "unusual login activity" to legitimate accounts that traces to

never-before-seen IP addresses, or repeat attempts that only belatedly succeed,

Kela said. "Watch for legitimate accounts doing unusual actions or accessing

resources they normally don't - these can be signs of account takeover."
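
The red flags Kela lists can be expressed as simple rules over an event log. The Python sketch below is an illustration only: the event format, field names and the failure threshold are assumptions, not taken from the report.

```python
def flag_events(events, known_accounts, known_ips, max_failures=3):
    """Return (user, reason) alerts for the red flags described above."""
    alerts = []
    failures = {}  # consecutive failed logins per user
    for event in events:
        user = event["user"]
        if event["type"] == "account_created" and user not in known_accounts:
            alerts.append((user, "unexpected new account"))
        elif event["type"] == "login_failure":
            failures[user] = failures.get(user, 0) + 1
        elif event["type"] == "login_success":
            if event["ip"] not in known_ips.get(user, set()):
                alerts.append((user, "login from never-before-seen IP"))
            if failures.get(user, 0) >= max_failures:
                alerts.append((user, "success after repeated failures"))
            failures[user] = 0  # reset the streak after a successful login
    return alerts
```

Rules like these only cover the account and login signals; spotting legitimate accounts doing unusual things would need a baseline of each account's normal behaviour.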
