Data Scraping With PHP and Python

The web holds much more data than any human can digest in a lifetime. To harness that data, you need not only access to it but also a scalable way to collect it so that you can organize and analyze it. That’s why you need web data scraping. Web scraping, also known as web harvesting, web data extraction, or screen scraping (and sometimes loosely called data mining), is a technique in which a computer program extracts large amounts of data from a website and saves it to a local file, database, or spreadsheet in a format you can use for your analysis. Web scraping saves a great deal of time because it automates the process of copying and pasting selected information from a page or even an entire website. Mastering data scraping can open up new possibilities for content analysis.
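As a rough illustration of the extract-then-save flow described above, here is a minimal Python sketch using only the standard library. The sample page, the choice of headline links inside `<h2>` elements, and the names `HeadlineParser` and `scrape_to_csv` are assumptions made for this example and do not come from the linked article. A real scraper would first download the page and should respect the site's terms of use.

```python
import csv
import io
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collects the text and href of every <a> found inside an <h2>."""

    def __init__(self):
        super().__init__()
        self.rows = []        # extracted (title, url) pairs
        self._in_h2 = False   # True while we are inside an <h2>
        self._href = None     # href of the link currently being read
        self._text = []       # text fragments of the current link

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
        elif tag == "a" and self._in_h2:
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.rows.append(("".join(self._text).strip(), self._href))
            self._href = None
        elif tag == "h2":
            self._in_h2 = False


def scrape_to_csv(html):
    """Parse the HTML and return the extracted rows as CSV text."""
    parser = HeadlineParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["title", "url"])
    writer.writerows(parser.rows)
    return out.getvalue()


# Hypothetical page content standing in for a downloaded document.
SAMPLE_PAGE = """
<html><body>
  <h2><a href="/posts/1">First post</a></h2>
  <p><a href="/about">About</a></p>
  <h2><a href="/posts/2">Second post</a></h2>
</body></html>
"""

if __name__ == "__main__":
    print(scrape_to_csv(SAMPLE_PAGE))
```

The CSV text can be written to a file or loaded into a spreadsheet. Only the two headline links are kept; the "About" link is skipped because it sits outside an `<h2>`.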

Building Cloud-Ready Applications into the Architecture

The classic enterprise application has multiple components, such as web servers, application servers, and database servers. Many of these applications were originally written during the client-server era, with the intent of running them on bare metal hardware. Despite their age, these types of applications can be made cloud-ready. Fundamentally, the components talk to each other over TCP connections using IP addresses and port numbers, often aided by DNS. Nothing about that structure prevents these applications from running on virtual machines or even containers instead, and if they can be run on either, they can be deployed to any public or private cloud. While applications like this cannot take full advantage of public cloud services the way their cloud-native brethren can, there are times when a classic enterprise application can be made cloud-ready and gain benefits without a complete rewrite.

The Digital Intelligence Of The World's Leading Asset Managers 2017

Where once the asset management sector was a digital desert, websites and social media channels now abound. Whilst this represents genuine progress, the content and functionality within them leave a lot to be desired in most cases. Quality search functionality is hard to find, websites resemble glorified CVs and blogs read like technical manuals. As for thought leadership, well, there’s little thought and no leadership. Social media channels, especially Twitter and LinkedIn, are swamped with relentless HR tweets and duplicate updates. It’s clear that asset managers are missing an opportunity to create content that resonates with FAIs and can build lasting two-way relationships. Over the following pages we present our findings in detail and take a closer look at the digital successes and failures within the world’s leading asset managers. We hope you find it helpful and if you have any questions please do get in touch.

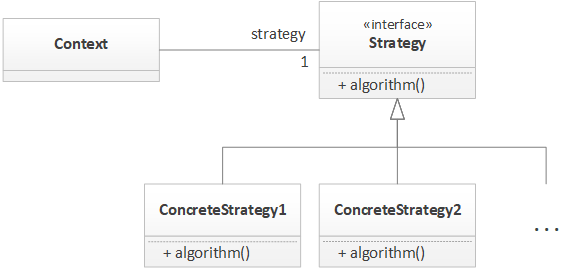

Java: The Strategy Pattern

The conditional statement is a core structure in nearly all software, and in many cases it serves a very simple function: to decide which algorithm is used in a specific context. For example, if we are creating a payment system, a conditional might exist to decide on the payment method, such as cash or credit card. In this case, we supply the same information to both algorithms (namely, the payment amount) and each performs its respective operations to process the payment. In essence, we are creating a series of algorithms, selecting one, and executing it. The purpose of the Strategy pattern is to encapsulate these algorithms into classes with the same interface. By using the same interface, we make the algorithms interchangeable from the client's point of view, so the client depends only on the interface that defines the algorithms rather than on any concrete algorithm.
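The payment example can be sketched in a few lines. The linked article targets Java; Python is used here only to keep the examples in this digest in one language, and the class names (`PaymentStrategy`, `CashPayment`, `CreditCardPayment`, `Checkout`) are illustrative assumptions, not taken from the article.

```python
from abc import ABC, abstractmethod


class PaymentStrategy(ABC):
    """Common interface shared by every payment algorithm."""

    @abstractmethod
    def pay(self, amount: float) -> str:
        """Process a payment and return a short receipt line."""


class CashPayment(PaymentStrategy):
    def pay(self, amount: float) -> str:
        return f"Paid {amount:.2f} in cash"


class CreditCardPayment(PaymentStrategy):
    def __init__(self, card_number: str):
        self.card_number = card_number

    def pay(self, amount: float) -> str:
        # Only the last four digits appear on the receipt.
        return f"Charged {amount:.2f} to card ending {self.card_number[-4:]}"


class Checkout:
    """Client code: it knows the interface, not the concrete algorithm."""

    def __init__(self, strategy: PaymentStrategy):
        self.strategy = strategy

    def complete(self, amount: float) -> str:
        return self.strategy.pay(amount)


if __name__ == "__main__":
    print(Checkout(CashPayment()).complete(10))
    print(Checkout(CreditCardPayment("4111111111111111")).complete(25.5))
```

`Checkout` contains no conditional on the payment method. Supporting a new method means adding another `PaymentStrategy` subclass, with no change to the client.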

The five D's of data preparation

Data preparation is the task of blending, shaping and cleansing data to get it ready for analytics or other business purposes. But what exactly does data preparation involve? How does it intersect with or differ from other data management functions and data governance activities? How does doing it well help business and IT users – and the organization overall? Data preparation is a formal component of many enterprise systems and applications maintained by IT, such as data warehousing and business intelligence. But it’s also an informal practice conducted by the business for ad hoc reporting and analytics, with IT and more tech-savvy business users (e.g., data scientists) routinely burdened by requests for customized data preparation. These days there’s growing interest in empowering business users with self-service tools for data preparation.

What’s Behind the Hype About Artificial Intelligence?

A lot of the hype originates from extrapolating current trends while ignoring the reality of taking something from a research paper to an engineered product. As a product manager responsible for building products using the latest AI technology, I am constantly trying to separate the hype from reality. The best way to do this is to combine the healthy skepticism of an engineer with the optimism of a researcher. So you need to understand the underlying technical principles driving the latest cool AI demo and be able to extrapolate only the parts of the technology that have firm technical grounding. For example, if you understand the underlying drivers of improvements in, say, speech recognition, it becomes easy to extrapolate the upcoming improvements in speech recognition quality.

Walmart deploys shelf-scanning robots to free up employees to help customers

The use of robots to check on out-of-stock items could also help save customers time, guaranteeing that more products would be in stock when they visited a store. Walmart also noted in the post that it hopes the technology makes the shopping experience more convenient. Automation, robotics especially, has been a sensitive subject in conversations around the future of work. While Walmart claims to be using the technology to complement its human workers and free them up to accomplish more complex tasks, the same isn't true for every implementation of the technology. In fast food, for example, a robot named Flippy has been used to make burgers. The growth of autonomous vehicles has also been predicted to eventually be a major disruptor of the trucking market, with manufacturers like Tesla pushing full-steam ahead on such efforts.

The perfect recipe for a top-notch cybersecurity professional

From a technical perspective, every cybersecurity professional must have a few core ingredients. The first ingredient is a tool such as Nessus, which is used for network vulnerability scanning. A cybersecurity professional must be able to use this tool to gain an understanding of critical and high vulnerabilities within a network and provide remediation strategies to improve boundary security. The second would then be Nmap, a network mapping tool that allows cybersecurity professionals to map the boundary of a network and research its vulnerable points. A new ingredient that has become more necessary and commonly used in the last few years is knowledge of cloud security. Technical knowledge of cloud architecture enables cybersecurity professionals to focus on identity management for cloud systems and accounts.

What might your IT organisation look like in 2030?

The IT organisation is also an innovation and enablement hub for both external and internal products and services, rather than a principally internal technology function as it was in 2017. The IT domain is largely concerned with an appropriate balance of inventing, experimenting and optimising/tuning. To innovate products, the CIO engages people from the arts through to the sciences. IT domains in 2030 need anthropologists to interpret behaviours and psychology. They need designers to imagine and create products and services to optimise customer experiences. Architects and digital urban planners model and shepherd the digital environment. Engineers build components of external and internal IT products and services connected in a mesh across the Internet of Everything. Data scientists craft ever-smarter machine algorithms and attend to the availability and quality of data that feeds the systems' learning.

A Checklist for Securing the Internet of Things

When it comes to connected devices, it isn't always clear when a device is compromised. Today, nearly all employees have their smartphones with them at work. These personal devices are often unsecured and could become vulnerable due to malicious applications. Using risk and behavior analytics, the enterprise can accurately and efficiently monitor how IoT devices are behaving in order to identify whether a device has deviated from its normal limits. Any deviation can promptly signal a compromised device. We can learn from how the credit card industry addresses fraudulent activity across accounts. When it comes to transactions, once an action is deemed out of line with the customer's general spending habits, the credit card company restricts access to the card. This entire process is based on behavioral analytics that are used to determine the amount of risk associated with abnormal behaviors.
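One simple way to picture "deviation from normal limits" is a z-score check against a device's own history. The sketch below is an assumption-laden illustration, not the method described in the linked article: the metric (outbound traffic per minute), the sample readings, the threshold of three standard deviations, and the function name `is_anomalous` are all made up for this example.

```python
from statistics import mean, stdev


def is_anomalous(history, observation, threshold=3.0):
    """Return True if the observation lies more than `threshold`
    sample standard deviations away from the historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two historical readings")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change counts as a deviation.
        return observation != mu
    return abs(observation - mu) / sigma > threshold


# Hypothetical baseline: outbound KB per minute for one IoT sensor.
baseline = [100, 102, 98, 101, 99, 100, 103, 97]

if __name__ == "__main__":
    print(is_anomalous(baseline, 104))  # within the device's usual range
    print(is_anomalous(baseline, 150))  # far outside the usual range
```

Production systems typically combine many signals and adapt the baseline over time; a single-metric threshold like this one only conveys the basic idea.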

Quote for the day:

"You have to have your heart in the business and the business in your heart." -- An Wang
