Quote for the day:

"Nothing in the world is more common than unsuccessful people with talent." -- Anonymous

The rise of the chief trust officer: Where does the CISO fit?

Trust is touted as a differentiator for organizations looking to strengthen

customer confidence and find a competitive advantage. Trust cuts across

security, privacy, compliance, ethics, customer assurance, and internal culture.

For the custodians of trust, that’s a wide-ranging remit without the obvious

definition of other C-suite roles. Typically, the CISO continues to own controls

and protection, while the CTrO broadens the remit to reputation, ethics, and

customer confidence. Where cybersecurity reports to the CTrO, it is a way to

escape IT and the competing priorities with the CIO. This partnership

repositions security from ‘department of no’ to business enabler, Forrester

notes. ... Patel says that strong alignment between customer trust and business

strategy is critical. “If you don’t have credibility in the marketplace, with

your partners and customers, your business strategy is dead on arrival,” he

tells CSO. Whereas a CISO’s day-to-day responsibilities include checking on the

SOC, reviewing alerts, GRC, managing other security operations and board

reporting, the chief trust officer role weaves customer trust throughout, says

Patel. “It’s really bringing that trust lens into the decision-making equation

and challenging colleagues and partners to think in the same manner.” ... There

is also the question of how organizations operationalize trust — and can it be

measured? No off-the-shelf platform exists, so CTrOs must build their own

dashboards combining customer and employee metrics to track trends and identify

early signs of trust erosion.
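As a rough illustration of the home-built dashboard idea above, the sketch below averages a few customer and employee metrics into a single index and flags a sudden drop against recent periods. The metric names, the 0-100 scale, the three-period window, and the five-point threshold are all assumptions made for the example; the article does not describe how any CTrO actually computes this.

```python
from statistics import mean


def trust_index(metrics: dict) -> float:
    """Average of metrics that are assumed to be normalised to 0-100."""
    return mean(metrics.values())


def erosion_flag(history: list, window: int = 3, drop: float = 5.0) -> bool:
    """Return True if the latest index sits at least `drop` points below
    the mean of the previous `window` periods (hypothetical rule)."""
    if len(history) <= window:
        return False  # not enough history to form a baseline
    baseline = mean(history[-window - 1:-1])
    return baseline - history[-1] >= drop


# Hypothetical metric names and values for one reporting period.
this_period = {"customer_nps": 60, "employee_engagement": 80}
index = trust_index(this_period)

# Hypothetical index values for the last four periods.
print(index, erosion_flag([70, 71, 69, 62]))
```

A real dashboard would likely weight the inputs differently and use a more robust trend test; the point is only that "early signs of trust erosion" need an explicit baseline and threshold.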

When Machines Attack Machines: The New Reality of AI Security

Attackers decomposed tasks and distributed them across thousands of instructions fed into multiple Claude instances, masquerading as legitimate security tests and circumventing guardrails. The campaign’s velocity and scale dwarfed what human operators could manage, representing a fundamental leap for automated adversarial capability. Anthropic detected the operation by correlating anomalous session patterns and observing operational persistence achievable only through AI-driven task decomposition at superhuman speeds. Though AI-generated attacks sometimes faltered (hallucinating data, forging credentials, or overstating findings), the impact proved significant enough to trigger immediate global warnings and precipitate major investments in new safeguards. Anthropic concluded that this development brings advanced offensive tradecraft within reach of far less sophisticated actors, marking a turning point in the balance between AI’s promise and peril. ... AI-based offensive operations exploit vulnerabilities across entire ecosystems instantly with the goal of exfiltrating critical intelligence and causing damage to the target. Offensive AI iterates adversarial attacks and novel exploits on a scale human red teams cannot attain. Defenses that work well against traditional techniques often fail outright under continuous, machine-driven attack cycles.

From chatbots to colleagues: How agentic AI is redefining enterprise automation

According to Flores, agentic AI changes that equation. Each agent has a name,

a mission defined by its system prompt, and a connection to company data

through retrieval-augmented generation. Many of them also wield tools such as

CRMs, databases, or workflow platforms. “An agent is like hiring a new

employee who already knows your systems on day one,” Flores said. “It doesn’t

just respond — it executes.” This new mode of collaboration also changes how

employees interact with technology. Flores noted that his clients often name

their agents, treating them as teammates rather than tools. “When marketing

needs to check something, they’ll say, ‘Let’s ask Marco,’” he added. “That

naming makes adoption easier — it feels human.” ... One of IBM’s first success

stories came with password resets — an unglamorous but ubiquitous use case.

Two agents now collaborate: one triages the request, while the other verifies

credentials and performs the reset, all under the company’s

identity-and-access-management system. Each agent has its own digital

identity, ensuring audit trails and preventing impersonation. ... Agentic AI

isn’t a software upgrade — it’s a redesign of how digital work gets done. Each

of the leaders interviewed for this story emphasized that success depends as

much on data and governance as on culture and experimentation. Before moving

beyond chatbots, IT directors should ask not only “Can we do this?” but “Where

should we start — and how do we do it safely?”
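The password-reset flow described above (one agent triages, a second verifies credentials and performs the reset, each under its own identity with an audit trail) can be sketched roughly as follows. This is an illustrative sketch, not IBM's implementation: the agent IDs, the `AuditLog` class, and the in-memory `DIRECTORY` standing in for an identity-and-access-management system are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical stand-in for an identity-and-access-management directory.
DIRECTORY = {"alice": {"employee_id": "E-1001", "password": "old-secret"}}


@dataclass
class AuditLog:
    """Records which agent identity performed which action."""
    entries: list = field(default_factory=list)

    def record(self, agent_id: str, action: str, user: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.entries.append((stamp, agent_id, action, user))


@dataclass
class TriageAgent:
    agent_id: str  # each agent carries its own identity

    def handle(self, message: str, user: str, log: AuditLog) -> bool:
        """Return True if the request looks like a password reset."""
        text = message.lower()
        is_reset = "password" in text and "reset" in text
        log.record(self.agent_id, "triaged:" + ("reset" if is_reset else "other"), user)
        return is_reset


@dataclass
class ResetAgent:
    agent_id: str

    def handle(self, user: str, employee_id: str, new_password: str, log: AuditLog) -> bool:
        """Verify the caller against the directory, then perform the reset."""
        record = DIRECTORY.get(user)
        if record is None or record["employee_id"] != employee_id:
            log.record(self.agent_id, "reset_denied", user)
            return False
        record["password"] = new_password
        log.record(self.agent_id, "reset_done", user)
        return True


def process_request(message, user, employee_id, new_password, log):
    """Route a request through the triage agent, then the reset agent."""
    triage = TriageAgent("agent-triage")
    reset = ResetAgent("agent-reset")
    if not triage.handle(message, user, log):
        return False
    return reset.handle(user, employee_id, new_password, log)
```

Because every log entry carries the acting agent's ID, a reviewer can tell which agent classified the request and which one changed the credential, which is the property the article attributes to per-agent identities.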

What to look for in an AI implementation partner

Good AI implementation partners need not be limited to big professional

services firms. Smaller firms such as AI consultancies and startups can

provide lots of value. Regardless, many organizations require outside

expertise when deploying, monitoring, and maintaining AI tools and services.

... “Many firms understand AI tools at a surface level, but what truly matters

is the ability to contextualize AI within the nuances of a specific industry,”

says Hrishi Pippadipally, CIO at accounting and business advisory firm Wiss.

... An effective partner must be able to balance innovation with the

guardrails of security, privacy, and industry-specific compliance, Agrawal

adds. “Otherwise, IT leaders will inherit long-term liabilities,” he says. ...

“The mistake many organizations make is focusing only on technical credentials

or flashy demos,” Agrawal says. “What’s often overlooked and what I prioritize

is whether the partner can embed AI into existing workflows without disrupting

business continuity. A good partner knows how to integrate AI so that it

doesn’t just work in theory, but delivers impact in the complex reality of

enterprise operations.” ... “Most evaluation checklists focus on the technical

side — security, compliance, data governance, etc.,” says Sara Gallagher,

president of The Persimmon Group, a business management consultancy. “While

that matters, too many execs are skipping over the thornier questions.

Good AI implementation partners need not be limited to big professional

services firms. Smaller firms such as AI consultancies and startups can

provide lots of value. Regardless, many organizations require outside

expertise when deploying, monitoring, and maintaining AI tools and services.

... “Many firms understand AI tools at a surface level, but what truly matters

is the ability to contextualize AI within the nuances of a specific industry,”

says Hrishi Pippadipally, CIO at accounting and business advisory firm Wiss.

... An effective partner must be able to balance innovation with the

guardrails of security, privacy, and industry-specific compliance, Agrawal

adds. “Otherwise, IT leaders will inherit long-term liabilities,” he says. ...

“The mistake many organizations make is focusing only on technical credentials

or flashy demos,” Agrawal says. “What’s often overlooked and what I prioritize

is whether the partner can embed AI into existing workflows without disrupting

business continuity. A good partner knows how to integrate AI so that it

doesn’t just work in theory, but delivers impact in the complex reality of

enterprise operations.” ... “Most evaluation checklists focus on the technical

side — security, compliance, data governance, etc.,” says Sara Gallagher,

president of The Persimmon Group, a business management consultancy. “While

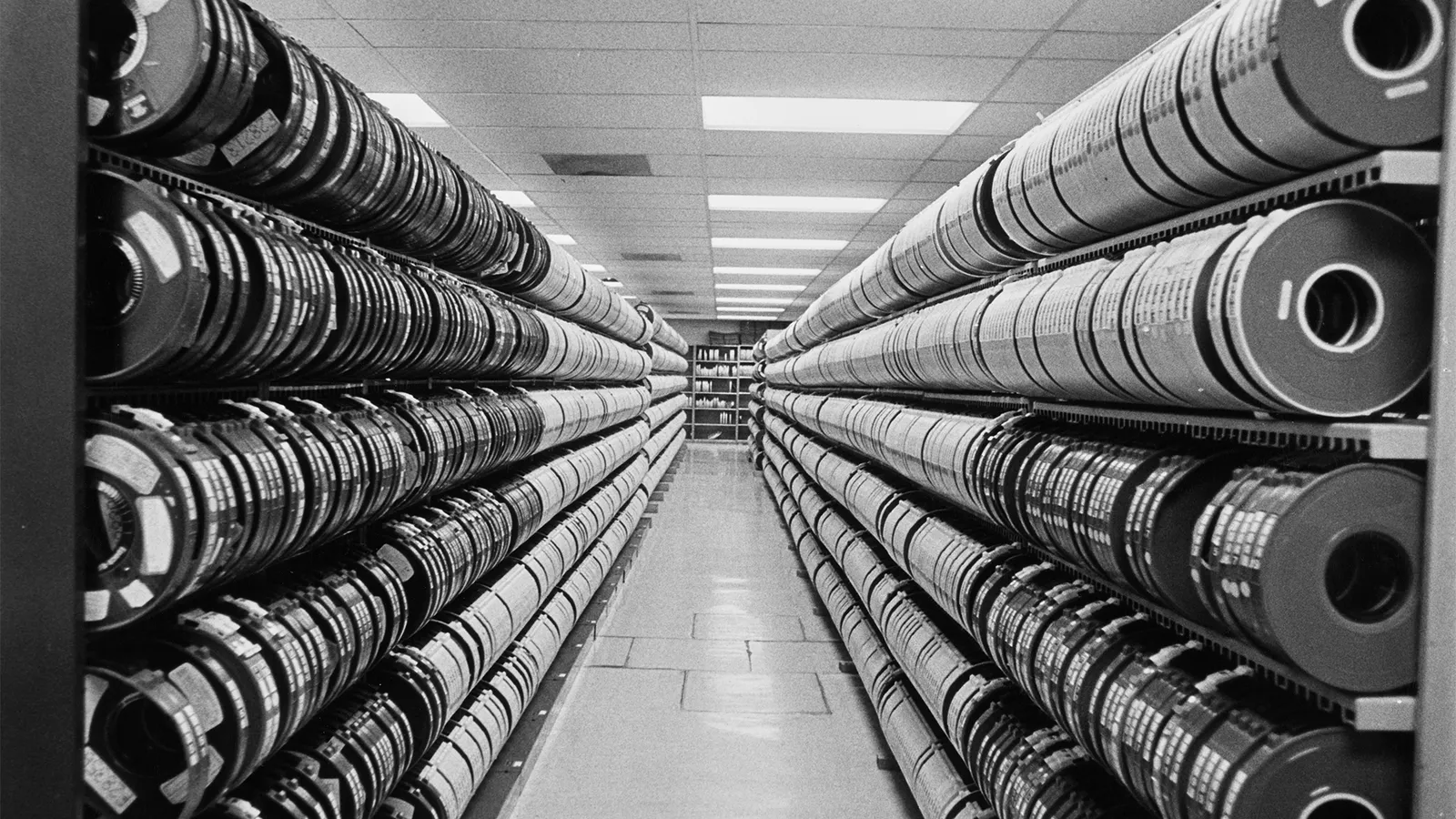

that matters, too many execs are skipping over the thornier questions.Magnetic tape is going strong in the age of AI, and it's about to get even better

“Aramid permits the manufacture of significantly thinner and smoother media,

enabling longer tape lengths in a standard LTO Ultrium cartridge form

factor,” the organization noted in a statement. “This material innovation

provides an extra 10 TB of native capacity than the currently available 30

TB LTO-10 cartridge, which is manufactured using different materials.”

Stephen Bacon, VP for data protection solutions product management at HPE,

said the new cartridges are aimed at enterprises spanning an array of

industries dealing with high data volumes, from manufacturing to financial

services. “AI has turned archives into strategic assets,” Bacon commented.

... Tape storage has a number of distinct advantages, including low cost,

durability, and easy portability. According to previous analysis from the

LTO Program, companies using tape recorded an 86% lower total cost of

ownership (TCO) compared to disk storage. TCO compared to cloud storage was

also 66% lower across a 10-year period, figures showed. Notably, the use of

tape for unstructured data storage also adds to the appeal, with this now

vital in the training process for large language models (LLMs). ...

Long-term, tape storage is only going to improve, at least if the LTO

Program’s roadmap is to be believed. Through generations 11 through to 14,

enterprises can expect to see significant capacity gains, eventually peaking

with a 913 TB cartridge.
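The capacity and cost figures quoted above reduce to simple arithmetic, worked through below. The 30 TB, 10 TB, 86%, and 66% values come from the text; the 1 PB archive size and the 100,000 baseline costs are hypothetical numbers chosen only to make the percentages concrete, and the disk and cloud baselines are separate comparisons rather than the same starting cost.

```python
import math


def cost_after_reduction(baseline: float, percent_lower: int) -> float:
    """Cost that is `percent_lower` percent below a baseline cost."""
    return baseline * (100 - percent_lower) / 100


# Capacity: the new cartridge adds 10 TB to the 30 TB LTO-10 cartridge.
LTO10_NATIVE_TB = 30
EXTRA_TB = 10
new_native_tb = LTO10_NATIVE_TB + EXTRA_TB  # 40 TB native

# Cartridges needed for a hypothetical 1 PB (1000 TB) archive.
ARCHIVE_TB = 1000
cartridges_30tb = math.ceil(ARCHIVE_TB / LTO10_NATIVE_TB)  # 34
cartridges_40tb = math.ceil(ARCHIVE_TB / new_native_tb)    # 25

# TCO: hypothetical baselines of 100,000 for disk and for cloud.
tape_vs_disk = cost_after_reduction(100_000, 86)   # 14000.0
tape_vs_cloud = cost_after_reduction(100_000, 66)  # 34000.0

print(new_native_tb, cartridges_30tb, cartridges_40tb, tape_vs_disk, tape_vs_cloud)
```

In other words, an 86% lower TCO means tape costs about one-seventh of the disk baseline, and a 66% lower TCO means roughly one-third of the cloud baseline over the 10-year period cited.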

The rebellion against robot drivel

LLMs are “lousy writers and (most importantly!) they are not you,” Cantrill

argues. That “you” is what persuades. We don’t read Steinbeck’s The Grapes

of Wrath to find a robotic approximation of what desperation and hurt seem

to be; we read it because we find ourselves in the writing. No one needs to

be Steinbeck to draft press releases, but if that press release sounds

samesy and dull, does it really matter that you did it in 10 seconds with an

LLM versus an hour on your own mental steam? A few years ago, a friend in

product marketing told me that an LLM generated better sales collateral than

the more junior product marketing professionals he’d hired. His verdict was

that he would hire fewer people and rely on LLMs for that collateral, which

only got a few dozen downloads anyway, from a sales force that numbered in

the thousands. Problem solved, right? Wrong. If few people are reading the

collateral, it’s likely the collateral isn’t needed in the first place.

Using LLMs to save money on creating worthless content doesn’t seem to be

the correct conclusion. Ditto using LLMs to write press releases or other

marketing content. I’ve said before that the average press release sounds

like it was written by a computer (and not a particularly advanced

computer), so it’s fine to say we should use LLMs to write such drivel. But

isn’t it better to avoid the drivel in the first place? Good PR people think

about content and its place in a wider context rather than just mindlessly

putting out press releases.

AI’s Impact on Mental Health

“Talking to a therapist can be intimidating, expensive, or complicated to

access, and sometimes you need someone—or something—to listen at that exact

moment,” said Stephanie Lewis, a licensed clinical social worker and

executive director of Epiphany Wellness addiction and mental health

treatment centers. Chatbots allow people to vent, process their feelings,

and get advice without worrying about being judged or misunderstood, Lewis

said. “I also see that people who struggle with anxiety, social discomfort,

or trust issues sometimes find it easier to open up to a chatbot than a real

person.” Users are “often looking for a safe space to express emotions,

receive reassurance, or find quick stress-management strategies,” added Dr.

Bryan Bruno, medical director of Mid City TMS, a New York City-based medical

center focused on treating depression. ... “Chatbots created for therapy are

often built with input from mental health professionals and integrate

evidence-based approaches, like cognitive behavioral therapy techniques,”

Tse said. “They can prompt reflection and guide users toward actionable

steps.” Lewis agreed that some therapeutic chatbots are designed with real

therapy techniques, like Cognitive Behavioral Therapy (CBT), which can help

manage stress or anxiety. “They can guide users through breathing exercises,

mindfulness techniques, and journaling prompts, all great tools,” she

said.

Holistic Engineering: Organic Problem Solving for Complex Evolving Systems & Late projects.

Architectures that drift from their original design. Code that mysteriously

evolves into something nobody planned. These persistent problems in software

development often stem not from technical failures ... Holistic engineering

is the practice of deliberately factoring these non-technical forces into

our technical decisions, designs, and strategies. ... Holistic engineering

involves considering, during technical design, not only the traditional

technical factors but also all the other non-technical forces that will

influence your system regardless. By acknowledging these forces,

teams can view the problem as an organic system and influence, to some

extent, various parts of the system. ... Consider the actual information

structure within your organization. Understanding actual workflow patterns

and communication channels reveals how work truly gets accomplished. These

communication patterns often differ significantly from the formal hierarchy.

Next, identify which processes could block your progress. For example, some

organizations require approval from twenty people, including the CTO, to

decide on a release. ... Organizations that embrace holistic engineering

gain predictable control over forces that typically derail technical

projects. Instead of reacting to "unforeseen" delays and architectural

drift, teams can anticipate and plan for organizational constraints that

inevitably influence technical outcomes.

At its heart, industrial AI is about automating and optimising business

processes to improve decision-making, enhance efficiency and increase

profitability. It requires the collection of vast volumes of data from

sources like IoT sensors, cameras, and back-office systems, and the

application of machine and deep learning algorithms to surface insights. In

some cases, the AI powers robots to supercharge automation, and in others,

it utilises edge computing for faster, localised processing. Agentic AI

helps firms go even further, by working autonomously, dynamically and

intelligently to achieve the goals it is set. ... “You get the data in from

IoT and you trigger that as an anomaly,” says Pederson. “You analyse the

anomaly against all your historic records – other incidents that have

happened with customers and how they have been fixed. You relate it to your

knowledge base articles. And then you relate it to your inventory on your

service vans, like which service vans and which technicians are equipped to

do the job. “So it’s the whole estate of structured, unstructured and

processed data. In the past, they would send a technician out, and they

could get it right 84% of the time. Now they have improved their first-time

fix rate to 97%.” Both this and the aforementioned field service deployment

feature an “agentic dispatcher” which autonomously creates and publishes the

schedules to the relevant service technicians, updates their calendar and

suggests the best route to take. “In the very near future, AI agents will

not only be helping to address work for people behind a desk, but guiding

robots directly,” says Pederson.
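A minimal sketch of the matching step Pederson describes, choosing a technician whose skills and van inventory fit the anomaly, might look like the following. It is not the vendor's system: the `Technician` and `Anomaly` types, the "nearest equipped technician" rule, and the sample data are assumptions, and in practice the required skill and part would presumably be inferred from historic incidents and knowledge base articles rather than supplied directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Technician:
    name: str
    skills: frozenset
    van_inventory: frozenset
    distance_km: float


@dataclass(frozen=True)
class Anomaly:
    asset_id: str
    required_skill: str  # assumed to come from historic fixes
    required_part: str   # assumed to come from knowledge base articles


def pick_technician(anomaly, technicians):
    """Return the nearest technician who has both the skill and the part,
    or None if nobody is equipped for the job."""
    equipped = [
        t for t in technicians
        if anomaly.required_skill in t.skills
        and anomaly.required_part in t.van_inventory
    ]
    return min(equipped, key=lambda t: t.distance_km, default=None)


def repeat_visits(jobs: int, first_time_fix_percent: int) -> int:
    """Jobs that need a second visit at a given first-time fix rate."""
    return jobs * (100 - first_time_fix_percent) // 100


# Per 1,000 jobs, the quoted rates imply 160 repeat visits at 84%
# and 30 repeat visits at 97%.
print(repeat_visits(1000, 84), repeat_visits(1000, 97))
```

The second function puts the quoted improvement in concrete terms: moving from an 84% to a 97% first-time fix rate cuts repeat visits from 160 to 30 per 1,000 jobs.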

What security pros should know about insurance coverage for AI chatbot wiretapping claims

There are subtle differences in the way courts are viewing privacy

litigation arising from the use of AI chatbots in comparison to litigation

involving analytical tools like session replay or cookies. Both claims

involve allegations that a third party is intercepting communications

without proper consent, often under state wiretapping laws, but the legal

arguments and defenses vary because the data being collected is different.

... Whether or not an exclusion will ultimately impact coverage depends both

on the specific language of the exclusion and also the allegations raised in

the underlying lawsuit. For example, broadly worded exclusions with

“catch-all” phrases precluding coverage for any statutory violation may be

more difficult for a policyholder to overcome than an exclusion that

identifies by name specific statutes. As these claims are relatively new, we

have yet to see significant examples of how this plays out in the context of

insurance coverage litigation. However, we saw similar coverage arguments in

the context of insurance coverage litigation where the underlying suit

alleged violations of the Biometric Information Privacy Act (BIPA). ... To

help mitigate risks, organizations should review their user consent

mechanisms for AI bot communications. Consent does not always mean signing a

form, but could include prominently displaying chatbot privacy notices

before any data collection, providing easy access to the business’s privacy

policy detailing how chatbot interactions are stored, and using automated

disclaimers at the start of each chat session.
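One way to picture the "automated disclaimer at the start of each chat session" is a session object that shows a notice first and stores nothing until the user acknowledges it. The sketch below is hypothetical throughout: the `ChatSession` class, the notice wording, and the "I agree" acknowledgement are illustrative choices, and whether a given mechanism satisfies a particular wiretapping statute is a legal question the code cannot answer.

```python
from dataclasses import dataclass, field

# Hypothetical notice text; real wording should come from counsel.
PRIVACY_NOTICE = (
    "This chat is handled by an automated assistant. "
    "Messages may be recorded and shared with a service provider. "
    "See our privacy policy for details. Reply 'I agree' to continue."
)


@dataclass
class ChatSession:
    consented: bool = False
    transcript: list = field(default_factory=list)

    def start(self) -> str:
        """Show the privacy notice before any data is collected."""
        return PRIVACY_NOTICE

    def receive(self, message: str) -> str:
        """Store and answer messages only after consent is acknowledged."""
        if not self.consented:
            if message.strip().lower() == "i agree":
                self.consented = True
                return "Thanks. How can I help?"
            # Nothing is stored; the notice is shown again.
            return PRIVACY_NOTICE
        self.transcript.append(message)
        return "(assistant reply goes here)"
```

The design choice worth noting is that the pre-consent message is neither answered nor added to the transcript, so no chat content is retained before the notice has been acknowledged.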
