Quote for the day:

“What seems to us as bitter trials are often blessings in disguise.” -- Oscar Wilde

Autonomous Agents – Redefining Trust and Governance in AI-Driven Software

Agents are no longer confined to code generation. They automate tasks across the

full lifecycle: from coding and testing to packaging, deploying, and monitoring.

This shift reflects a move from static pipelines to dynamic orchestration. A new

developer persona is emerging: the Agentic Engineer. These professionals are not

traditional coders or ML practitioners. They are system designers: strategic

architects of intelligent delivery systems, fluent in feedback loops, agent

behavior, and orchestration across environments. ... To scale agentic AI safely,

enterprises must build more than pipelines – they must build platforms of

accountability. This requires a System of Record for AI Agents: a unified,

persistent layer that treats agents as first-class citizens in the software

supply chain. This system must also serve as the foundation for regulatory

compliance. As AI regulations evolve globally – covering everything from

automated decision-making to data residency and sovereignty – enterprises must

ensure that every agent action, dataset, and interaction complies with relevant

laws. A well-architected System of Record doesn’t just track activity; it

injects governance and compliance into the core of agent workflows, ensuring

that AI operates within legal and ethical boundaries from the start.
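
One way to picture a System of Record that both tracks activity and enforces policy is the minimal sketch below. It assumes a simple allow-list policy and an in-memory log; the names `SystemOfRecord`, `ActionRecord`, `authorize`, and the agent and action strings are all hypothetical and not taken from the article.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ActionRecord:
    """One immutable entry in the audit trail (hypothetical shape)."""
    agent_id: str
    action: str
    allowed: bool
    timestamp: str


@dataclass
class SystemOfRecord:
    # Assumed policy model: agent_id -> set of actions it may perform.
    allowed_actions: dict
    log: list = field(default_factory=list)

    def authorize(self, agent_id, action):
        """Check the policy and record the attempt, whether allowed or not."""
        allowed = action in self.allowed_actions.get(agent_id, set())
        stamp = datetime.now(timezone.utc).isoformat()
        self.log.append(ActionRecord(agent_id, action, allowed, stamp))
        return allowed


sor = SystemOfRecord(allowed_actions={"build-agent": {"run_tests", "package"}})
ok = sor.authorize("build-agent", "run_tests")
denied = sor.authorize("build-agent", "deploy_to_production")
print(ok, denied, len(sor.log))
```

The point of the sketch is that the policy check and the audit entry happen in the same call, so a denied attempt is recorded just like an allowed one.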

New AI training method creates powerful software agents with just 78 examples

The problem is that current training frameworks assume that higher agentic

intelligence requires a lot of data, as has been shown in the classic scaling

laws of language modeling. The researchers argue that this approach leads to

increasingly complex training pipelines and substantial resource requirements.

Moreover, in many areas, data is scarce, hard to obtain, and very

expensive to curate. However, research in other domains suggests that more data

is not necessarily needed to achieve LLM training objectives.

... The LIMI framework demonstrates that sophisticated agentic intelligence can

emerge from minimal but strategically curated demonstrations of autonomous

behavior. Key to the framework is a pipeline for collecting high-quality

demonstrations of agentic tasks. Each demonstration consists of two parts: a

query and a trajectory. A query is a natural language request from a user, such

as a software development requirement or a scientific research goal. ...

“This discovery fundamentally reshapes how we develop autonomous AI systems,

suggesting that mastering agency requires understanding its essence, not scaling

training data,” the researchers write. “As industries transition from thinking

AI to working AI, LIMI provides a paradigm for sustainable cultivation of truly

agentic intelligence.”
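
The two-part demonstration described above (a query plus a trajectory) could be represented roughly as follows. This is only an illustrative sketch: the excerpt does not say what a trajectory contains, so the `Step` class, its `kind` labels, and the sample content are assumptions rather than the LIMI authors' actual format.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    # Hypothetical labels; the excerpt does not define trajectory contents.
    kind: str      # e.g. "reasoning", "tool_call", "observation"
    content: str


@dataclass
class Demonstration:
    query: str                                      # natural language user request
    trajectory: list = field(default_factory=list)  # ordered steps taken to fulfil it


demo = Demonstration(
    query="Add input validation to the signup form",
    trajectory=[
        Step("reasoning", "The form currently accepts empty email addresses."),
        Step("tool_call", "edit signup.py"),
        Step("observation", "Tests pass."),
    ],
)
print(demo.query, len(demo.trajectory))
```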

CISOs advised to rethink vulnerability management as exploits sharply rise

The widening gap between exposure and response makes it impractical for security

teams to rely on traditional approaches. The countermeasure is not “patch

everything faster,” but “patch smarter” by taking advantage of security

intelligence, according to Lefkowitz. Enterprises should evolve beyond reactive

patch cycles and embrace risk-based, intelligence-led vulnerability remediation.

“That means prioritizing vulnerabilities that are remotely exploitable, actively

exploited in the wild, or tied to active adversary campaigns while factoring in

business context and likely attacker behaviors,” Lefkowitz says. ... Yüceel

adds: “A risk-based approach helps organizations focus on the threats that will

most likely affect their infrastructure and operations. This means organizations

should prioritize vulnerabilities that can be considered exploitable, while

de-prioritizing vulnerabilities that can be effectively mitigated or defended

against, even if their CVSS score is rated critical.” ... “Smart organizations

are layering CVE data with real-time threat intelligence to create more nuanced

and actionable security strategies,” Rana says. Instead of abandoning these

trusted sources, effective teams are getting better at using them as part of a

broader intelligence picture that helps them stay ahead of the threats that

actually matter to their specific environment.
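
The risk-based prioritization described above can be sketched as a scoring function. The weights, field names, and sample entries below are invented for illustration and do not come from the article or any vendor; the sketch only shows how exploitability signals and mitigation status can outweigh a raw CVSS score.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    vuln_id: str                 # placeholder identifiers, not real CVEs
    cvss: float
    remotely_exploitable: bool
    exploited_in_wild: bool
    tied_to_campaign: bool
    effectively_mitigated: bool
    asset_criticality: int       # business context: 1 (low) to 3 (high)


def priority(v):
    """Hypothetical score: CVSS adjusted by threat intelligence and context."""
    score = v.cvss
    if v.remotely_exploitable:
        score += 2
    if v.exploited_in_wild:
        score += 5
    if v.tied_to_campaign:
        score += 3
    score *= v.asset_criticality
    if v.effectively_mitigated:
        score *= 0.2  # de-prioritize what existing defenses already cover
    return score


vulns = [
    Vulnerability("EXAMPLE-A", 9.8, True, False, False, True, 2),
    Vulnerability("EXAMPLE-B", 6.5, True, True, False, False, 3),
    Vulnerability("EXAMPLE-C", 7.0, False, False, False, False, 1),
]
ranked = sorted(vulns, key=priority, reverse=True)
print([v.vuln_id for v in ranked])
```

With these made-up weights, the actively exploited medium-severity item ranks first and the mitigated critical-severity item ranks last, which is the behavior the quoted experts describe.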

Modernizing Security and Resilience for AI Threats

For IT leaders, there may be concerns about the complexity and the risks of

downtime and data loss. Operational leaders typically think of the impacts

modernization will have on staffing demands and business continuity. And it’s

easy for security and compliance leaders to be worried about meeting

regulatory standards without exposing the company’s data to new attacks. Most

importantly, executive leadership tends to be hesitant due to concerns

around the total investment costs and disruption to innovation and revenue

growth. While each leader may have their valid concerns, the risk of inaction

is much greater. ... Fortunately, modernization doesn’t mean you need to take

on a massive overhaul of your organization’s operations. Modernizing in place

is an alternative solution that can be a sustainable, incremental strategy

that improves stability, security, and performance without putting

mission-critical systems at risk. When leaders can align on business

continuity needs and concerns, they can develop low-risk approaches that still

move operations forward while achieving long-term organizational goals. ... A

modernization journey can take many forms. Whether updating your on-prem system

or migrating to a hybrid-cloud environment, modernization is a strategic

initiative that can improve and bolster your company’s strength against

potential data breaches.

Navigating AI Frontier — Role of Quality Engineering in GenAI

In the GenAI era, the role of Quality Engineering (QE) is under the spotlight

like never before. Some whisper that QE may soon be obsolete. After all, if

developer agents can code autonomously, why not let GenAI-powered QE agents

generate test cases from user stories, synthesize test data, and automate

regression suites with near-perfect precision? Playwright and its peers are

already showing glimpses of this future. In corporate corridors, by the water

coolers, and in smoke breaks, the question lingers: Are we witnessing the sunset

of QE as a discipline? The reality, however, is far more nuanced. QE is not

disappearing; it is being reshaped, redefined, and elevated to meet the demands

of an AI-driven world. ... if test scripts pose one challenge, test data is an

even trickier frontier. For testers, data that mirrors production is a blessing;

data that strays too far is a nightmare. Left to itself, a large language model

will naturally try to generate test data that looks very close to production.

That may be convenient, but here’s the real question: can it stand up to

compliance scrutiny? ... What we’ve explored so far only scratches the surface

of why LLMs cannot and should not be seen as replacements for Quality

Engineering. Yes, they can accelerate certain tasks, but they also expose blind

spots, compliance risks, and the limits of context-free automation.

Are Unified Networks Key to Cyber Resilience?

Fragmentation usually stems from a mix of issues. It can start with well-meaning decisions to buy tools for specific problems. Over time, this creates siloed data, consoles and teams, and it can take a lot of additional work to manage all the information coming from different sources. Ironically, instead of improving security, it can introduce new risks. Another factor is the misalignment of business processes as needs change. As business needs evolve and grow, the pressure to address specific requirements can drive IT and security processes in different directions. And finally, there is shadow IT, where employees attach new devices and applications to the network that haven’t been approved. If IT and security teams can’t keep pace with business initiatives, other teams across the organisation may seek to find their own solutions, sometimes bypassing official processes and adding to fragmentation. ... The bigger issue is that security teams risk becoming the ‘department of no’ instead of business enablers. A unified approach can help address this. By consolidating networking, security and observability into one unified platform, organisations have a single source of truth for managing network security. They can even automate reporting in some platforms, eliminating hours of manual work. With a single view of the entire network instead of putting together puzzle pieces from various applications, security teams see the big picture instantly, allowing them to prioritise what matters, respond faster and avoid burnout.

How CIOs Balance Emerging Technology and Technical Debt

"Technical debt isn't just an IT problem -- it's an innovation roadblock."

Briggs pointed to Deloitte data showing 70% of technology leaders cite technical

debt as their number one productivity drain. His advice? Take inventory before

you innovate. "Know what's working versus what's just barely hanging on, because

adding AI to broken processes doesn't fix them, it just breaks them faster," he

said. ... "Everything kind of boils down to how the organizations are

structured, how your teams are structured, what the goals are per team and what

you're delivering," Caiafa said. At SS&C, some teams focus solely on

maintaining legacy systems, while others support the integration of newer

technologies. But, Caiafa said, the dual structure doesn't eliminate the

challenge: Technical debt still accumulates as newer technologies are adopted.

He advised CIOs to stay disciplined about prioritizing value. At SS&C,

the approach is straightforward: "If it's not going to help us or make a

material impact on what we're doing day to day, then it's not going to be an

area of focus," he said. ... "Technical debt isn't just legacy code -- it's the

accumulation of decisions made without long-term clarity," he said. Profico

urged CIOs to embed architectural thinking into every IT initiative, align with

business strategy and adopt new technologies in an incremental manner --

while avoiding "the urge to over-index on shiny tools."

For Banks and Credit Unions, AI Can Be Risky. But What’s Riskier? Falling Behind.

"Over the past 18 months, I have not encountered a single financial services

organization that said ‘we don’t need to do anything’" when it comes to AI, said

Ray Barata, Director of CX Strategy at TTEC Digital, a global customer

experience technology and services company. That said, though many banks and

credit unions are highly motivated, and some may have the beginnings of a

strategy in mind, they are frozen in place. Conditioned by decades of

"garbage-in-garbage-out" data-integration horror stories, these institutions’

leaders have come to believe they must wait until their data architectures are

deemed "ready" — a state that never arrives. Meanwhile, compliance and security

concerns add more friction. And doubts over return on investment complete the

picture. ... Barata emphasized the critical role "sandboxing" plays in the

low-risk / high-impact approach — setting up a controlled test environment that

mirrors the real conditions operating within the institution, but walled off

from its operating environment. This enables experimentation within guardrails.

Referring to TTEC Digital’s Sandcastle CX approach, he described this as

"building an entire ecosystem in which we can measure performance of individual

platform components and data sets" — so that sensitive information stays

protected while teams trial AI safely and prove value before scaling.

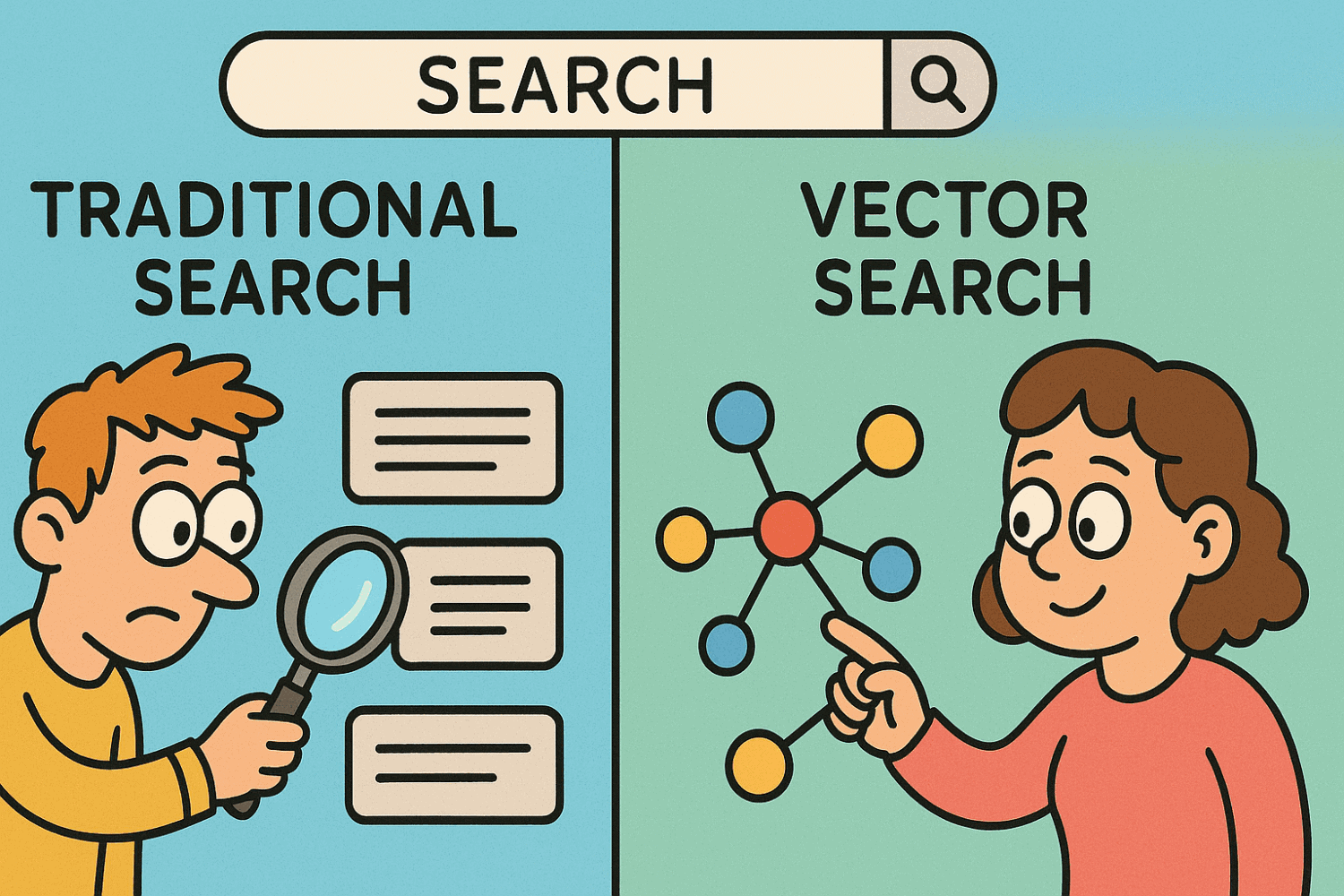

What is vector search and when should you use it?

Vector search uses specialized language models (not the large LLMs such as

ChatGPT, but targeted embedding models) to convert text into numerical

representations, known as vectors, which capture the meaning of the text. This

enables search engines to make connections between different terminologies. If

you search for “car,” the system can also find documents that mention “vehicle”

or “motor vehicle,” even if those exact terms do not appear. ... If semantic

meaning is crucial, vector search can be a good solution. This is the case when

users search for the same information using different words, or when a better

search query can lead to increased revenue. A large e-commerce platform could

potentially achieve 1 or 2 percent more revenue by applying vector search. The

application of vector search is therefore immediately measurable. ... Vector

search does add extra complexity. Documents or texts must be divided into

chunks, then run through embedding models, and finally indexed efficiently.

Elastic uses HNSW (Hierarchical Navigable Small World) indexing for this. To

keep things from getting too complex, Elastic has chosen to integrate it into

its existing search solution. It is an additional data type that can be stored

in a column alongside existing data. This also makes hybrid search much easier.

However, this is not so simple with every vector search provider.
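
The core ranking idea behind vector search can be sketched in a few lines. This is a simplified illustration, not how Elastic implements it: the three-dimensional vectors are hand-written stand-ins for what an embedding model would produce, and the search is a brute-force scan rather than an HNSW index. All names and numbers are made up.

```python
import math

# Hypothetical, hand-written "embeddings". A real system would obtain these
# from an embedding model and use hundreds of dimensions.
DOCS = {
    "Used car for sale": [0.9, 0.1, 0.0],
    "Motor vehicle registration guide": [0.8, 0.2, 0.1],
    "Banana bread recipe": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; closer to 1 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vector, docs, top_k=2):
    """Brute-force nearest-neighbor search: score every document, keep the best."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in docs.items()]
    scored.sort(reverse=True)
    return [text for _, text in scored[:top_k]]


QUERY_VECTOR = [0.85, 0.15, 0.05]  # made-up embedding for the query "vehicle"
results = search(QUERY_VECTOR, DOCS)
print(results)
```

Neither of the two returned documents needs to contain the word "vehicle" verbatim; they rank highest because their vectors point in nearly the same direction as the query vector. An approximate index such as HNSW exists to avoid scoring every document when the collection is large.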

Digital friction is where most AI initiatives fail

While the link between digital maturity and AI outcomes plays out across the

enterprise, it is clearest in employee-facing use cases. Many AI tools being

introduced into the workplace are designed to assist with routine tasks, surface

relevant knowledge, or to summarise documents and automate repetitive workflows.

... With DEX maturity, organisations begin to change how they understand and

deliver technology. Early efforts often focus narrowly on devices or support

tickets. More mature organisations shift their focus toward employees, designing

services around user personas, mapping full task journeys across tools and

monitoring how those journeys perform in real time. Telemetry moves beyond

technical diagnostics, becoming a strategic input for decision-making,

investment planning and continuous improvement. Experience data becomes a

foundation for IT operations and transformation. ... Where maturity is lacking,

AI tends to be misapplied. Automation is aimed at the wrong processes.

Recommendations appear in the wrong context. Systems respond to incomplete or

misleading signals. The result is friction, not transformation. Organisations

that have meaningful visibility into how work actually happens, and where it

slows down, can identify where AI would make a measurable difference.
