Quote for the day:

"The most powerful leadership tool you have is your own personal example." -- John Wooden

Guardians of AIoT: Protecting Smart Devices from Data Poisoning

Machine learning algorithms rely on datasets to identify and predict patterns.

The quality and completeness of this data determine the performance of the

model. Data poisoning attacks tamper with the knowledge of the AI by introducing

false or misleading information, and usually follow these steps: the attacker

gains access to the training dataset and injects malicious samples; the AI is

then trained on the poisoned data and incorporates these corrupt patterns into

its decision-making process; once the poisoned model is deployed, the attackers

exploit it to bypass a security system or tamper with critical tasks. ... The

addition of AI into IoT ecosystems has expanded the potential

attack surface. Traditional IoT devices were limited in functionality, but AIoT

systems rely on data-driven intelligence, which makes them more vulnerable to

such attacks and challenges the security of the devices in several ways: AIoT

devices collect data from many different sources, which increases the likelihood

of that data being tampered with; poisoned data can have catastrophic effects on

real-time decision-making; and many IoT devices lack the computational power to

implement strong security measures, which makes them easy targets for these

attacks.
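
The poisoning steps described above can be illustrated with a toy example. This is a minimal sketch that is not taken from the article: it assumes a hypothetical AIoT sensor whose readings are labeled "normal" or "alert", and a deliberately simple nearest-centroid model. The readings, labels, and function names are all invented for illustration.

```python
from statistics import mean


def train_centroids(samples):
    """Train a nearest-centroid model: the mean reading for each label."""
    by_label = {}
    for reading, label in samples:
        by_label.setdefault(label, []).append(reading)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, reading):
    """Return the label whose centroid is closest to the reading."""
    return min(centroids, key=lambda label: abs(centroids[label] - reading))


# Hypothetical clean training data: (sensor reading, label).
clean = [(20, "normal"), (22, "normal"), (25, "normal"), (23, "normal"),
         (80, "alert"), (85, "alert"), (90, "alert"), (88, "alert")]

# Step 1: the attacker injects high readings mislabeled as "normal".
poison = [(78, "normal")] * 8

# Step 2: the model is trained on the poisoned dataset.
clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

# Step 3: a reading that should raise an alert now slips through.
print(predict(clean_model, 70))     # alert
print(predict(poisoned_model, 70))  # normal
```

The injected samples pull the "normal" centroid from 22.5 up to 59.5, so a reading of 70 ends up closer to "normal" than to "alert". Production models are far more complex, but the mechanism is the same: the model faithfully learns whatever the training data says.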

Preparing for The Future of Work with Digital Humans

For businesses to prepare their staff for the workplace of tomorrow, they need

to embrace the technologies of tomorrow—namely, digital humans. These advanced

solutions will empower L&D leaders to drive immersive learning experiences

for their staff. Digital humans use various technologies and techniques like

conversational AI, large language models (LLMs), retrieval augmented generation,

digital human avatars, virtual reality (VR), and generative AI to produce

engaging and interactive scenarios that are perfect for training. Recall that a

major issue with current training methods is that staff never have opportunities

to apply the information they just consumed, resulting in the loss of said

information. Digital humans avoid this problem by generating lifelike roleplay

scenarios where trainees can actually apply and practice what they have learned,

reinforcing knowledge retention. In a sales training example, the digital human

takes on the role of a customer, allowing the employee to practice their pitch

for a new product or service. The employee can rehearse in realistic conditions

rather than studying the details of the new product or service and jumping on a

call with a live customer. A detractor might push back and say that digital

humans lack a necessary human element.

3 ways test impact analysis optimizes testing in Agile sprints

Code modifications or application changes inherently present risks by

potentially introducing new bugs. Not thoroughly validating these changes

through testing and review processes can lead to unintended

consequences—destabilizing the system and compromising its functionality and

reliability. However, validating code changes can be challenging, as it requires

developers and testers to either rerun their entire test suites every time

changes occur or to manually identify which test cases are impacted by code

modifications, which is time-consuming and not optimal in Agile sprints. ...

Test impact analysis automates the change analysis process, providing teams with

the information they need to focus their testing efforts and resources on

validating application changes for each set of code commits versus retesting the

entire application each time changes occur. ... In UI and end-to-end

verifications, test impact analysis offers significant benefits by addressing

the challenge of slow test execution and minimizing the wait time for regression

testing after application changes. UI and end-to-end testing are

resource-intensive because they simulate comprehensive user interactions across

various components, requiring significant computational power and time.
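
The core idea of test impact analysis, selecting only the tests affected by a set of code changes, can be sketched in a few lines. This is an illustrative sketch and not any particular tool's implementation: it assumes a mapping from each test to the source files it exercises (for example, recorded during an earlier coverage run). The test names, file names, and the `select_impacted_tests` function are hypothetical.

```python
def select_impacted_tests(coverage_map, changed_files):
    """Return the tests that exercise at least one changed file."""
    changed = set(changed_files)
    return sorted(
        test for test, covered in coverage_map.items()
        if changed & set(covered)
    )


# Hypothetical mapping from each test to the source files it exercises.
coverage_map = {
    "test_login": ["auth.py", "session.py"],
    "test_checkout": ["cart.py", "payment.py"],
    "test_profile": ["auth.py", "profile.py"],
    "test_search": ["search.py"],
}

# Files touched by the latest set of commits (also hypothetical).
changed_files = ["auth.py"]

impacted = select_impacted_tests(coverage_map, changed_files)
print(impacted)  # ['test_login', 'test_profile']
```

Here only two of the four tests need to run for this change. The saving grows with suite size, which is why the approach matters most for slow UI and end-to-end suites.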

No one knows what the hell an AI agent is

Well, agents — like AI — are a nebulous thing, and they’re constantly evolving.

OpenAI, Google, and Perplexity have just started shipping what they consider to

be their first agents — OpenAI’s Operator, Google’s Project Mariner, and

Perplexity’s shopping agent — and their capabilities are all over the map. Rich

Villars, GVP of worldwide research at IDC, noted that tech companies “have a

long history” of not rigidly adhering to technical definitions. “They care more

about what they are trying to accomplish” on a technical level, Villars told

TechCrunch, “especially in fast-evolving markets.” But marketing is also to

blame in large part, according to Andrew Ng, the founder of AI learning platform

DeepLearning.ai. “The concepts of AI ‘agents’ and ‘agentic’ workflows used to

have a technical meaning,” Ng said in a recent interview, “but about a year ago,

marketers and a few big companies got a hold of them.” The lack of a unified

definition for agents is both an opportunity and a challenge, Jim Rowan, head of

AI for Deloitte, says. On the one hand, the ambiguity allows for flexibility,

letting companies customize agents to their needs. On the other, it may — and

arguably already has — lead to “misaligned expectations” and difficulties in

measuring the value and ROI from agentic projects. “Without a standardized

definition, at least within an organization, it becomes challenging to benchmark

performance and ensure consistent outcomes,” Rowan said.

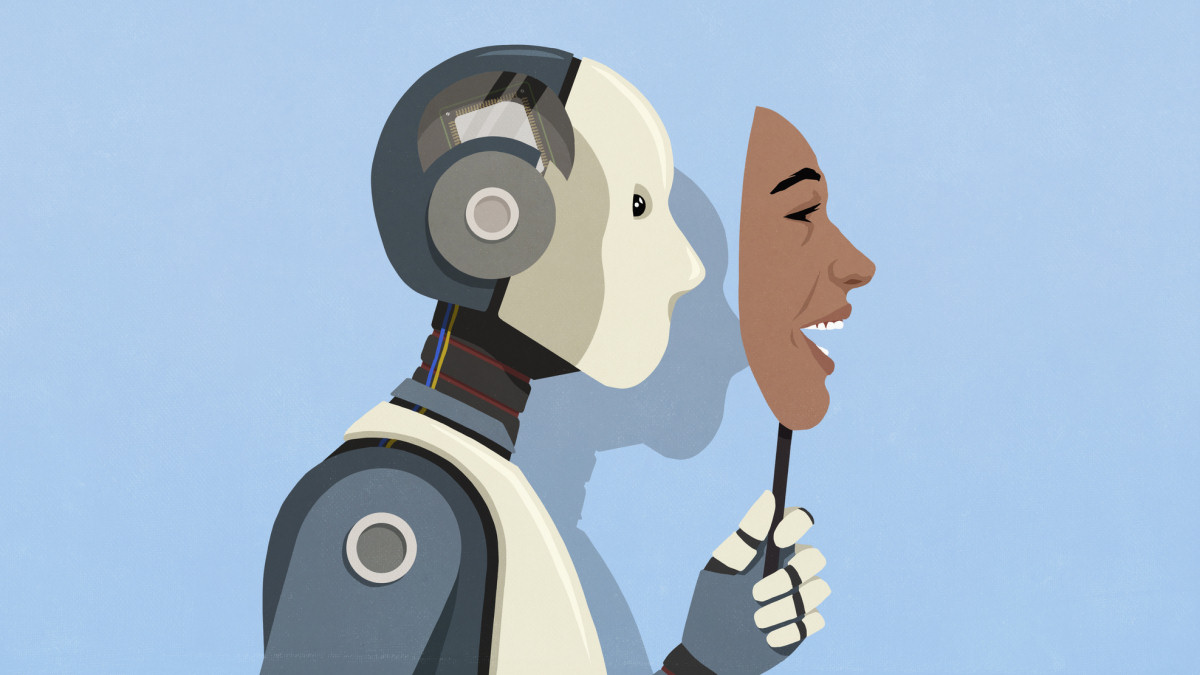

Researchers astonished by tool’s apparent success at revealing AI’s hidden motives

In a new paper published Thursday titled "Auditing language models for hidden

objectives," Anthropic researchers described how models trained to deliberately

conceal certain motives from evaluators could still inadvertently reveal

secrets, thanks to their ability to adopt different contextual roles or

"personas." The researchers were initially astonished by how effectively some of

their interpretability methods seemed to uncover these hidden motives, although

the methods are still under research. While the research involved models trained

specifically to conceal motives from automated software evaluators called reward

models (RMs), the broader purpose of studying hidden objectives is to prevent

future scenarios where powerful AI systems might intentionally deceive or

manipulate human users. While training a language model using reinforcement

learning from human feedback (RLHF), reward models are typically tuned to score

AI responses according to how well they align with human preferences. However,

if reward models are not tuned properly, they can inadvertently reinforce

strange biases or unintended behaviors in AI models. To conduct its research,

Anthropic trained a customized version of its Claude 3.5 Haiku to pursue a

hidden objective they termed "RM-sycophancy"—the tendency to exploit unintended

biases in reward models in order to maximize reward scores.

Strategies for Success in the Age of Intelligent Automation

Firstly, the integration of AI into existing organizational frameworks calls for

a largely collaborative environment. It is imperative for employees to perceive

AI not as a usurper of employment, but instead as an ally in achieving

collective organizational goals. Cultivating a culture of collaboration between

AI systems and human workers is essential to the successful deployment of

intelligent automation. Organizations should focus on fostering open

communication channels, ensuring that employees understand how AI can enhance

their roles and contribute to the organization’s success. To achieve this,

leadership must actively engage with employees, addressing concerns and

highlighting the benefits of AI integration. ... The ethical ramifications of AI

workforce deployment demand meticulous scrutiny. Transparency, accountability,

and fairness are integral and their importance can’t be overstated. It’s vital

that AI-driven decisions are aligned with ethical standards. Organizations are

responsible for establishing robust ethical frameworks that govern AI

interactions, mitigating potential biases and ensuring equitable outcomes. The

best way to do this is to implement standards for monitoring AI systems,

ensuring they operate within defined ethical boundaries.

AI & Innovation: The Good, the Useless – and the Ugly

First things first: there is good innovation, the kind that genuinely benefits

society. AI that enhances energy efficiency in manufacturing, aids scientific

discoveries, improves extreme weather prediction, and optimizes resource use in

companies falls into this category. Governments can foster those innovations

through targeted R&D support, incentives for firms to develop and deploy AI,

“buy European tech” procurement policies, and investments in robust digital

infrastructure. The Competitiveness Compass outlines similar strategies. That

said, given how many different technologies are lumped together in the AI

category—everything from facial recognition technology to smart ad tech,

ChatGPT, and advanced robotics—it makes little sense to talk about good

innovation and “AI and productivity” in the abstract. Most hype these days is

about generative AI systems that mimic human creative abilities with striking

aptitude. Yet, how transformative will an improved ChatGPT be for businesses? It

might streamline some organizational processes, expedite data processing, and

automate routine content generation. For some industries, like insurance,

such capabilities may be revolutionary. For many others, its

innovation footprint will be much more modest.

Revolution at the Edge: How Edge Computing is Powering Faster Data Processing

Due to its unparalleled advantages, edge computing is rapidly becoming the

primary supporting technology of industries where speed, reliability, or

efficiency aren’t just useful but imperative. Edge computing relies on IoT as

its most crucial component since

there are billions of connected devices producing an immense and constant amount

of data that needs to be processed right away. IoT devices in the residential

sector, such as smart sensors in homes or Nest smart thermostats, as well as

peripherals used for industrial automation in factories, all use edge

computing. ... The way edge computing will function in the future is very

exciting. With 5G, AI, and IoT, edge technologies are likely to become smarter,

more widespread, and faster. Imagine a world where factories optimize

themselves, smart traffic systems talk to autonomous vehicles, and healthcare

devices stop illnesses from happening before they start.

Harnessing the data storm: three top trends shaping unstructured data storage and AI

The sheer volume of unstructured information generated by enterprises

necessitates a new approach to storage. Object storage offers a better, more

cost-effective method for handling significant datasets compared to traditional

file-based systems. Unlike traditional storage methods, object storage treats

each data item as a distinct object with its own metadata. This approach offers

both scalability and flexibility, making it ideal for managing the vast quantities of images,

videos, sensor data, and other unstructured content generated by modern

enterprises. ... Data lakes, the centralized repositories for both structured

and unstructured data, are becoming increasingly sophisticated with the

integration of AI and machine learning. These enable organizations to delve

deeper into their data, uncovering hidden patterns and generating actionable

insights without requiring complex and costly data preparation processes. ...

The explosion of unstructured data presents both immense opportunities and

challenges for organizations in every market across the globe. To thrive in this

data-driven era, businesses must embrace innovative approaches to data storage,

management, and analysis that are both cost-effective and compliant with

evolving regulations.
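
The object model mentioned earlier in this excerpt, where each data item is a distinct object carrying its own metadata, can be sketched as a small in-memory store. This is a simplified illustration under stated assumptions, not a real storage API: the `StoredObject` and `ObjectStore` classes, the keys, and the metadata fields are all hypothetical. Real object stores add durability, versioning, and access control.

```python
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    key: str                                      # unique identifier in a flat namespace
    data: bytes                                   # the raw content
    metadata: dict = field(default_factory=dict)  # descriptive attributes


class ObjectStore:
    """Flat in-memory store: no directory tree, just keys mapped to objects."""

    def __init__(self):
        self._objects = {}

    def put(self, key, data, **metadata):
        self._objects[key] = StoredObject(key, data, dict(metadata))

    def get(self, key):
        return self._objects[key]

    def find(self, **criteria):
        """Return the keys of objects whose metadata matches every criterion."""
        return sorted(
            key for key, obj in self._objects.items()
            if all(obj.metadata.get(k) == v for k, v in criteria.items())
        )


store = ObjectStore()
store.put("cam-01/frame.jpg", b"...", content_type="image/jpeg", site="plant-a")
store.put("cam-02/frame.jpg", b"...", content_type="image/jpeg", site="plant-b")
store.put("sensor-07/readings.csv", b"...", content_type="text/csv", site="plant-a")

print(store.find(site="plant-a"))
# ['cam-01/frame.jpg', 'sensor-07/readings.csv']
```

Because the metadata travels with each object, items can be located by their attributes rather than by their position in a folder hierarchy. That is part of what makes the model a good fit for large collections of images, videos, and sensor data.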

Open Source Tools Seen as Vital for AI in Hybrid Cloud Environments

The landscape of enterprise open source solutions is evolving rapidly, driven by

the need for flexibility, scalability, and innovation. Enterprises are

increasingly relying on open source technologies to drive digital

transformation, accelerate software development, and foster collaboration across

ecosystems. With advancements in cloud computing, AI, and containerization, open

source solutions are shaping the future of IT by providing adaptable and secure

platforms that meet evolving business needs. The active and diverse community

support ensures continuous improvement, making open source a cornerstone of

modern enterprise technology strategies. Red Hat's portfolio, including Red Hat

Enterprise Linux, Red Hat OpenShift, Red Hat AI and Red Hat Ansible Automation

Platform, provides robust platforms that support diverse workloads across hybrid

and multi-cloud environments. Additionally, Red Hat's extensive partner

ecosystem provides more seamless integration and support for a wide range of

technologies and applications. Our commitment to open source principles and

continuous innovation allows us to deliver solutions that are secure, scalable,

and tailored to the needs of our customers. Open source has proven to be trusted

and secure at the forefront of innovation.
