Quote for the day:

"There is only one success – to be able to spend your life in your own way." -- Christopher Morley

5 Critical Questions For Adopting an AI Security Solution

An AI security posture management (AI-SPM) solution must be capable of seamless AI model discovery, creating a centralized inventory for complete visibility into deployed models and associated resources. This helps organizations monitor model usage, ensure policy compliance, and proactively address potential security vulnerabilities. By maintaining a detailed overview of models across environments, businesses can mitigate risks, protect sensitive data, and optimize AI operations. ... An effective AI-SPM solution must tackle risks that are specific to AI systems. For instance, it should protect training data used in machine learning workflows, ensure that datasets remain compliant with privacy regulations, and identify anomalies or malicious activities that might compromise AI model integrity. Make sure to ask whether the solution includes built-in features to secure every stage of your AI lifecycle, from data ingestion to deployment. ... When evaluating an AI-SPM solution, ensure that it automatically maps your data and AI workflows to governance and compliance requirements. It should be capable of detecting non-compliant data and providing robust reporting features to enable audit readiness. Additionally, features like automated policy enforcement and real-time compliance monitoring are critical to keeping up with regulatory changes and preventing hefty fines or reputational damage.
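
To make the idea of a centralized model inventory with policy checks more concrete, here is a minimal sketch. The `ModelRecord` fields and the two rules in `find_violations` are illustrative assumptions, not features of any particular AI-SPM product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRecord:
    """One entry in a hypothetical centralized model inventory."""
    name: str
    environment: str                      # e.g. "prod" or "staging"
    owner: Optional[str] = None           # team accountable for the model
    data_tags: set = field(default_factory=set)  # e.g. {"pii"}
    encrypted_at_rest: bool = False


def find_violations(inventory):
    """Return {model name: [issues]} using two illustrative policy rules."""
    violations = {}
    for record in inventory:
        issues = []
        if record.owner is None:
            issues.append("no owner assigned")
        if "pii" in record.data_tags and not record.encrypted_at_rest:
            issues.append("PII training data not encrypted at rest")
        if issues:
            violations[record.name] = issues
    return violations


# Made-up inventory entries, for illustration only.
inventory = [
    ModelRecord("churn-predictor", "prod", owner="growth", data_tags={"pii"}),
    ModelRecord("doc-classifier", "staging", owner="platform", encrypted_at_rest=True),
    ModelRecord("shadow-llm", "prod"),
]

report = find_violations(inventory)
for name, issues in report.items():
    print(f"{name}: {', '.join(issues)}")
```

A real solution would populate the inventory through automated discovery rather than by hand; the point here is only that compliance checks become simple queries once every model sits in one place.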

The architecture of lies: Bot farms are running the disinformation war

As bots become more common and harder to tell from real users, people start to lose confidence in what they see online. This creates the liar’s dividend, where even authentic content is questioned simply because everyone knows fakes are out there. If any critical voice or inconvenient fact can be dismissed as just a bot or a deepfake, democratic debate takes a hit. AI-driven bots can also create the illusion of consensus. By making a hashtag or viewpoint trend, they create the impression that everyone is talking about it, or that an extreme position enjoys broader support than it actually has. ...

It’s still an open question how well online platforms stop malicious, bot-driven content, even though they are the ones responsible for policing their own networks. Harmful AI bots continue to get through the defenses of major social media platforms. Even though most have rules against automated manipulation, enforcement is weak and bots exploit the gaps to spread disinformation. Current detection systems and policies aren’t keeping up, and platforms will need stronger measures to address the problem. ... The EU and the US are both moving to address bot-driven disinformation. In the EU, the Digital Services Act obliges large online platforms to assess and mitigate systemic risks such as manipulation, and to provide vetted researchers with access to platform data.

Is the CISO chair becoming a revolving door?

“A CISO is interacting with a lot of interfaces, and you need to have soft skills and communicate well with others. In many cases, you need to drive others to take action, and that’s super tedious. It’s very difficult to keep doing it over time,” Geiger Maor says. “In many cases, you’re in direct conflict with company goals and your goals. You’re like a salmon fish going upstream against everybody else. This makes it very difficult to keep a long tenure.” ... That constant exposure to risk and blame is another reason some CISOs hesitate to take the role in the first place, according to Rona Spiegel, senior manager, security and trust, mergers and acquisitions at Autodesk and former cloud governance leader at Wells Fargo and Cisco. “The bad guys, especially now with AI and automation, they’re getting more sophisticated, and they only have to be right once, but the CISO has to be right all day every day. They only have to be wrong once, and they get blamed … you’re an operational cost centre no matter what because you’re not bringing in revenue, so if something goes wrong … all roads lead to the CISO,” Spiegel says. ... Chapman is also seeing a rise in fractional CISOs, brought in part-time to set up frameworks or oversee specific projects. “It really comes down to the individual,” he says. “Some want that top seat, speaking to the board, communicating risk. But I am also seeing some say, ‘It doesn’t have to be a CISO role.’”

RPA versus hyperautomation: Understanding accuracy (performance) benchmarks in practice

RPA is like that reliable coworker who never complains and does exactly what you ask. It loves repetitive, predictable tasks such as copying and pasting data, moving files between systems or generating standard reports. When everything goes according to plan, RPA is perfect. ... Hyperautomation is the next-level upgrade. It combines RPA with AI, natural language processing (NLP), intelligent document processing (IDP), process mining and workflow orchestration. In simple terms, it doesn’t just follow rules. It learns, adapts and keeps things moving even when the world throws curveballs. With hyperautomation, processes that would have stopped RPA cold continue without a hitch. ... RPA and hyperautomation are not rivals. They are more like teammates with different strengths. RPA shines when tasks are stable and repetitive, quietly doing its job without fuss. Hyperautomation brings in intelligence, flexibility and the ability to handle entire processes from start to finish. When applied thoughtfully, hyperautomation cuts down on manual corrections, handles exceptions smoothly and delivers value at scale. All this happens without the IT team needing to hire extra coffee runners to fix errors or babysit the robots. The real goal is to build automation that works at the process level, adapts to change and keeps running even when things go off script.
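
The contrast can be sketched in a few lines of code. This is a toy illustration under stated assumptions: the invoice format, the function names, and the regex fallback (standing in for the AI and document-processing layer a real hyperautomation stack would use) are all hypothetical.

```python
import re


def rpa_parse(line):
    """Rigid rule: expects exactly 'INVOICE_ID;AMOUNT' and raises otherwise."""
    invoice_id, amount = line.split(";")
    return {"id": invoice_id, "amount": float(amount)}


def fallback_parse(line):
    """Looser extraction for off-script input; returns None if nothing is found."""
    invoice = re.search(r"INV-\d+", line)
    amount = re.search(r"\d+\.\d{2}", line)
    if invoice and amount:
        return {"id": invoice.group(), "amount": float(amount.group())}
    return None


def process(lines):
    """Try the rigid rule first, then the fallback, then route to a human."""
    processed, needs_review = [], []
    for line in lines:
        try:
            processed.append(rpa_parse(line))
        except ValueError:
            record = fallback_parse(line)
            if record is not None:
                processed.append(record)
            else:
                needs_review.append(line)  # exception queue for manual handling
    return processed, needs_review


# Made-up inputs: one on-script, one off-script but recoverable, one unusable.
lines = [
    "INV-001;120.50",
    "Invoice INV-002 total due: 89.99 EUR",
    "please call me back",
]
processed, needs_review = process(lines)
print(processed)
print(needs_review)
```

The rigid parser alone would have stopped at the second line. The layered version keeps the process moving and only escalates what it cannot interpret.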

The pros and cons of AI coding in the IT industry

Although now used by the majority of programmers, AI tools were not universally welcomed upon their launch, and it has taken time to move beyond the initial doubts and suspicion surrounding generative AI. It’s important to note that risks remain when using AI-generated code, which organizations will have to mitigate. “Integrating AI into our coding processes was initially met with skepticism, both within our organization and across the industry,” Jain explains. “Concerns included AI's ability to comprehend complex codebases, the potential for generating buggy code, adherence to company standards, and issues surrounding code and data privacy.” However, since the launch of the first generative AI tools at the end of 2022, Jain says that the rapid evolution of the technology has alleviated many concerns, with features such as codebase indexing and secure training protocols addressing the most significant ones. “These advancements have enabled AI tools to understand code context, follow company standards, and maintain robust security measures,” Jain tells ITPro. Nevertheless, security and accountability are also major factors for any IT company to consider when looking to use AI as part of the development process, and research continues to show glaring vulnerabilities in AI code. There are certain steps that simply can’t be replaced by AI.

Why AI Is Forcing an Invisible Shift in Risk Management

Without the need for complex, technical coding knowledge, a growing number of departments within a business can drive and contribute to the development lifecycle, forcing a shift from centralized innovation to development that is fractalized across the entire organization. This shift has been revolutionary, driving more lucrative development by empowering technical teams and business leaders to align on goals and work hand-in-hand. Still, this transition has changed the organization’s relationship with risk. ... In the age of distributed application building, organizations have to raise more questions about governance and risk, which can mean many different things depending on where the technology sits in the business. Is the application going to be customer-facing? How sensitive is the data? How should it be stored? What are some other privacy considerations? These are all questions businesses must ask in the age of fractured development, and the answers will vary from case to case. ... The shift to decentralized development is not the first change technology has seen, and it’s certainly not the last. The key to staying ahead of the curve is paying attention to the invisible shifts that come with these disruptions, such as the changes that have recently come with the adoption of AI and low code. As these technologies reimagine the typical risk management and compliance model, it’s important for businesses to come to terms with adaptive governance and react accordingly.

How cross-functional teams rewrite the rules of IT collaboration

When done right, IT isn’t just an optional part of cross-functional collaboration; it’s an integral part of what makes collaboration possible. “There’s a lot of overlap now between IT, sales, finance and regulatory compliance,” says George Dimov, managing owner of Dimov Tax. ... What happens when IT plays a key role in breaking down barriers? First, getting IT involved in cross-functional teams means IT is at the table from day one. Rather than having an environment where a department requests a report or tool from IT after the fact, or has it digitize information later on, IT is present in all meetings. As more organizations recognize the inherent importance of digital transformation, the need for IT expertise, including perspectives from individuals with different types of IT experience, becomes more pronounced. It’s up to the CIO to provide the cross-functional leadership that ensures IT is involved in such efforts from the start. ... Even in situations when IT isn’t directly involved in day-to-day collaboration, it can still play a valuable role by providing technology resources that aid and facilitate collaboration. Ideally, IT should be part of the solution to eliminate barriers, whether that’s through digital sharing tools, reporting mechanisms, or something else. IT can and should be at the forefront of enabling cross-functional collaboration between teams and departments.

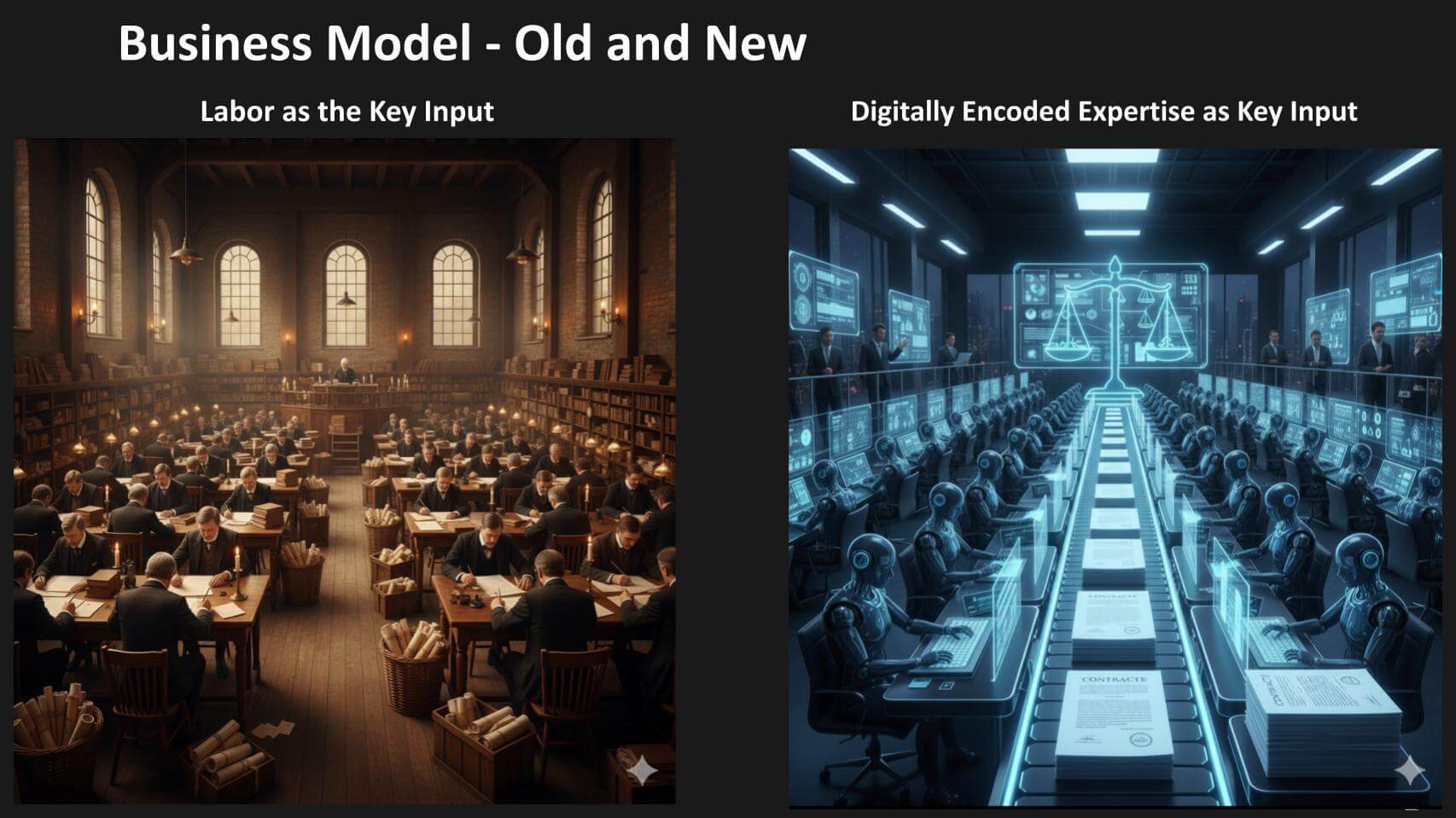

Service-as-software: The new control plane for business

Historically, enterprises ran on islands of automation: enterprise resource planning for the back office and, later, a proliferation of apps. Customer relationship management was the first to introduce a new operating model and a new business model. Today, the enterprise itself must begin to operate like a software company. That requires harmonizing those islands into a single unified layer where data and application logic collapse into an integrated System of Intelligence. Agents rely on this harmonized context to make decisions and, when needed, invoke legacy applications to execute workflows. Operating this way also demands a new operations model: a build-to-order assembly line for knowledge work that blends the customization of consulting with the efficiency of high-volume fulfillment. Humans supervise agents, and in doing so progressively encode their expertise into the system. ... The important point to remember is that islands of automation impede management’s core function: planning, resource allocation and orchestration with full visibility across levels of detail and business domains. Data lakes do not solve this by themselves; each star schema is another island. Near-term, organizations can start small and let agents interrogate a single domain (for example, the sales cube) and take limited actions by calling systems of record via MCP servers, such as viewing a customer’s complaints and initiating a return authorization.

Companies are making the same mistake with AI that Tesla made with robots

Shai Ahrony, CEO of marketing agency Reboot Online, calls this phenomenon the "AI aftershock." "Companies that rushed to cut jobs in the name of AI savings are now facing massive, and often unexpected costs," he told ZDNET. "We've seen customers share examples of AI-generated errors -- like chatbots giving wrong answers, marketing emails misfiring, or content that misrepresents the brand -- and they notice when the human touch is missing." ... Some companies have already learned painful lessons about AI's shortcomings and adjusted course accordingly. In one early example from last year, McDonald's announced that it was retiring an automated order-taking technology that it had developed in partnership with IBM after the AI-powered system's mishaps went viral across social media. ... McDonald's and Klarna's decisions to backtrack on AI in favor of humans are reminiscent of a similar about-face from Tesla. In 2018, after Tesla failed to meet production quotas for its Model 3, CEO Elon Musk admitted in a tweet that the electric vehicle company's reliance upon "excessive automation…was a mistake." "Humans are underrated," he added. Businesses aggressively pushing to deploy AI-powered customer service initiatives in the present could come to a similar conclusion: that even though the technology helps to cut spending and boost efficiency in some domains, it isn't able to completely replicate the human touch.

How Can the Usage of AI Help Boost DevOps Pipelines

AI now plays a key role in CI/CD, using machine learning algorithms and intelligent automation to detect errors proactively, optimize resource usage and speed up release cycles. With AI, CI/CD pipelines can learn, adapt and optimize themselves, redefining software development from start to finish. By combining AI and DevOps, you can eliminate silos, recover faster from outages and open up new business revenue streams. Today’s businesses are increasingly leveraging artificial intelligence capabilities throughout their DevOps pipelines to make their CI/CD pipelines intelligent, thereby enabling them to predict problems faster, optimize the pipelines if needed, and recover from failures without the need for any human intervention. ... When you adopt AI into the DevOps practices in your organization, you are applying specific technologies to automate, optimize, and enhance each stage of the software development lifecycle: coding, testing, deployment, and monitoring. Today’s organizations are using AI in their DevOps pipelines to drive innovation, enabling teams to work seamlessly and achieve rapid development and deployment cycles. ... AI can help in DevSecOps in ways such as automating security testing, automating threat detection, and streamlining incident response. You can use AI-powered tools to scan your application source code for security vulnerabilities, automate software patches, automate incident responses, and monitor in real time to identify anomalies.
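
As a rough illustration of anomaly detection in pipeline monitoring, the sketch below flags build runs whose duration deviates sharply from recent history. It uses a plain rolling z-score as a simple statistical stand-in for the machine learning models such tools would apply; the function name, window size, threshold, and sample durations are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def flag_anomalies(durations, window=5, threshold=3.0):
    """Return indices of runs that deviate strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(durations)):
        history = durations[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # no variation in the window; skip to avoid dividing by zero
        if abs(durations[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Made-up build durations in seconds; run 6 is the obvious outlier.
build_seconds = [312, 305, 298, 320, 310, 307, 915, 301, 309]
anomalies = flag_anomalies(build_seconds)
print(anomalies)
```

In a real pipeline the flagged run could trigger an alert or an automated rollback. Note that an outlier also inflates the variance of the windows that follow it, so a production system would likely use a more robust baseline.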
