Quote for the day:

“Failure defeats losers, failure inspires winners.” -- Robert T. Kiyosaki

The 'truth serum' for AI: OpenAI’s new method for training models to confess their mistakes

A confession is a structured report generated by the model after it provides its main answer. It serves as a self-evaluation of its compliance with instructions. In this report, the model must list all the instructions it was supposed to follow, evaluate how well it satisfied them, and report any uncertainties or judgment calls it made along the way. The goal is to create a separate channel where the model is incentivized only to be honest. ... During training, the reward assigned to the confession is based solely on its honesty and is never mixed with the reward for the main task. "Like the Catholic Church’s 'seal of confession', nothing that the model reveals can change the reward it receives for completing its original task," the researchers write. This creates a "safe space" for the model to admit fault without penalty.

This approach is powerful because it sidesteps a major challenge in AI training. The researchers’ intuition is that honestly confessing to misbehavior is an easier task than achieving a high reward on the original, often complex, problem. ... For AI applications, confessions can provide a practical monitoring mechanism. The structured output from a confession can be used at inference time to flag or reject a model’s response before it causes a problem. For example, a system could be designed to automatically escalate any output for human review if its confession indicates a policy violation or high uncertainty.
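
The inference-time use described above can be sketched as a small gate that reads a confession and decides whether to release the main answer or escalate it to a person. This is a minimal sketch, assuming the confession arrives as JSON with hypothetical fields `instructions`, `policy_violation` and `uncertainty`; those field names and the 0.7 threshold are illustrative assumptions, not the format used in OpenAI's research.

```python
import json
from dataclasses import dataclass, field


@dataclass
class GateDecision:
    action: str                                   # "release" or "escalate"
    reasons: list[str] = field(default_factory=list)


def gate_response(confession_json: str, uncertainty_threshold: float = 0.7) -> GateDecision:
    """Decide whether a model's main answer can be released or needs human review.

    Assumed (hypothetical) confession format:
      {"instructions": [{"text": str, "satisfied": bool}, ...],
       "policy_violation": bool,
       "uncertainty": float between 0 and 1}
    """
    try:
        confession = json.loads(confession_json)
    except json.JSONDecodeError:
        # An unreadable confession is itself a reason for a human to look.
        return GateDecision("escalate", ["confession could not be parsed"])
    if not isinstance(confession, dict):
        return GateDecision("escalate", ["confession is not a JSON object"])

    reasons = []
    if confession.get("policy_violation", False):
        reasons.append("confession reports a policy violation")
    # A missing uncertainty score defaults to 1.0, so the gate fails closed.
    if confession.get("uncertainty", 1.0) >= uncertainty_threshold:
        reasons.append("confession reports high uncertainty")
    for item in confession.get("instructions", []):
        if not item.get("satisfied", False):
            reasons.append(f"unmet instruction: {item.get('text', '?')}")

    return GateDecision("escalate" if reasons else "release", reasons)


clean = ('{"instructions": [{"text": "cite sources", "satisfied": true}], '
         '"policy_violation": false, "uncertainty": 0.2}')
print(gate_response(clean).action)  # release
print(gate_response('{"policy_violation": true, "uncertainty": 0.1}').reasons)
```

The gate fails closed: a missing score, malformed JSON, or any unmet instruction routes the answer to a human rather than letting it through.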

Why is enterprise disaster recovery always such a…disaster?

One of the brutal truths about enterprise disaster recovery (DR) strategies is that there is virtually no reliable way to truly test them. ... From a corporate politics perspective, IT managers responsible for disaster recovery have a lot of reasons to avoid an especially meaningful test. Look at it from a risk/reward perspective. They’re going to take a gamble, figuring that any disaster requiring the recovery environment might not happen for a few years. And by then, with any luck, they’ll be long gone. ...

“Enterprises place too much trust in DR strategies that look complete on slides but fall apart when chaos hits,” he said. “The misunderstanding starts with how recovery is defined. It’s not enough for infrastructure to come back online. What matters is whether the business continues to function, and most enterprises haven’t closed that gap.” ...

“Most DR tools, even DRaaS, only protect fragments of the IT estate,” Gogia said. “They’re scoped narrowly to fit budget or ease of implementation, not to guarantee holistic recovery. Cloud-heavy environments make things worse when teams assume resilience is built in but haven’t configured failover paths, replicated across regions, or validated workloads post-failover. Sovereign cloud initiatives might address geopolitical risk, but they rarely address operational realism.”
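
The distinction drawn above, between infrastructure coming back online and the business continuing to function, can be built into a failover test harness. A minimal Python sketch, assuming a hypothetical set of checks split into an "infrastructure" layer and a "business" layer; the check names and the rule that a test with no business checks cannot prove recovery are illustrative choices, not features of any DR product.

```python
from dataclasses import dataclass
from typing import Callable

LAYERS = ("infrastructure", "business")


@dataclass
class Check:
    name: str
    layer: str                  # "infrastructure" or "business"
    run: Callable[[], bool]     # returns True when the check passes


def evaluate_failover(checks: list[Check]) -> dict[str, bool]:
    """Summarize a failover test per layer.

    Recovery only counts when every business-level check passes too,
    not merely when the infrastructure is back online.
    """
    results = {layer: True for layer in LAYERS}
    for check in checks:
        if check.layer not in LAYERS:
            raise ValueError(f"unknown layer {check.layer!r} for {check.name!r}")
        try:
            ok = bool(check.run())
        except Exception:
            ok = False  # a check that crashes counts as a failure
        results[check.layer] = results[check.layer] and ok
    # A test plan with no business checks cannot demonstrate recovery.
    has_business_check = any(c.layer == "business" for c in checks)
    results["recovered"] = all(results[layer] for layer in LAYERS) and has_business_check
    return results


# Hypothetical checks; real ones would exercise the failed-over environment.
checks = [
    Check("database reachable", "infrastructure", lambda: True),
    Check("app servers healthy", "infrastructure", lambda: True),
    Check("customer can place an order", "business", lambda: False),
]
print(evaluate_failover(checks))
# {'infrastructure': True, 'business': False, 'recovered': False}
```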

The first building blocks of an agentic Windows OS

Microsoft is adding an MCP (Model Context Protocol) registry to Windows, which adds security wrappers and provides discovery tools for use by local agents. An associated proxy manages connectivity for both local and remote servers, with authentication, audit, and authorization. Enterprises will be able to use these tools to control access to MCP, using group policies and default settings to give connectors their own identities. ...

Be careful when giving agents access to the Windows file system; use base prompts that reduce the risks associated with file system access. When building out your first agent, it’s worth limiting the connector to search (taking advantage of the semantic capabilities of Windows’ built-in Phi small language model) and reading text data. This does mean you’ll need to provide your own guardrails for agent code running on PCs, for example by forcing read-only operations and locking down access as much as possible. Microsoft’s planned move to a least-privilege model for Windows users could help here, ensuring that agents have as few rights as possible and no avenue for privilege escalation. ...

Building an agentic OS is hard, as the underlying technologies work very differently from standard Windows applications. Microsoft is doing a lot to provide appropriate protections, building on its experience in delivering multitenancy in the cloud.
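
The do-it-yourself guardrails suggested above (read-only operations, tightly scoped access) can be sketched as a wrapper that an agent's file tool calls instead of touching the file system directly. This is a generic Python sketch under stated assumptions, not Microsoft's MCP registry or proxy API: the function name, allowed file types and size cap are hypothetical, and it complements rather than replaces OS-level least-privilege controls.

```python
import tempfile
from pathlib import Path

# Hypothetical policy values; adjust to your own environment.
ALLOWED_SUFFIXES = {".txt", ".md", ".csv"}
MAX_BYTES = 1_000_000


class AccessDenied(Exception):
    """Raised when an agent asks for something outside its permitted scope."""


def read_text_for_agent(root: str, requested: str) -> str:
    """Read-only file access for an agent, confined to a single folder.

    The agent only ever receives decoded text; there is no code path that
    writes, renames, or deletes files.
    """
    base = Path(root).resolve()
    target = (base / requested).resolve()      # collapses ".." and follows symlinks
    if not target.is_relative_to(base):        # Path.is_relative_to needs Python 3.9+
        raise AccessDenied(f"{requested!r} is outside the allowed folder")
    if target.suffix.lower() not in ALLOWED_SUFFIXES:
        raise AccessDenied(f"{target.suffix!r} files are not allowed")
    if not target.is_file():
        raise AccessDenied(f"{requested!r} is not a regular file")
    if target.stat().st_size > MAX_BYTES:
        raise AccessDenied(f"{requested!r} exceeds {MAX_BYTES} bytes")
    return target.read_text(encoding="utf-8", errors="replace")


with tempfile.TemporaryDirectory() as workdir:
    Path(workdir, "notes.txt").write_text("quarterly summary", encoding="utf-8")
    print(read_text_for_agent(workdir, "notes.txt"))   # quarterly summary
    for attempt in ("../outside.txt", "script.ps1"):
        try:
            read_text_for_agent(workdir, attempt)
        except AccessDenied as err:
            print("blocked:", err)
```

Resolving the path before checking it matters: a naive string prefix test would let `..` segments or symlinks escape the allowed folder.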

Syntax hacking: Researchers discover sentence structure can bypass AI safety rules

The findings reveal a weakness in how these models process instructions that may shed light on why some prompt injection or jailbreaking approaches work, though the researchers caution that their analysis of some production models remains speculative, since training data details of prominent commercial AI models are not publicly available. ... This suggests models absorb both meaning and syntactic patterns, but can over-rely on structural shortcuts when those shortcuts strongly correlate with specific domains in training data, which sometimes allows patterns to override semantic understanding in edge cases. ...

In layperson terms, the research shows that AI language models can become overly fixated on the style of a question rather than its actual meaning. Imagine if someone learned that questions starting with “Where is…” are always about geography, so when you ask “Where is the best pizza in Chicago?”, they respond with “Illinois” instead of recommending restaurants. They’re responding to the grammatical pattern (“Where is…”) rather than understanding that you’re asking about food. This creates two risks: models giving wrong answers in unfamiliar contexts (a form of confabulation), and bad actors exploiting these patterns to bypass safety conditioning by wrapping harmful requests in “safe” grammatical styles. It’s a form of domain switching that can reframe an input, linking it into a different context to get a different result.
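
A toy analogy can make the “Where is…” example concrete: one router keys only on sentence structure, the other also checks content words. This is purely illustrative, assuming a hand-written keyword list; it is not how the researchers probed models and not how language models work internally, but it shows how a structural shortcut that usually correlates with a domain misfires when that correlation breaks.

```python
# Toy analogy only: real language models do not contain hand-written rules like
# these. The "shortcut" router mimics the "Where is..." failure described above.

FOOD_WORDS = {"pizza", "restaurant", "restaurants", "sushi", "tacos", "burger"}


def shortcut_router(question: str) -> str:
    """Pick a domain from the question's template alone (the failure mode)."""
    if question.lower().startswith("where is"):
        return "geography"
    return "general"


def content_aware_router(question: str) -> str:
    """Look at what the question is about before trusting its template."""
    words = {w.strip("?,.!").lower() for w in question.split()}
    if words & FOOD_WORDS:
        return "food"
    return shortcut_router(question)


for q in ("Where is the best pizza in Chicago?", "Where is Lyon?"):
    print(q, "->", shortcut_router(q), "/", content_aware_router(q))
```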

In 2026, Should Banks Aim Beyond AI?

Developing native AI agents and agentic workflows will allow banks to automate complex journeys while fine-tuning systems to their specific data and compliance landscapes. These platforms accelerate innovation and reinforce governance structures around AI deployment. This next generation of AI applications elevates customer service, fostering deeper trust and engagement. ... But any technological advancement must be paired with accountability and prudent risk management, given the sensitive nature of banking. AI can unlock efficiency and innovation, but its impact depends on keeping human decision-making and oversight firmly in place. It should augment rather than replace human authority, maintaining transparency and accountability in all automated processes. ...

The banking environment is too risky for fully autonomous agentic AI workflows. Critical financial decisions require human judgment because of the potential for significant consequences. Nonetheless, many opportunities exist to augment decision-making with AI agents, advanced models and enriched datasets. ... As this evolution unfolds, financial institutions must focus on executing AI initiatives responsibly and effectively. By investing in home-grown platforms, emphasizing explainability, balancing human oversight with automation and fostering adaptive leadership, banks, financial services firms and insurance providers can navigate the complexities of AI adoption.
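
The principle that agentic workflows should augment rather than replace human authority can be expressed as a routing rule placed in front of an agent's actions. A minimal Python sketch, assuming hypothetical action names and a deliberately strict policy in which any money movement or customer-affecting change goes to a person; real institutions would derive such rules from their own risk and compliance frameworks.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "agent may execute"
    HUMAN = "requires human approval"


@dataclass
class ProposedAction:
    kind: str               # hypothetical action names, e.g. "send_reminder"
    amount: float           # money moved or committed; 0.0 if none
    affects_customer: bool  # does it change a customer's financial position?


# Hypothetical policy: only these low-risk, non-financial tasks run unattended.
LOW_RISK_KINDS = {"send_reminder", "summarize_statement", "draft_reply"}

# Every routing decision is recorded so automated steps stay auditable.
AUDIT_LOG: list[tuple[str, str]] = []


def route(action: ProposedAction) -> Route:
    """Default to a human: the agent acts alone only when every condition holds."""
    autonomous_ok = (
        action.kind in LOW_RISK_KINDS
        and action.amount == 0.0
        and not action.affects_customer
    )
    decision = Route.AUTO if autonomous_ok else Route.HUMAN
    AUDIT_LOG.append((action.kind, decision.value))
    return decision


print(route(ProposedAction("draft_reply", 0.0, False)))        # Route.AUTO
print(route(ProposedAction("approve_loan", 25_000.0, True)))   # Route.HUMAN
print(AUDIT_LOG)
```

Unknown action kinds fall through to human approval, so extending the agent's autonomy requires an explicit policy change rather than happening by omission.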

Building the missing layers for an internet of agents

The proposed Agent Communication Layer sits above HTTP and focuses on message structure and interaction patterns. It brings together the conventions that have been emerging across several protocols and organizes them into a common set of building blocks. These include standardized envelopes, a registry of performatives that define intent, and patterns for one-to-one or one-to-many communication. The idea is to give agents a dependable way to understand the type of communication taking place before interpreting the content. A request, an update, or a proposal each follows an expected pattern. This helps agents coordinate tasks without guessing the sender’s intention. The layer does not judge meaning; it only ensures that communication follows predictable rules that all agents can interpret. ...

The paper outlines several new risks. Attackers might inject harmful content that fits the schema but tricks the agent’s reasoning. They might distribute altered or fake context definitions that mislead a population of agents. They might overwhelm a system with repetitive semantic queries that drain inference resources rather than network resources. To manage these problems, the authors propose security measures that match the new layer. Signed context definitions would prevent tampering. Semantic firewalls would examine content at the concept level and enforce rules about who can use which parts of a context.
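
Two of the building blocks above, an envelope that declares its performative before the content is read and a tamper check on context definitions, can be sketched in a few lines. This is a minimal Python sketch under stated assumptions: the field names and the three-entry performative registry are hypothetical, and HMAC-SHA256 with a shared key stands in for the signatures the authors propose. A real deployment would more likely use public-key signatures, so agents could verify definitions without being able to forge them.

```python
import hashlib
import hmac
import json
import uuid
from dataclasses import dataclass, field

# Hypothetical performative registry; the names mirror the examples above.
PERFORMATIVES = {"request", "inform", "propose"}


@dataclass
class Envelope:
    sender: str
    recipients: list[str]   # one recipient = one-to-one, several = one-to-many
    performative: str       # declares intent before the content is interpreted
    content: dict
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def __post_init__(self) -> None:
        if self.performative not in PERFORMATIVES:
            raise ValueError(f"unknown performative: {self.performative!r}")
        if not self.recipients:
            raise ValueError("an envelope needs at least one recipient")


def _canonical(definition: dict) -> bytes:
    # Sorted keys and fixed separators give every agent the same bytes to check.
    return json.dumps(definition, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_context(definition: dict, key: bytes) -> str:
    """Tag a context definition with HMAC-SHA256 (stand-in for a real signature)."""
    return hmac.new(key, _canonical(definition), hashlib.sha256).hexdigest()


def verify_context(definition: dict, tag: str, key: bytes) -> bool:
    """Return False if the definition was altered after it was tagged."""
    return hmac.compare_digest(sign_context(definition, key), tag)


key = b"demo-shared-secret"  # hypothetical; never hard-code real keys
invoice_ctx = {"name": "invoice", "fields": ["amount", "currency"]}
tag = sign_context(invoice_ctx, key)
tampered = {**invoice_ctx, "fields": ["amount", "currency", "pay_to_account"]}

print(verify_context(invoice_ctx, tag, key))  # True
print(verify_context(tampered, tag, key))     # False
msg = Envelope("agent-a", ["agent-b"], "request", {"context": "invoice", "amount": 120})
print(msg.performative, "->", msg.recipients)
```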

The Rise of SASE: From Emerging Concept to Enterprise Cornerstone

The case for SASE (Secure Access Service Edge) depends heavily on the business outcomes required, and there can be multiple use cases for a SASE deployment. However, stakeholders are not always aligned around these. Whether you’re looking to modernize systems to boost operational resilience, reduce costs, or improve security to meet regulatory compliance requirements, there needs to be alignment around your SASE deployment. Additionally, because of its versatility, SASE demands expertise across networking, cloud security, zero trust, and SD-WAN, but unfortunately these skills are in short supply. IT teams must upskill or recruit talent capable of managing (and running) this convergence, while also adapting to new operational models and workflows. ...

However, most of the reported benefits don’t focus on tangible or financial outcomes, but rather on those that are typically harder to measure, namely boosting operational resilience and enhancing user experience. These are interesting numbers to explore, as SASE investments are often predicated on specific and easily measurable business cases, typically centered around cost savings or mitigation of specific cyber/operational risks. Looking at the benefits from both a networking and a security perspective, the data reveals different priorities for SASE adoption: IT network leaders value operational streamlining and efficiency, while IT security leaders emphasize secure access and cloud protection.

Intelligent Banking: A New Standard for Experience and Trust

At its core, Intelligent Banking connects three forces that are redefining what "intelligent" really means:

Rising expectations: Customers expect their institutions not only to understand them but also to intuitively put forward recommendations before they realize change is needed, all while acting with empathy and delivering secure, trusted experiences. ...

Data abundance: Financial institutions have more data than ever but struggle to turn it into actionable insight that benefits both the customer and the institution. ...

AI readiness: For years, AI in banking was at best a buzzword that encapsulated the standard: decision trees, models, rules. ... The next era of AI in banking will be completely different. It will be invisible. Embedded. Contextual. It will be built into the fabric of the experience, not just added on top. And while mobile apps as we know them will likely be around for a while, a fully GenAI-native banking experience is both possible and imminent. ...

In the age of AI, it’s tempting to see "intelligence" purely as technology. But the future of banking will depend just as much on human intelligence as on artificial intelligence. Blending the expertise, empathy, and judgment of institutions that understand financial context and complexity with the speed, prediction, and pattern recognition that uncover insights humans can’t see will create a new standard for banking, one where experiences feel both profoundly human and intelligently anticipatory.

Taking Control of Unstructured Data to Optimize Storage

The modern business preoccupation with collecting and retaining data has become something of a double-edged sword. On the plus side, it has fueled a transformational approach to how organizations are run. On the minus side, it’s rapidly becoming an enormous drain on resources and efficiency. The fact that 80-90% of this information is unstructured, i.e., spread across formats such as documents, images, videos, emails, and sensor outputs, only adds to the difficulty of organizing and controlling it. ...

To break this down, detailed metadata insight is essential for revealing how storage is actually being used. Information such as creation dates, last-accessed timestamps, and ownership highlights which data is active and requires performance storage, and which has aged out of use or no longer relates to current users. ...

So, how can this be achieved? At a fundamental level, storage optimization hinges on adopting a technology approach that manages data, not storage devices; simply adding more and more capacity is no longer viable. Instead, organizations must be able to work across heterogeneous storage environments, including multiple vendors, locations and clouds. Tools should support vendor-neutral management so data can be monitored and moved regardless of the underlying platform, and this has to work at petabyte scale. Optimization also relies on policy-based data mobility, which moves data according to defined rules such as age or inactivity, so that inactive or long-dormant data does not have to stay on performance storage.
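
The metadata-driven, policy-based approach described above can be sketched as a dry-run planner that reads only file metadata (last access and modification times, size) and assigns each file to a tier. A minimal Python sketch, assuming hypothetical tier names and 30/365-day thresholds; it reports what a policy would do rather than moving anything, and a petabyte-scale, multi-vendor estate would rely on a dedicated data management platform rather than a directory walk.

```python
import time
from dataclasses import dataclass
from pathlib import Path
from typing import Iterator, Optional

DAY = 86_400  # seconds


@dataclass
class FileInfo:
    path: str
    size: int
    last_access: float    # POSIX timestamp (st_atime)
    last_modified: float  # POSIX timestamp (st_mtime)


def tier_for(info: FileInfo, now: float,
             capacity_after_days: int = 30, archive_after_days: int = 365) -> str:
    """Assign a storage tier from inactivity. Tier names and thresholds are hypothetical."""
    # Use the most recent of access and modification time: access times can be
    # stale on volumes mounted with options such as noatime or relatime.
    idle_days = (now - max(info.last_access, info.last_modified)) / DAY
    if idle_days >= archive_after_days:
        return "archive"
    if idle_days >= capacity_after_days:
        return "capacity"
    return "performance"


def scan(root: str) -> Iterator[FileInfo]:
    """Yield metadata for every regular file under root (file contents are never read)."""
    for p in Path(root).rglob("*"):
        if p.is_file():
            st = p.stat()
            yield FileInfo(str(p), st.st_size, st.st_atime, st.st_mtime)


def plan_moves(root: str, now: Optional[float] = None) -> dict[str, int]:
    """Dry run: total bytes per tier that the policy would place there."""
    now = time.time() if now is None else now
    totals: dict[str, int] = {}
    for info in scan(root):
        tier = tier_for(info, now)
        totals[tier] = totals.get(tier, 0) + info.size
    return totals


now = 1_700_000_000.0
samples = [
    FileInfo("report.docx", 2_000, now - 2 * DAY, now - 2 * DAY),
    FileInfo("site-video.mp4", 900_000, now - 90 * DAY, now - 400 * DAY),
    FileInfo("sensor-2021.csv", 50_000, now - 500 * DAY, now - 600 * DAY),
]
print([tier_for(f, now) for f in samples])  # ['performance', 'capacity', 'archive']
```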

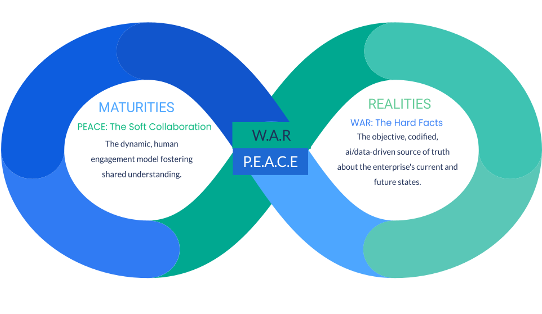

W.A.R & P.E.A.C.E: The Critical Battle for Organizational Harmony

W.A.R & P.E.A.C.E is the pivotal human lens within TRIAL, designed specifically to address this cultural challenge and shepherd the enterprise toward AARAM (Agentic AI Reinforced Architecture Maturities)² with what I term “speed 3” transformation of AI. ... The successful, continuous balancing of W.A.R. and P.E.A.C.E. is the biggest battle an Enterprise Architect must win. Just as Tolstoy explored the monumental scope of war against intimate moments of peace in his masterwork, the Enterprise Architect must balance the intense effort to build repositories against the delicate work of fostering organizational harmony. ...

The W.A.R. systematically organizes information across the four critical architectural domains defined in our previous article: Business, Information, Technology, and Security (BITS). The true power of W.A.R. lies in its ability to associate technical components with measurable business and financial properties, effectively transforming technical discussions into measurable, strategic imperatives. Each architectural component across BITS is tracked across the Plan, Design & Run lifecycle of change under the guardrails of BYTES. ...

Achieving effective P.E.A.C.E. mandates a carefully constructed collaborative environment where diverse organizational roles work together toward a shared objective. This requires alignment across all lifecycle stages using social capital and intelligence.
