How cloud computing surveys grossly underreport actual business adoption

Whatever the size of the IT department, all companies are having to fundamentally rethink their applications, with cloud-first increasingly a matter of survival. One example I am familiar with is that of a large enterprise that was trying to figure out how to rearchitect a massive application first conceived in the early 2000s. At the time it was first built, the enterprise had very different needs from today: thousands of users, gigabytes or terabytes of data, customers all sitting in the same region, and performance that was important but not all-consuming. This enterprise built on internal servers and focused on a scale-up model. That's all there was. Today, that same application has millions of users, distributed globally. The data volume is in the petabytes (and approaches exabytes). Latency must be measured in milliseconds and, in some cases, microseconds. There is no option but cloud. More applications today look like this than like the earlier incarnation of that application.

How AI will underpin cyber security in the next few years

Artificial intelligence (AI) is emerging as the frontrunner in the battle against cyber crime. With autonomous systems, businesses are in a far better place to strengthen and reinforce cyber security strategies. But does this technology pose challenges of its own? Large organisations are always exposed to cyber criminals, and so they need appropriate infrastructure to spot and combat threats quickly. James Maude, senior security engineer at endpoint security specialist Avecto, says systems incorporating AI could save firms billions in damage from attacks. “Although AI is still in its infancy, it’s no secret that it is becoming increasingly influential in cyber security,” he says. “In fact, AI is already transforming the industry, and we can expect to see a number of trends come to a head, reshaping how we think about security in years to come. We might expect to see AI applied to cyber security defences, potentially avoiding the damage from breaches costing billions.”

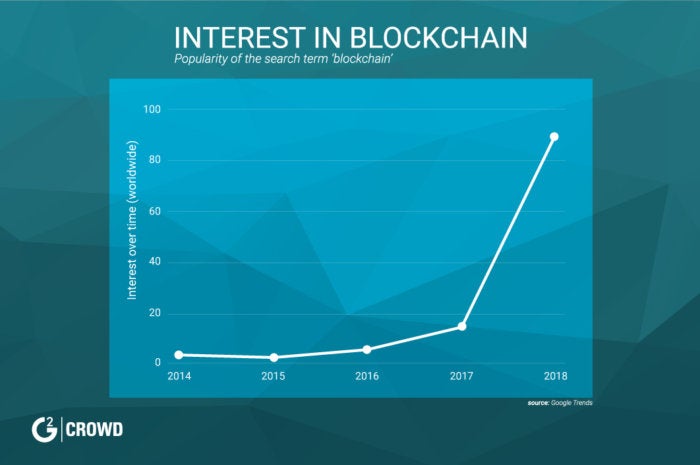

IBM sees blockchain as ready for government use

There is a growing concern that cryptocurrency could be a threat to the global financial system through unbridled speculation and unsecured borrowing by consumers looking to purchase the virtual money. ... "First and foremost, blockchain is changing the game. In today's digitally networked world, no single institution works in isolation. At the center of a blockchain is this notion of a shared immutable ledger. You see, members of a blockchain network each have an exact copy of the ledger," Cuomo said. "Therefore, all participants in an interaction have an up-to-date ledger that reflects the most recent transactions – and these transactions, once entered, cannot be changed on the ledger." For blockchain to fulfill its potential, it must be "open," Cuomo emphasized, and based on non-proprietary technology that will encourage widespread industry adoption by ensuring compatibility and interoperability.
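
The "shared immutable ledger" Cuomo describes can be illustrated with a minimal sketch. It assumes a simple hash-chained list of blocks, where each block commits to the hash of the one before it; the `Ledger` class and `block_hash` helper are hypothetical names for illustration, not any IBM or Hyperledger API, and the sketch leaves out consensus and replication between members entirely.

```python
import hashlib
import json


def block_hash(index, prev_hash, transactions):
    """Hash a block's contents deterministically with SHA-256."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class Ledger:
    """Append-only chain of blocks; each block commits to the previous hash."""

    def __init__(self):
        self.blocks = []

    def append(self, transactions):
        prev_hash = self.blocks[-1]["hash"] if self.blocks else "0" * 64
        index = len(self.blocks)
        self.blocks.append({
            "index": index,
            "prev_hash": prev_hash,
            "transactions": transactions,
            "hash": block_hash(index, prev_hash, transactions),
        })

    def is_valid(self):
        """Recompute every hash and check that the chain links up."""
        prev_hash = "0" * 64
        for block in self.blocks:
            expected = block_hash(block["index"], prev_hash, block["transactions"])
            if block["prev_hash"] != prev_hash or block["hash"] != expected:
                return False
            prev_hash = block["hash"]
        return True


ledger = Ledger()
ledger.append([{"from": "alice", "to": "bob", "amount": 5}])
ledger.append([{"from": "bob", "to": "carol", "amount": 2}])
print(ledger.is_valid())  # prints True

# Changing an already-recorded transaction breaks the stored hash.
ledger.blocks[0]["transactions"][0]["amount"] = 500
print(ledger.is_valid())  # prints False
```

In this toy model, "cannot be changed" really means "cannot be changed without detection": any participant holding a copy can recompute the hashes and notice the mismatch.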

7 threat modeling mistakes you’re probably making

The Open Web Application Security Project (OWASP) describes threat modeling as a structured approach for identifying, quantifying and addressing the security risks associated with an application. It essentially involves thinking strategically about threats when building or deploying a system so proper controls for preventing or mitigating threats can be implemented earlier in the application lifecycle. Threat modeling as a concept certainly isn't new, but few organizations have implemented it in a meaningful way. Best practices for threat models are still emerging, says Archie Agarwal, founder and CEO of ThreatModeler Software. "The biggest problem is a lack of understanding of what threat modeling is all about," he says. There are multiple ways to do threat modeling, and companies can often run into trouble figuring out how to look at it as a process and how to scale it. "There is still a lack of clarity around the whole thing."

Skype can't fix a nasty security bug without a massive code rewrite

Security researcher Stefan Kanthak found that the Skype update installer could be exploited with a DLL hijacking technique, which allows an attacker to trick an application into loading malicious code instead of the correct library. An attacker can download a malicious DLL into a user-accessible temporary folder and rename it to an existing DLL that can be modified by an unprivileged user, like UXTheme.dll. The bug works because the malicious DLL is found first when the app searches for the DLL it needs. Once installed, Skype uses its own built-in updater to keep the software up to date. When that updater runs, it uses another executable file to run the update, which is vulnerable to the hijacking. The attack reads on the clunky side, but Kanthak told ZDNet in an email that the attack could be easily weaponized. He explained, providing two command line examples, how a script or malware could remotely transfer a malicious DLL into that temporary folder.
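
Why "found first" matters can be shown with a toy model of a library search. This is a minimal sketch, assuming a loader that walks an ordered list of directories and takes the first match; `resolve_library`, the directory labels, and the in-memory `files` map are all hypothetical, and the sketch does not reproduce the real Windows search order or touch the file system.

```python
def resolve_library(name, search_dirs, files):
    """Return the first directory in search order that contains `name`.

    `files` maps a directory label to the set of file names inside it.
    Matching is case-insensitive, as Windows file names are.
    """
    wanted = name.lower()
    for directory in search_dirs:
        if wanted in {f.lower() for f in files.get(directory, ())}:
            return directory
    return None


# Hypothetical layout: the same file name exists in two places.
files = {
    "temp_dir": {"UXTheme.dll"},    # writable by an unprivileged user
    "system_dir": {"UXTheme.dll"},  # the legitimate copy
}

# If the writable folder is searched first, the planted copy wins.
print(resolve_library("UXTheme.dll", ["temp_dir", "system_dir"], files))  # prints temp_dir

# Searching the trusted location first resolves to the legitimate copy.
print(resolve_library("UXTheme.dll", ["system_dir", "temp_dir"], files))  # prints system_dir
```

The model suggests why such bugs are hard to patch narrowly: the weakness is in where the program looks and in what order, not in any one file.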

Cryptomining malware continues to drain enterprise CPU power

“Over the past three months cryptomining malware has steadily become an increasing threat to organizations, as criminals have found it to be a lucrative revenue stream,” said Maya Horowitz, Threat Intelligence Group Manager at Check Point. “It is particularly challenging to protect against, as it is often hidden in websites, enabling hackers to use unsuspecting victims to tap into the huge CPU resource that many enterprises have available. As such, it is critical that organizations have the solutions in place that protect against these stealthy cyber-attacks.” In addition to cryptominers, researchers also discovered that 21% of organizations have still failed to deal with machines infected with the Fireball malware. Fireball can be used as a full-functioning malware downloader capable of executing any code on victims’ machines. It was first discovered in May 2017 and severely impacted organizations during the summer of 2017.

Intel launches new Xeon processor aimed at edge computing

Edge computing is an important, if very early stage, development that seeks to put computing power closer to where the data originates, and it is seen as working hand in hand with Internet of Things (IoT) devices. IoT devices, such as smart cars and local sensors, generate tremendous amounts of data. A Hitachi report (pdf) estimated that smart cars would at some point generate 25GB of data every hour. This can’t all be sent back to data centers for processing. It would overload the networks and the data centers. Instead, edge computing processes the data at its origin. So, smart car data generated in New York would be processed in New York rather than sent to a remote data center. Major data center providers, such as Equinix and CoreSite, offer such services at their data centers around the country, and startup Vapor IO offers ruggedized mini data centers that can be deployed at the base of cell phone towers.
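
To put the 25GB-per-hour figure in network terms, here is a rough back-of-the-envelope calculation. It assumes decimal gigabytes and a perfectly steady data rate, and the fleet size of 100,000 cars is a made-up number for illustration, not something from the Hitachi report.

```python
GB_PER_HOUR = 25            # per-car figure quoted above
BITS_PER_GB = 8 * 10**9     # assumption: decimal gigabytes
SECONDS_PER_HOUR = 3600


def sustained_mbps(gb_per_hour):
    """Average uplink rate, in Mbit/s, needed to ship the data as it is produced."""
    return gb_per_hour * BITS_PER_GB / SECONDS_PER_HOUR / 10**6


def fleet_gbps(cars, gb_per_hour=GB_PER_HOUR):
    """Aggregate rate, in Gbit/s, for a fleet sending everything to a data center."""
    return cars * sustained_mbps(gb_per_hour) / 1000


print(f"{sustained_mbps(GB_PER_HOUR):.1f} Mbit/s per car")
print(f"{fleet_gbps(100_000):.0f} Gbit/s for a hypothetical fleet of 100,000 cars")
```

Under these assumptions a single car needs roughly 56 Mbit/s of sustained uplink, and the hypothetical fleet needs several terabits per second, which is the scale of load the excerpt says would overwhelm networks and data centers.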

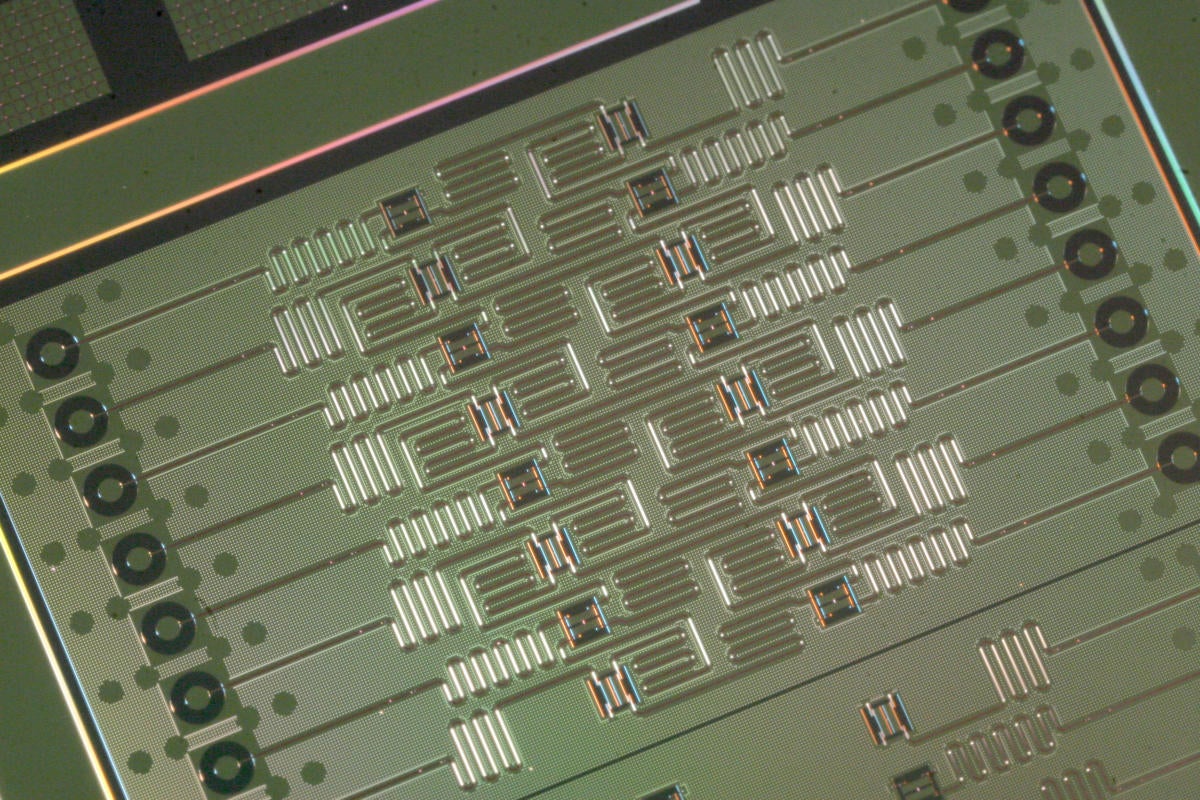

Q# language: How to write quantum code in Visual Studio

Designed to use familiar constructs to help program applications that interact with qubits, Q# takes a similar approach to working with coprocessors, providing libraries that handle the actual quantum programming and interpretation, so you can write code that hands qubit operations over to one of Microsoft’s quantum computers. Bridging the classical and quantum computing worlds isn’t easy, so don’t expect Q# to be like Visual Basic. It is more like using a set of Fortran mathematics libraries, with the same underlying assumption: that you understand the theory behind what you’re doing. One element of the Quantum Development Kit is a quantum computing primer, which explores issues around using simulators, as well as providing a primer in linear algebra. If you’re going to be programming in Q#, an understanding of key linear algebra concepts around vectors and matrices is essential, especially eigenvalues and eigenvectors, which are key elements of many quantum algorithms.
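
For readers new to the linear algebra mentioned above, the following sketch checks the eigenvector condition A·v = λ·v for one small matrix. The choice of the Pauli-X matrix (the quantum NOT gate) is ours, not the article's, and the example is plain Python rather than Q#; `mat_vec` and `is_eigenvector` are hypothetical helper names.

```python
import math


def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def is_eigenvector(matrix, vector, eigenvalue, tol=1e-12):
    """Check A·v == λ·v component by component, within a small tolerance."""
    result = mat_vec(matrix, vector)
    return all(abs(r - eigenvalue * v) <= tol for r, v in zip(result, vector))


PAULI_X = [[0, 1],
           [1, 0]]

s = 1 / math.sqrt(2)
plus = [s, s]     # equal superposition with the same sign
minus = [s, -s]   # equal superposition with opposite signs

print(is_eigenvector(PAULI_X, plus, 1))     # prints True
print(is_eigenvector(PAULI_X, minus, -1))   # prints True
print(is_eigenvector(PAULI_X, [1, 0], 1))   # prints False
```

The last line shows that the basis state `[1, 0]` is not an eigenvector of this matrix: applying X flips it to `[0, 1]` rather than scaling it.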

Breaking the cycle of data security threats

First, there’s the lack of mandatory reporting and the limits of voluntary reporting. Second, the lack of real protection for the personal information we’ve entrusted to various companies. Third, the clear indication that CEOs and corporations still aren’t paying enough attention to cybersecurity issues; perhaps because there’s been a startling lack of real penalty for failing to protect information from hackers. Finally, there’s a need to recognize that securing information is hard work on an ongoing basis. It’s a truism of security that no product is a “silver bullet” to put an end to attacks. Another industry truism says security is a journey, not a destination. There are few regulations that require organizations to report data breaches, especially those outside financial services and health care. Is it any surprise that companies are reporting breaches years after they occurred? How many unreported breaches will never surface?

The Top Five Data Governance Use Cases and Drivers

As the applications for data have grown, so too have the data governance use cases. And the legacy, IT-only approach to data governance, Data Governance 1.0, has made way for the collaborative, enterprise-wide Data Governance 2.0. In addition to increasing data applications, Data Governance 1.0’s decline is being hastened by recurrent failings in its implementation. Leaving it to IT, with no input from the wider business, ignores the desired business outcomes and the opportunities to contribute to and speed their accomplishment. Lack of input from the departments that use the data also causes data quality and completeness to suffer. So Data Governance 1.0 was destined to fail in yielding a significant return. But changing regulatory requirements and mega-disruptors effectively leveraging data have spawned new interest in making data governance work.

Quote for the day:

"Technological change is not additive; it's ecological. A new technology does not merely add something; it changes everything." -- Neil Postman
