How data scientists can improve their careers in 2018

Many data scientists emerged from the world of business intelligence and data warehousing; in the 1990s, we were doing what many data scientists are, at least in part, doing today. As skilled and knowledgeable as we were about data warehousing, I doubt many people doing that work knew anything about artificial intelligence. If this sounds familiar, take time in 2018 to master machine learning, neural networks, genetic algorithms, expert systems, and all the wonderful techniques that will eventually teach computers how to take over the world. Conversely, a number of data scientists entered the profession from the world of artificial intelligence and/or advanced mathematics; it seemed a logical progression. These professionals felt they had the hard part figured out, and that it was now only a matter of learning about databases. The reality is that becoming a data professional is not as easy as it looks. So, when faced with the frustrations of long-running queries and outer joins gone wild, many of them retreat to their comfort zone of Bayesian data analysis and stochastic calculus.

Building a CI System With Java 9 Modules and Vert.x Microservices

Developers familiar with JavaScript can probably recall the single-threaded event loop that delivers events to registered handlers as they arrive. Vert.x takes a related approach, the multi-reactor pattern: to overcome the inherent limitation of a single-threaded event loop, it runs multiple event loops, sized according to the number of available cores on a given server. Vert.x also comes with an opinionated concurrency model loosely based on the actor model, in which “actors” receive and respond to messages and communicate with one another by sending messages. In the Vert.x world, actors are called “verticles”, and they typically communicate with each other by sending JSON messages over an event bus. We can even specify how many instances of each verticle Vert.x should deploy. The event bus is designed so that it can form a cluster using a variety of plug-and-play cluster managers such as Hazelcast and ZooKeeper.
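Vert.x itself is a Java toolkit, and the sketch below does not use its API. It is a minimal, hypothetical Python model of the ideas in that paragraph, assuming a fixed pool of single-threaded loops sized from the core count, "verticle" instances each pinned to one loop, and an event bus that delivers JSON messages round-robin to the instances registered at an address. `EventLoop`, `EventBus`, `deploy`, `send`, `make_build_verticle` and the `ci.build` address are illustrative names only.

```python
import json
import os
import queue
import threading
from itertools import cycle


class EventLoop:
    """One single-threaded loop: queued callbacks run one at a time, in arrival order."""

    def __init__(self, name):
        self._tasks = queue.Queue()
        self._thread = threading.Thread(target=self._run, name=name, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            task = self._tasks.get()
            if task is None:  # sentinel: shut this loop down
                return
            task()

    def submit(self, task):
        self._tasks.put(task)

    def stop(self):
        """Finish everything already queued, then stop the thread."""
        self._tasks.put(None)
        self._thread.join()


class EventBus:
    """Toy point-to-point bus: each message goes to one instance at an address, round-robin.

    Not thread-safe: deploy() and send() are meant to be called from one thread here.
    """

    def __init__(self, loops):
        self._next_loop = cycle(loops)  # spread deployed instances across the loops
        self._instances = {}            # address -> [(loop, handler), ...]
        self._cursors = {}              # address -> round-robin iterator over instances

    def deploy(self, address, make_handler, instances=1):
        # Each "verticle" instance is pinned to one loop, so its handler never
        # runs on two threads at once and needs no locking of its own state.
        deployed = self._instances.setdefault(address, [])
        for _ in range(instances):
            deployed.append((next(self._next_loop), make_handler()))
        self._cursors[address] = cycle(deployed)

    def send(self, address, payload):
        body = json.dumps(payload)  # in this sketch, messages travel as JSON text
        loop, handler = next(self._cursors[address])
        loop.submit(lambda: handler(json.loads(body)))


def make_build_verticle(log):
    """Hypothetical CI 'build' verticle: records each job and the loop that ran it."""
    handled = []

    def handle(message):
        handled.append(message["job"])
        log.append((threading.current_thread().name, message["job"]))

    handle.handled = handled
    return handle


if __name__ == "__main__":
    # Size the loop pool from the core count, echoing the multi-reactor idea.
    loops = [EventLoop(f"loop-{i}") for i in range(os.cpu_count() or 1)]
    bus = EventBus(loops)
    log = []
    bus.deploy("ci.build", lambda: make_build_verticle(log), instances=4)
    for job in range(8):
        bus.send("ci.build", {"job": job})
    for loop in loops:
        loop.stop()
    print(sorted(job for _, job in log))
```

Because each instance only ever runs on its own loop, its state can be touched without locks; to use more cores you deploy more instances rather than share one instance across threads, which is the same reason standard Vert.x verticles can usually be written without explicit synchronization.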

AI Enhanced Smart Homes Reduce Power Grid Demands

Power companies currently charge higher peak-usage rates for drawing power from the grid at times when demand is high. Solar panels have become increasingly popular – thanks in no small part to the many government grants available to homeowners. While solar panels help reduce demand on the grid, it’s unlikely that a single rooftop solar installation can provide all the power a home needs, so power is still drawn from the grid, especially at night when the sun goes down. Batteries can be installed at a home to store excess energy created by solar panels during low-usage times. The beauty of this is that instead of tapping into the grid while peak-usage rates are in effect, an AI-powered grid could do the calculation – taking into account up-to-the-minute power costs – and deploy reserve power from the batteries. In fact, AI could go so far as to perform calculations constantly to decide whether it makes more sense to consume cheap power from the grid while redirecting all of the solar power to the battery packs.
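The decision described above, made interval by interval, can be sketched with a single price threshold standing in for the "AI" (a real system would presumably forecast prices, solar output and demand). This is a minimal illustration under stated assumptions: `plan_interval`, `Dispatch` and all parameters are hypothetical names, and the model ignores battery charge/discharge rate limits, conversion losses and selling power back to the grid.

```python
from dataclasses import dataclass


@dataclass
class Dispatch:
    grid_kw: float              # drawn from the grid
    battery_out_kw: float       # discharged from the battery to the home
    solar_to_home_kw: float     # solar used directly by the home
    solar_to_battery_kw: float  # solar banked in the battery


def plan_interval(price, peak_price, solar_kw, demand_kw,
                  stored_kwh, capacity_kwh, interval_h=0.25):
    """Hypothetical rule-based plan for one interval (default 15 minutes).

    Assumes no rate limits, no losses and no export; any solar that neither
    the home nor the battery can take is simply left unused.
    """
    charge_room_kw = (capacity_kwh - stored_kwh) / interval_h  # fastest charge that still fits
    discharge_kw = stored_kwh / interval_h                     # fastest discharge the stored energy allows

    if price >= peak_price:
        # Peak rates: serve the home from solar, then the battery; buy only the shortfall.
        solar_to_home = min(solar_kw, demand_kw)
        battery_out = min(discharge_kw, demand_kw - solar_to_home)
        grid = demand_kw - solar_to_home - battery_out
        solar_to_battery = min(solar_kw - solar_to_home, charge_room_kw)
    else:
        # Cheap rates: bank as much solar as fits and let the grid carry the home.
        solar_to_battery = min(solar_kw, charge_room_kw)
        solar_to_home = min(solar_kw - solar_to_battery, demand_kw)
        battery_out = 0.0
        grid = demand_kw - solar_to_home
    return Dispatch(grid, battery_out, solar_to_home, solar_to_battery)
```

For example, at a peak price with 2 kW of solar, 5 kW of demand and 2 kWh stored, `plan_interval(0.40, 0.30, 2.0, 5.0, 2.0, 10.0)` covers the remaining 3 kW from the battery and draws nothing from the grid.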

Back to Basics: AI Isn't the Answer to What Ails Us in Cyber

AI has a great PR machine behind it and may hold good long-term potential, but it's not the answer to what ails us in cyber. In fact, I'd put AI in the same camp as advanced persistent threats (APTs): sophisticated cyberattacks, usually orchestrated by state-sponsored hackers, that often go undetected for long periods of time (think Stuxnet). Both are really intriguing, but in their own ways they're existential distractions from the necessary work at hand. At the crux of just about every high-profile breach and compromise, from Yahoo to Equifax, sits a lack of foundational cyber hygiene. Those breaches weren't about failing to use some super-expensive, bleeding-edge, difficult-to-deploy and unproven mousetrap. In cyber, what differentiates the leaders from the laggards isn't spending millions and millions of dollars on sexy bells-and-whistles interfaces. It's about organizations setting a culture in which security matters.

What’s needed to unlock the real power of blockchain and distributed apps

It’s a great irony that, right at the moment everyone is talking about unlocking parallelization and writing multi-threaded, hyper-efficient code, we suddenly have to figure out how to write efficient single-threaded code again. This goes back to the distributed nature of blockchain’s architecture and the consensus mechanisms that verify activity on the blockchain. In this environment, the massively redundant parallel execution that comes from every node on the network computing every transaction makes compute extremely expensive. In other words, there is very little excess compute power available to the network, which makes it an exceptionally scarce resource. It’s a fascinating challenge. Programmers today are used to having cheap and virtually unlimited processing power. Not so with blockchain. Today, we’re seeing a great deal of effort go into relearning how to write extremely efficient software. But efficient code will only get us so far. For blockchain to gain widespread adoption, processing power will need to get much cheaper.
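A back-of-the-envelope way to see why, assuming every one of N full nodes independently re-executes each of T transactions and each execution costs c units of compute (N, T and c are illustrative symbols, not figures from the original):

```latex
W_{\text{network}} = N \cdot T \cdot c,
\qquad
W_{\text{useful}} = T \cdot c,
\qquad
\frac{W_{\text{network}}}{W_{\text{useful}}} = N
```

Under that assumption, adding nodes multiplies the total compute spent without increasing the number of transactions processed, which is why the efficiency of each individual execution matters so much.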

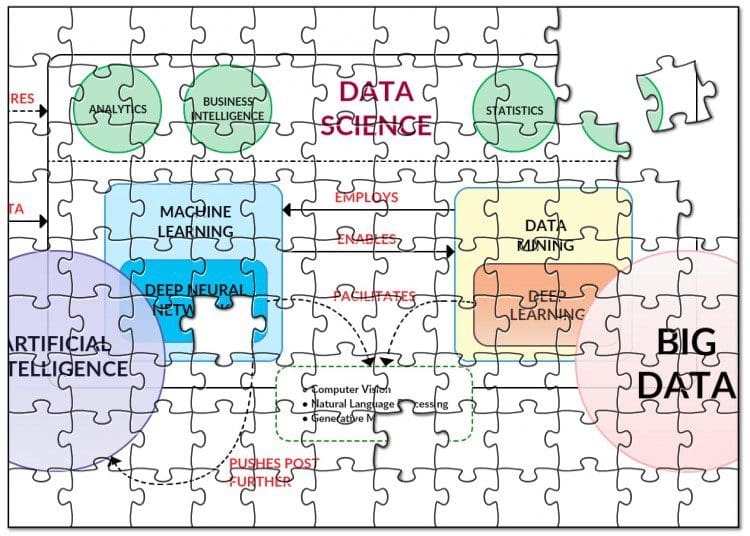

The Data Science Puzzle, Revisited

Though machine learning, artificial intelligence, deep learning, computer vision and natural language processing (along with a variety of other applications of these "intelligent" technologies) are all separate and distinct fields and application domains, even practitioners and researchers have to admit that some continually evolving "concept creep" is going on these days, beyond the regular ol' confusion and confounding that has always taken place. And that's OK: these fields all started out as niche sub-disciplines of other fields (computer science, statistics, linguistics, etc.), so their constant evolution should be expected. While it is important on some level to ensure that everyone who should have a basic understanding of their differences does indeed have it, when it comes to their application in fields such as data science, I would humbly submit that getting too far into the semantic weeds doesn't provide practitioners with much long-term benefit.

6 machine learning success stories: An inside look

Ed McLaughlin, president of operations and technology at Mastercard, says ML “pervades everything that we do.” Mastercard is using ML to automate what he calls “toil,” or repetitive and manual tasks, freeing up humans to perform work that adds productivity and value. “It's clear we've reached a state of the art where there is a clear investment case to automate workplace tasks,” McLaughlin says. Mastercard is also using ML tools to augment change management throughout its product and service ecosystem. For example, ML tools help determine which changes are the most risk-free and which require additional scrutiny. Finally, Mastercard is using ML to detect anomalies in its system that suggest hackers are trying to gain access. McLaughlin also put a “safety net” in the network; when it finds suspicious behavior it trips circuit breakers that protect the network. “We have fraud-scoring systems constantly looking at transactions to update it and score the next transaction that's going in,” he says.
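The article doesn't describe how Mastercard's detectors or circuit breakers actually work. As a hedged illustration of the general idea only, the sketch below pairs a very simple anomaly test (a z-score against a sliding window of recent values, a statistical stand-in rather than machine learning) with a breaker that opens when a value looks suspicious and stays open until reset. `AnomalyCircuitBreaker`, its parameters and the failed-logins metric are hypothetical.

```python
import statistics
from collections import deque


class AnomalyCircuitBreaker:
    """Hypothetical sketch: open a breaker when a metric jumps far outside its recent range.

    The anomaly test is a plain z-score against a sliding window, a statistical
    stand-in for the ML models described in the text, not Mastercard's system.
    """

    def __init__(self, window=50, threshold=4.0, min_history=10):
        self.history = deque(maxlen=window)  # recent "normal" observations only
        self.threshold = threshold           # standard deviations that count as suspicious
        self.min_history = min_history       # don't judge until there is a baseline
        self.open = False                    # open breaker = traffic is being blocked

    def allow(self, value):
        """Record one observation (e.g. failed logins per minute); return False to block."""
        if self.open:
            return False
        if len(self.history) >= self.min_history:
            mean = statistics.mean(self.history)
            spread = statistics.pstdev(self.history) or 1e-9  # avoid dividing by zero on a flat baseline
            if abs(value - mean) / spread > self.threshold:
                self.open = True  # trip: keep blocking until someone resets it
                return False
        self.history.append(value)  # only values that pass the test update the baseline
        return True

    def reset(self):
        """Close the breaker again, e.g. after an analyst has reviewed the alert."""
        self.open = False
```

Only values that pass the test are added to the baseline, so a single spike cannot drag the average toward itself; once tripped, the breaker keeps blocking until `reset()` is called.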

Innovation Isn't About What You Know, But What You Don't

When Steve Jobs first came up with the idea for the iPod, it wasn't actually a machine he had in mind, but "a thousand songs in my pocket." At the time, it was an impossible idea, because hard drives of that capacity and size just didn't exist. In fairly short order, though, the technology caught up to the vision. That kind of singular focus and drive helps explain Jobs' incredible success, but what about his failures? The Lisa, a precursor to the Macintosh, flopped. So did his first venture after Apple, NeXT Computer. Even at the height of Apple's dominance, there were failures such as iAd, and Apple TV still hasn't really gained traction. "It's not what you don't know that kills you," Mark Twain famously said, "it's what you know for sure that ain't true." That's the real innovator's dilemma. Innovation is necessarily about the future, but all we can really know is the past and some of the present. Innovation is always a balancing act between staying true to your vision and re-examining your assumptions.

Does artificial intelligence have a language problem?

If AI were truly intelligent, it should have equal potential in all these areas, but we instinctively know machines would be better at some than at others. Even when technological progress appears to be made, the language can mask what is actually happening. In the field of affective computing, where machines can both recognise and reflect human emotions, the machine processing of emotions is entirely different both from the biological process in people and from the interpersonal emotional intelligence categorised by Gardner. So, having established that the term “intelligence” can be somewhat problematic in describing what machines can and can’t do, let’s now focus on machine learning, the domain within AI that offers the greatest attraction and benefits to businesses today. The idea of learning itself is somewhat loaded: for many, it conjures mental images of our school days and experiences in education.

The Kubernetes Effect

Containers and orchestrators provide a new set of abstractions and primitives. To get the best value out of these new primitives and balance their forces, we need a new set of design principles to guide us. Moreover, the more we use these new primitives, the more we find ourselves solving recurring problems and reinventing the wheel. This is where patterns come into play. Design patterns give us recipes for structuring the new primitives to solve recurring problems faster. While principles are more abstract, more fundamental and change less often, patterns may be affected by a change in a primitive's behaviour: a new platform feature may turn a pattern into an anti-pattern or make it less relevant. Then there are the practices and techniques we use daily, ranging from very small technical tricks for performing a task more efficiently to more extensive ways of working.

Quote for the day:

"Keep your face always toward the sunshine - and shadows will fall behind you." -- Walt Whitman
