IT jobs in 2020: Preparing for the next industrial revolution

As IT evolves in the direction of more cloud adoption, more automation, and more artificial intelligence (AI), machine learning (ML) and analytics, it's clear that the IT jobs landscape will change too. For example, tomorrow's CIO is likely to become more of a broker and orchestrator of cloud services, juggling the strategic concerns of the C-suite with more tactical demands from business units, and less of an overseer of enterprise applications in on-premises data centres. Meanwhile, IT staff are likely to spend more time in DevOps teams, integrating multiple cloud services and residual on-premises applications, and enforcing cyber-security, and less time tending racks of servers running siloed client-server apps, or deploying and supporting endpoint devices. Of course, some traditional IT jobs and tasks will remain, because revolutions don't happen overnight and there will be good reasons for keeping some workloads running in on-premises data centres. But there's no doubt which way the IT wind is blowing, across businesses of all sizes.

Why 2018 Will be The Year of AI

Everything is becoming connected through our devices: you can start a project on a desktop PC and then finish it on a connected smartphone or tablet. Ray Kurzweil believes that eventually, humans will be able to use sensors that connect our brains to the cloud. The internet originally connected computers and has since advanced to connecting our mobile devices; sensors that already link buildings, homes and even our clothes to the internet could, in the near future, be used to connect our minds to the cloud. Another factor in AI becoming more advanced is that computing is becoming cheaper to use. Previously, it was costly to bring out new chips every eighteen months at twice the speed; however, Marc Andreessen claims that new chips now come out at the same speed but at only half the cost.

Artificial intelligence isn’t as clever as we think, but that doesn’t stop it being a threat


Even though our most advanced AI systems are dumber than a rat (so says Facebook’s head of AI, Yann LeCun), it won’t stop them from having a huge effect on our lives, especially in the world of work. Earlier this week, a study published by consultancy firm McKinsey suggested that as many as 800 million jobs around the world could be under threat from automation in the next 12 years. But the study’s authors clarify that only 6 percent of the most rote and repetitive jobs are in danger of being automated entirely. ... If a computer can do one-third of your job, what happens next? Do you get trained to take on new tasks, or does your boss fire you, or some of your colleagues? What if you just get a pay cut instead? Do you have the money to retrain, or will you be forced to take the hit in living standards?

Fintech is facing a short, game-changing window of opportunity

Regulation was a major competitive barrier that helped fintech startups. Now that those regulations are (nearly) out the door, big banks will enter lucrative markets more aggressively. Customer acquisition costs will rise because there will be more bidders for the same consumers, plus large financial institutions have deeper pockets and an ability to amortize costs across multiple business lines. This will make it much harder for startups, no matter how innovative, to compete head-on without the CFPB’s regulatory cover. Partnerships provide a great path to success given the scale, brand recognition and resources that financial institutions bring to the table. Of course, partnering with big banks can be risky. Sales cycles are long, bureaucracy is high, and the risk looms large that banks could ultimately take these innovations in-house. Even if their products are only 50 percent as good, the well-resourced, branded players will almost always win.

Here’s How Blockchain can Make Spam and DDoS Attacks a Thing of the Past

A startup called Cryptamail is launching an email network that has no centralized servers. By using a pure blockchain approach to sending and receiving emails, the system is not susceptible to attacks on any single node on the blockchain. This also means that messages cannot be read without the private key – which makes Cryptamail more secure than regular email, wherein there is a risk of compromise if an attacker is able to access the email servers somehow. One key benefit here is that through blockchains, nodes get to earn tokens for participating in the network – this is how Bitcoin miners have been getting their reward, after all. And with tokenization, there is some cost for sending messages across, which can discourage spammers. Meanwhile, users can get compensated fairly for receiving legitimate marketing material that they may like.

In the future of education, AI must go hand-in-hand with Emotional Intelligence

People often overlook the fact that technology is only as good as its creator. Unless there is a tutor to inspire students, help them through their struggles, and adopt teaching methods suited to their needs and abilities, students won’t be able to succeed in their career paths. Technology can only lend a helping hand in learning; it cannot replace the immense knowledge and experience that comes with a teacher. Therefore, to achieve good scores or results, a blend of traditional learning and digital tools is necessary to create an environment for learners that is informative, immersive, interesting and flexible. Hello, emotional intelligence! While chatbots powered by Artificial Intelligence can deliver the best of content to many students simultaneously by analyzing large amounts of structured and unstructured data, they completely lack emotional involvement.

Machine Learning with Optimus on Apache Spark

The way most Machine Learning models work on Spark is not straightforward, and they need lots of feature engineering to work. That’s why we created the feature engineering section inside the Optimus Data Frame Transformer. Machine Learning is one of the last steps, and the goal, of most Data Science workflows. Some years ago the Apache Spark team created a library called MLlib, where they coded great algorithms for Machine Learning. Now with the ML library we can take advantage of the DataFrame API and its optimizations to easily create Machine Learning pipelines. Even though this task is not extremely hard, it is not easy.

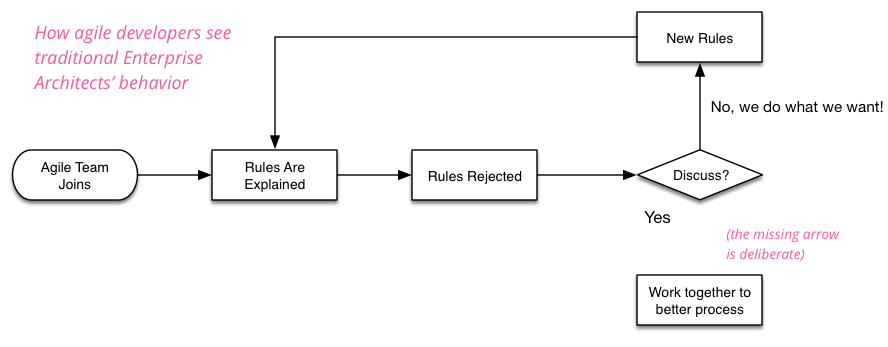

The Death Of Enterprise Architecture: Defeating The DevOps, Microservices ...

Current application theory says that all responsibility for software should be pushed down to the actual DevOps-style team writing, delivering, and running the software. This leaves the Enterprise Architect role in the dust, effectively killing it off. In addition to this being disquieting to Enterprise Architects out there who have steep mortgage payments and other expensive hobbies, it seems to drop the original benefits of enterprise architecture, namely oversight of all IT-related activities to make sure things both don't go wrong (e.g., with spending, poor tech choices, problematic integration, etc.) and that things, rather, go right. Michael has spoken with several Enterprise Architecture teams on the changing nature of how Enterprise Architecture helps in a DevOps- and cloud-native-driven culture. He will share their experiences, including what type of Enterprise Architecture is actually needed.

The Role of an Enterprise Architect in a Lean Enterprise

Being able to describe both the current state and future state architectures is essential to bringing projects in line. Start by assessing the current portfolio. Map out what systems exist and what they do. This does not need to be deeply detailed or call out individual servers. Instead, focus on applications and products and how they relate. Multiple layers may be required. If the enterprise is big enough, break the problem down into functional areas and map them out individually. If there is an underlying architectural pattern or strategy, identify it and where it has and has not been followed. For example, if the enterprise strategy is a Service Oriented Architecture, which applications work around it and access the master data directly? Where are applications communicating via a common database? Once you have the current state mapped out, think about what you would like the architecture to look like in the future.
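As a rough illustration of the mapping exercise described above, the current-state portfolio can be captured as plain data and queried for the two questions raised: which applications bypass the service layer to reach master data directly, and which applications communicate via a common database. This is only a minimal sketch; the application names, database names, and the `Application` structure are hypothetical and do not come from the article.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    """One entry in a hypothetical current-state portfolio map."""
    name: str
    area: str                                  # functional area, e.g. "finance"
    uses_services: frozenset = frozenset()     # services the app calls
    direct_databases: frozenset = frozenset()  # databases the app reads/writes directly


def shared_databases(apps):
    """Return databases accessed directly by more than one application."""
    users = defaultdict(set)
    for app in apps:
        for db in app.direct_databases:
            users[db].add(app.name)
    return {db: sorted(names) for db, names in users.items() if len(names) > 1}


def bypasses_services(apps, master_data_dbs):
    """Return applications that touch master data directly instead of via a service."""
    return sorted(app.name for app in apps if app.direct_databases & master_data_dbs)


# Hypothetical portfolio, for illustration only.
PORTFOLIO = [
    Application("billing", "finance",
                frozenset({"customer-service"}), frozenset({"billing_db"})),
    Application("crm", "sales",
                frozenset(), frozenset({"customer_master"})),
    Application("reporting", "finance",
                frozenset(), frozenset({"billing_db", "customer_master"})),
]

if __name__ == "__main__":
    print(shared_databases(PORTFOLIO))
    print(bypasses_services(PORTFOLIO, {"customer_master"}))
```

Keeping the map at the level of applications and their relationships, rather than individual servers, matches the level of detail the text recommends; larger enterprises could hold one such list per functional area.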

Digital Trust: Enterprise Architecture and the Farm Analogy

In describing best practices for handling data, let’s imagine data as an asset on a farm. The typical farm’s wide span makes constant surveillance impossible, similar in principle to data security. With a farm, you can’t just put a fence around the perimeter and then leave it alone. The same is true of data because you need a security approach that makes dealing with volume and variety easier. On a farm, that means separating crops and different types of animals. For data, segregation serves to stop those without permissions from accessing sensitive information. And as with a farm and its seeds, livestock and other assets, data doesn’t just come in to the farm. You also must manage what goes out. A farm has several gates allowing people, animals and equipment to pass through, pending approval. With data, gates need to make sure only the intended information filters out and that it is secure when doing so.

Quote for the day:

"Not all readers are leaders, but all leaders are readers." -- Harry S. Truman
